| problem_id (string, lengths 18 to 22) | source (string, 1 value) | task_type (string, 1 value) | in_source_id (string, lengths 13 to 58) | prompt (string, lengths 1.71k to 18.9k) | golden_diff (string, lengths 145 to 5.13k) | verification_info (string, lengths 465 to 23.6k) | num_tokens_prompt (int64, 556 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

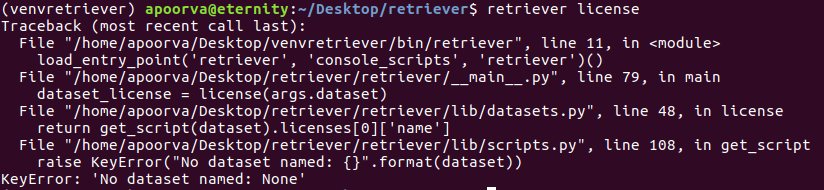

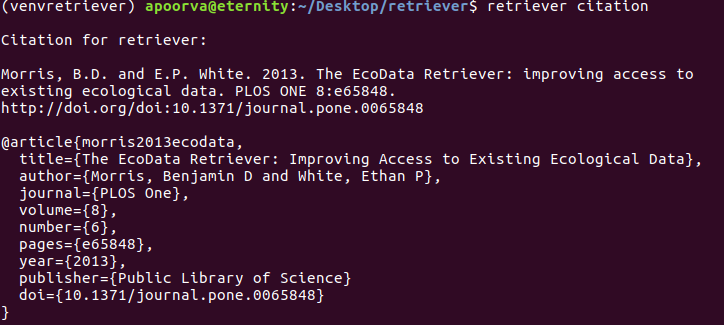

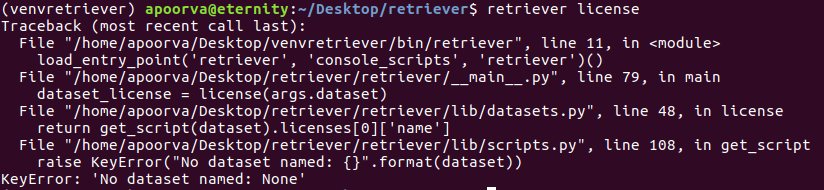

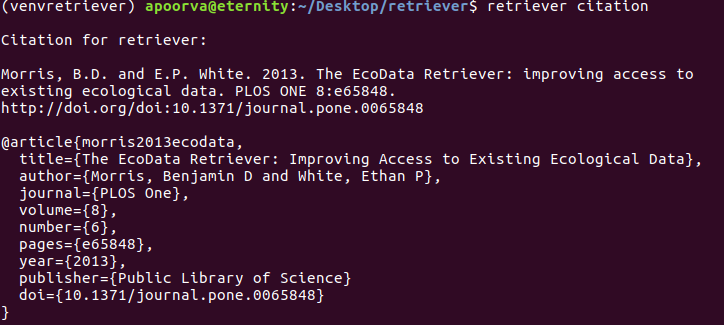

gh_patches_debug_20175 | rasdani/github-patches | git_diff | bridgecrewio__checkov-3750 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

CKV_AZURE_9 & CKV_AZURE_10 - Scan fails if protocol value is a wildcard

**Describe the issue**

CKV_AZURE_9 & CKV_AZURE_10

When scanning Bicep files the checks are looking for a protocol value of `tcp` and fail to catch when `*` is used.

**Examples**

The following bicep code fails to produce a finding for CKV_AZURE_9 & CKV_AZURE_10

```
resource nsg 'Microsoft.Network/networkSecurityGroups@2021-05-01' = {
  name: nsgName
  location: nsgLocation
  properties: {
    securityRules: [
      {
        name: 'badrule'
        properties: {
          access: 'Allow'
          destinationAddressPrefix: '*'
          destinationPortRange: '*'
          direction: 'Inbound'
          priority: 100
          protocol: '*'
          sourceAddressPrefix: '*'
          sourcePortRange: '*'
        }
      }
    ]
  }
}
```

While this works as expected:

```
resource nsg 'Microsoft.Network/networkSecurityGroups@2021-05-01' = {
  name: nsgName
  location: nsgLocation
  properties: {
    securityRules: [
      {
        name: 'badrule'
        properties: {
          access: 'Allow'
          destinationAddressPrefix: '*'
          destinationPortRange: '*'
          direction: 'Inbound'
          priority: 100
          protocol: 'tcp'
          sourceAddressPrefix: '*'
          sourcePortRange: '*'
        }
      }
    ]
  }
}
```

**Version (please complete the following information):**

- docker container 2.2.0

**Additional context**

A similar problem existed for Terraform that was previously fixed (see https://github.com/bridgecrewio/checkov/issues/601)

I believe the relevant lines is:

https://github.com/bridgecrewio/checkov/blob/master/checkov/arm/checks/resource/NSGRulePortAccessRestricted.py#LL48C4-L48C117

</issue>
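
The behaviour requested in the issue above can be sketched in a few lines. This is only an illustration, not checkov's actual code: the helper name `protocol_covers_tcp` and the sample `rule_properties` dictionary are hypothetical. It assumes that a `*` protocol on an NSG rule matches every protocol and therefore also covers TCP.

```python
# Hypothetical helper for illustration; it is not part of checkov.
def protocol_covers_tcp(protocol: str) -> bool:
    """Return True when the rule's protocol value applies to TCP traffic."""
    # "*" is treated as matching every protocol, so it covers TCP as well.
    return protocol.lower() in ("tcp", "*")


# Sample rule properties modelled on the Bicep snippet in the issue.
rule_properties = {"access": "Allow", "direction": "Inbound", "protocol": "*"}
print(protocol_covers_tcp(rule_properties["protocol"]))
```

A membership test keeps the condition on one line and makes it easy to add further values later, if that ever turns out to be needed.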

<code>

[start of checkov/arm/checks/resource/NSGRulePortAccessRestricted.py]

1 import re
2 from typing import Union, Dict, Any
3
4 from checkov.common.models.enums import CheckResult, CheckCategories
5 from checkov.arm.base_resource_check import BaseResourceCheck
6
7 # https://docs.microsoft.com/en-us/azure/templates/microsoft.network/networksecuritygroups
8 # https://docs.microsoft.com/en-us/azure/templates/microsoft.network/networksecuritygroups/securityrules
9
10 INTERNET_ADDRESSES = ["*", "0.0.0.0", "<nw>/0", "/0", "internet", "any"] # nosec
11 PORT_RANGE = re.compile(r"\d+-\d+")
12
13
14 class NSGRulePortAccessRestricted(BaseResourceCheck):
15     def __init__(self, name: str, check_id: str, port: int) -> None:
16         supported_resources = (
17             "Microsoft.Network/networkSecurityGroups",
18             "Microsoft.Network/networkSecurityGroups/securityRules",
19         )
20         categories = (CheckCategories.NETWORKING,)
21         super().__init__(name=name, id=check_id, categories=categories, supported_resources=supported_resources)
22         self.port = port
23
24     def is_port_in_range(self, port_range: Union[int, str]) -> bool:
25         if re.match(PORT_RANGE, str(port_range)):
26             start, end = int(port_range.split("-")[0]), int(port_range.split("-")[1])
27             if start <= self.port <= end:
28                 return True
29         if port_range in (str(self.port), "*"):
30             return True
31         return False
32
33     def scan_resource_conf(self, conf: Dict[str, Any]) -> CheckResult:
34         if "properties" in conf:
35             securityRules = []
36             if self.entity_type == "Microsoft.Network/networkSecurityGroups":
37                 if "securityRules" in conf["properties"]:
38                     securityRules.extend(conf["properties"]["securityRules"])
39             if self.entity_type == "Microsoft.Network/networkSecurityGroups/securityRules":
40                 securityRules.append(conf)
41
42             for rule in securityRules:
43                 portRanges = []
44                 sourcePrefixes = []
45                 if "properties" in rule:
46                     if "access" in rule["properties"] and rule["properties"]["access"].lower() == "allow":
47                         if "direction" in rule["properties"] and rule["properties"]["direction"].lower() == "inbound":
48                             if "protocol" in rule["properties"] and rule["properties"]["protocol"].lower() == "tcp":
49                                 if "destinationPortRanges" in rule["properties"]:
50                                     portRanges.extend(rule["properties"]["destinationPortRanges"])
51                                 if "destinationPortRange" in rule["properties"]:
52                                     portRanges.append(rule["properties"]["destinationPortRange"])
53
54                                 if "sourceAddressPrefixes" in rule["properties"]:
55                                     sourcePrefixes.extend(rule["properties"]["sourceAddressPrefixes"])
56                                 if "sourceAddressPrefix" in rule["properties"]:
57                                     sourcePrefixes.append(rule["properties"]["sourceAddressPrefix"])
58
59                                 for portRange in portRanges:
60                                     if self.is_port_in_range(portRange):
61                                         for prefix in sourcePrefixes:
62                                             if prefix in INTERNET_ADDRESSES:
63                                                 return CheckResult.FAILED
64
65         return CheckResult.PASSED
66

[end of checkov/arm/checks/resource/NSGRulePortAccessRestricted.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch> | diff --git a/checkov/arm/checks/resource/NSGRulePortAccessRestricted.py b/checkov/arm/checks/resource/NSGRulePortAccessRestricted.py

--- a/checkov/arm/checks/resource/NSGRulePortAccessRestricted.py
+++ b/checkov/arm/checks/resource/NSGRulePortAccessRestricted.py
@@ -45,7 +45,7 @@
                 if "properties" in rule:
                     if "access" in rule["properties"] and rule["properties"]["access"].lower() == "allow":
                         if "direction" in rule["properties"] and rule["properties"]["direction"].lower() == "inbound":
-                            if "protocol" in rule["properties"] and rule["properties"]["protocol"].lower() == "tcp":
+                            if "protocol" in rule["properties"] and rule["properties"]["protocol"].lower() in ("tcp", "*"):
                                 if "destinationPortRanges" in rule["properties"]:
                                     portRanges.extend(rule["properties"]["destinationPortRanges"])
                                 if "destinationPortRange" in rule["properties"]:

| {"golden_diff": "diff --git a/checkov/arm/checks/resource/NSGRulePortAccessRestricted.py b/checkov/arm/checks/resource/NSGRulePortAccessRestricted.py\n--- a/checkov/arm/checks/resource/NSGRulePortAccessRestricted.py\n+++ b/checkov/arm/checks/resource/NSGRulePortAccessRestricted.py\n@@ -45,7 +45,7 @@\n if \"properties\" in rule:\n if \"access\" in rule[\"properties\"] and rule[\"properties\"][\"access\"].lower() == \"allow\":\n if \"direction\" in rule[\"properties\"] and rule[\"properties\"][\"direction\"].lower() == \"inbound\":\n- if \"protocol\" in rule[\"properties\"] and rule[\"properties\"][\"protocol\"].lower() == \"tcp\":\n+ if \"protocol\" in rule[\"properties\"] and rule[\"properties\"][\"protocol\"].lower() in (\"tcp\", \"*\"):\n if \"destinationPortRanges\" in rule[\"properties\"]:\n portRanges.extend(rule[\"properties\"][\"destinationPortRanges\"])\n if \"destinationPortRange\" in rule[\"properties\"]:\n", "issue": "CKV_AZURE_9 & CKV_AZURE_10 - Scan fails if protocol value is a wildcard\n**Describe the issue**\r\nCKV_AZURE_9 & CKV_AZURE_10\r\n\r\nWhen scanning Bicep files the checks are looking for a protocol value of `tcp` and fail to catch when `*` is used.\r\n\r\n**Examples**\r\n\r\nThe following bicep code fails to produce a finding for CKV_AZURE_9 & CKV_AZURE_10\r\n```\r\nresource nsg 'Microsoft.Network/networkSecurityGroups@2021-05-01' = {\r\n name: nsgName\r\n location: nsgLocation\r\n properties: {\r\n securityRules: [\r\n {\r\n name: 'badrule'\r\n properties: {\r\n access: 'Allow'\r\n destinationAddressPrefix: '*'\r\n destinationPortRange: '*'\r\n direction: 'Inbound'\r\n priority: 100\r\n protocol: '*'\r\n sourceAddressPrefix: '*'\r\n sourcePortRange: '*'\r\n }\r\n }\r\n ]\r\n }\r\n}\r\n```\r\n\r\nWhile this works as expected:\r\n```\r\nresource nsg 'Microsoft.Network/networkSecurityGroups@2021-05-01' = {\r\n name: nsgName\r\n location: nsgLocation\r\n properties: {\r\n securityRules: [\r\n {\r\n name: 'badrule'\r\n properties: {\r\n 
access: 'Allow'\r\n destinationAddressPrefix: '*'\r\n destinationPortRange: '*'\r\n direction: 'Inbound'\r\n priority: 100\r\n protocol: 'tcp'\r\n sourceAddressPrefix: '*'\r\n sourcePortRange: '*'\r\n }\r\n }\r\n ]\r\n }\r\n}\r\n```\r\n\r\n**Version (please complete the following information):**\r\n - docker container 2.2.0\r\n\r\n**Additional context**\r\nA similar problem existed for Terraform that was previously fixed (see https://github.com/bridgecrewio/checkov/issues/601) \r\n\r\nI believe the relevant lines is: \r\nhttps://github.com/bridgecrewio/checkov/blob/master/checkov/arm/checks/resource/NSGRulePortAccessRestricted.py#LL48C4-L48C117\r\n\r\n\n", "before_files": [{"content": "import re\nfrom typing import Union, Dict, Any\n\nfrom checkov.common.models.enums import CheckResult, CheckCategories\nfrom checkov.arm.base_resource_check import BaseResourceCheck\n\n# https://docs.microsoft.com/en-us/azure/templates/microsoft.network/networksecuritygroups\n# https://docs.microsoft.com/en-us/azure/templates/microsoft.network/networksecuritygroups/securityrules\n\nINTERNET_ADDRESSES = [\"*\", \"0.0.0.0\", \"<nw>/0\", \"/0\", \"internet\", \"any\"] # nosec\nPORT_RANGE = re.compile(r\"\\d+-\\d+\")\n\n\nclass NSGRulePortAccessRestricted(BaseResourceCheck):\n def __init__(self, name: str, check_id: str, port: int) -> None:\n supported_resources = (\n \"Microsoft.Network/networkSecurityGroups\",\n \"Microsoft.Network/networkSecurityGroups/securityRules\",\n )\n categories = (CheckCategories.NETWORKING,)\n super().__init__(name=name, id=check_id, categories=categories, supported_resources=supported_resources)\n self.port = port\n\n def is_port_in_range(self, port_range: Union[int, str]) -> bool:\n if re.match(PORT_RANGE, str(port_range)):\n start, end = int(port_range.split(\"-\")[0]), int(port_range.split(\"-\")[1])\n if start <= self.port <= end:\n return True\n if port_range in (str(self.port), \"*\"):\n return True\n return False\n\n def scan_resource_conf(self, conf: 
Dict[str, Any]) -> CheckResult:\n if \"properties\" in conf:\n securityRules = []\n if self.entity_type == \"Microsoft.Network/networkSecurityGroups\":\n if \"securityRules\" in conf[\"properties\"]:\n securityRules.extend(conf[\"properties\"][\"securityRules\"])\n if self.entity_type == \"Microsoft.Network/networkSecurityGroups/securityRules\":\n securityRules.append(conf)\n\n for rule in securityRules:\n portRanges = []\n sourcePrefixes = []\n if \"properties\" in rule:\n if \"access\" in rule[\"properties\"] and rule[\"properties\"][\"access\"].lower() == \"allow\":\n if \"direction\" in rule[\"properties\"] and rule[\"properties\"][\"direction\"].lower() == \"inbound\":\n if \"protocol\" in rule[\"properties\"] and rule[\"properties\"][\"protocol\"].lower() == \"tcp\":\n if \"destinationPortRanges\" in rule[\"properties\"]:\n portRanges.extend(rule[\"properties\"][\"destinationPortRanges\"])\n if \"destinationPortRange\" in rule[\"properties\"]:\n portRanges.append(rule[\"properties\"][\"destinationPortRange\"])\n\n if \"sourceAddressPrefixes\" in rule[\"properties\"]:\n sourcePrefixes.extend(rule[\"properties\"][\"sourceAddressPrefixes\"])\n if \"sourceAddressPrefix\" in rule[\"properties\"]:\n sourcePrefixes.append(rule[\"properties\"][\"sourceAddressPrefix\"])\n\n for portRange in portRanges:\n if self.is_port_in_range(portRange):\n for prefix in sourcePrefixes:\n if prefix in INTERNET_ADDRESSES:\n return CheckResult.FAILED\n\n return CheckResult.PASSED\n", "path": "checkov/arm/checks/resource/NSGRulePortAccessRestricted.py"}]} | 1,801 | 221 |

gh_patches_debug_9237 | rasdani/github-patches | git_diff | mars-project__mars-1623 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[BUG] Setitem for DataFrame leads to a wrong dtypes

<!--

Thank you for your contribution!

Please review https://github.com/mars-project/mars/blob/master/CONTRIBUTING.rst before opening an issue.

-->

**Describe the bug**

Add columns for a DataFrame will lead to a wrong dtypes of input DataFrame.

**To Reproduce**

```python
In [1]: import mars.dataframe as md

In [2]: a = md.DataFrame({'a':[1,2,3]})

In [3]: a['new'] = 1

In [4]: a.op.inputs
Out[4]: [DataFrame <op=DataFrameDataSource, key=c212164d24d96ed634711c3b97f334cb>]

In [5]: a.op.inputs[0].dtypes
Out[5]:
a      int64
new    int64
dtype: object
```

**Expected behavior**

Input DataFrame's dtypes should have only one column.

</issue>
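
The symptom described in the issue above is typical of two names sharing one mutable object. The following sketch uses plain pandas rather than Mars, and the variable names are hypothetical. It assumes, as a simplification, that the input's dtypes are held in a `pandas.Series` that is passed around without being copied.

```python
import pandas as pd

# A stand-in for the dtypes Series of the input DataFrame.
original = pd.Series({"a": "int64"})

# Plain assignment creates a second name for the same object, not a copy.
alias = original
alias.loc["new"] = "int64"  # enlarging through the alias changes `original` too
print(list(original.index))

# Copying first keeps the source Series untouched.
fresh = pd.Series({"a": "int64"})
copied = fresh.copy(deep=True)
copied.loc["new"] = "int64"
print(list(fresh.index))
```

Taking a copy before mutating costs little for a dtypes Series, which holds only one entry per column.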

<code>

[start of mars/dataframe/indexing/setitem.py]

1 # Copyright 1999-2020 Alibaba Group Holding Ltd.
2 #
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 #
7 # http://www.apache.org/licenses/LICENSE-2.0
8 #
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14
15 import numpy as np
16 import pandas as pd
17 from pandas.api.types import is_list_like
18
19 from ... import opcodes
20 from ...core import OutputType
21 from ...serialize import KeyField, AnyField
22 from ...tensor.core import TENSOR_TYPE
23 from ...tiles import TilesError
24 from ..core import SERIES_TYPE, DataFrame
25 from ..initializer import Series as asseries
26 from ..operands import DataFrameOperand, DataFrameOperandMixin
27 from ..utils import parse_index
28
29
30 class DataFrameSetitem(DataFrameOperand, DataFrameOperandMixin):
31     _op_type_ = opcodes.INDEXSETVALUE
32
33     _target = KeyField('target')
34     _indexes = AnyField('indexes')
35     _value = AnyField('value')
36
37     def __init__(self, target=None, indexes=None, value=None, output_types=None, **kw):
38         super().__init__(_target=target, _indexes=indexes,
39                          _value=value, _output_types=output_types, **kw)
40         if self.output_types is None:
41             self.output_types = [OutputType.dataframe]
42
43     @property
44     def target(self):
45         return self._target
46
47     @property
48     def indexes(self):
49         return self._indexes
50
51     @property
52     def value(self):
53         return self._value
54
55     def _set_inputs(self, inputs):
56         super()._set_inputs(inputs)
57         self._target = self._inputs[0]
58         if len(inputs) > 1:
59             self._value = self._inputs[-1]
60
61     def __call__(self, target: DataFrame, value):
62         inputs = [target]
63         if np.isscalar(value):
64             value_dtype = np.array(value).dtype
65         else:
66             if isinstance(value, (pd.Series, SERIES_TYPE)):
67                 value = asseries(value)
68                 inputs.append(value)
69                 value_dtype = value.dtype
70             elif is_list_like(value) or isinstance(value, TENSOR_TYPE):
71                 value = asseries(value, index=target.index)
72                 inputs.append(value)
73                 value_dtype = value.dtype
74             else: # pragma: no cover
75                 raise TypeError('Wrong value type, could be one of scalar, Series or tensor')
76
77             if value.index_value.key != target.index_value.key: # pragma: no cover
78                 raise NotImplementedError('Does not support setting value '
79                                           'with different index for now')
80
81         index_value = target.index_value
82         dtypes = target.dtypes
83         dtypes.loc[self._indexes] = value_dtype
84         columns_value = parse_index(dtypes.index, store_data=True)
85         ret = self.new_dataframe(inputs, shape=(target.shape[0], len(dtypes)),
86                                  dtypes=dtypes, index_value=index_value,
87                                  columns_value=columns_value)
88         target.data = ret.data
89
90     @classmethod
91     def tile(cls, op):
92         out = op.outputs[0]
93         target = op.target
94         value = op.value
95         col = op.indexes
96         columns = target.columns_value.to_pandas()
97
98         if not np.isscalar(value):
99             # check if all chunk's index_value are identical
100             target_chunk_index_values = [c.index_value for c in target.chunks
101                                          if c.index[1] == 0]
102             value_chunk_index_values = [v.index_value for v in value.chunks]
103             is_identical = len(target_chunk_index_values) == len(target_chunk_index_values) and \
104                 all(c.key == v.key for c, v in zip(target_chunk_index_values, value_chunk_index_values))
105             if not is_identical:
106                 # do rechunk
107                 if any(np.isnan(s) for s in target.nsplits[0]) or \
108                         any(np.isnan(s) for s in value.nsplits[0]): # pragma: no cover
109                     raise TilesError('target or value has unknown chunk shape')
110
111                 value = value.rechunk({0: target.nsplits[0]})._inplace_tile()
112
113         out_chunks = []
114         nsplits = [list(ns) for ns in target.nsplits]
115         if col not in columns:
116             nsplits[1][-1] += 1
117             column_chunk_shape = target.chunk_shape[1]
118             # append to the last chunk on columns axis direction
119             for c in target.chunks:
120                 if c.index[-1] != column_chunk_shape - 1:
121                     # not effected, just output
122                     out_chunks.append(c)
123                 else:
124                     chunk_op = op.copy().reset_key()
125                     if np.isscalar(value):
126                         chunk_inputs = [c]
127                     else:
128                         value_chunk = value.cix[c.index[0], ]
129                         chunk_inputs = [c, value_chunk]
130
131                     dtypes = c.dtypes
132                     dtypes.loc[out.dtypes.index[-1]] = out.dtypes.iloc[-1]
133                     chunk = chunk_op.new_chunk(chunk_inputs,
134                                                shape=(c.shape[0], c.shape[1] + 1),
135                                                dtypes=dtypes,
136                                                index_value=c.index_value,
137                                                columns_value=parse_index(dtypes.index, store_data=True),
138                                                index=c.index)
139                     out_chunks.append(chunk)
140         else:
141             # replace exist column
142             for c in target.chunks:
143                 if col in c.dtypes:
144                     chunk_inputs = [c]
145                     if not np.isscalar(value):
146                         chunk_inputs.append(value.cix[c.index[0], ])
147                     chunk_op = op.copy().reset_key()
148                     chunk = chunk_op.new_chunk(chunk_inputs,
149                                                shape=c.shape,
150                                                dtypes=c.dtypes,
151                                                index_value=c.index_value,
152                                                columns_value=c.columns_value,
153                                                index=c.index)
154                     out_chunks.append(chunk)
155                 else:
156                     out_chunks.append(c)
157
158         params = out.params
159         params['nsplits'] = tuple(tuple(ns) for ns in nsplits)
160         params['chunks'] = out_chunks
161         new_op = op.copy()
162         return new_op.new_tileables(op.inputs, kws=[params])
163
164     @classmethod
165     def execute(cls, ctx, op):
166         target = ctx[op.target.key].copy()
167         value = ctx[op.value.key] if not np.isscalar(op.value) else op.value
168         target[op.indexes] = value
169         ctx[op.outputs[0].key] = target
170
171
172 def dataframe_setitem(df, col, value):
173     op = DataFrameSetitem(target=df, indexes=col, value=value)
174     return op(df, value)
175

[end of mars/dataframe/indexing/setitem.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch> | diff --git a/mars/dataframe/indexing/setitem.py b/mars/dataframe/indexing/setitem.py

--- a/mars/dataframe/indexing/setitem.py
+++ b/mars/dataframe/indexing/setitem.py
@@ -79,7 +79,7 @@
                                           'with different index for now')
 
         index_value = target.index_value
-        dtypes = target.dtypes
+        dtypes = target.dtypes.copy(deep=True)
         dtypes.loc[self._indexes] = value_dtype
         columns_value = parse_index(dtypes.index, store_data=True)
         ret = self.new_dataframe(inputs, shape=(target.shape[0], len(dtypes)),

| {"golden_diff": "diff --git a/mars/dataframe/indexing/setitem.py b/mars/dataframe/indexing/setitem.py\n--- a/mars/dataframe/indexing/setitem.py\n+++ b/mars/dataframe/indexing/setitem.py\n@@ -79,7 +79,7 @@\n 'with different index for now')\n \n index_value = target.index_value\n- dtypes = target.dtypes\n+ dtypes = target.dtypes.copy(deep=True)\n dtypes.loc[self._indexes] = value_dtype\n columns_value = parse_index(dtypes.index, store_data=True)\n ret = self.new_dataframe(inputs, shape=(target.shape[0], len(dtypes)),\n", "issue": "[BUG] Setitem for DataFrame leads to a wrong dtypes\n<!--\r\nThank you for your contribution!\r\n\r\nPlease review https://github.com/mars-project/mars/blob/master/CONTRIBUTING.rst before opening an issue.\r\n-->\r\n\r\n**Describe the bug**\r\nAdd columns for a DataFrame will lead to a wrong dtypes of input DataFrame.\r\n\r\n**To Reproduce**\r\n```python\r\nIn [1]: import mars.dataframe as md \r\n\r\nIn [2]: a = md.DataFrame({'a':[1,2,3]}) \r\n\r\nIn [3]: a['new'] = 1 \r\n\r\nIn [4]: a.op.inputs \r\nOut[4]: [DataFrame <op=DataFrameDataSource, key=c212164d24d96ed634711c3b97f334cb>]\r\n\r\nIn [5]: a.op.inputs[0].dtypes \r\nOut[5]: \r\na int64\r\nnew int64\r\ndtype: object\r\n```\r\n**Expected behavior**\r\nInput DataFrame's dtypes should have only one column.\r\n\n", "before_files": [{"content": "# Copyright 1999-2020 Alibaba Group Holding Ltd.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nimport numpy as np\nimport pandas as pd\nfrom 
pandas.api.types import is_list_like\n\nfrom ... import opcodes\nfrom ...core import OutputType\nfrom ...serialize import KeyField, AnyField\nfrom ...tensor.core import TENSOR_TYPE\nfrom ...tiles import TilesError\nfrom ..core import SERIES_TYPE, DataFrame\nfrom ..initializer import Series as asseries\nfrom ..operands import DataFrameOperand, DataFrameOperandMixin\nfrom ..utils import parse_index\n\n\nclass DataFrameSetitem(DataFrameOperand, DataFrameOperandMixin):\n _op_type_ = opcodes.INDEXSETVALUE\n\n _target = KeyField('target')\n _indexes = AnyField('indexes')\n _value = AnyField('value')\n\n def __init__(self, target=None, indexes=None, value=None, output_types=None, **kw):\n super().__init__(_target=target, _indexes=indexes,\n _value=value, _output_types=output_types, **kw)\n if self.output_types is None:\n self.output_types = [OutputType.dataframe]\n\n @property\n def target(self):\n return self._target\n\n @property\n def indexes(self):\n return self._indexes\n\n @property\n def value(self):\n return self._value\n\n def _set_inputs(self, inputs):\n super()._set_inputs(inputs)\n self._target = self._inputs[0]\n if len(inputs) > 1:\n self._value = self._inputs[-1]\n\n def __call__(self, target: DataFrame, value):\n inputs = [target]\n if np.isscalar(value):\n value_dtype = np.array(value).dtype\n else:\n if isinstance(value, (pd.Series, SERIES_TYPE)):\n value = asseries(value)\n inputs.append(value)\n value_dtype = value.dtype\n elif is_list_like(value) or isinstance(value, TENSOR_TYPE):\n value = asseries(value, index=target.index)\n inputs.append(value)\n value_dtype = value.dtype\n else: # pragma: no cover\n raise TypeError('Wrong value type, could be one of scalar, Series or tensor')\n\n if value.index_value.key != target.index_value.key: # pragma: no cover\n raise NotImplementedError('Does not support setting value '\n 'with different index for now')\n\n index_value = target.index_value\n dtypes = target.dtypes\n dtypes.loc[self._indexes] = 
value_dtype\n columns_value = parse_index(dtypes.index, store_data=True)\n ret = self.new_dataframe(inputs, shape=(target.shape[0], len(dtypes)),\n dtypes=dtypes, index_value=index_value,\n columns_value=columns_value)\n target.data = ret.data\n\n @classmethod\n def tile(cls, op):\n out = op.outputs[0]\n target = op.target\n value = op.value\n col = op.indexes\n columns = target.columns_value.to_pandas()\n\n if not np.isscalar(value):\n # check if all chunk's index_value are identical\n target_chunk_index_values = [c.index_value for c in target.chunks\n if c.index[1] == 0]\n value_chunk_index_values = [v.index_value for v in value.chunks]\n is_identical = len(target_chunk_index_values) == len(target_chunk_index_values) and \\\n all(c.key == v.key for c, v in zip(target_chunk_index_values, value_chunk_index_values))\n if not is_identical:\n # do rechunk\n if any(np.isnan(s) for s in target.nsplits[0]) or \\\n any(np.isnan(s) for s in value.nsplits[0]): # pragma: no cover\n raise TilesError('target or value has unknown chunk shape')\n\n value = value.rechunk({0: target.nsplits[0]})._inplace_tile()\n\n out_chunks = []\n nsplits = [list(ns) for ns in target.nsplits]\n if col not in columns:\n nsplits[1][-1] += 1\n column_chunk_shape = target.chunk_shape[1]\n # append to the last chunk on columns axis direction\n for c in target.chunks:\n if c.index[-1] != column_chunk_shape - 1:\n # not effected, just output\n out_chunks.append(c)\n else:\n chunk_op = op.copy().reset_key()\n if np.isscalar(value):\n chunk_inputs = [c]\n else:\n value_chunk = value.cix[c.index[0], ]\n chunk_inputs = [c, value_chunk]\n\n dtypes = c.dtypes\n dtypes.loc[out.dtypes.index[-1]] = out.dtypes.iloc[-1]\n chunk = chunk_op.new_chunk(chunk_inputs,\n shape=(c.shape[0], c.shape[1] + 1),\n dtypes=dtypes,\n index_value=c.index_value,\n columns_value=parse_index(dtypes.index, store_data=True),\n index=c.index)\n out_chunks.append(chunk)\n else:\n # replace exist column\n for c in target.chunks:\n if col 
in c.dtypes:\n chunk_inputs = [c]\n if not np.isscalar(value):\n chunk_inputs.append(value.cix[c.index[0], ])\n chunk_op = op.copy().reset_key()\n chunk = chunk_op.new_chunk(chunk_inputs,\n shape=c.shape,\n dtypes=c.dtypes,\n index_value=c.index_value,\n columns_value=c.columns_value,\n index=c.index)\n out_chunks.append(chunk)\n else:\n out_chunks.append(c)\n\n params = out.params\n params['nsplits'] = tuple(tuple(ns) for ns in nsplits)\n params['chunks'] = out_chunks\n new_op = op.copy()\n return new_op.new_tileables(op.inputs, kws=[params])\n\n @classmethod\n def execute(cls, ctx, op):\n target = ctx[op.target.key].copy()\n value = ctx[op.value.key] if not np.isscalar(op.value) else op.value\n target[op.indexes] = value\n ctx[op.outputs[0].key] = target\n\n\ndef dataframe_setitem(df, col, value):\n op = DataFrameSetitem(target=df, indexes=col, value=value)\n return op(df, value)\n", "path": "mars/dataframe/indexing/setitem.py"}]} | 2,679 | 144 |

gh_patches_debug_29978 | rasdani/github-patches | git_diff | NVIDIA-Merlin__NVTabular-693 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[BUG] Horovod example scripts fail when user supplies batch size parameter

**Describe the bug**

Using the batch size parameter on the TF Horovod example causes a type error with a mismatch between str and int.

**Steps/Code to reproduce bug**

Run the TF Horovod example with the arguments `--b_size 1024`.

**Expected behavior**

The script should accept a user-provided batch size.

**Environment details (please complete the following information):**

- Environment location: Bare-metal

- Method of NVTabular install: conda

**Additional context**

I believe [this line](https://github.com/NVIDIA/NVTabular/blob/main/examples/horovod/tf_hvd_simple.py#L30) and the same line in the Torch example just need type coercions from str to int.

</issue>
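
The type coercion suggested in the issue above can be sketched with `argparse` alone. This is a minimal illustration under stated assumptions, not the repository's actual fix: it assumes that passing `type=int` to `add_argument` is an acceptable way to coerce the value, and it parses an explicit argument list so that it runs without a real command line.

```python
import argparse

parser = argparse.ArgumentParser(description="Batch size parsing sketch.")
# type=int asks argparse to convert the command-line string to an integer.
parser.add_argument("--b_size", default=None, type=int, help="batch size")

# Parse an explicit list instead of sys.argv to keep the sketch self-contained.
args = parser.parse_args(["--b_size", "1024"])

# Same fallback pattern as the example script: use the default when no value is given.
BATCH_SIZE = args.b_size or 16384
print(type(BATCH_SIZE).__name__, BATCH_SIZE)
```

Converting at the parser keeps the rest of the script unchanged. Wrapping the value in `int(...)` where `BATCH_SIZE` is assigned would be an alternative with the same effect.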

<code>

[start of examples/horovod/tf_hvd_simple.py]

1 # External dependencies
2 import argparse
3 import glob
4 import os
5
6 import cupy
7
8 # we can control how much memory to give tensorflow with this environment variable
9 # IMPORTANT: make sure you do this before you initialize TF's runtime, otherwise
10 # TF will have claimed all free GPU memory
11 os.environ["TF_MEMORY_ALLOCATION"] = "0.3" # fraction of free memory
12 import horovod.tensorflow as hvd # noqa: E402
13 import tensorflow as tf # noqa: E402
14
15 import nvtabular as nvt # noqa: E402
16 from nvtabular.framework_utils.tensorflow import layers # noqa: E402
17 from nvtabular.loader.tensorflow import KerasSequenceLoader # noqa: E402
18
19 parser = argparse.ArgumentParser(description="Process some integers.")
20 parser.add_argument("--dir_in", default=None, help="Input directory")
21 parser.add_argument("--b_size", default=None, help="batch size")
22 parser.add_argument("--cats", default=None, help="categorical columns")
23 parser.add_argument("--cats_mh", default=None, help="categorical multihot columns")
24 parser.add_argument("--conts", default=None, help="continuous columns")
25 parser.add_argument("--labels", default=None, help="continuous columns")
26 args = parser.parse_args()
27
28
29 BASE_DIR = args.dir_in or "./data/"
30 BATCH_SIZE = args.b_size or 16384 # Batch Size
31 CATEGORICAL_COLUMNS = args.cats or ["movieId", "userId"] # Single-hot
32 CATEGORICAL_MH_COLUMNS = args.cats_mh or ["genres"] # Multi-hot
33 NUMERIC_COLUMNS = args.conts or []
34 TRAIN_PATHS = sorted(
35     glob.glob(os.path.join(BASE_DIR, "train/*.parquet"))
36 ) # Output from ETL-with-NVTabular
37 hvd.init()
38
39 # Seed with system randomness (or a static seed)
40 cupy.random.seed(None)
41
42
43 def seed_fn():
44     """
45     Generate consistent dataloader shuffle seeds across workers
46
47     Reseeds each worker's dataloader each epoch to get fresh a shuffle
48     that's consistent across workers.
49     """
50     min_int, max_int = tf.int32.limits
51     max_rand = max_int // hvd.size()
52
53     # Generate a seed fragment
54     seed_fragment = cupy.random.randint(0, max_rand).get()
55
56     # Aggregate seed fragments from all Horovod workers
57     seed_tensor = tf.constant(seed_fragment)
58     reduced_seed = hvd.allreduce(seed_tensor, name="shuffle_seed", op=hvd.mpi_ops.Sum) % max_rand
59
60     return reduced_seed
61
62
63 proc = nvt.Workflow.load(os.path.join(BASE_DIR, "workflow/"))
64 EMBEDDING_TABLE_SHAPES = nvt.ops.get_embedding_sizes(proc)
65
66 train_dataset_tf = KerasSequenceLoader(
67     TRAIN_PATHS, # you could also use a glob pattern
68     batch_size=BATCH_SIZE,
69     label_names=["rating"],
70     cat_names=CATEGORICAL_COLUMNS + CATEGORICAL_MH_COLUMNS,
71     cont_names=NUMERIC_COLUMNS,
72     engine="parquet",
73     shuffle=True,
74     seed_fn=seed_fn,
75     buffer_size=0.06, # how many batches to load at once
76     parts_per_chunk=1,
77     global_size=hvd.size(),
78     global_rank=hvd.rank(),
79 )
80 inputs = {} # tf.keras.Input placeholders for each feature to be used
81 emb_layers = [] # output of all embedding layers, which will be concatenated
82 for col in CATEGORICAL_COLUMNS:
83     inputs[col] = tf.keras.Input(name=col, dtype=tf.int32, shape=(1,))
84 # Note that we need two input tensors for multi-hot categorical features
85 for col in CATEGORICAL_MH_COLUMNS:
86     inputs[col + "__values"] = tf.keras.Input(name=f"{col}__values", dtype=tf.int64, shape=(1,))
87     inputs[col + "__nnzs"] = tf.keras.Input(name=f"{col}__nnzs", dtype=tf.int64, shape=(1,))
88 for col in CATEGORICAL_COLUMNS + CATEGORICAL_MH_COLUMNS:
89     emb_layers.append(
90         tf.feature_column.embedding_column(
91             tf.feature_column.categorical_column_with_identity(
92                 col, EMBEDDING_TABLE_SHAPES[col][0] # Input dimension (vocab size)
93             ),
94             EMBEDDING_TABLE_SHAPES[col][1], # Embedding output dimension
95         )
96     )
97 emb_layer = layers.DenseFeatures(emb_layers)
98 x_emb_output = emb_layer(inputs)
99 x = tf.keras.layers.Dense(128, activation="relu")(x_emb_output)
100 x = tf.keras.layers.Dense(128, activation="relu")(x)
101 x = tf.keras.layers.Dense(128, activation="relu")(x)
102 x = tf.keras.layers.Dense(1, activation="sigmoid")(x)
103 model = tf.keras.Model(inputs=inputs, outputs=x)
104 loss = tf.losses.BinaryCrossentropy()
105 opt = tf.keras.optimizers.SGD(0.01 * hvd.size())
106 opt = hvd.DistributedOptimizer(opt)
107 checkpoint_dir = "./checkpoints"
108 checkpoint = tf.train.Checkpoint(model=model, optimizer=opt)
109
110
111 @tf.function(experimental_relax_shapes=True)
112 def training_step(examples, labels, first_batch):
113     with tf.GradientTape() as tape:
114         probs = model(examples, training=True)

115 loss_value = loss(labels, probs)

116 # Horovod: add Horovod Distributed GradientTape.

117 tape = hvd.DistributedGradientTape(tape, sparse_as_dense=True)

118 grads = tape.gradient(loss_value, model.trainable_variables)

119 opt.apply_gradients(zip(grads, model.trainable_variables))

120 # Horovod: broadcast initial variable states from rank 0 to all other processes.

121 # This is necessary to ensure consistent initialization of all workers when

122 # training is started with random weights or restored from a checkpoint.

123 #

124 # Note: broadcast should be done after the first gradient step to ensure optimizer

125 # initialization.

126 if first_batch:

127 hvd.broadcast_variables(model.variables, root_rank=0)

128 hvd.broadcast_variables(opt.variables(), root_rank=0)

129 return loss_value

130

131

132 # Horovod: adjust number of steps based on number of GPUs.

133 for batch, (examples, labels) in enumerate(train_dataset_tf):

134 loss_value = training_step(examples, labels, batch == 0)

135 if batch % 10 == 0 and hvd.local_rank() == 0:

136 print("Step #%d\tLoss: %.6f" % (batch, loss_value))

137 hvd.join()

138 # Horovod: save checkpoints only on worker 0 to prevent other workers from

139 # corrupting it.

140 if hvd.rank() == 0:

141 checkpoint.save(checkpoint_dir)

142

[end of examples/horovod/tf_hvd_simple.py]

[start of examples/horovod/torch-nvt-horovod.py]

1 import argparse

2 import glob

3 import os

4 from time import time

5

6 import cupy

7 import torch

8

9 import nvtabular as nvt

10 from nvtabular.framework_utils.torch.models import Model

11 from nvtabular.framework_utils.torch.utils import process_epoch

12 from nvtabular.loader.torch import DLDataLoader, TorchAsyncItr

13

14 # Horovod must be the last import to avoid conflicts

15 import horovod.torch as hvd # noqa: E402, isort:skip

16

17

18 parser = argparse.ArgumentParser(description="Train a multi-gpu model with Torch and Horovod")

19 parser.add_argument("--dir_in", default=None, help="Input directory")

20 parser.add_argument("--batch_size", default=None, help="Batch size")

21 parser.add_argument("--cats", default=None, help="Categorical columns")

22 parser.add_argument("--cats_mh", default=None, help="Categorical multihot columns")

23 parser.add_argument("--conts", default=None, help="Continuous columns")

24 parser.add_argument("--labels", default=None, help="Label columns")

25 parser.add_argument("--epochs", default=1, help="Training epochs")

26 args = parser.parse_args()

27

28 hvd.init()

29

30 gpu_to_use = hvd.local_rank()

31

32 if torch.cuda.is_available():

33 torch.cuda.set_device(gpu_to_use)

34

35

36 BASE_DIR = os.path.expanduser(args.dir_in or "./data/")

37 BATCH_SIZE = args.batch_size or 16384 # Batch Size

38 CATEGORICAL_COLUMNS = args.cats or ["movieId", "userId"] # Single-hot

39 CATEGORICAL_MH_COLUMNS = args.cats_mh or ["genres"] # Multi-hot

40 NUMERIC_COLUMNS = args.conts or []

41

42 # Output from ETL-with-NVTabular

43 TRAIN_PATHS = sorted(glob.glob(os.path.join(BASE_DIR, "train", "*.parquet")))

44

45 proc = nvt.Workflow.load(os.path.join(BASE_DIR, "workflow/"))

46

47 EMBEDDING_TABLE_SHAPES = nvt.ops.get_embedding_sizes(proc)

48

49

50 # TensorItrDataset returns a single batch of x_cat, x_cont, y.

51 def collate_fn(x):

52 return x

53

54

55 # Seed with system randomness (or a static seed)

56 cupy.random.seed(None)

57

58

59 def seed_fn():

60 """

61 Generate consistent dataloader shuffle seeds across workers

62

63 Reseeds each worker's dataloader each epoch to get fresh a shuffle

64 that's consistent across workers.

65 """

66

67 max_rand = torch.iinfo(torch.int).max // hvd.size()

68

69 # Generate a seed fragment

70 seed_fragment = cupy.random.randint(0, max_rand)

71

72 # Aggregate seed fragments from all Horovod workers

73 seed_tensor = torch.tensor(seed_fragment)

74 reduced_seed = hvd.allreduce(seed_tensor, name="shuffle_seed", op=hvd.mpi_ops.Sum) % max_rand

75

76 return reduced_seed

77

78

79 train_dataset = TorchAsyncItr(

80 nvt.Dataset(TRAIN_PATHS),

81 batch_size=BATCH_SIZE,

82 cats=CATEGORICAL_COLUMNS + CATEGORICAL_MH_COLUMNS,

83 conts=NUMERIC_COLUMNS,

84 labels=["rating"],

85 devices=[gpu_to_use],

86 global_size=hvd.size(),

87 global_rank=hvd.rank(),

88 shuffle=True,

89 seed_fn=seed_fn,

90 )

91 train_loader = DLDataLoader(

92 train_dataset, batch_size=None, collate_fn=collate_fn, pin_memory=False, num_workers=0

93 )

94

95

96 EMBEDDING_TABLE_SHAPES_TUPLE = (

97 {

98 CATEGORICAL_COLUMNS[0]: EMBEDDING_TABLE_SHAPES[CATEGORICAL_COLUMNS[0]],

99 CATEGORICAL_COLUMNS[1]: EMBEDDING_TABLE_SHAPES[CATEGORICAL_COLUMNS[1]],

100 },

101 {CATEGORICAL_MH_COLUMNS[0]: EMBEDDING_TABLE_SHAPES[CATEGORICAL_MH_COLUMNS[0]]},

102 )

103

104 model = Model(

105 embedding_table_shapes=EMBEDDING_TABLE_SHAPES_TUPLE,

106 num_continuous=0,

107 emb_dropout=0.0,

108 layer_hidden_dims=[128, 128, 128],

109 layer_dropout_rates=[0.0, 0.0, 0.0],

110 ).cuda()

111

112 lr_scaler = hvd.size()

113

114 optimizer = torch.optim.Adam(model.parameters(), lr=0.01 * lr_scaler)

115

116 hvd.broadcast_parameters(model.state_dict(), root_rank=0)

117 hvd.broadcast_optimizer_state(optimizer, root_rank=0)

118

119 optimizer = hvd.DistributedOptimizer(optimizer, named_parameters=model.named_parameters())

120

121 for epoch in range(args.epochs):

122 start = time()

123 print(f"Training epoch {epoch}")

124 train_loss, y_pred, y = process_epoch(train_loader, model, train=True, optimizer=optimizer)

125 hvd.join(gpu_to_use)

126 hvd.broadcast_parameters(model.state_dict(), root_rank=0)

127 print(f"Epoch {epoch:02d}. Train loss: {train_loss:.4f}.")

128 hvd.join(gpu_to_use)

129 t_final = time() - start

130 total_rows = train_dataset.num_rows_processed

131 print(

132 f"run_time: {t_final} - rows: {total_rows} - "

133 f"epochs: {epoch} - dl_thru: {total_rows / t_final}"

134 )

135

136

137 hvd.join(gpu_to_use)

138 if hvd.local_rank() == 0:

139 print("Training complete")

140

[end of examples/horovod/torch-nvt-horovod.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/examples/horovod/tf_hvd_simple.py b/examples/horovod/tf_hvd_simple.py

--- a/examples/horovod/tf_hvd_simple.py

+++ b/examples/horovod/tf_hvd_simple.py

@@ -18,7 +18,7 @@

parser = argparse.ArgumentParser(description="Process some integers.")

parser.add_argument("--dir_in", default=None, help="Input directory")

-parser.add_argument("--b_size", default=None, help="batch size")

+parser.add_argument("--batch_size", default=None, help="batch size")

parser.add_argument("--cats", default=None, help="categorical columns")

parser.add_argument("--cats_mh", default=None, help="categorical multihot columns")

parser.add_argument("--conts", default=None, help="continuous columns")

@@ -27,7 +27,7 @@

BASE_DIR = args.dir_in or "./data/"

-BATCH_SIZE = args.b_size or 16384 # Batch Size

+BATCH_SIZE = int(args.batch_size) or 16384 # Batch Size

CATEGORICAL_COLUMNS = args.cats or ["movieId", "userId"] # Single-hot

CATEGORICAL_MH_COLUMNS = args.cats_mh or ["genres"] # Multi-hot

NUMERIC_COLUMNS = args.conts or []

diff --git a/examples/horovod/torch-nvt-horovod.py b/examples/horovod/torch-nvt-horovod.py

--- a/examples/horovod/torch-nvt-horovod.py

+++ b/examples/horovod/torch-nvt-horovod.py

@@ -34,7 +34,7 @@

BASE_DIR = os.path.expanduser(args.dir_in or "./data/")

-BATCH_SIZE = args.batch_size or 16384 # Batch Size

+BATCH_SIZE = int(args.batch_size) or 16384 # Batch Size

CATEGORICAL_COLUMNS = args.cats or ["movieId", "userId"] # Single-hot

CATEGORICAL_MH_COLUMNS = args.cats_mh or ["genres"] # Multi-hot

NUMERIC_COLUMNS = args.conts or []

| {"golden_diff": "diff --git a/examples/horovod/tf_hvd_simple.py b/examples/horovod/tf_hvd_simple.py\n--- a/examples/horovod/tf_hvd_simple.py\n+++ b/examples/horovod/tf_hvd_simple.py\n@@ -18,7 +18,7 @@\n \n parser = argparse.ArgumentParser(description=\"Process some integers.\")\n parser.add_argument(\"--dir_in\", default=None, help=\"Input directory\")\n-parser.add_argument(\"--b_size\", default=None, help=\"batch size\")\n+parser.add_argument(\"--batch_size\", default=None, help=\"batch size\")\n parser.add_argument(\"--cats\", default=None, help=\"categorical columns\")\n parser.add_argument(\"--cats_mh\", default=None, help=\"categorical multihot columns\")\n parser.add_argument(\"--conts\", default=None, help=\"continuous columns\")\n@@ -27,7 +27,7 @@\n \n \n BASE_DIR = args.dir_in or \"./data/\"\n-BATCH_SIZE = args.b_size or 16384 # Batch Size\n+BATCH_SIZE = int(args.batch_size) or 16384 # Batch Size\n CATEGORICAL_COLUMNS = args.cats or [\"movieId\", \"userId\"] # Single-hot\n CATEGORICAL_MH_COLUMNS = args.cats_mh or [\"genres\"] # Multi-hot\n NUMERIC_COLUMNS = args.conts or []\ndiff --git a/examples/horovod/torch-nvt-horovod.py b/examples/horovod/torch-nvt-horovod.py\n--- a/examples/horovod/torch-nvt-horovod.py\n+++ b/examples/horovod/torch-nvt-horovod.py\n@@ -34,7 +34,7 @@\n \n \n BASE_DIR = os.path.expanduser(args.dir_in or \"./data/\")\n-BATCH_SIZE = args.batch_size or 16384 # Batch Size\n+BATCH_SIZE = int(args.batch_size) or 16384 # Batch Size\n CATEGORICAL_COLUMNS = args.cats or [\"movieId\", \"userId\"] # Single-hot\n CATEGORICAL_MH_COLUMNS = args.cats_mh or [\"genres\"] # Multi-hot\n NUMERIC_COLUMNS = args.conts or []\n", "issue": "[BUG] Horovod example scripts fail when user supplies batch size parameter\n**Describe the bug**\r\nUsing the batch size parameter on the TF Horovod example causes a type error with a mismatch between str and int.\r\n\r\n**Steps/Code to reproduce bug**\r\nRun the TF Horovod example with the arguments `--b_size 
1024`.\r\n\r\n**Expected behavior**\r\nThe script should accept a user-provided batch size.\r\n\r\n**Environment details (please complete the following information):**\r\n - Environment location: Bare-metal\r\n - Method of NVTabular install: conda\r\n \r\n**Additional context**\r\nI believe [this line](https://github.com/NVIDIA/NVTabular/blob/main/examples/horovod/tf_hvd_simple.py#L30) and the same line in the Torch example just need type coercions from str to int.\r\n\n", "before_files": [{"content": "# External dependencies\nimport argparse\nimport glob\nimport os\n\nimport cupy\n\n# we can control how much memory to give tensorflow with this environment variable\n# IMPORTANT: make sure you do this before you initialize TF's runtime, otherwise\n# TF will have claimed all free GPU memory\nos.environ[\"TF_MEMORY_ALLOCATION\"] = \"0.3\" # fraction of free memory\nimport horovod.tensorflow as hvd # noqa: E402\nimport tensorflow as tf # noqa: E402\n\nimport nvtabular as nvt # noqa: E402\nfrom nvtabular.framework_utils.tensorflow import layers # noqa: E402\nfrom nvtabular.loader.tensorflow import KerasSequenceLoader # noqa: E402\n\nparser = argparse.ArgumentParser(description=\"Process some integers.\")\nparser.add_argument(\"--dir_in\", default=None, help=\"Input directory\")\nparser.add_argument(\"--b_size\", default=None, help=\"batch size\")\nparser.add_argument(\"--cats\", default=None, help=\"categorical columns\")\nparser.add_argument(\"--cats_mh\", default=None, help=\"categorical multihot columns\")\nparser.add_argument(\"--conts\", default=None, help=\"continuous columns\")\nparser.add_argument(\"--labels\", default=None, help=\"continuous columns\")\nargs = parser.parse_args()\n\n\nBASE_DIR = args.dir_in or \"./data/\"\nBATCH_SIZE = args.b_size or 16384 # Batch Size\nCATEGORICAL_COLUMNS = args.cats or [\"movieId\", \"userId\"] # Single-hot\nCATEGORICAL_MH_COLUMNS = args.cats_mh or [\"genres\"] # Multi-hot\nNUMERIC_COLUMNS = args.conts or []\nTRAIN_PATHS = 
sorted(\n glob.glob(os.path.join(BASE_DIR, \"train/*.parquet\"))\n) # Output from ETL-with-NVTabular\nhvd.init()\n\n# Seed with system randomness (or a static seed)\ncupy.random.seed(None)\n\n\ndef seed_fn():\n \"\"\"\n Generate consistent dataloader shuffle seeds across workers\n\n Reseeds each worker's dataloader each epoch to get fresh a shuffle\n that's consistent across workers.\n \"\"\"\n min_int, max_int = tf.int32.limits\n max_rand = max_int // hvd.size()\n\n # Generate a seed fragment\n seed_fragment = cupy.random.randint(0, max_rand).get()\n\n # Aggregate seed fragments from all Horovod workers\n seed_tensor = tf.constant(seed_fragment)\n reduced_seed = hvd.allreduce(seed_tensor, name=\"shuffle_seed\", op=hvd.mpi_ops.Sum) % max_rand\n\n return reduced_seed\n\n\nproc = nvt.Workflow.load(os.path.join(BASE_DIR, \"workflow/\"))\nEMBEDDING_TABLE_SHAPES = nvt.ops.get_embedding_sizes(proc)\n\ntrain_dataset_tf = KerasSequenceLoader(\n TRAIN_PATHS, # you could also use a glob pattern\n batch_size=BATCH_SIZE,\n label_names=[\"rating\"],\n cat_names=CATEGORICAL_COLUMNS + CATEGORICAL_MH_COLUMNS,\n cont_names=NUMERIC_COLUMNS,\n engine=\"parquet\",\n shuffle=True,\n seed_fn=seed_fn,\n buffer_size=0.06, # how many batches to load at once\n parts_per_chunk=1,\n global_size=hvd.size(),\n global_rank=hvd.rank(),\n)\ninputs = {} # tf.keras.Input placeholders for each feature to be used\nemb_layers = [] # output of all embedding layers, which will be concatenated\nfor col in CATEGORICAL_COLUMNS:\n inputs[col] = tf.keras.Input(name=col, dtype=tf.int32, shape=(1,))\n# Note that we need two input tensors for multi-hot categorical features\nfor col in CATEGORICAL_MH_COLUMNS:\n inputs[col + \"__values\"] = tf.keras.Input(name=f\"{col}__values\", dtype=tf.int64, shape=(1,))\n inputs[col + \"__nnzs\"] = tf.keras.Input(name=f\"{col}__nnzs\", dtype=tf.int64, shape=(1,))\nfor col in CATEGORICAL_COLUMNS + CATEGORICAL_MH_COLUMNS:\n emb_layers.append(\n 
tf.feature_column.embedding_column(\n tf.feature_column.categorical_column_with_identity(\n col, EMBEDDING_TABLE_SHAPES[col][0] # Input dimension (vocab size)\n ),\n EMBEDDING_TABLE_SHAPES[col][1], # Embedding output dimension\n )\n )\nemb_layer = layers.DenseFeatures(emb_layers)\nx_emb_output = emb_layer(inputs)\nx = tf.keras.layers.Dense(128, activation=\"relu\")(x_emb_output)\nx = tf.keras.layers.Dense(128, activation=\"relu\")(x)\nx = tf.keras.layers.Dense(128, activation=\"relu\")(x)\nx = tf.keras.layers.Dense(1, activation=\"sigmoid\")(x)\nmodel = tf.keras.Model(inputs=inputs, outputs=x)\nloss = tf.losses.BinaryCrossentropy()\nopt = tf.keras.optimizers.SGD(0.01 * hvd.size())\nopt = hvd.DistributedOptimizer(opt)\ncheckpoint_dir = \"./checkpoints\"\ncheckpoint = tf.train.Checkpoint(model=model, optimizer=opt)\n\n\n@tf.function(experimental_relax_shapes=True)\ndef training_step(examples, labels, first_batch):\n with tf.GradientTape() as tape:\n probs = model(examples, training=True)\n loss_value = loss(labels, probs)\n # Horovod: add Horovod Distributed GradientTape.\n tape = hvd.DistributedGradientTape(tape, sparse_as_dense=True)\n grads = tape.gradient(loss_value, model.trainable_variables)\n opt.apply_gradients(zip(grads, model.trainable_variables))\n # Horovod: broadcast initial variable states from rank 0 to all other processes.\n # This is necessary to ensure consistent initialization of all workers when\n # training is started with random weights or restored from a checkpoint.\n #\n # Note: broadcast should be done after the first gradient step to ensure optimizer\n # initialization.\n if first_batch:\n hvd.broadcast_variables(model.variables, root_rank=0)\n hvd.broadcast_variables(opt.variables(), root_rank=0)\n return loss_value\n\n\n# Horovod: adjust number of steps based on number of GPUs.\nfor batch, (examples, labels) in enumerate(train_dataset_tf):\n loss_value = training_step(examples, labels, batch == 0)\n if batch % 10 == 0 and 
hvd.local_rank() == 0:\n print(\"Step #%d\\tLoss: %.6f\" % (batch, loss_value))\nhvd.join()\n# Horovod: save checkpoints only on worker 0 to prevent other workers from\n# corrupting it.\nif hvd.rank() == 0:\n checkpoint.save(checkpoint_dir)\n", "path": "examples/horovod/tf_hvd_simple.py"}, {"content": "import argparse\nimport glob\nimport os\nfrom time import time\n\nimport cupy\nimport torch\n\nimport nvtabular as nvt\nfrom nvtabular.framework_utils.torch.models import Model\nfrom nvtabular.framework_utils.torch.utils import process_epoch\nfrom nvtabular.loader.torch import DLDataLoader, TorchAsyncItr\n\n# Horovod must be the last import to avoid conflicts\nimport horovod.torch as hvd # noqa: E402, isort:skip\n\n\nparser = argparse.ArgumentParser(description=\"Train a multi-gpu model with Torch and Horovod\")\nparser.add_argument(\"--dir_in\", default=None, help=\"Input directory\")\nparser.add_argument(\"--batch_size\", default=None, help=\"Batch size\")\nparser.add_argument(\"--cats\", default=None, help=\"Categorical columns\")\nparser.add_argument(\"--cats_mh\", default=None, help=\"Categorical multihot columns\")\nparser.add_argument(\"--conts\", default=None, help=\"Continuous columns\")\nparser.add_argument(\"--labels\", default=None, help=\"Label columns\")\nparser.add_argument(\"--epochs\", default=1, help=\"Training epochs\")\nargs = parser.parse_args()\n\nhvd.init()\n\ngpu_to_use = hvd.local_rank()\n\nif torch.cuda.is_available():\n torch.cuda.set_device(gpu_to_use)\n\n\nBASE_DIR = os.path.expanduser(args.dir_in or \"./data/\")\nBATCH_SIZE = args.batch_size or 16384 # Batch Size\nCATEGORICAL_COLUMNS = args.cats or [\"movieId\", \"userId\"] # Single-hot\nCATEGORICAL_MH_COLUMNS = args.cats_mh or [\"genres\"] # Multi-hot\nNUMERIC_COLUMNS = args.conts or []\n\n# Output from ETL-with-NVTabular\nTRAIN_PATHS = sorted(glob.glob(os.path.join(BASE_DIR, \"train\", \"*.parquet\")))\n\nproc = nvt.Workflow.load(os.path.join(BASE_DIR, 
\"workflow/\"))\n\nEMBEDDING_TABLE_SHAPES = nvt.ops.get_embedding_sizes(proc)\n\n\n# TensorItrDataset returns a single batch of x_cat, x_cont, y.\ndef collate_fn(x):\n return x\n\n\n# Seed with system randomness (or a static seed)\ncupy.random.seed(None)\n\n\ndef seed_fn():\n \"\"\"\n Generate consistent dataloader shuffle seeds across workers\n\n Reseeds each worker's dataloader each epoch to get fresh a shuffle\n that's consistent across workers.\n \"\"\"\n\n max_rand = torch.iinfo(torch.int).max // hvd.size()\n\n # Generate a seed fragment\n seed_fragment = cupy.random.randint(0, max_rand)\n\n # Aggregate seed fragments from all Horovod workers\n seed_tensor = torch.tensor(seed_fragment)\n reduced_seed = hvd.allreduce(seed_tensor, name=\"shuffle_seed\", op=hvd.mpi_ops.Sum) % max_rand\n\n return reduced_seed\n\n\ntrain_dataset = TorchAsyncItr(\n nvt.Dataset(TRAIN_PATHS),\n batch_size=BATCH_SIZE,\n cats=CATEGORICAL_COLUMNS + CATEGORICAL_MH_COLUMNS,\n conts=NUMERIC_COLUMNS,\n labels=[\"rating\"],\n devices=[gpu_to_use],\n global_size=hvd.size(),\n global_rank=hvd.rank(),\n shuffle=True,\n seed_fn=seed_fn,\n)\ntrain_loader = DLDataLoader(\n train_dataset, batch_size=None, collate_fn=collate_fn, pin_memory=False, num_workers=0\n)\n\n\nEMBEDDING_TABLE_SHAPES_TUPLE = (\n {\n CATEGORICAL_COLUMNS[0]: EMBEDDING_TABLE_SHAPES[CATEGORICAL_COLUMNS[0]],\n CATEGORICAL_COLUMNS[1]: EMBEDDING_TABLE_SHAPES[CATEGORICAL_COLUMNS[1]],\n },\n {CATEGORICAL_MH_COLUMNS[0]: EMBEDDING_TABLE_SHAPES[CATEGORICAL_MH_COLUMNS[0]]},\n)\n\nmodel = Model(\n embedding_table_shapes=EMBEDDING_TABLE_SHAPES_TUPLE,\n num_continuous=0,\n emb_dropout=0.0,\n layer_hidden_dims=[128, 128, 128],\n layer_dropout_rates=[0.0, 0.0, 0.0],\n).cuda()\n\nlr_scaler = hvd.size()\n\noptimizer = torch.optim.Adam(model.parameters(), lr=0.01 * lr_scaler)\n\nhvd.broadcast_parameters(model.state_dict(), root_rank=0)\nhvd.broadcast_optimizer_state(optimizer, root_rank=0)\n\noptimizer = hvd.DistributedOptimizer(optimizer, 
named_parameters=model.named_parameters())\n\nfor epoch in range(args.epochs):\n start = time()\n print(f\"Training epoch {epoch}\")\n train_loss, y_pred, y = process_epoch(train_loader, model, train=True, optimizer=optimizer)\n hvd.join(gpu_to_use)\n hvd.broadcast_parameters(model.state_dict(), root_rank=0)\n print(f\"Epoch {epoch:02d}. Train loss: {train_loss:.4f}.\")\n hvd.join(gpu_to_use)\n t_final = time() - start\n total_rows = train_dataset.num_rows_processed\n print(\n f\"run_time: {t_final} - rows: {total_rows} - \"\n f\"epochs: {epoch} - dl_thru: {total_rows / t_final}\"\n )\n\n\nhvd.join(gpu_to_use)\nif hvd.local_rank() == 0:\n print(\"Training complete\")\n", "path": "examples/horovod/torch-nvt-horovod.py"}]} | 4,039 | 479 |

gh_patches_debug_8436 | rasdani/github-patches | git_diff | microsoft__playwright-python-959 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[Bug]: `record_har_omit_content` does not work properly

### Playwright version

1.15.3

### Operating system

Linux

### What browsers are you seeing the problem on?

Chromium, Firefox, WebKit

### Other information

Repo to present bug:

https://github.com/qwark97/har_omit_content_bug

Bug occurs also outside the docker image

### What happened? / Describe the bug

Passing `record_har_omit_content` as a `new_page` parameter controls whether the `text` field is present inside the `entry.response.content` object in the `.har` file.

Omitting this parameter (it defaults to `False`, see https://playwright.dev/python/docs/api/class-browser#browser-new-page) keeps `text` in the `.har` file. Passing `record_har_omit_content=True` also works as expected: `text` is absent. Unfortunately, passing `record_har_omit_content=False` explicitly **does not** work as expected: the `.har` file **will not** contain the `text` field.

It also looks like passing anything other than an explicit `None` as the `record_har_omit_content` value (the type doesn't matter) results in a missing `text` field.
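
The behaviour described above is what a key-presence check would produce. What follows is only a minimal sketch, assuming the option is normalised by testing whether the key exists rather than by reading its value, as the `if "recordHarOmitContent" in params:` branch in the code listing below suggests. `normalize_har_options` and its `use_value` switch are hypothetical names made up for illustration; they are not part of Playwright's API.

```python
from typing import Any, Dict


def normalize_har_options(params: Dict[str, Any], use_value: bool) -> Dict[str, Any]:
    """Hypothetical stand-in for the HAR part of the context-parameter normalisation."""
    params = dict(params)  # work on a copy so the caller's dict is untouched
    if "recordHarPath" in params:
        record_har: Dict[str, Any] = {"path": str(params.pop("recordHarPath"))}
        if "recordHarOmitContent" in params:
            value = params.pop("recordHarOmitContent")
            # use_value=False mimics the reported behaviour: any supplied value
            # (even False) ends up as omitContent=True.
            record_har["omitContent"] = value if use_value else True
        params["recordHar"] = record_har
    return params


options = {"recordHarPath": "trace.har", "recordHarOmitContent": False}
print(normalize_har_options(options, use_value=False)["recordHar"])
print(normalize_har_options(options, use_value=True)["recordHar"])
```

With `use_value=False` an explicit `False` is turned into `omitContent: True`, which matches the report; forwarding the supplied value instead would keep `False` as `False`.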

### Code snippet to reproduce your bug

_No response_

### Relevant log output

_No response_

</issue>

<code>

[start of playwright/_impl/_browser.py]

1 # Copyright (c) Microsoft Corporation.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15 import base64

16 import json

17 from pathlib import Path

18 from types import SimpleNamespace

19 from typing import TYPE_CHECKING, Dict, List, Union

20

21 from playwright._impl._api_structures import (

22 Geolocation,

23 HttpCredentials,

24 ProxySettings,

25 StorageState,

26 ViewportSize,

27 )

28 from playwright._impl._browser_context import BrowserContext

29 from playwright._impl._cdp_session import CDPSession

30 from playwright._impl._connection import ChannelOwner, from_channel

31 from playwright._impl._helper import (

32 ColorScheme,

33 ForcedColors,

34 ReducedMotion,

35 async_readfile,

36 is_safe_close_error,

37 locals_to_params,

38 )

39 from playwright._impl._network import serialize_headers

40 from playwright._impl._page import Page

41

42 if TYPE_CHECKING: # pragma: no cover

43 from playwright._impl._browser_type import BrowserType

44

45

46 class Browser(ChannelOwner):

47

48 Events = SimpleNamespace(

49 Disconnected="disconnected",

50 )

51

52 def __init__(

53 self, parent: "BrowserType", type: str, guid: str, initializer: Dict

54 ) -> None:

55 super().__init__(parent, type, guid, initializer)

56 self._browser_type = parent

57 self._is_connected = True

58 self._is_closed_or_closing = False

59 self._is_remote = False

60 self._is_connected_over_websocket = False

61

62 self._contexts: List[BrowserContext] = []

63 self._channel.on("close", lambda _: self._on_close())

64

65 def __repr__(self) -> str:

66 return f"<Browser type={self._browser_type} version={self.version}>"

67

68 def _on_close(self) -> None:

69 self._is_connected = False

70 self.emit(Browser.Events.Disconnected, self)

71 self._is_closed_or_closing = True

72

73 @property

74 def contexts(self) -> List[BrowserContext]:

75 return self._contexts.copy()

76

77 def is_connected(self) -> bool:

78 return self._is_connected

79

80 async def new_context(

81 self,

82 viewport: ViewportSize = None,

83 screen: ViewportSize = None,

84 noViewport: bool = None,

85 ignoreHTTPSErrors: bool = None,

86 javaScriptEnabled: bool = None,

87 bypassCSP: bool = None,

88 userAgent: str = None,

89 locale: str = None,

90 timezoneId: str = None,

91 geolocation: Geolocation = None,

92 permissions: List[str] = None,

93 extraHTTPHeaders: Dict[str, str] = None,

94 offline: bool = None,

95 httpCredentials: HttpCredentials = None,

96 deviceScaleFactor: float = None,

97 isMobile: bool = None,

98 hasTouch: bool = None,

99 colorScheme: ColorScheme = None,

100 reducedMotion: ReducedMotion = None,

101 forcedColors: ForcedColors = None,

102 acceptDownloads: bool = None,

103 defaultBrowserType: str = None,

104 proxy: ProxySettings = None,

105 recordHarPath: Union[Path, str] = None,

106 recordHarOmitContent: bool = None,

107 recordVideoDir: Union[Path, str] = None,

108 recordVideoSize: ViewportSize = None,

109 storageState: Union[StorageState, str, Path] = None,

110 baseURL: str = None,

111 strictSelectors: bool = None,

112 ) -> BrowserContext:

113 params = locals_to_params(locals())

114 await normalize_context_params(self._connection._is_sync, params)

115

116 channel = await self._channel.send("newContext", params)

117 context = from_channel(channel)

118 self._contexts.append(context)

119 context._browser = self

120 context._options = params

121 return context

122

123 async def new_page(

124 self,

125 viewport: ViewportSize = None,

126 screen: ViewportSize = None,

127 noViewport: bool = None,

128 ignoreHTTPSErrors: bool = None,

129 javaScriptEnabled: bool = None,

130 bypassCSP: bool = None,

131 userAgent: str = None,

132 locale: str = None,

133 timezoneId: str = None,

134 geolocation: Geolocation = None,

135 permissions: List[str] = None,

136 extraHTTPHeaders: Dict[str, str] = None,

137 offline: bool = None,

138 httpCredentials: HttpCredentials = None,

139 deviceScaleFactor: float = None,

140 isMobile: bool = None,

141 hasTouch: bool = None,

142 colorScheme: ColorScheme = None,

143 forcedColors: ForcedColors = None,

144 reducedMotion: ReducedMotion = None,

145 acceptDownloads: bool = None,

146 defaultBrowserType: str = None,

147 proxy: ProxySettings = None,

148 recordHarPath: Union[Path, str] = None,

149 recordHarOmitContent: bool = None,

150 recordVideoDir: Union[Path, str] = None,

151 recordVideoSize: ViewportSize = None,

152 storageState: Union[StorageState, str, Path] = None,

153 baseURL: str = None,

154 strictSelectors: bool = None,

155 ) -> Page:

156         params = locals_to_params(locals())
157         context = await self.new_context(**params)
158         page = await context.new_page()
159         page._owned_context = context
160         context._owner_page = page
161         return page
162
163     async def close(self) -> None:
164         if self._is_closed_or_closing:
165             return
166         self._is_closed_or_closing = True
167         try:
168             await self._channel.send("close")
169         except Exception as e:
170             if not is_safe_close_error(e):
171                 raise e
172         if self._is_connected_over_websocket:
173             await self._connection.stop_async()
174
175     @property
176     def version(self) -> str:
177         return self._initializer["version"]
178
179     async def new_browser_cdp_session(self) -> CDPSession:
180         return from_channel(await self._channel.send("newBrowserCDPSession"))
181
182     async def start_tracing(
183         self,
184         page: Page = None,
185         path: Union[str, Path] = None,
186         screenshots: bool = None,
187         categories: List[str] = None,
188     ) -> None:
189         params = locals_to_params(locals())
190         if page:
191             params["page"] = page._channel
192         if path:
193             params["path"] = str(path)
194         await self._channel.send("startTracing", params)
195
196     async def stop_tracing(self) -> bytes:
197         encoded_binary = await self._channel.send("stopTracing")
198         return base64.b64decode(encoded_binary)
199
200
201 async def normalize_context_params(is_sync: bool, params: Dict) -> None:
202     params["sdkLanguage"] = "python" if is_sync else "python-async"
203     if params.get("noViewport"):
204         del params["noViewport"]
205         params["noDefaultViewport"] = True
206     if "defaultBrowserType" in params:
207         del params["defaultBrowserType"]
208     if "extraHTTPHeaders" in params:
209         params["extraHTTPHeaders"] = serialize_headers(params["extraHTTPHeaders"])
210     if "recordHarPath" in params:
211         params["recordHar"] = {"path": str(params["recordHarPath"])}
212         if "recordHarOmitContent" in params:
213             params["recordHar"]["omitContent"] = True
214             del params["recordHarOmitContent"]
215         del params["recordHarPath"]
216     if "recordVideoDir" in params:
217         params["recordVideo"] = {"dir": str(params["recordVideoDir"])}
218         if "recordVideoSize" in params:
219             params["recordVideo"]["size"] = params["recordVideoSize"]
220             del params["recordVideoSize"]
221         del params["recordVideoDir"]
222     if "storageState" in params:
223         storageState = params["storageState"]
224         if not isinstance(storageState, dict):
225             params["storageState"] = json.loads(
226                 (await async_readfile(storageState)).decode()
227             )
228

[end of playwright/_impl/_browser.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch> | diff --git a/playwright/_impl/_browser.py b/playwright/_impl/_browser.py

--- a/playwright/_impl/_browser.py
+++ b/playwright/_impl/_browser.py
@@ -210,7 +210,7 @@
     if "recordHarPath" in params:
         params["recordHar"] = {"path": str(params["recordHarPath"])}
         if "recordHarOmitContent" in params:
-            params["recordHar"]["omitContent"] = True
+            params["recordHar"]["omitContent"] = params["recordHarOmitContent"]
             del params["recordHarOmitContent"]
         del params["recordHarPath"]
     if "recordVideoDir" in params: