Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- main/README.md +36 -36

- main/adaptive_mask_inpainting.py +3 -3

- main/bit_diffusion.py +7 -7

- main/clip_guided_images_mixing_stable_diffusion.py +3 -3

- main/clip_guided_stable_diffusion.py +3 -3

- main/clip_guided_stable_diffusion_img2img.py +3 -3

- main/cogvideox_ddim_inversion.py +1 -1

- main/composable_stable_diffusion.py +5 -5

- main/fresco_v2v.py +4 -4

- main/gluegen.py +6 -6

- main/iadb.py +1 -1

- main/imagic_stable_diffusion.py +9 -9

- main/img2img_inpainting.py +5 -5

- main/instaflow_one_step.py +5 -5

- main/interpolate_stable_diffusion.py +8 -8

- main/ip_adapter_face_id.py +6 -6

- main/latent_consistency_img2img.py +2 -2

- main/latent_consistency_interpolate.py +1 -1

- main/latent_consistency_txt2img.py +2 -2

- main/llm_grounded_diffusion.py +9 -9

- main/lpw_stable_diffusion.py +14 -14

- main/lpw_stable_diffusion_onnx.py +14 -14

- main/lpw_stable_diffusion_xl.py +9 -9

- main/masked_stable_diffusion_img2img.py +2 -2

- main/masked_stable_diffusion_xl_img2img.py +2 -2

- main/matryoshka.py +14 -14

- main/mixture_tiling.py +2 -2

- main/mixture_tiling_sdxl.py +6 -6

- main/mod_controlnet_tile_sr_sdxl.py +5 -5

- main/multilingual_stable_diffusion.py +5 -5

- main/pipeline_animatediff_controlnet.py +3 -3

- main/pipeline_animatediff_img2video.py +3 -3

- main/pipeline_animatediff_ipex.py +3 -3

- main/pipeline_controlnet_xl_kolors.py +5 -5

- main/pipeline_controlnet_xl_kolors_img2img.py +5 -5

- main/pipeline_controlnet_xl_kolors_inpaint.py +5 -5

- main/pipeline_demofusion_sdxl.py +11 -11

- main/pipeline_faithdiff_stable_diffusion_xl.py +9 -9

- main/pipeline_flux_differential_img2img.py +2 -2

- main/pipeline_flux_rf_inversion.py +7 -7

- main/pipeline_flux_semantic_guidance.py +2 -2

- main/pipeline_flux_with_cfg.py +2 -2

- main/pipeline_hunyuandit_differential_img2img.py +6 -6

- main/pipeline_kolors_differential_img2img.py +5 -5

- main/pipeline_kolors_inpainting.py +7 -7

- main/pipeline_prompt2prompt.py +8 -8

- main/pipeline_sdxl_style_aligned.py +12 -12

- main/pipeline_stable_diffusion_3_differential_img2img.py +3 -3

- main/pipeline_stable_diffusion_3_instruct_pix2pix.py +3 -3

- main/pipeline_stable_diffusion_boxdiff.py +7 -7

main/README.md

CHANGED

|

@@ -10,7 +10,7 @@ Please also check out our [Community Scripts](https://github.com/huggingface/dif

|

|

| 10 |

|

| 11 |

| Example | Description | Code Example | Colab | Author |

|

| 12 |

|:--------------------------------------------------------------------------------------------------------------------------------------|:---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:------------------------------------------------------------------------------------------|:-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|--------------------------------------------------------------:|

|

| 13 |

-

|Spatiotemporal Skip Guidance (STG)|[Spatiotemporal Skip Guidance for Enhanced Video Diffusion Sampling](https://

|

| 14 |

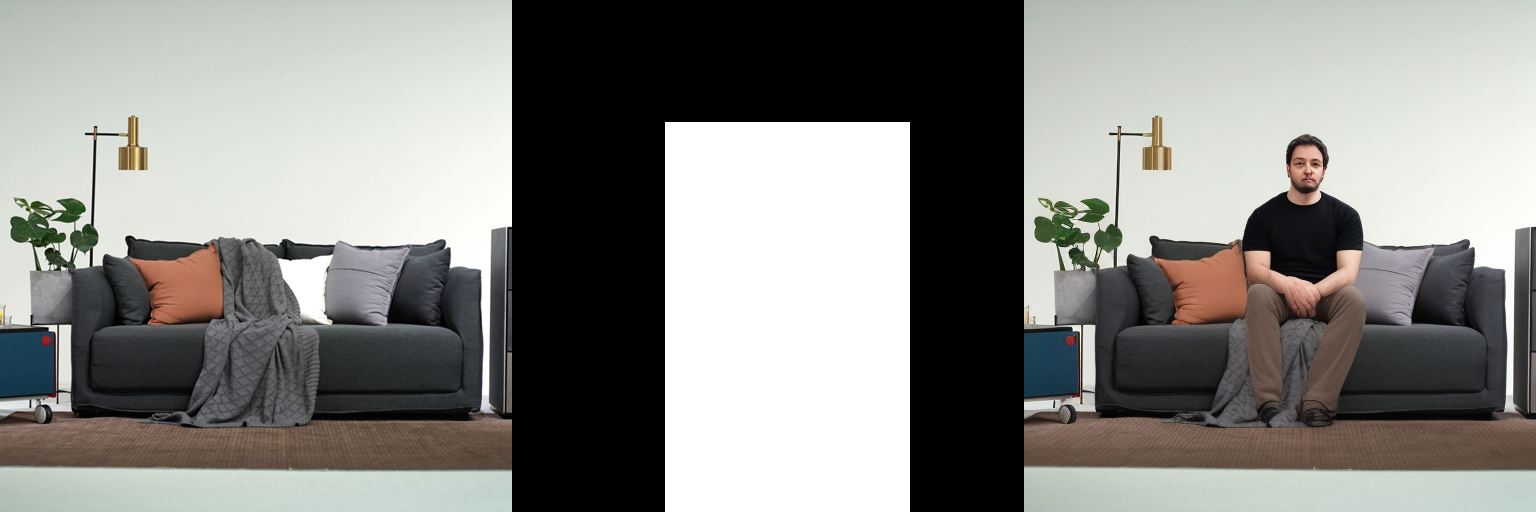

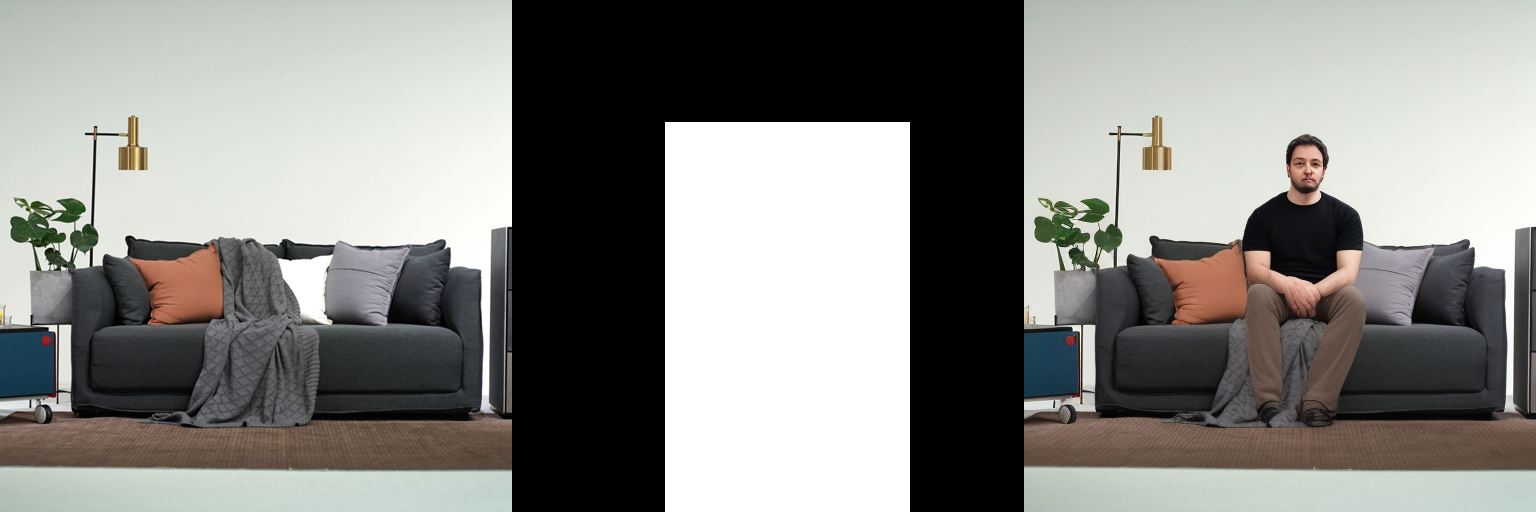

|Adaptive Mask Inpainting|Adaptive Mask Inpainting algorithm from [Beyond the Contact: Discovering Comprehensive Affordance for 3D Objects from Pre-trained 2D Diffusion Models](https://github.com/snuvclab/coma) (ECCV '24, Oral) provides a way to insert human inside the scene image without altering the background, by inpainting with adapting mask.|[Adaptive Mask Inpainting](#adaptive-mask-inpainting)|-|[Hyeonwoo Kim](https://sshowbiz.xyz),[Sookwan Han](https://jellyheadandrew.github.io)|

|

| 15 |

|Flux with CFG|[Flux with CFG](https://github.com/ToTheBeginning/PuLID/blob/main/docs/pulid_for_flux.md) provides an implementation of using CFG in [Flux](https://blackforestlabs.ai/announcing-black-forest-labs/).|[Flux with CFG](#flux-with-cfg)|[Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/flux_with_cfg.ipynb)|[Linoy Tsaban](https://github.com/linoytsaban), [Apolinário](https://github.com/apolinario), and [Sayak Paul](https://github.com/sayakpaul)|

|

| 16 |

|Differential Diffusion|[Differential Diffusion](https://github.com/exx8/differential-diffusion) modifies an image according to a text prompt, and according to a map that specifies the amount of change in each region.|[Differential Diffusion](#differential-diffusion)|[](https://huggingface.co/spaces/exx8/differential-diffusion) [](https://colab.research.google.com/github/exx8/differential-diffusion/blob/main/examples/SD2.ipynb)|[Eran Levin](https://github.com/exx8) and [Ohad Fried](https://www.ohadf.com/)|

|

|

@@ -39,17 +39,17 @@ Please also check out our [Community Scripts](https://github.com/huggingface/dif

|

|

| 39 |

| Stable UnCLIP | Diffusion Pipeline for combining prior model (generate clip image embedding from text, UnCLIPPipeline `"kakaobrain/karlo-v1-alpha"`) and decoder pipeline (decode clip image embedding to image, StableDiffusionImageVariationPipeline `"lambdalabs/sd-image-variations-diffusers"` ). | [Stable UnCLIP](#stable-unclip) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/stable_unclip.ipynb) | [Ray Wang](https://wrong.wang) |

|

| 40 |

| UnCLIP Text Interpolation Pipeline | Diffusion Pipeline that allows passing two prompts and produces images while interpolating between the text-embeddings of the two prompts | [UnCLIP Text Interpolation Pipeline](#unclip-text-interpolation-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/unclip_text_interpolation.ipynb)| [Naga Sai Abhinay Devarinti](https://github.com/Abhinay1997/) |

|

| 41 |

| UnCLIP Image Interpolation Pipeline | Diffusion Pipeline that allows passing two images/image_embeddings and produces images while interpolating between their image-embeddings | [UnCLIP Image Interpolation Pipeline](#unclip-image-interpolation-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/unclip_image_interpolation.ipynb)| [Naga Sai Abhinay Devarinti](https://github.com/Abhinay1997/) |

|

| 42 |

-

| DDIM Noise Comparative Analysis Pipeline | Investigating how the diffusion models learn visual concepts from each noise level (which is a contribution of [P2 weighting (CVPR 2022)](https://

|

| 43 |

| CLIP Guided Img2Img Stable Diffusion Pipeline | Doing CLIP guidance for image to image generation with Stable Diffusion | [CLIP Guided Img2Img Stable Diffusion](#clip-guided-img2img-stable-diffusion) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/clip_guided_img2img_stable_diffusion.ipynb) | [Nipun Jindal](https://github.com/nipunjindal/) |

|

| 44 |

| TensorRT Stable Diffusion Text to Image Pipeline | Accelerates the Stable Diffusion Text2Image Pipeline using TensorRT | [TensorRT Stable Diffusion Text to Image Pipeline](#tensorrt-text2image-stable-diffusion-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/tensorrt_text2image_stable_diffusion_pipeline.ipynb) | [Asfiya Baig](https://github.com/asfiyab-nvidia) |

|

| 45 |

| EDICT Image Editing Pipeline | Diffusion pipeline for text-guided image editing | [EDICT Image Editing Pipeline](#edict-image-editing-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/edict_image_pipeline.ipynb) | [Joqsan Azocar](https://github.com/Joqsan) |

|

| 46 |

-

| Stable Diffusion RePaint | Stable Diffusion pipeline using [RePaint](https://

|

| 47 |

| TensorRT Stable Diffusion Image to Image Pipeline | Accelerates the Stable Diffusion Image2Image Pipeline using TensorRT | [TensorRT Stable Diffusion Image to Image Pipeline](#tensorrt-image2image-stable-diffusion-pipeline) | - | [Asfiya Baig](https://github.com/asfiyab-nvidia) |

|

| 48 |

| Stable Diffusion IPEX Pipeline | Accelerate Stable Diffusion inference pipeline with BF16/FP32 precision on Intel Xeon CPUs with [IPEX](https://github.com/intel/intel-extension-for-pytorch) | [Stable Diffusion on IPEX](#stable-diffusion-on-ipex) | - | [Yingjie Han](https://github.com/yingjie-han/) |

|

| 49 |

| CLIP Guided Images Mixing Stable Diffusion Pipeline | Сombine images using usual diffusion models. | [CLIP Guided Images Mixing Using Stable Diffusion](#clip-guided-images-mixing-with-stable-diffusion) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/clip_guided_images_mixing_with_stable_diffusion.ipynb) | [Karachev Denis](https://github.com/TheDenk) |

|

| 50 |

| TensorRT Stable Diffusion Inpainting Pipeline | Accelerates the Stable Diffusion Inpainting Pipeline using TensorRT | [TensorRT Stable Diffusion Inpainting Pipeline](#tensorrt-inpainting-stable-diffusion-pipeline) | - | [Asfiya Baig](https://github.com/asfiyab-nvidia) |

|

| 51 |

-

| IADB Pipeline | Implementation of [Iterative α-(de)Blending: a Minimalist Deterministic Diffusion Model](https://

|

| 52 |

-

| Zero1to3 Pipeline | Implementation of [Zero-1-to-3: Zero-shot One Image to 3D Object](https://

|

| 53 |

| Stable Diffusion XL Long Weighted Prompt Pipeline | A pipeline support unlimited length of prompt and negative prompt, use A1111 style of prompt weighting | [Stable Diffusion XL Long Weighted Prompt Pipeline](#stable-diffusion-xl-long-weighted-prompt-pipeline) | [](https://colab.research.google.com/drive/1LsqilswLR40XLLcp6XFOl5nKb_wOe26W?usp=sharing) | [Andrew Zhu](https://xhinker.medium.com/) |

|

| 54 |

| Stable Diffusion Mixture Tiling Pipeline SD 1.5 | A pipeline generates cohesive images by integrating multiple diffusion processes, each focused on a specific image region and considering boundary effects for smooth blending | [Stable Diffusion Mixture Tiling Pipeline SD 1.5](#stable-diffusion-mixture-tiling-pipeline-sd-15) | [](https://huggingface.co/spaces/albarji/mixture-of-diffusers) | [Álvaro B Jiménez](https://github.com/albarji/) |

|

| 55 |

| Stable Diffusion Mixture Canvas Pipeline SD 1.5 | A pipeline generates cohesive images by integrating multiple diffusion processes, each focused on a specific image region and considering boundary effects for smooth blending. Works by defining a list of Text2Image region objects that detail the region of influence of each diffuser. | [Stable Diffusion Mixture Canvas Pipeline SD 1.5](#stable-diffusion-mixture-canvas-pipeline-sd-15) | [](https://huggingface.co/spaces/albarji/mixture-of-diffusers) | [Álvaro B Jiménez](https://github.com/albarji/) |

|

|

@@ -59,25 +59,25 @@ Please also check out our [Community Scripts](https://github.com/huggingface/dif

|

|

| 59 |

| sketch inpaint - Inpainting with non-inpaint Stable Diffusion | sketch inpaint much like in automatic1111 | [Masked Im2Im Stable Diffusion Pipeline](#stable-diffusion-masked-im2im) | - | [Anatoly Belikov](https://github.com/noskill) |

|

| 60 |

| sketch inpaint xl - Inpainting with non-inpaint Stable Diffusion | sketch inpaint much like in automatic1111 | [Masked Im2Im Stable Diffusion XL Pipeline](#stable-diffusion-xl-masked-im2im) | - | [Anatoly Belikov](https://github.com/noskill) |

|

| 61 |

| prompt-to-prompt | change parts of a prompt and retain image structure (see [paper page](https://prompt-to-prompt.github.io/)) | [Prompt2Prompt Pipeline](#prompt2prompt-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/prompt_2_prompt_pipeline.ipynb) | [Umer H. Adil](https://twitter.com/UmerHAdil) |

|

| 62 |

-

| Latent Consistency Pipeline | Implementation of [Latent Consistency Models: Synthesizing High-Resolution Images with Few-Step Inference](https://

|

| 63 |

| Latent Consistency Img2img Pipeline | Img2img pipeline for Latent Consistency Models | [Latent Consistency Img2Img Pipeline](#latent-consistency-img2img-pipeline) | - | [Logan Zoellner](https://github.com/nagolinc) |

|

| 64 |

| Latent Consistency Interpolation Pipeline | Interpolate the latent space of Latent Consistency Models with multiple prompts | [Latent Consistency Interpolation Pipeline](#latent-consistency-interpolation-pipeline) | [](https://colab.research.google.com/drive/1pK3NrLWJSiJsBynLns1K1-IDTW9zbPvl?usp=sharing) | [Aryan V S](https://github.com/a-r-r-o-w) |

|

| 65 |

| SDE Drag Pipeline | The pipeline supports drag editing of images using stochastic differential equations | [SDE Drag Pipeline](#sde-drag-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/sde_drag.ipynb) | [NieShen](https://github.com/NieShenRuc) [Fengqi Zhu](https://github.com/Monohydroxides) |

|

| 66 |

| Regional Prompting Pipeline | Assign multiple prompts for different regions | [Regional Prompting Pipeline](#regional-prompting-pipeline) | - | [hako-mikan](https://github.com/hako-mikan) |

|

| 67 |

| LDM3D-sr (LDM3D upscaler) | Upscale low resolution RGB and depth inputs to high resolution | [StableDiffusionUpscaleLDM3D Pipeline](https://github.com/estelleafl/diffusers/tree/ldm3d_upscaler_community/examples/community#stablediffusionupscaleldm3d-pipeline) | - | [Estelle Aflalo](https://github.com/estelleafl) |

|

| 68 |

| AnimateDiff ControlNet Pipeline | Combines AnimateDiff with precise motion control using ControlNets | [AnimateDiff ControlNet Pipeline](#animatediff-controlnet-pipeline) | [](https://colab.research.google.com/drive/1SKboYeGjEQmQPWoFC0aLYpBlYdHXkvAu?usp=sharing) | [Aryan V S](https://github.com/a-r-r-o-w) and [Edoardo Botta](https://github.com/EdoardoBotta) |

|

| 69 |

-

| DemoFusion Pipeline | Implementation of [DemoFusion: Democratising High-Resolution Image Generation With No $$$](https://

|

| 70 |

-

| Instaflow Pipeline | Implementation of [InstaFlow! One-Step Stable Diffusion with Rectified Flow](https://

|

| 71 |

-

| Null-Text Inversion Pipeline | Implement [Null-text Inversion for Editing Real Images using Guided Diffusion Models](https://

|

| 72 |

-

| Rerender A Video Pipeline | Implementation of [[SIGGRAPH Asia 2023] Rerender A Video: Zero-Shot Text-Guided Video-to-Video Translation](https://

|

| 73 |

-

| StyleAligned Pipeline | Implementation of [Style Aligned Image Generation via Shared Attention](https://

|

| 74 |

| AnimateDiff Image-To-Video Pipeline | Experimental Image-To-Video support for AnimateDiff (open to improvements) | [AnimateDiff Image To Video Pipeline](#animatediff-image-to-video-pipeline) | [](https://drive.google.com/file/d/1TvzCDPHhfFtdcJZe4RLloAwyoLKuttWK/view?usp=sharing) | [Aryan V S](https://github.com/a-r-r-o-w) |

|

| 75 |

| IP Adapter FaceID Stable Diffusion | Stable Diffusion Pipeline that supports IP Adapter Face ID | [IP Adapter Face ID](#ip-adapter-face-id) |[Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/ip_adapter_face_id.ipynb)| [Fabio Rigano](https://github.com/fabiorigano) |

|

| 76 |

| InstantID Pipeline | Stable Diffusion XL Pipeline that supports InstantID | [InstantID Pipeline](#instantid-pipeline) | [](https://huggingface.co/spaces/InstantX/InstantID) | [Haofan Wang](https://github.com/haofanwang) |

|

| 77 |

| UFOGen Scheduler | Scheduler for UFOGen Model (compatible with Stable Diffusion pipelines) | [UFOGen Scheduler](#ufogen-scheduler) | - | [dg845](https://github.com/dg845) |

|

| 78 |

| Stable Diffusion XL IPEX Pipeline | Accelerate Stable Diffusion XL inference pipeline with BF16/FP32 precision on Intel Xeon CPUs with [IPEX](https://github.com/intel/intel-extension-for-pytorch) | [Stable Diffusion XL on IPEX](#stable-diffusion-xl-on-ipex) | - | [Dan Li](https://github.com/ustcuna/) |

|

| 79 |

| Stable Diffusion BoxDiff Pipeline | Training-free controlled generation with bounding boxes using [BoxDiff](https://github.com/showlab/BoxDiff) | [Stable Diffusion BoxDiff Pipeline](#stable-diffusion-boxdiff) | - | [Jingyang Zhang](https://github.com/zjysteven/) |

|

| 80 |

-

| FRESCO V2V Pipeline | Implementation of [[CVPR 2024] FRESCO: Spatial-Temporal Correspondence for Zero-Shot Video Translation](https://

|

| 81 |

| AnimateDiff IPEX Pipeline | Accelerate AnimateDiff inference pipeline with BF16/FP32 precision on Intel Xeon CPUs with [IPEX](https://github.com/intel/intel-extension-for-pytorch) | [AnimateDiff on IPEX](#animatediff-on-ipex) | - | [Dan Li](https://github.com/ustcuna/) |

|

| 82 |

PIXART-α Controlnet pipeline | Implementation of the controlnet model for pixart alpha and its diffusers pipeline | [PIXART-α Controlnet pipeline](#pixart-α-controlnet-pipeline) | - | [Raul Ciotescu](https://github.com/raulc0399/) |

|

| 83 |

| HunyuanDiT Differential Diffusion Pipeline | Applies [Differential Diffusion](https://github.com/exx8/differential-diffusion) to [HunyuanDiT](https://github.com/huggingface/diffusers/pull/8240). | [HunyuanDiT with Differential Diffusion](#hunyuandit-with-differential-diffusion) | [](https://colab.research.google.com/drive/1v44a5fpzyr4Ffr4v2XBQ7BajzG874N4P?usp=sharing) | [Monjoy Choudhury](https://github.com/MnCSSJ4x) |

|

|

@@ -85,7 +85,7 @@ PIXART-α Controlnet pipeline | Implementation of the controlnet model for pixar

|

|

| 85 |

| Stable Diffusion XL Attentive Eraser Pipeline |[[AAAI2025 Oral] Attentive Eraser](https://github.com/Anonym0u3/AttentiveEraser) is a novel tuning-free method that enhances object removal capabilities in pre-trained diffusion models.|[Stable Diffusion XL Attentive Eraser Pipeline](#stable-diffusion-xl-attentive-eraser-pipeline)|-|[Wenhao Sun](https://github.com/Anonym0u3) and [Benlei Cui](https://github.com/Benny079)|

|

| 86 |

| Perturbed-Attention Guidance |StableDiffusionPAGPipeline is a modification of StableDiffusionPipeline to support Perturbed-Attention Guidance (PAG).|[Perturbed-Attention Guidance](#perturbed-attention-guidance)|[Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/perturbed_attention_guidance.ipynb)|[Hyoungwon Cho](https://github.com/HyoungwonCho)|

|

| 87 |

| CogVideoX DDIM Inversion Pipeline | Implementation of DDIM inversion and guided attention-based editing denoising process on CogVideoX. | [CogVideoX DDIM Inversion Pipeline](#cogvideox-ddim-inversion-pipeline) | - | [LittleNyima](https://github.com/LittleNyima) |

|

| 88 |

-

| FaithDiff Stable Diffusion XL Pipeline | Implementation of [(CVPR 2025) FaithDiff: Unleashing Diffusion Priors for Faithful Image Super-resolutionUnleashing Diffusion Priors for Faithful Image Super-resolution](https://

|

| 89 |

| Stable Diffusion 3 InstructPix2Pix Pipeline | Implementation of Stable Diffusion 3 InstructPix2Pix Pipeline | [Stable Diffusion 3 InstructPix2Pix Pipeline](#stable-diffusion-3-instructpix2pix-pipeline) | [](https://huggingface.co/BleachNick/SD3_UltraEdit_freeform) [](https://huggingface.co/CaptainZZZ/sd3-instructpix2pix) | [Jiayu Zhang](https://github.com/xduzhangjiayu) and [Haozhe Zhao](https://github.com/HaozheZhao)|

|

| 90 |

To load a custom pipeline you just need to pass the `custom_pipeline` argument to `DiffusionPipeline`, as one of the files in `diffusers/examples/community`. Feel free to send a PR with your own pipelines, we will merge them quickly.

|

| 91 |

|

|

@@ -101,7 +101,7 @@ pipe = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion

|

|

| 101 |

|

| 102 |

**KAIST AI, University of Washington**

|

| 103 |

|

| 104 |

-

[*Spatiotemporal Skip Guidance (STG) for Enhanced Video Diffusion Sampling*](https://

|

| 105 |

|

| 106 |

Following is the example video of STG applied to Mochi.

|

| 107 |

|

|

@@ -161,7 +161,7 @@ Here is the demonstration of Adaptive Mask Inpainting:

|

|

| 161 |

|

| 162 |

|

| 163 |

|

| 164 |

-

You can find additional information about Adaptive Mask Inpainting in the [paper](https://

|

| 165 |

|

| 166 |

#### Usage example

|

| 167 |

First, clone the diffusers github repository, and run the following command to set environment.

|

|

@@ -413,7 +413,7 @@ image.save("result.png")

|

|

| 413 |

|

| 414 |

### HD-Painter

|

| 415 |

|

| 416 |

-

Implementation of [HD-Painter: High-Resolution and Prompt-Faithful Text-Guided Image Inpainting with Diffusion Models](https://

|

| 417 |

|

| 418 |

|

| 419 |

|

|

@@ -428,7 +428,7 @@ Moreover, HD-Painter allows extension to larger scales by introducing a speciali

|

|

| 428 |

Our experiments demonstrate that HD-Painter surpasses existing state-of-the-art approaches qualitatively and quantitatively, achieving an impressive generation accuracy improvement of **61.4** vs **51.9**.

|

| 429 |

We will make the codes publicly available.

|

| 430 |

|

| 431 |

-

You can find additional information about Text2Video-Zero in the [paper](https://

|

| 432 |

|

| 433 |

#### Usage example

|

| 434 |

|

|

@@ -1362,7 +1362,7 @@ print("Inpainting completed. Image saved as 'inpainting_output.png'.")

|

|

| 1362 |

|

| 1363 |

### Bit Diffusion

|

| 1364 |

|

| 1365 |

-

Based <https://

|

| 1366 |

|

| 1367 |

```python

|

| 1368 |

from diffusers import DiffusionPipeline

|

|

@@ -1523,7 +1523,7 @@ As a result, you can look at a grid of all 4 generated images being shown togeth

|

|

| 1523 |

|

| 1524 |

### Magic Mix

|

| 1525 |

|

| 1526 |

-

Implementation of the [MagicMix: Semantic Mixing with Diffusion Models](https://

|

| 1527 |

|

| 1528 |

There are 3 parameters for the method-

|

| 1529 |

|

|

@@ -1754,7 +1754,7 @@ The resulting images in order:-

|

|

| 1754 |

|

| 1755 |

#### **Research question: What visual concepts do the diffusion models learn from each noise level during training?**

|

| 1756 |

|

| 1757 |

-

The [P2 weighting (CVPR 2022)](https://

|

| 1758 |

The approach consists of the following steps:

|

| 1759 |

|

| 1760 |

1. The input is an image x0.

|

|

@@ -1896,7 +1896,7 @@ image.save('tensorrt_mt_fuji.png')

|

|

| 1896 |

|

| 1897 |

### EDICT Image Editing Pipeline

|

| 1898 |

|

| 1899 |

-

This pipeline implements the text-guided image editing approach from the paper [EDICT: Exact Diffusion Inversion via Coupled Transformations](https://

|

| 1900 |

|

| 1901 |

- (`PIL`) `image` you want to edit.

|

| 1902 |

- `base_prompt`: the text prompt describing the current image (before editing).

|

|

@@ -1981,7 +1981,7 @@ Output Image

|

|

| 1981 |

|

| 1982 |

### Stable Diffusion RePaint

|

| 1983 |

|

| 1984 |

-

This pipeline uses the [RePaint](https://

|

| 1985 |

be used similarly to other image inpainting pipelines but does not rely on a specific inpainting model. This means you can use

|

| 1986 |

models that are not specifically created for inpainting.

|

| 1987 |

|

|

@@ -2576,7 +2576,7 @@ For more results, checkout [PR #6114](https://github.com/huggingface/diffusers/p

|

|

| 2576 |

|

| 2577 |

### Stable Diffusion Mixture Tiling Pipeline SD 1.5

|

| 2578 |

|

| 2579 |

-

This pipeline uses the Mixture. Refer to the [Mixture](https://

|

| 2580 |

|

| 2581 |

```python

|

| 2582 |

from diffusers import LMSDiscreteScheduler, DiffusionPipeline

|

|

@@ -2607,7 +2607,7 @@ image = pipeline(

|

|

| 2607 |

|

| 2608 |

### Stable Diffusion Mixture Canvas Pipeline SD 1.5

|

| 2609 |

|

| 2610 |

-

This pipeline uses the Mixture. Refer to the [Mixture](https://

|

| 2611 |

|

| 2612 |

```python

|

| 2613 |

from PIL import Image

|

|

@@ -2642,7 +2642,7 @@ output = pipeline(

|

|

| 2642 |

|

| 2643 |

### Stable Diffusion Mixture Tiling Pipeline SDXL

|

| 2644 |

|

| 2645 |

-

This pipeline uses the Mixture. Refer to the [Mixture](https://

|

| 2646 |

|

| 2647 |

```python

|

| 2648 |

import torch

|

|

@@ -2696,7 +2696,7 @@ image = pipe(

|

|

| 2696 |

|

| 2697 |

### Stable Diffusion MoD ControlNet Tile SR Pipeline SDXL

|

| 2698 |

|

| 2699 |

-

This pipeline implements the [MoD (Mixture-of-Diffusers)](

|

| 2700 |

|

| 2701 |

This works better with 4x scales, but you can try adjusts parameters to higher scales.

|

| 2702 |

|

|

@@ -2835,7 +2835,7 @@ image.save('tensorrt_inpaint_mecha_robot.png')

|

|

| 2835 |

|

| 2836 |

### IADB pipeline

|

| 2837 |

|

| 2838 |

-

This pipeline is the implementation of the [α-(de)Blending: a Minimalist Deterministic Diffusion Model](https://

|

| 2839 |

It is a simple and minimalist diffusion model.

|

| 2840 |

|

| 2841 |

The following code shows how to use the IADB pipeline to generate images using a pretrained celebahq-256 model.

|

|

@@ -2888,7 +2888,7 @@ while True:

|

|

| 2888 |

|

| 2889 |

### Zero1to3 pipeline

|

| 2890 |

|

| 2891 |

-

This pipeline is the implementation of the [Zero-1-to-3: Zero-shot One Image to 3D Object](https://

|

| 2892 |

The original pytorch-lightning [repo](https://github.com/cvlab-columbia/zero123) and a diffusers [repo](https://github.com/kxhit/zero123-hf).

|

| 2893 |

|

| 2894 |

The following code shows how to use the Zero1to3 pipeline to generate novel view synthesis images using a pretrained stable diffusion model.

|

|

@@ -3356,7 +3356,7 @@ Side note: See [this GitHub gist](https://gist.github.com/UmerHA/b65bb5fb9626c9c

|

|

| 3356 |

|

| 3357 |

### Latent Consistency Pipeline

|

| 3358 |

|

| 3359 |

-

Latent Consistency Models was proposed in [Latent Consistency Models: Synthesizing High-Resolution Images with Few-Step Inference](https://

|

| 3360 |

|

| 3361 |

The abstract of the paper reads as follows:

|

| 3362 |

|

|

@@ -3468,7 +3468,7 @@ assert len(images) == (len(prompts) - 1) * num_interpolation_steps

|

|

| 3468 |

|

| 3469 |

### StableDiffusionUpscaleLDM3D Pipeline

|

| 3470 |

|

| 3471 |

-

[LDM3D-VR](https://

|

| 3472 |

|

| 3473 |

The abstract from the paper is:

|

| 3474 |

*Latent diffusion models have proven to be state-of-the-art in the creation and manipulation of visual outputs. However, as far as we know, the generation of depth maps jointly with RGB is still limited. We introduce LDM3D-VR, a suite of diffusion models targeting virtual reality development that includes LDM3D-pano and LDM3D-SR. These models enable the generation of panoramic RGBD based on textual prompts and the upscaling of low-resolution inputs to high-resolution RGBD, respectively. Our models are fine-tuned from existing pretrained models on datasets containing panoramic/high-resolution RGB images, depth maps and captions. Both models are evaluated in comparison to existing related methods*

|

|

@@ -4165,7 +4165,7 @@ export_to_gif(result.frames[0], "result.gif")

|

|

| 4165 |

|

| 4166 |

### DemoFusion

|

| 4167 |

|

| 4168 |

-

This pipeline is the official implementation of [DemoFusion: Democratising High-Resolution Image Generation With No $$$](https://

|

| 4169 |

The original repo can be found at [repo](https://github.com/PRIS-CV/DemoFusion).

|

| 4170 |

|

| 4171 |

- `view_batch_size` (`int`, defaults to 16):

|

|

@@ -4259,7 +4259,7 @@ This pipeline provides drag-and-drop image editing using stochastic differential

|

|

| 4259 |

|

| 4260 |

|

| 4261 |

|

| 4262 |

-

See [paper](https://

|

| 4263 |

|

| 4264 |

```py

|

| 4265 |

import torch

|

|

@@ -4515,7 +4515,7 @@ export_to_video(

|

|

| 4515 |

|

| 4516 |

### StyleAligned Pipeline

|

| 4517 |

|

| 4518 |

-

This pipeline is the implementation of [Style Aligned Image Generation via Shared Attention](https://

|

| 4519 |

|

| 4520 |

> Large-scale Text-to-Image (T2I) models have rapidly gained prominence across creative fields, generating visually compelling outputs from textual prompts. However, controlling these models to ensure consistent style remains challenging, with existing methods necessitating fine-tuning and manual intervention to disentangle content and style. In this paper, we introduce StyleAligned, a novel technique designed to establish style alignment among a series of generated images. By employing minimal `attention sharing' during the diffusion process, our method maintains style consistency across images within T2I models. This approach allows for the creation of style-consistent images using a reference style through a straightforward inversion operation. Our method's evaluation across diverse styles and text prompts demonstrates high-quality synthesis and fidelity, underscoring its efficacy in achieving consistent style across various inputs.

|

| 4521 |

|

|

@@ -4729,7 +4729,7 @@ image = pipe(

|

|

| 4729 |

|

| 4730 |

### UFOGen Scheduler

|

| 4731 |

|

| 4732 |

-

[UFOGen](https://

|

| 4733 |

|

| 4734 |

```py

|

| 4735 |

import torch

|

|

@@ -5047,7 +5047,7 @@ make_image_grid(image, rows=1, cols=len(image))

|

|

| 5047 |

### Stable Diffusion XL Attentive Eraser Pipeline

|

| 5048 |

<img src="https://raw.githubusercontent.com/Anonym0u3/Images/refs/heads/main/fenmian.png" width="600" />

|

| 5049 |

|

| 5050 |

-

**Stable Diffusion XL Attentive Eraser Pipeline** is an advanced object removal pipeline that leverages SDXL for precise content suppression and seamless region completion. This pipeline uses **self-attention redirection guidance** to modify the model’s self-attention mechanism, allowing for effective removal and inpainting across various levels of mask precision, including semantic segmentation masks, bounding boxes, and hand-drawn masks. If you are interested in more detailed information and have any questions, please refer to the [paper](https://

|

| 5051 |

|

| 5052 |

#### Key features

|

| 5053 |

|

|

@@ -5133,7 +5133,7 @@ print("Object removal completed")

|

|

| 5133 |

|

| 5134 |

# Perturbed-Attention Guidance

|

| 5135 |

|

| 5136 |

-

[Project](https://ku-cvlab.github.io/Perturbed-Attention-Guidance/) / [arXiv](https://

|

| 5137 |

|

| 5138 |

This implementation is based on [Diffusers](https://huggingface.co/docs/diffusers/index). `StableDiffusionPAGPipeline` is a modification of `StableDiffusionPipeline` to support Perturbed-Attention Guidance (PAG).

|

| 5139 |

|

|

|

|

| 10 |

|

| 11 |

| Example | Description | Code Example | Colab | Author |

|

| 12 |

|:--------------------------------------------------------------------------------------------------------------------------------------|:---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:------------------------------------------------------------------------------------------|:-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|--------------------------------------------------------------:|

|

| 13 |

+

|Spatiotemporal Skip Guidance (STG)|[Spatiotemporal Skip Guidance for Enhanced Video Diffusion Sampling](https://huggingface.co/papers/2411.18664) (CVPR 2025) enhances video diffusion models by generating a weaker model through layer skipping and using it as guidance, improving fidelity in models like HunyuanVideo, LTXVideo, and Mochi.|[Spatiotemporal Skip Guidance](#spatiotemporal-skip-guidance)|-|[Junha Hyung](https://junhahyung.github.io/), [Kinam Kim](https://kinam0252.github.io/), and [Ednaordinary](https://github.com/Ednaordinary)|

|

| 14 |

|Adaptive Mask Inpainting|Adaptive Mask Inpainting algorithm from [Beyond the Contact: Discovering Comprehensive Affordance for 3D Objects from Pre-trained 2D Diffusion Models](https://github.com/snuvclab/coma) (ECCV '24, Oral) provides a way to insert human inside the scene image without altering the background, by inpainting with adapting mask.|[Adaptive Mask Inpainting](#adaptive-mask-inpainting)|-|[Hyeonwoo Kim](https://sshowbiz.xyz),[Sookwan Han](https://jellyheadandrew.github.io)|

|

| 15 |

|Flux with CFG|[Flux with CFG](https://github.com/ToTheBeginning/PuLID/blob/main/docs/pulid_for_flux.md) provides an implementation of using CFG in [Flux](https://blackforestlabs.ai/announcing-black-forest-labs/).|[Flux with CFG](#flux-with-cfg)|[Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/flux_with_cfg.ipynb)|[Linoy Tsaban](https://github.com/linoytsaban), [Apolinário](https://github.com/apolinario), and [Sayak Paul](https://github.com/sayakpaul)|

|

| 16 |

|Differential Diffusion|[Differential Diffusion](https://github.com/exx8/differential-diffusion) modifies an image according to a text prompt, and according to a map that specifies the amount of change in each region.|[Differential Diffusion](#differential-diffusion)|[](https://huggingface.co/spaces/exx8/differential-diffusion) [](https://colab.research.google.com/github/exx8/differential-diffusion/blob/main/examples/SD2.ipynb)|[Eran Levin](https://github.com/exx8) and [Ohad Fried](https://www.ohadf.com/)|

|

|

|

|

| 39 |

| Stable UnCLIP | Diffusion Pipeline for combining prior model (generate clip image embedding from text, UnCLIPPipeline `"kakaobrain/karlo-v1-alpha"`) and decoder pipeline (decode clip image embedding to image, StableDiffusionImageVariationPipeline `"lambdalabs/sd-image-variations-diffusers"` ). | [Stable UnCLIP](#stable-unclip) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/stable_unclip.ipynb) | [Ray Wang](https://wrong.wang) |

|

| 40 |

| UnCLIP Text Interpolation Pipeline | Diffusion Pipeline that allows passing two prompts and produces images while interpolating between the text-embeddings of the two prompts | [UnCLIP Text Interpolation Pipeline](#unclip-text-interpolation-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/unclip_text_interpolation.ipynb)| [Naga Sai Abhinay Devarinti](https://github.com/Abhinay1997/) |

|

| 41 |

| UnCLIP Image Interpolation Pipeline | Diffusion Pipeline that allows passing two images/image_embeddings and produces images while interpolating between their image-embeddings | [UnCLIP Image Interpolation Pipeline](#unclip-image-interpolation-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/unclip_image_interpolation.ipynb)| [Naga Sai Abhinay Devarinti](https://github.com/Abhinay1997/) |

|

| 42 |

+

| DDIM Noise Comparative Analysis Pipeline | Investigating how the diffusion models learn visual concepts from each noise level (which is a contribution of [P2 weighting (CVPR 2022)](https://huggingface.co/papers/2204.00227)) | [DDIM Noise Comparative Analysis Pipeline](#ddim-noise-comparative-analysis-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/ddim_noise_comparative_analysis.ipynb)| [Aengus (Duc-Anh)](https://github.com/aengusng8) |

|

| 43 |

| CLIP Guided Img2Img Stable Diffusion Pipeline | Doing CLIP guidance for image to image generation with Stable Diffusion | [CLIP Guided Img2Img Stable Diffusion](#clip-guided-img2img-stable-diffusion) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/clip_guided_img2img_stable_diffusion.ipynb) | [Nipun Jindal](https://github.com/nipunjindal/) |

|

| 44 |

| TensorRT Stable Diffusion Text to Image Pipeline | Accelerates the Stable Diffusion Text2Image Pipeline using TensorRT | [TensorRT Stable Diffusion Text to Image Pipeline](#tensorrt-text2image-stable-diffusion-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/tensorrt_text2image_stable_diffusion_pipeline.ipynb) | [Asfiya Baig](https://github.com/asfiyab-nvidia) |

|

| 45 |

| EDICT Image Editing Pipeline | Diffusion pipeline for text-guided image editing | [EDICT Image Editing Pipeline](#edict-image-editing-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/edict_image_pipeline.ipynb) | [Joqsan Azocar](https://github.com/Joqsan) |

|

| 46 |

+

| Stable Diffusion RePaint | Stable Diffusion pipeline using [RePaint](https://huggingface.co/papers/2201.09865) for inpainting. | [Stable Diffusion RePaint](#stable-diffusion-repaint )|[Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/stable_diffusion_repaint.ipynb)| [Markus Pobitzer](https://github.com/Markus-Pobitzer) |

|

| 47 |

| TensorRT Stable Diffusion Image to Image Pipeline | Accelerates the Stable Diffusion Image2Image Pipeline using TensorRT | [TensorRT Stable Diffusion Image to Image Pipeline](#tensorrt-image2image-stable-diffusion-pipeline) | - | [Asfiya Baig](https://github.com/asfiyab-nvidia) |

|

| 48 |

| Stable Diffusion IPEX Pipeline | Accelerate Stable Diffusion inference pipeline with BF16/FP32 precision on Intel Xeon CPUs with [IPEX](https://github.com/intel/intel-extension-for-pytorch) | [Stable Diffusion on IPEX](#stable-diffusion-on-ipex) | - | [Yingjie Han](https://github.com/yingjie-han/) |

|

| 49 |

| CLIP Guided Images Mixing Stable Diffusion Pipeline | Сombine images using usual diffusion models. | [CLIP Guided Images Mixing Using Stable Diffusion](#clip-guided-images-mixing-with-stable-diffusion) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/clip_guided_images_mixing_with_stable_diffusion.ipynb) | [Karachev Denis](https://github.com/TheDenk) |

|

| 50 |

| TensorRT Stable Diffusion Inpainting Pipeline | Accelerates the Stable Diffusion Inpainting Pipeline using TensorRT | [TensorRT Stable Diffusion Inpainting Pipeline](#tensorrt-inpainting-stable-diffusion-pipeline) | - | [Asfiya Baig](https://github.com/asfiyab-nvidia) |

|

| 51 |

+

| IADB Pipeline | Implementation of [Iterative α-(de)Blending: a Minimalist Deterministic Diffusion Model](https://huggingface.co/papers/2305.03486) | [IADB Pipeline](#iadb-pipeline) | - | [Thomas Chambon](https://github.com/tchambon)

|

| 52 |

+

| Zero1to3 Pipeline | Implementation of [Zero-1-to-3: Zero-shot One Image to 3D Object](https://huggingface.co/papers/2303.11328) | [Zero1to3 Pipeline](#zero1to3-pipeline) | - | [Xin Kong](https://github.com/kxhit) |

|

| 53 |

| Stable Diffusion XL Long Weighted Prompt Pipeline | A pipeline support unlimited length of prompt and negative prompt, use A1111 style of prompt weighting | [Stable Diffusion XL Long Weighted Prompt Pipeline](#stable-diffusion-xl-long-weighted-prompt-pipeline) | [](https://colab.research.google.com/drive/1LsqilswLR40XLLcp6XFOl5nKb_wOe26W?usp=sharing) | [Andrew Zhu](https://xhinker.medium.com/) |

|

| 54 |

| Stable Diffusion Mixture Tiling Pipeline SD 1.5 | A pipeline generates cohesive images by integrating multiple diffusion processes, each focused on a specific image region and considering boundary effects for smooth blending | [Stable Diffusion Mixture Tiling Pipeline SD 1.5](#stable-diffusion-mixture-tiling-pipeline-sd-15) | [](https://huggingface.co/spaces/albarji/mixture-of-diffusers) | [Álvaro B Jiménez](https://github.com/albarji/) |

|

| 55 |

| Stable Diffusion Mixture Canvas Pipeline SD 1.5 | A pipeline generates cohesive images by integrating multiple diffusion processes, each focused on a specific image region and considering boundary effects for smooth blending. Works by defining a list of Text2Image region objects that detail the region of influence of each diffuser. | [Stable Diffusion Mixture Canvas Pipeline SD 1.5](#stable-diffusion-mixture-canvas-pipeline-sd-15) | [](https://huggingface.co/spaces/albarji/mixture-of-diffusers) | [Álvaro B Jiménez](https://github.com/albarji/) |

|

|

|

|

| 59 |

| sketch inpaint - Inpainting with non-inpaint Stable Diffusion | sketch inpaint much like in automatic1111 | [Masked Im2Im Stable Diffusion Pipeline](#stable-diffusion-masked-im2im) | - | [Anatoly Belikov](https://github.com/noskill) |

|

| 60 |

| sketch inpaint xl - Inpainting with non-inpaint Stable Diffusion | sketch inpaint much like in automatic1111 | [Masked Im2Im Stable Diffusion XL Pipeline](#stable-diffusion-xl-masked-im2im) | - | [Anatoly Belikov](https://github.com/noskill) |

|

| 61 |

| prompt-to-prompt | change parts of a prompt and retain image structure (see [paper page](https://prompt-to-prompt.github.io/)) | [Prompt2Prompt Pipeline](#prompt2prompt-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/prompt_2_prompt_pipeline.ipynb) | [Umer H. Adil](https://twitter.com/UmerHAdil) |

|

| 62 |

+

| Latent Consistency Pipeline | Implementation of [Latent Consistency Models: Synthesizing High-Resolution Images with Few-Step Inference](https://huggingface.co/papers/2310.04378) | [Latent Consistency Pipeline](#latent-consistency-pipeline) | - | [Simian Luo](https://github.com/luosiallen) |

|

| 63 |

| Latent Consistency Img2img Pipeline | Img2img pipeline for Latent Consistency Models | [Latent Consistency Img2Img Pipeline](#latent-consistency-img2img-pipeline) | - | [Logan Zoellner](https://github.com/nagolinc) |

|

| 64 |

| Latent Consistency Interpolation Pipeline | Interpolate the latent space of Latent Consistency Models with multiple prompts | [Latent Consistency Interpolation Pipeline](#latent-consistency-interpolation-pipeline) | [](https://colab.research.google.com/drive/1pK3NrLWJSiJsBynLns1K1-IDTW9zbPvl?usp=sharing) | [Aryan V S](https://github.com/a-r-r-o-w) |

|

| 65 |

| SDE Drag Pipeline | The pipeline supports drag editing of images using stochastic differential equations | [SDE Drag Pipeline](#sde-drag-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/sde_drag.ipynb) | [NieShen](https://github.com/NieShenRuc) [Fengqi Zhu](https://github.com/Monohydroxides) |

|

| 66 |

| Regional Prompting Pipeline | Assign multiple prompts for different regions | [Regional Prompting Pipeline](#regional-prompting-pipeline) | - | [hako-mikan](https://github.com/hako-mikan) |

|

| 67 |

| LDM3D-sr (LDM3D upscaler) | Upscale low resolution RGB and depth inputs to high resolution | [StableDiffusionUpscaleLDM3D Pipeline](https://github.com/estelleafl/diffusers/tree/ldm3d_upscaler_community/examples/community#stablediffusionupscaleldm3d-pipeline) | - | [Estelle Aflalo](https://github.com/estelleafl) |

|

| 68 |

| AnimateDiff ControlNet Pipeline | Combines AnimateDiff with precise motion control using ControlNets | [AnimateDiff ControlNet Pipeline](#animatediff-controlnet-pipeline) | [](https://colab.research.google.com/drive/1SKboYeGjEQmQPWoFC0aLYpBlYdHXkvAu?usp=sharing) | [Aryan V S](https://github.com/a-r-r-o-w) and [Edoardo Botta](https://github.com/EdoardoBotta) |

|

| 69 |

+

| DemoFusion Pipeline | Implementation of [DemoFusion: Democratising High-Resolution Image Generation With No $$$](https://huggingface.co/papers/2311.16973) | [DemoFusion Pipeline](#demofusion) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/demo_fusion.ipynb) | [Ruoyi Du](https://github.com/RuoyiDu) |

|

| 70 |

+

| Instaflow Pipeline | Implementation of [InstaFlow! One-Step Stable Diffusion with Rectified Flow](https://huggingface.co/papers/2309.06380) | [Instaflow Pipeline](#instaflow-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/insta_flow.ipynb) | [Ayush Mangal](https://github.com/ayushtues) |

|

| 71 |

+

| Null-Text Inversion Pipeline | Implement [Null-text Inversion for Editing Real Images using Guided Diffusion Models](https://huggingface.co/papers/2211.09794) as a pipeline. | [Null-Text Inversion](https://github.com/google/prompt-to-prompt/) | - | [Junsheng Luan](https://github.com/Junsheng121) |

|

| 72 |

+

| Rerender A Video Pipeline | Implementation of [[SIGGRAPH Asia 2023] Rerender A Video: Zero-Shot Text-Guided Video-to-Video Translation](https://huggingface.co/papers/2306.07954) | [Rerender A Video Pipeline](#rerender-a-video) | - | [Yifan Zhou](https://github.com/SingleZombie) |

|

| 73 |

+

| StyleAligned Pipeline | Implementation of [Style Aligned Image Generation via Shared Attention](https://huggingface.co/papers/2312.02133) | [StyleAligned Pipeline](#stylealigned-pipeline) | [](https://drive.google.com/file/d/15X2E0jFPTajUIjS0FzX50OaHsCbP2lQ0/view?usp=sharing) | [Aryan V S](https://github.com/a-r-r-o-w) |

|

| 74 |

| AnimateDiff Image-To-Video Pipeline | Experimental Image-To-Video support for AnimateDiff (open to improvements) | [AnimateDiff Image To Video Pipeline](#animatediff-image-to-video-pipeline) | [](https://drive.google.com/file/d/1TvzCDPHhfFtdcJZe4RLloAwyoLKuttWK/view?usp=sharing) | [Aryan V S](https://github.com/a-r-r-o-w) |

|

| 75 |

| IP Adapter FaceID Stable Diffusion | Stable Diffusion Pipeline that supports IP Adapter Face ID | [IP Adapter Face ID](#ip-adapter-face-id) |[Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/ip_adapter_face_id.ipynb)| [Fabio Rigano](https://github.com/fabiorigano) |

|

| 76 |

| InstantID Pipeline | Stable Diffusion XL Pipeline that supports InstantID | [InstantID Pipeline](#instantid-pipeline) | [](https://huggingface.co/spaces/InstantX/InstantID) | [Haofan Wang](https://github.com/haofanwang) |

|

| 77 |

| UFOGen Scheduler | Scheduler for UFOGen Model (compatible with Stable Diffusion pipelines) | [UFOGen Scheduler](#ufogen-scheduler) | - | [dg845](https://github.com/dg845) |

|

| 78 |

| Stable Diffusion XL IPEX Pipeline | Accelerate Stable Diffusion XL inference pipeline with BF16/FP32 precision on Intel Xeon CPUs with [IPEX](https://github.com/intel/intel-extension-for-pytorch) | [Stable Diffusion XL on IPEX](#stable-diffusion-xl-on-ipex) | - | [Dan Li](https://github.com/ustcuna/) |

|

| 79 |

| Stable Diffusion BoxDiff Pipeline | Training-free controlled generation with bounding boxes using [BoxDiff](https://github.com/showlab/BoxDiff) | [Stable Diffusion BoxDiff Pipeline](#stable-diffusion-boxdiff) | - | [Jingyang Zhang](https://github.com/zjysteven/) |

|

| 80 |

+

| FRESCO V2V Pipeline | Implementation of [[CVPR 2024] FRESCO: Spatial-Temporal Correspondence for Zero-Shot Video Translation](https://huggingface.co/papers/2403.12962) | [FRESCO V2V Pipeline](#fresco) | - | [Yifan Zhou](https://github.com/SingleZombie) |

|

| 81 |

| AnimateDiff IPEX Pipeline | Accelerate AnimateDiff inference pipeline with BF16/FP32 precision on Intel Xeon CPUs with [IPEX](https://github.com/intel/intel-extension-for-pytorch) | [AnimateDiff on IPEX](#animatediff-on-ipex) | - | [Dan Li](https://github.com/ustcuna/) |

|

| 82 |

PIXART-α Controlnet pipeline | Implementation of the controlnet model for pixart alpha and its diffusers pipeline | [PIXART-α Controlnet pipeline](#pixart-α-controlnet-pipeline) | - | [Raul Ciotescu](https://github.com/raulc0399/) |

|

| 83 |

| HunyuanDiT Differential Diffusion Pipeline | Applies [Differential Diffusion](https://github.com/exx8/differential-diffusion) to [HunyuanDiT](https://github.com/huggingface/diffusers/pull/8240). | [HunyuanDiT with Differential Diffusion](#hunyuandit-with-differential-diffusion) | [](https://colab.research.google.com/drive/1v44a5fpzyr4Ffr4v2XBQ7BajzG874N4P?usp=sharing) | [Monjoy Choudhury](https://github.com/MnCSSJ4x) |

|

|

|

|

| 85 |

| Stable Diffusion XL Attentive Eraser Pipeline |[[AAAI2025 Oral] Attentive Eraser](https://github.com/Anonym0u3/AttentiveEraser) is a novel tuning-free method that enhances object removal capabilities in pre-trained diffusion models.|[Stable Diffusion XL Attentive Eraser Pipeline](#stable-diffusion-xl-attentive-eraser-pipeline)|-|[Wenhao Sun](https://github.com/Anonym0u3) and [Benlei Cui](https://github.com/Benny079)|

|

| 86 |

| Perturbed-Attention Guidance |StableDiffusionPAGPipeline is a modification of StableDiffusionPipeline to support Perturbed-Attention Guidance (PAG).|[Perturbed-Attention Guidance](#perturbed-attention-guidance)|[Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/perturbed_attention_guidance.ipynb)|[Hyoungwon Cho](https://github.com/HyoungwonCho)|

|

| 87 |

| CogVideoX DDIM Inversion Pipeline | Implementation of DDIM inversion and guided attention-based editing denoising process on CogVideoX. | [CogVideoX DDIM Inversion Pipeline](#cogvideox-ddim-inversion-pipeline) | - | [LittleNyima](https://github.com/LittleNyima) |

|

| 88 |

+

| FaithDiff Stable Diffusion XL Pipeline | Implementation of [(CVPR 2025) FaithDiff: Unleashing Diffusion Priors for Faithful Image Super-resolutionUnleashing Diffusion Priors for Faithful Image Super-resolution](https://huggingface.co/papers/2411.18824) - FaithDiff is a faithful image super-resolution method that leverages latent diffusion models by actively adapting the diffusion prior and jointly fine-tuning its components (encoder and diffusion model) with an alignment module to ensure high fidelity and structural consistency. | [FaithDiff Stable Diffusion XL Pipeline](#faithdiff-stable-diffusion-xl-pipeline) | [](https://huggingface.co/jychen9811/FaithDiff) | [Junyang Chen, Jinshan Pan, Jiangxin Dong, IMAG Lab, (Adapted by Eliseu Silva)](https://github.com/JyChen9811/FaithDiff) |

|

| 89 |

| Stable Diffusion 3 InstructPix2Pix Pipeline | Implementation of Stable Diffusion 3 InstructPix2Pix Pipeline | [Stable Diffusion 3 InstructPix2Pix Pipeline](#stable-diffusion-3-instructpix2pix-pipeline) | [](https://huggingface.co/BleachNick/SD3_UltraEdit_freeform) [](https://huggingface.co/CaptainZZZ/sd3-instructpix2pix) | [Jiayu Zhang](https://github.com/xduzhangjiayu) and [Haozhe Zhao](https://github.com/HaozheZhao)|

|

| 90 |

To load a custom pipeline you just need to pass the `custom_pipeline` argument to `DiffusionPipeline`, as one of the files in `diffusers/examples/community`. Feel free to send a PR with your own pipelines, we will merge them quickly.

|

| 91 |

|

|

|

|

| 101 |

|

| 102 |

**KAIST AI, University of Washington**

|

| 103 |

|

| 104 |

+

[*Spatiotemporal Skip Guidance (STG) for Enhanced Video Diffusion Sampling*](https://huggingface.co/papers/2411.18664) (CVPR 2025) is a simple training-free sampling guidance method for enhancing transformer-based video diffusion models. STG employs an implicit weak model via self-perturbation, avoiding the need for external models or additional training. By selectively skipping spatiotemporal layers, STG produces an aligned, degraded version of the original model to boost sample quality without compromising diversity or dynamic degree.

|

| 105 |

|

| 106 |

Following is the example video of STG applied to Mochi.

|

| 107 |

|

|

|

|

| 161 |

|

| 162 |

|

| 163 |

|

| 164 |

+

You can find additional information about Adaptive Mask Inpainting in the [paper](https://huggingface.co/papers/2401.12978) or in the [project website](https://snuvclab.github.io/coma).

|

| 165 |

|

| 166 |

#### Usage example

|

| 167 |

First, clone the diffusers github repository, and run the following command to set environment.

|

|

|

|

| 413 |

|

| 414 |

### HD-Painter

|

| 415 |

|

| 416 |

+

Implementation of [HD-Painter: High-Resolution and Prompt-Faithful Text-Guided Image Inpainting with Diffusion Models](https://huggingface.co/papers/2312.14091).

|

| 417 |

|

| 418 |

|

| 419 |

|

|

|

|

| 428 |

Our experiments demonstrate that HD-Painter surpasses existing state-of-the-art approaches qualitatively and quantitatively, achieving an impressive generation accuracy improvement of **61.4** vs **51.9**.

|

| 429 |

We will make the codes publicly available.

|

| 430 |

|

| 431 |

+

You can find additional information about Text2Video-Zero in the [paper](https://huggingface.co/papers/2312.14091) or the [original codebase](https://github.com/Picsart-AI-Research/HD-Painter).

|

| 432 |

|

| 433 |

#### Usage example

|

| 434 |

|

|

|

|

| 1362 |

|

| 1363 |

### Bit Diffusion

|

| 1364 |

|

| 1365 |

+

Based <https://huggingface.co/papers/2208.04202>, this is used for diffusion on discrete data - eg, discrete image data, DNA sequence data. An unconditional discrete image can be generated like this:

|

| 1366 |

|

| 1367 |

```python

|

| 1368 |

from diffusers import DiffusionPipeline

|

|

|

|

| 1523 |

|

| 1524 |

### Magic Mix

|

| 1525 |

|

| 1526 |

+

Implementation of the [MagicMix: Semantic Mixing with Diffusion Models](https://huggingface.co/papers/2210.16056) paper. This is a Diffusion Pipeline for semantic mixing of an image and a text prompt to create a new concept while preserving the spatial layout and geometry of the subject in the image. The pipeline takes an image that provides the layout semantics and a prompt that provides the content semantics for the mixing process.

|

| 1527 |

|

| 1528 |

There are 3 parameters for the method-

|

| 1529 |

|

|

|

|

| 1754 |

|

| 1755 |

#### **Research question: What visual concepts do the diffusion models learn from each noise level during training?**

|

| 1756 |

|

| 1757 |

+

The [P2 weighting (CVPR 2022)](https://huggingface.co/papers/2204.00227) paper proposed an approach to answer the above question, which is their second contribution.

|

| 1758 |

The approach consists of the following steps:

|

| 1759 |

|

| 1760 |

1. The input is an image x0.

|

|

|

|

| 1896 |

|

| 1897 |

### EDICT Image Editing Pipeline

|

| 1898 |

|

| 1899 |

+

This pipeline implements the text-guided image editing approach from the paper [EDICT: Exact Diffusion Inversion via Coupled Transformations](https://huggingface.co/papers/2211.12446). You have to pass:

|

| 1900 |

|

| 1901 |

- (`PIL`) `image` you want to edit.

|

| 1902 |

- `base_prompt`: the text prompt describing the current image (before editing).

|

|

|

|

| 1981 |

|

| 1982 |

### Stable Diffusion RePaint

|

| 1983 |

|

| 1984 |

+

This pipeline uses the [RePaint](https://huggingface.co/papers/2201.09865) logic on the latent space of stable diffusion. It can

|

| 1985 |

be used similarly to other image inpainting pipelines but does not rely on a specific inpainting model. This means you can use

|

| 1986 |

models that are not specifically created for inpainting.

|

| 1987 |

|

|

|

|

| 2576 |

|

| 2577 |

### Stable Diffusion Mixture Tiling Pipeline SD 1.5

|

| 2578 |

|

| 2579 |

+

This pipeline uses the Mixture. Refer to the [Mixture](https://huggingface.co/papers/2302.02412) paper for more details.

|

| 2580 |

|

| 2581 |

```python

|

| 2582 |

from diffusers import LMSDiscreteScheduler, DiffusionPipeline

|

|

|

|

| 2607 |

|

| 2608 |

### Stable Diffusion Mixture Canvas Pipeline SD 1.5

|

| 2609 |

|

| 2610 |

+

This pipeline uses the Mixture. Refer to the [Mixture](https://huggingface.co/papers/2302.02412) paper for more details.

|

| 2611 |

|

| 2612 |

```python

|

| 2613 |

from PIL import Image

|

|

|

|

| 2642 |

|

| 2643 |

### Stable Diffusion Mixture Tiling Pipeline SDXL

|

| 2644 |

|

| 2645 |

+

This pipeline uses the Mixture. Refer to the [Mixture](https://huggingface.co/papers/2302.02412) paper for more details.

|

| 2646 |

|

| 2647 |

```python

|

| 2648 |

import torch

|

|

|

|

| 2696 |

|

| 2697 |

### Stable Diffusion MoD ControlNet Tile SR Pipeline SDXL

|

| 2698 |

|

| 2699 |

+

This pipeline implements the [MoD (Mixture-of-Diffusers)](https://huggingface.co/papers/2408.06072) tiled diffusion technique and combines it with SDXL's ControlNet Tile process to generate SR images.

|

| 2700 |

|

| 2701 |

This works better with 4x scales, but you can try adjusts parameters to higher scales.

|

| 2702 |

|

|

|

|

| 2835 |

|

| 2836 |

### IADB pipeline

|

| 2837 |

|

| 2838 |

+

This pipeline is the implementation of the [α-(de)Blending: a Minimalist Deterministic Diffusion Model](https://huggingface.co/papers/2305.03486) paper.

|

| 2839 |

It is a simple and minimalist diffusion model.

|

| 2840 |

|

| 2841 |

The following code shows how to use the IADB pipeline to generate images using a pretrained celebahq-256 model.

|

|

|

|

| 2888 |

|

| 2889 |

### Zero1to3 pipeline

|

| 2890 |

|

| 2891 |

+

This pipeline is the implementation of the [Zero-1-to-3: Zero-shot One Image to 3D Object](https://huggingface.co/papers/2303.11328) paper.

|

| 2892 |

The original pytorch-lightning [repo](https://github.com/cvlab-columbia/zero123) and a diffusers [repo](https://github.com/kxhit/zero123-hf).

|

| 2893 |

|

| 2894 |

The following code shows how to use the Zero1to3 pipeline to generate novel view synthesis images using a pretrained stable diffusion model.

|

|

|

|

| 3356 |

|

| 3357 |

### Latent Consistency Pipeline

|

| 3358 |

|

| 3359 |

+

Latent Consistency Models was proposed in [Latent Consistency Models: Synthesizing High-Resolution Images with Few-Step Inference](https://huggingface.co/papers/2310.04378) by _Simian Luo, Yiqin Tan, Longbo Huang, Jian Li, Hang Zhao_ from Tsinghua University.

|

| 3360 |

|

| 3361 |

The abstract of the paper reads as follows:

|

| 3362 |

|

|

|

|

| 3468 |

|

| 3469 |

### StableDiffusionUpscaleLDM3D Pipeline

|

| 3470 |

|

| 3471 |

+

[LDM3D-VR](https://huggingface.co/papers/2311.03226) is an extended version of LDM3D.

|

| 3472 |

|

| 3473 |

The abstract from the paper is:

|

| 3474 |

*Latent diffusion models have proven to be state-of-the-art in the creation and manipulation of visual outputs. However, as far as we know, the generation of depth maps jointly with RGB is still limited. We introduce LDM3D-VR, a suite of diffusion models targeting virtual reality development that includes LDM3D-pano and LDM3D-SR. These models enable the generation of panoramic RGBD based on textual prompts and the upscaling of low-resolution inputs to high-resolution RGBD, respectively. Our models are fine-tuned from existing pretrained models on datasets containing panoramic/high-resolution RGB images, depth maps and captions. Both models are evaluated in comparison to existing related methods*

|

|

|

|

| 4165 |

|

| 4166 |

### DemoFusion

|

| 4167 |

|

| 4168 |

+

This pipeline is the official implementation of [DemoFusion: Democratising High-Resolution Image Generation With No $$$](https://huggingface.co/papers/2311.16973).

|

| 4169 |

The original repo can be found at [repo](https://github.com/PRIS-CV/DemoFusion).

|

| 4170 |

|

| 4171 |

- `view_batch_size` (`int`, defaults to 16):

|

|

|

|

| 4259 |

|

| 4260 |

|

| 4261 |

|

| 4262 |

+

See [paper](https://huggingface.co/papers/2311.01410), [paper page](https://ml-gsai.github.io/SDE-Drag-demo/), [original repo](https://github.com/ML-GSAI/SDE-Drag) for more information.

|

| 4263 |

|

| 4264 |

```py

|

| 4265 |

import torch

|

|

|

|

| 4515 |

|

| 4516 |

### StyleAligned Pipeline

|

| 4517 |

|

| 4518 |

+

This pipeline is the implementation of [Style Aligned Image Generation via Shared Attention](https://huggingface.co/papers/2312.02133). You can find more results [here](https://github.com/huggingface/diffusers/pull/6489#issuecomment-1881209354).

|

| 4519 |

|

| 4520 |

> Large-scale Text-to-Image (T2I) models have rapidly gained prominence across creative fields, generating visually compelling outputs from textual prompts. However, controlling these models to ensure consistent style remains challenging, with existing methods necessitating fine-tuning and manual intervention to disentangle content and style. In this paper, we introduce StyleAligned, a novel technique designed to establish style alignment among a series of generated images. By employing minimal `attention sharing' during the diffusion process, our method maintains style consistency across images within T2I models. This approach allows for the creation of style-consistent images using a reference style through a straightforward inversion operation. Our method's evaluation across diverse styles and text prompts demonstrates high-quality synthesis and fidelity, underscoring its efficacy in achieving consistent style across various inputs.

|

| 4521 |

|

|

|

|

| 4729 |

|

| 4730 |

### UFOGen Scheduler

|

| 4731 |

|

| 4732 |

+

[UFOGen](https://huggingface.co/papers/2311.09257) is a generative model designed for fast one-step text-to-image generation, trained via adversarial training starting from an initial pretrained diffusion model such as Stable Diffusion. `scheduling_ufogen.py` implements a onestep and multistep sampling algorithm for UFOGen models compatible with pipelines like `StableDiffusionPipeline`. A usage example is as follows:

|

| 4733 |

|

| 4734 |

```py

|

| 4735 |

import torch

|

|

|

|

| 5047 |

### Stable Diffusion XL Attentive Eraser Pipeline

|

| 5048 |

<img src="https://raw.githubusercontent.com/Anonym0u3/Images/refs/heads/main/fenmian.png" width="600" />

|

| 5049 |

|

| 5050 |

+

**Stable Diffusion XL Attentive Eraser Pipeline** is an advanced object removal pipeline that leverages SDXL for precise content suppression and seamless region completion. This pipeline uses **self-attention redirection guidance** to modify the model’s self-attention mechanism, allowing for effective removal and inpainting across various levels of mask precision, including semantic segmentation masks, bounding boxes, and hand-drawn masks. If you are interested in more detailed information and have any questions, please refer to the [paper](https://huggingface.co/papers/2412.12974) and [official implementation](https://github.com/Anonym0u3/AttentiveEraser).

|

| 5051 |

|

| 5052 |

#### Key features

|

| 5053 |

|

|

|

|

| 5133 |

|

| 5134 |

# Perturbed-Attention Guidance

|

| 5135 |

|

| 5136 |

+

[Project](https://ku-cvlab.github.io/Perturbed-Attention-Guidance/) / [arXiv](https://huggingface.co/papers/2403.17377) / [GitHub](https://github.com/KU-CVLAB/Perturbed-Attention-Guidance)

|

| 5137 |

|

| 5138 |

This implementation is based on [Diffusers](https://huggingface.co/docs/diffusers/index). `StableDiffusionPAGPipeline` is a modification of `StableDiffusionPipeline` to support Perturbed-Attention Guidance (PAG).

|

| 5139 |

|

main/adaptive_mask_inpainting.py

CHANGED

|

@@ -670,7 +670,7 @@ class AdaptiveMaskInpaintPipeline(

|

|

| 670 |

def prepare_extra_step_kwargs(self, generator, eta):

|

| 671 |

# prepare extra kwargs for the scheduler step, since not all schedulers have the same signature

|

| 672 |

# eta (η) is only used with the DDIMScheduler, it will be ignored for other schedulers.

|

| 673 |

-

# eta corresponds to η in DDIM paper: https://

|

| 674 |

# and should be between [0, 1]

|

| 675 |

|

| 676 |

accepts_eta = "eta" in set(inspect.signature(self.scheduler.step).parameters.keys())

|

|

@@ -917,7 +917,7 @@ class AdaptiveMaskInpaintPipeline(

|

|

| 917 |

num_images_per_prompt (`int`, *optional*, defaults to 1):

|

| 918 |

The number of images to generate per prompt.

|

| 919 |

eta (`float`, *optional*, defaults to 0.0):

|

| 920 |

-

Corresponds to parameter eta (η) from the [DDIM](https://

|

| 921 |

to the [`~schedulers.DDIMScheduler`], and is ignored in other schedulers.

|

| 922 |

generator (`torch.Generator` or `List[torch.Generator]`, *optional*):

|

| 923 |

A [`torch.Generator`](https://pytorch.org/docs/stable/generated/torch.Generator.html) to make

|

|

@@ -1012,7 +1012,7 @@ class AdaptiveMaskInpaintPipeline(

|

|

| 1012 |

|

| 1013 |

device = self._execution_device

|

| 1014 |

# here `guidance_scale` is defined analog to the guidance weight `w` of equation (2)

|

| 1015 |

-

# of the Imagen paper: https://

|

| 1016 |

# corresponds to doing no classifier free guidance.

|

| 1017 |

do_classifier_free_guidance = guidance_scale > 1.0

|

| 1018 |

|

|

|

|

| 670 |

def prepare_extra_step_kwargs(self, generator, eta):

|

| 671 |

# prepare extra kwargs for the scheduler step, since not all schedulers have the same signature

|

| 672 |

# eta (η) is only used with the DDIMScheduler, it will be ignored for other schedulers.

|

| 673 |

+

# eta corresponds to η in DDIM paper: https://huggingface.co/papers/2010.02502

|

| 674 |

# and should be between [0, 1]

|

| 675 |

|

| 676 |

accepts_eta = "eta" in set(inspect.signature(self.scheduler.step).parameters.keys())

|

|

|

|

| 917 |

num_images_per_prompt (`int`, *optional*, defaults to 1):

|

| 918 |

The number of images to generate per prompt.

|

| 919 |

eta (`float`, *optional*, defaults to 0.0):

|

| 920 |

+

Corresponds to parameter eta (η) from the [DDIM](https://huggingface.co/papers/2010.02502) paper. Only applies

|

| 921 |

to the [`~schedulers.DDIMScheduler`], and is ignored in other schedulers.

|

| 922 |

generator (`torch.Generator` or `List[torch.Generator]`, *optional*):

|

| 923 |

A [`torch.Generator`](https://pytorch.org/docs/stable/generated/torch.Generator.html) to make

|

|

|

|

| 1012 |

|

| 1013 |

device = self._execution_device

|

| 1014 |

# here `guidance_scale` is defined analog to the guidance weight `w` of equation (2)

|

| 1015 |

+

# of the Imagen paper: https://huggingface.co/papers/2205.11487 . `guidance_scale = 1`

|

| 1016 |

# corresponds to doing no classifier free guidance.

|

| 1017 |

do_classifier_free_guidance = guidance_scale > 1.0

|

| 1018 |

|

main/bit_diffusion.py

CHANGED

|

@@ -74,7 +74,7 @@ def ddim_bit_scheduler_step(

|

|

| 74 |

"Number of inference steps is 'None', you need to run 'set_timesteps' after creating the scheduler"

|

| 75 |

)

|

| 76 |

|

| 77 |

-

# See formulas (12) and (16) of DDIM paper https://

|

| 78 |

# Ideally, read DDIM paper in-detail understanding

|

| 79 |

|

| 80 |

# Notation (<variable name> -> <name in paper>

|

|

@@ -95,7 +95,7 @@ def ddim_bit_scheduler_step(

|

|

| 95 |

beta_prod_t = 1 - alpha_prod_t

|

| 96 |

|

| 97 |

# 3. compute predicted original sample from predicted noise also called

|

| 98 |

-

# "predicted x_0" of formula (12) from https://

|

| 99 |

pred_original_sample = (sample - beta_prod_t ** (0.5) * model_output) / alpha_prod_t ** (0.5)

|

| 100 |

|

| 101 |

# 4. Clip "predicted x_0"

|

|

@@ -112,10 +112,10 @@ def ddim_bit_scheduler_step(

|

|

| 112 |

# the model_output is always re-derived from the clipped x_0 in Glide

|

| 113 |

model_output = (sample - alpha_prod_t ** (0.5) * pred_original_sample) / beta_prod_t ** (0.5)

|

| 114 |

|

| 115 |

-

# 6. compute "direction pointing to x_t" of formula (12) from https://

|

| 116 |

pred_sample_direction = (1 - alpha_prod_t_prev - std_dev_t**2) ** (0.5) * model_output

|

| 117 |

|

| 118 |

-

# 7. compute x_t without "random noise" of formula (12) from https://

|

| 119 |

prev_sample = alpha_prod_t_prev ** (0.5) * pred_original_sample + pred_sample_direction

|

| 120 |

|

| 121 |

if eta > 0:

|

|

@@ -172,7 +172,7 @@ def ddpm_bit_scheduler_step(

|

|

| 172 |

beta_prod_t_prev = 1 - alpha_prod_t_prev

|

| 173 |

|

| 174 |

# 2. compute predicted original sample from predicted noise also called

|

| 175 |

-

# "predicted x_0" of formula (15) from https://

|

| 176 |

if prediction_type == "epsilon":

|

| 177 |

pred_original_sample = (sample - beta_prod_t ** (0.5) * model_output) / alpha_prod_t ** (0.5)

|

| 178 |

elif prediction_type == "sample":

|

|

@@ -186,12 +186,12 @@ def ddpm_bit_scheduler_step(

|

|

| 186 |

pred_original_sample = torch.clamp(pred_original_sample, -scale, scale)

|

| 187 |

|

| 188 |

# 4. Compute coefficients for pred_original_sample x_0 and current sample x_t

|

| 189 |

-

# See formula (7) from https://

|

| 190 |

pred_original_sample_coeff = (alpha_prod_t_prev ** (0.5) * self.betas[t]) / beta_prod_t

|

| 191 |

current_sample_coeff = self.alphas[t] ** (0.5) * beta_prod_t_prev / beta_prod_t

|

| 192 |

|

| 193 |

# 5. Compute predicted previous sample µ_t

|

| 194 |

-

# See formula (7) from https://

|

| 195 |

pred_prev_sample = pred_original_sample_coeff * pred_original_sample + current_sample_coeff * sample

|

| 196 |

|

| 197 |

# 6. Add noise

|

|

|

|

| 74 |

"Number of inference steps is 'None', you need to run 'set_timesteps' after creating the scheduler"

|

| 75 |

)

|

| 76 |

|

| 77 |

+

# See formulas (12) and (16) of DDIM paper https://huggingface.co/papers/2010.02502

|

| 78 |

# Ideally, read DDIM paper in-detail understanding

|

| 79 |

|

| 80 |

# Notation (<variable name> -> <name in paper>

|

|

|

|

| 95 |

beta_prod_t = 1 - alpha_prod_t

|

| 96 |

|

| 97 |

# 3. compute predicted original sample from predicted noise also called

|

| 98 |

+

# "predicted x_0" of formula (12) from https://huggingface.co/papers/2010.02502

|

| 99 |

pred_original_sample = (sample - beta_prod_t ** (0.5) * model_output) / alpha_prod_t ** (0.5)

|

| 100 |

|

| 101 |

# 4. Clip "predicted x_0"

|

|

|

|

| 112 |

# the model_output is always re-derived from the clipped x_0 in Glide

|

| 113 |

model_output = (sample - alpha_prod_t ** (0.5) * pred_original_sample) / beta_prod_t ** (0.5)

|

| 114 |

|

| 115 |

+

# 6. compute "direction pointing to x_t" of formula (12) from https://huggingface.co/papers/2010.02502

|

| 116 |

pred_sample_direction = (1 - alpha_prod_t_prev - std_dev_t**2) ** (0.5) * model_output

|

| 117 |

|

| 118 |

+

# 7. compute x_t without "random noise" of formula (12) from https://huggingface.co/papers/2010.02502

|

| 119 |

prev_sample = alpha_prod_t_prev ** (0.5) * pred_original_sample + pred_sample_direction

|

| 120 |

|

| 121 |

if eta > 0:

|

|

|

|

| 172 |

beta_prod_t_prev = 1 - alpha_prod_t_prev

|

| 173 |

|

| 174 |