Commit ce3cc50 (1 parent: 2e8e5b5), committed by admin

upd md

README.md

CHANGED

|

@@ -15,15 +15,39 @@ viewer: false

|

|

| 15 |

---

|

| 16 |

|

| 17 |

# Dataset Card for Guzheng Technique 99 Dataset

|

| 18 |

-

|

|

|

|

| 19 |

|

| 20 |

-

|

|

|

|

| 21 |

|

| 22 |

-

|

| 23 |

-

|

| 24 |

|

| 25 |

## Dataset Structure

|

| 26 |

-

### Default Subset

|

| 27 |

<style>

|

| 28 |

.datastructure td {

|

| 29 |

vertical-align: middle !important;

|

|

@@ -44,18 +68,12 @@ Based on the above original data, we performed data processing and constructed t

|

|

| 44 |

<td>.jpg, 44100Hz</td>

|

| 45 |

<td>{onset_time : float64, offset_time : float, IPT : 7-class, note : int8}</td>

|

| 46 |

</tr>

|

| 47 |

-

<tr>

|

| 48 |

-

<td>...</td>

|

| 49 |

-

<td>...</td>

|

| 50 |

-

<td>...</td>

|

| 51 |

-

</tr>

|

| 52 |

</table>

|

| 53 |

|

| 54 |

-

### Eval Subset

|

| 55 |

| data(logCQT spectrogram) | label |

|

| 56 |

| :----------------------: | :--------------: |

|

| 57 |

| float64, 88 x 258 x 1 | float64, 7 x 258 |

|

| 58 |

-

| ... | ... |

|

| 59 |

|

| 60 |

### Data Instances

|

| 61 |

.zip(.flac, .csv)

|

|

@@ -67,27 +85,9 @@ The dataset comprises 99 Guzheng solo compositions, recorded by professionals in

|

|

| 67 |

train, validation, test

|

| 68 |

|

| 69 |

## Dataset Description

|

| 70 |

-

- **Homepage:** <https://ccmusic-database.github.io>

|

| 71 |

-

- **Repository:** <https://huggingface.co/datasets/ccmusic-database/Guzheng_Tech99>

|

| 72 |

-

- **Paper:** <https://doi.org/10.5281/zenodo.5676893>

|

| 73 |

-

- **Leaderboard:** <https://www.modelscope.cn/datasets/ccmusic-database/Guzheng_Tech99>

|

| 74 |

-

- **Point of Contact:** <https://github.com/LiDCC/GuzhengTech99/tree/windows>

|

| 75 |

-

|

| 76 |

### Dataset Summary

|

| 77 |

The integrated version provides the original content and the spectrograms generated in the experimental part of the paper cited above. For the latter, the pre-processing in the paper is replicated. Each audio clip is a 3-second segment sampled at 44,100 Hz, which is subsequently converted into a log Constant-Q Transform (CQT) spectrogram. A CQT and its label constitute a single data entry, forming the first and second columns, respectively. The CQT is a 3-dimensional array with dimensions 88 × 258 × 1, representing the frequency-time structure of the audio. The label is a 2-dimensional array with dimensions 7 × 258, indicating the presence of each of the seven techniques in each time frame. Finally, since the raw dataset has already been split into train, valid, and test sets, the integrated dataset keeps the same split. This dataset can be used for frame-level guzheng playing technique detection.

|

| 78 |

|

| 79 |

-

#### Totals 总量统计

|

| 80 |

-

| Statistical items 统计项 | Values 值 |

|

| 81 |

-

| :------------------------------: | :-----------------: |

|

| 82 |

-

| Total audio count 总音频数 | `99` |

|

| 83 |

-

| Total duration(s) 音频总时长(秒) | `9064.612607709747` |

|

| 84 |

-

| Total count 总数据量 | `15838` |

|

| 85 |

-

| Total duration(s) 总时长(秒) | `9760.579138441011` |

|

| 86 |

-

| Mean duration(ms) 平均时长(毫秒) | `616.2759905569524` |

|

| 87 |

-

| Min duration(ms) 最短时长(毫秒) | `34.81292724609375` |

|

| 88 |

-

| Max duration(ms) 最长时长(毫秒) | `6823.249816894531` |

|

| 89 |

-

| Class with max durs 最长时长类别 | `boxian` |

|

| 90 |

-

|

| 91 |

### Supported Tasks and Leaderboards

|

| 92 |

MIR, audio classification

|

| 93 |

|

|

@@ -142,9 +142,6 @@ Guzheng is a polyphonic instrument. In Guzheng performance, notes with different

|

|

| 142 |

#### Who are the annotators?

|

| 143 |

Students from FD-LAMT

|

| 144 |

|

| 145 |

-

### Personal and Sensitive Information

|

| 146 |

-

None

|

| 147 |

-

|

| 148 |

## Considerations for Using the Data

|

| 149 |

### Social Impact of Dataset

|

| 150 |

Promoting the development of the music AI industry

|

|

@@ -160,13 +157,14 @@ Insufficient sample

|

|

| 160 |

Dichucheng Li

|

| 161 |

|

| 162 |

### Evaluation

|

| 163 |

-

[Dichucheng Li, Mingjin Che, Wenwu Meng, Yulun Wu, Yi Yu, Fan Xia and Wei Li. "Frame-Level Multi-Label Playing Technique Detection Using Multi-Scale Network and Self-Attention Mechanism", in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2023).](https://arxiv.org/pdf/2303.13272.pdf)

|

|

|

|

| 164 |

|

| 165 |

### Citation Information

|

| 166 |

```bibtex

|

| 167 |

@dataset{zhaorui_liu_2021_5676893,

|

| 168 |

author = {Monan Zhou and Shenyang Xu and Zhaorui Liu and Zhaowen Wang and Feng Yu and Wei Li and Baoqiang Han},

|

| 169 |

-

title = {CCMusic: an Open and Diverse Database for Chinese

|

| 170 |

month = {mar},

|

| 171 |

year = {2024},

|

| 172 |

publisher = {HuggingFace},

|

|

|

|

| 15 |

---

|

| 16 |

|

| 17 |

# Dataset Card for Guzheng Technique 99 Dataset

|

| 18 |

+

## Original Content

|

| 19 |

+

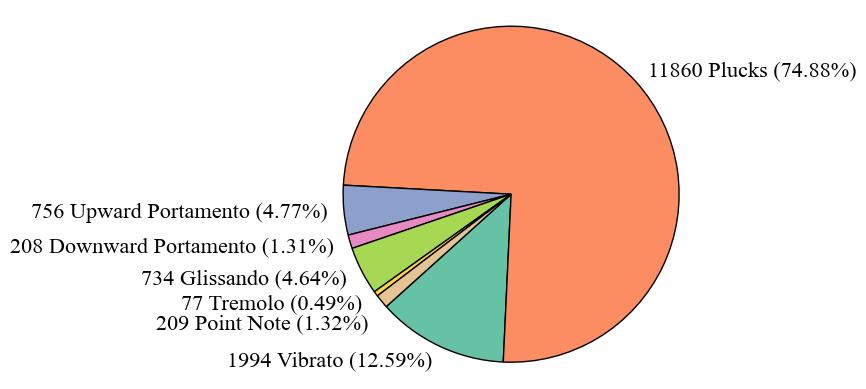

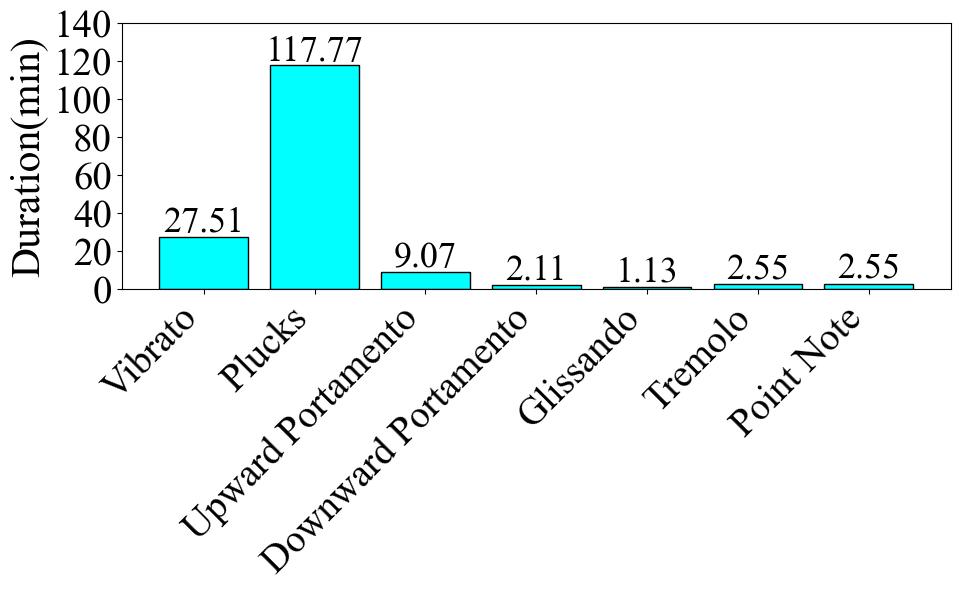

This dataset was created and used by [[1]](https://arxiv.org/pdf/2303.13272) for frame-level Guzheng playing technique detection. The original dataset comprises 99 solo Guzheng compositions recorded by professional musicians in a studio. Every note in each composition is annotated with its onset, offset, pitch, and playing technique. This differs from GZ IsoTech, which is annotated at the clip level. The playing technique categories also differ slightly, covering seven techniques: _Vibrato (chanyin 颤音), Plucks (boxian 拨弦), Upward Portamento (shanghua 上滑), Downward Portamento (xiahua 下滑), Glissando (huazhi\guazou\lianmo\liantuo 花指\刮奏\连抹\连托), Tremolo (yaozhi 摇指), and Point Note (dianyin 点音)_. This annotation yields a total of 63,352 annotated labels.

|

| 20 |

|

| 21 |

+

## Integration

|

| 22 |

+

In the original dataset, the labels were stored in a separate CSV file. This made the data harder to use, because researchers had to spend time on CSV parsing and label-audio alignment. After our integration, the data structure is simpler. It now contains three columns: audio sampled at 44,100 Hz, pre-processed mel spectrograms, and a dictionary. The dictionary contains the onset, offset, numeric technique label, and pitch. The number of data entries after integration remains 99, with a cumulative duration of 151.08 minutes. The average audio duration is 91.56 seconds.

|

| 23 |

|

| 24 |

+

We processed the data to construct the [default subset](#default-subset) of the current integrated version of the dataset; its data structure can be inspected in the [viewer](https://www.modelscope.cn/datasets/ccmusic-database/Guzheng_Tech99/dataPeview). Because the dataset has already been referenced and evaluated in a published article, we reproduce here the processing used for that article's evaluation. Each audio clip is a 3-second segment sampled at 44,100 Hz, which is then converted into a log Constant-Q Transform (CQT) spectrogram. A CQT and its label together constitute a single data entry, forming the first and second columns, respectively. The CQT is a 3-dimensional array with dimensions 88 x 258 x 1, representing the frequency-time structure of the audio. The label is a 2-dimensional array with dimensions 7 x 258, indicating the presence of each of the seven techniques in each time frame. Finally, since the original dataset was already divided into train, valid, and test sets, we integrated the feature extraction method from that article's evaluation into the API to construct the [eval subset](#eval-subset), which is not covered in our paper.
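The frame-level label described above can be sketched as follows. This is only an illustration, not the code used in the article: it assumes that the 258 frames divide the 3-second segment evenly and that techniques are encoded as integers 0 to 6, and the function name `notes_to_frame_labels` and its arguments are hypothetical.

```python
import numpy as np

NUM_TECHNIQUES = 7      # seven playing techniques (from the dataset card)
NUM_FRAMES = 258        # time frames per segment (from the dataset card)
SEGMENT_SECONDS = 3.0   # segment length in seconds (from the dataset card)


def notes_to_frame_labels(notes, segment_start=0.0):
    """Build a 7 x 258 multi-label matrix for one 3-second segment.

    `notes` is an iterable of (onset_time, offset_time, technique_id) tuples,
    with times in seconds and technique_id in 0..6 (an assumed encoding).
    Frames are assumed to divide the segment evenly.
    """
    labels = np.zeros((NUM_TECHNIQUES, NUM_FRAMES), dtype=np.float64)
    for onset, offset, tech in notes:
        # Express the note relative to the segment and clip it to the segment.
        start = max(onset - segment_start, 0.0)
        end = min(offset - segment_start, SEGMENT_SECONDS)
        if end <= start:
            continue  # the note lies entirely outside this segment
        first = int(np.floor(start * NUM_FRAMES / SEGMENT_SECONDS))
        last = min(int(np.ceil(end * NUM_FRAMES / SEGMENT_SECONDS)), NUM_FRAMES)
        labels[tech, first:last] = 1.0  # mark every frame the note touches
    return labels


example = notes_to_frame_labels([(0.0, 1.5, 1), (1.0, 3.0, 0), (4.0, 5.0, 2)])
print(example.shape)  # (7, 258)
```

Because each technique has its own row, two notes that overlap in time simply activate two rows in the same frames, which matches the multi-label setting.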

|

| 25 |

+

|

| 26 |

+

## Statistics

|

| 27 |

+

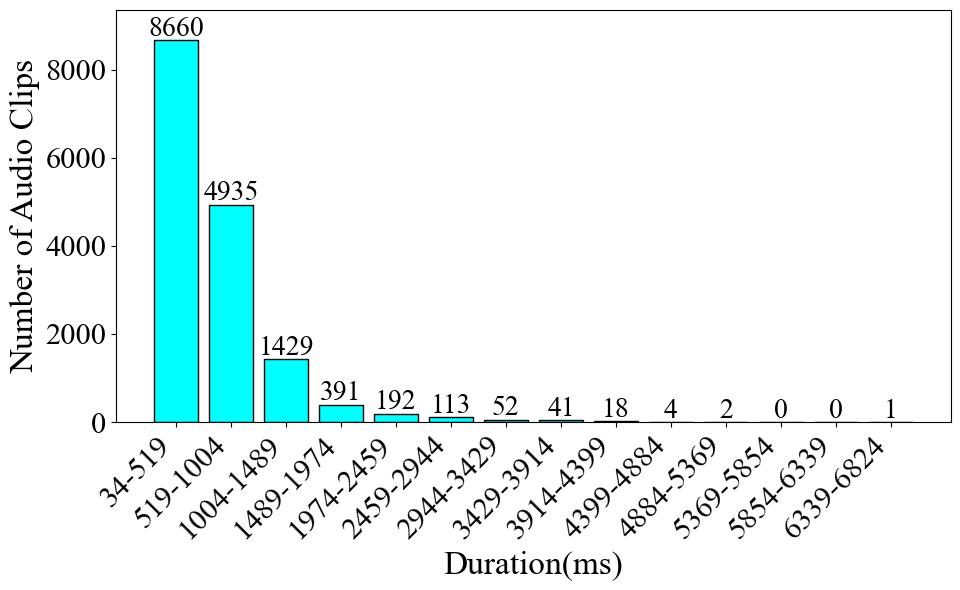

In this part, we present statistics at the label level. The number of audio clips equals the number of onsets (or, equivalently, offsets). The duration of an audio clip is its offset time minus its onset time. At this level, the average audio duration is 0.62 seconds, with the shortest being 0.03 seconds and the longest 6.82 seconds.
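A minimal sketch of how such label-level statistics could be derived from onset and offset times is shown below. The function name `label_level_stats` and the toy intervals are illustrative assumptions, not part of the dataset API.

```python
def label_level_stats(intervals):
    """Summarise label-level clip durations.

    `intervals` is a list of (onset_time, offset_time) pairs in seconds.
    Returns the clip count, the total duration in seconds, and the
    mean/min/max durations in milliseconds.
    """
    durations = [offset - onset for onset, offset in intervals]
    if not durations:
        raise ValueError("no intervals given")
    total = sum(durations)
    return {
        "count": len(durations),
        "total_s": total,
        "mean_ms": 1000.0 * total / len(durations),
        "min_ms": 1000.0 * min(durations),
        "max_ms": 1000.0 * max(durations),
    }


# Toy input: three annotated notes with durations 0.5 s, 1.0 s and 0.25 s.
stats = label_level_stats([(0.0, 0.5), (0.5, 1.5), (2.0, 2.25)])
print(stats)
```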

|

| 28 |

+

|

| 29 |

+

|  |  |  |

|

| 30 |

+

| :--------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------: |

|

| 31 |

+

| **Fig. 1** | **Fig. 2** | **Fig. 3** |

|

| 32 |

+

|

| 33 |

+

First, **Fig. 1** shows the number and proportion of audio clips in each category. Plucks accounts for a far larger share than any other category, at 74.88% of the dataset. The smallest category is Tremolo, at only 0.49%, a gap of 74.39 percentage points between the largest and smallest categories. **Fig. 2** presents the total audio duration of each category. The total duration of Plucks is again far longer than that of any other category, at 117.77 minutes. The category with the shortest total duration is Glissando, at only 1.13 minutes; this differs from the pie chart, where the smallest category was Tremolo. The difference between the longest and shortest durations is 116.64 minutes. Both the pie chart and the duration chart show that this dataset is severely imbalanced. Finally, **Fig. 3** shows the number of audio clips across duration intervals. Most audio clips fall in the 34-1004 millisecond range, which accounts for approximately 85% of the dataset. Beyond 1974 milliseconds, the number of audio clips drops sharply.

|

| 34 |

+

|

| 35 |

+

| Statistical items | Values |

|

| 36 |

+

| :------------------------------------------: | :------------------: |

|

| 37 |

+

| Total audio count | `99` |

|

| 38 |

+

| Total duration(s) | `9064.61260770975` |

|

| 39 |

+

| Mean duration(s) | `91.5617435122197` |

|

| 40 |

+

| Min duration(s) | `32.888004535147395` |

|

| 41 |

+

| Max duration(s) | `327.7973469387755` |

|

| 42 |

+

| Total count | `15838` |

|

| 43 |

+

| Total duration(s) | `9760.579138441011` |

|

| 44 |

+

| Mean duration(ms) | `616.2759905569524` |

|

| 45 |

+

| Min duration(ms) | `34.81292724609375` |

|

| 46 |

+

| Max duration(ms) | `6823.249816894531` |

|

| 47 |

+

| Class in the longest audio duration interval | `boxian` |
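The figures quoted in the prose can be cross-checked against the table with a few lines of arithmetic. The values below are copied from the table; the variable names are illustrative only.

```python
# Values copied from the statistics table.
total_audio_s = 9064.61260770975    # total duration of the 99 recordings
audio_count = 99
label_total_s = 9760.579138441011   # summed duration of all annotated clips
label_count = 15838

mean_audio_s = total_audio_s / audio_count
total_audio_min = total_audio_s / 60
mean_label_ms = 1000 * label_total_s / label_count

print(round(mean_audio_s, 2))     # 91.56  (average audio duration, seconds)
print(round(total_audio_min, 2))  # 151.08 (cumulative duration, minutes)
print(round(mean_label_ms, 2))    # 616.28 (mean label-level duration, ms)
```

Note that the summed label-level duration exceeds the total audio duration, which is plausible for a polyphonic instrument whose annotated notes can overlap in time.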

|

| 48 |

|

| 49 |

## Dataset Structure

|

| 50 |

+

### Default Subset Structure

|

| 51 |

<style>

|

| 52 |

.datastructure td {

|

| 53 |

vertical-align: middle !important;

|

|

|

|

| 68 |

<td>.jpg, 44100Hz</td>

|

| 69 |

<td>{onset_time : float64, offset_time : float, IPT : 7-class, note : int8}</td>

|

| 70 |

</tr>

|

| 71 |

</table>

|

| 72 |

|

| 73 |

+

### Eval Subset Structure

|

| 74 |

| data(logCQT spectrogram) | label |

|

| 75 |

| :----------------------: | :--------------: |

|

| 76 |

| float64, 88 x 258 x 1 | float64, 7 x 258 |

|

|

|

|

| 77 |

|

| 78 |

### Data Instances

|

| 79 |

.zip(.flac, .csv)

|

|

|

|

| 85 |

train, validation, test

|

| 86 |

|

| 87 |

## Dataset Description

|

| 88 |

### Dataset Summary

|

| 89 |

The integrated version provides the original content and the spectrograms generated in the experimental part of the paper cited above. For the latter, the pre-processing in the paper is replicated. Each audio clip is a 3-second segment sampled at 44,100 Hz, which is subsequently converted into a log Constant-Q Transform (CQT) spectrogram. A CQT and its label constitute a single data entry, forming the first and second columns, respectively. The CQT is a 3-dimensional array with dimensions 88 × 258 × 1, representing the frequency-time structure of the audio. The label is a 2-dimensional array with dimensions 7 × 258, indicating the presence of each of the seven techniques in each time frame. Finally, since the raw dataset has already been split into train, valid, and test sets, the integrated dataset keeps the same split. This dataset can be used for frame-level guzheng playing technique detection.

|

| 90 |

|

| 91 |

### Supported Tasks and Leaderboards

|

| 92 |

MIR, audio classification

|

| 93 |

|

|

|

|

| 142 |

#### Who are the annotators?

|

| 143 |

Students from FD-LAMT

|

| 144 |

|

| 145 |

## Considerations for Using the Data

|

| 146 |

### Social Impact of Dataset

|

| 147 |

Promoting the development of the music AI industry

|

|

|

|

| 157 |

Dichucheng Li

|

| 158 |

|

| 159 |

### Evaluation

|

| 160 |

+

[1] [Dichucheng Li, Mingjin Che, Wenwu Meng, Yulun Wu, Yi Yu, Fan Xia and Wei Li. "Frame-Level Multi-Label Playing Technique Detection Using Multi-Scale Network and Self-Attention Mechanism", in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2023).](https://arxiv.org/pdf/2303.13272.pdf)<br>

|

| 161 |

+

[2] <https://huggingface.co/ccmusic-database/Guzheng_Tech99>

|

| 162 |

|

| 163 |

### Citation Information

|

| 164 |

```bibtex

|

| 165 |

@dataset{zhaorui_liu_2021_5676893,

|

| 166 |

author = {Monan Zhou and Shenyang Xu and Zhaorui Liu and Zhaowen Wang and Feng Yu and Wei Li and Baoqiang Han},

|

| 167 |

+

title = {CCMusic: an Open and Diverse Database for Chinese Music Information Retrieval Research},

|

| 168 |

month = {mar},

|

| 169 |

year = {2024},

|

| 170 |

publisher = {HuggingFace},

|