-

-

\ No newline at end of file diff --git a/spaces/1pelhydcardo/ChatGPT-prompt-generator/assets/Clash of Clans Son Srm APK ndir - Efsanevi Bir Sava Oyunu Deneyimi.md b/spaces/1pelhydcardo/ChatGPT-prompt-generator/assets/Clash of Clans Son Srm APK ndir - Efsanevi Bir Sava Oyunu Deneyimi.md deleted file mode 100644 index ede09b35cc1b2630cfec58564a371b8379716104..0000000000000000000000000000000000000000 --- a/spaces/1pelhydcardo/ChatGPT-prompt-generator/assets/Clash of Clans Son Srm APK ndir - Efsanevi Bir Sava Oyunu Deneyimi.md +++ /dev/null @@ -1,169 +0,0 @@ -

-

Download the Latest Clash of Clans APK: How to Do It and Why You Should

-Clash of Clans is one of the most popular strategy games in the world. Millions of players play it to build their own villages, fight in clan wars, and defeat their rivals. By downloading the latest Clash of Clans APK, you can make the game even more enjoyable. In this article, we explain how to download the latest Clash of Clans APK, why you should do it, and what benefits it brings.


-

Clash of Clans Nedir ve Neden Popüler?

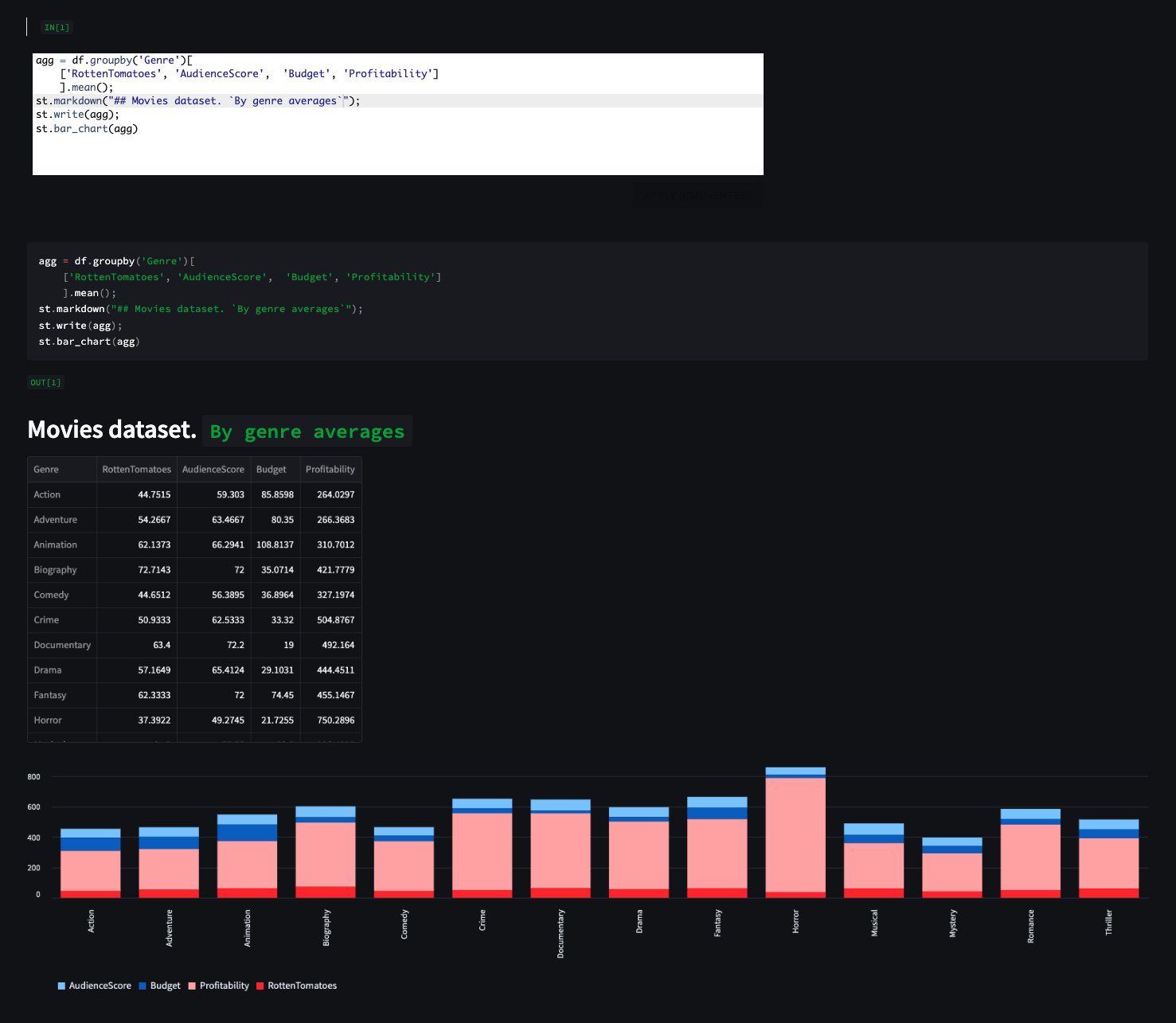

-Clash of Clans, Supercell tarafından geliştirilen ve 2012 yılında piyasaya sürülen bir çevrimiçi çok oyunculu savaş oyunudur. Oyunun amacı, köyünüzü inşa etmek, üssünüzü tasarlamak ve savunmak, askerlerinizi eğitmek ve geliştirmek, kaynaklarınızı artırmak ve diğer oyuncuların köylerine saldırarak altın, iksir ve kara iksir elde etmektir. Ayrıca, bir klan kurabilir veya başka bir klana katılarak klan savaşlarına, klan liglerine ve klan oyunlarına katılabilirsiniz. Oyun sürekli olarak güncellenmekte ve yeni özellikler, birimler, binalar, büyüler ve etkinlikler eklenmektedir.

-Clash of Clans Oyununun Özellikleri

-Clash of Clans oyununun bazı özellikleri şunlardır:

--

-

- You can build and arrange your village however you like. -

- You can choose from many troop types, each with different abilities. -

- You can take part in epic battles that call for unique powers and strategies. -

- The game is free to play and is available on Android and iOS devices. -

- The game is updated regularly and keeps adding new content. -

- The game can be played both solo and as part of a team. -

- The game combines strategy, planning, creativity, and fun. -

- The game lets you socialize with millions of players from all over the world. -

- By downloading the latest Clash of Clans APK, you get the newest, most up-to-date version of the game. -

- By downloading the latest Clash of Clans APK, you can access some features and mods that the game does not officially offer. -

- By downloading the latest Clash of Clans APK, you can play the game faster, more smoothly, and with fewer problems. -

- By downloading the latest Clash of Clans APK, you can play the game whenever and wherever you want. -

- An Android device (phone, tablet, computer, etc.) -

- An internet connection -

- A reliable, up-to-date site for downloading the latest Clash of Clans APK -

- A file manager app -

- First, go to your device's Settings menu and enable installing apps from unknown sources. This lets you install apps that do not come from an official store. -

- Next, open a web browser and go to a reliable site that offers the latest Clash of Clans APK. Some of these sites are: , , . Choose one of them and follow its instructions to download the latest Clash of Clans APK file. -

- Then, open a file manager app to find the Clash of Clans APK file you downloaded. Some of these apps are: , , . Choose one of them and look for the APK file in your device's internal memory or external storage. -
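The file-manager step comes down to finding the APK you most recently downloaded. The minimal Python sketch below is only an illustration of that search. The function name `find_latest_apk` is hypothetical. It assumes the file sits directly in one readable directory, such as a Downloads folder, and that the extension is lowercase `.apk`, because `glob` matching is case-sensitive on Linux and Android.

```python
from pathlib import Path
from typing import Optional


def find_latest_apk(directory: str) -> Optional[Path]:
    """Return the most recently modified .apk file directly inside `directory`, or None."""
    # Only regular files whose names end in ".apk" (case-sensitive on POSIX systems).
    apks = [p for p in Path(directory).glob("*.apk") if p.is_file()]
    if not apks:
        return None
    # The newest file by modification time is most likely the one just downloaded.
    return max(apks, key=lambda p: p.stat().st_mtime)
```

For example, `find_latest_apk("/sdcard/Download")` would check a typical Android Downloads folder. That path is an assumption and varies by device. The function returns `None` when it finds no APK.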

- When building your village, balance your focus between defense and offense. -

- Choose your troops wisely and develop strategies that suit different situations. -

- Join or found a clan and cooperate with other players. -

- Don't miss the events, tasks, and rewards the game offers. -

- Play the game to have fun, and treat your opponents with respect. -

- Does it cost anything to download the latest Clash of Clans APK? -

- Will downloading the latest Clash of Clans APK damage my device? -

- Will downloading the latest Clash of Clans APK delete my game data? -

- Can downloading the latest Clash of Clans APK get me banned? -

- Does downloading the latest Clash of Clans APK make the game easier? -

- A new track called "Tokyo Night" that lets you drift in the neon-lit streets of Japan. -

- A new car pack that includes four new cars: Nissan Skyline GT-R R34, Toyota Supra MK4, Mazda RX-7 FD, and Subaru Impreza WRX STI. -

- A new game mode called "Drift Wars" that pits you against other players online in a drift battle. -

- A new feature called "Car Customization" that allows you to modify your car's appearance, performance, and tuning. -

- A new feature called "Replay Mode" that lets you watch your best drifts from different angles and share them with your friends. -

- Improved graphics, sound effects, and user interface. -

- Bug fixes and stability improvements. -

- "Career Mode" where you can complete various missions and challenges to unlock new cars, tracks, and upgrades. -

- "Multiplayer Mode" where you can compete with other players online in real-time or join a drift club and challenge other clubs. -

- "Drift Wars Mode" where you can show off your drifting skills and earn respect from other players in a drift battle. -

- "Training Base" where you can learn the basics of drifting and test your car's performance. -

- "Parking Lot" where you can practice your drifting techniques and tricks in a spacious area. -

- "San Palezzo" where you can drift along the coast and enjoy the scenic view of the sea. -

- "Red Rock" where you can drift on a dusty road surrounded by red rocks and cacti. -

- "Tokyo Night" where you can drift in the neon-lit streets of Japan and feel the atmosphere of the city. -

- It is free to download and play, with optional in-app purchases for extra features and content. -

- It has realistic and fun gameplay, with smooth controls, physics-based car behavior, and various game modes and tracks. -

- It has stunning graphics and sound effects, with high-quality visuals, animations, and sounds. -

- It has a lot of customization options, with over 40 cars to choose from and hundreds of parts and accessories for modifying your car's appearance, performance, and tuning. -

- It has a social aspect, with online multiplayer mode, drift clubs, leaderboards, chat rooms, and replay mode. -

- It requires a lot of storage space on your device, as it is a large file that takes up about 600 MB of storage. -

- It requires a stable internet connection for some features, such as multiplayer mode, drift wars mode, and online updates. -

- It can be challenging and frustrating for beginners, as it requires a lot of practice and skill to master drifting and earn points. -

- It can be repetitive and boring for some players, as it does not have a lot of variety or story in its gameplay. -

- It can be expensive for some players, as it has a lot of in-app purchases that might tempt you to spend real money on coins, golds, or premium cars. -
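You can check the storage requirement mentioned above (about 600 MB) before downloading. The minimal sketch below uses Python's standard `shutil.disk_usage`. The function name `has_enough_space` and the 20% safety margin are assumptions for illustration, not figures from the game's publisher. The 600 MB value is taken from the text and will differ between versions.

```python
import shutil

# Approximate size from the text ("about 600 MB"); the real size varies by version.
APPROX_SIZE_BYTES = 600 * 1024 * 1024


def has_enough_space(path: str, required_bytes: int = APPROX_SIZE_BYTES, margin: float = 0.2) -> bool:
    """Return True if the filesystem containing `path` can hold `required_bytes` plus a margin.

    The margin is an assumption: it leaves headroom because the downloaded
    file and the installed app can occupy space at the same time.
    """
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_bytes * (1 + margin)
```

For example, `has_enough_space(".")` checks the filesystem of the current directory. On a real device you would pass the storage location you plan to download to.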

- Go to [this link] to download the CarX Drift Racing APK 1.21.1 file on your device. -

- Once the download is complete, locate the file in your device's file manager or downloads folder. -

- Tap on the file to start the installation process. You might need to enable "Unknown Sources" in your device's settings to allow the installation of apps from sources other than Google Play Store. -

- Follow the instructions on the screen to complete the installation process. -
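These steps install a file from a link that the text leaves unspecified, so it is worth confirming the file is the one you expected before installing it. The minimal Python sketch below uses the standard `hashlib` module. It assumes the distributor publishes a SHA-256 checksum for the APK, which the original does not say. The helper names are hypothetical.

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Compute the SHA-256 hex digest of a file, reading 1 MiB chunks to limit memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() with a sentinel keeps reading until f.read() returns b"" at end of file.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_checksum(path: str, published_hex: str) -> bool:
    """Compare the file's digest with a published checksum, ignoring case and surrounding spaces."""
    return sha256_of_file(path) == published_hex.strip().lower()
```

A mismatch means the file is corrupted or is not the file the checksum describes, and you should not install it.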

- Choose the right car for your drifting style. Different cars have different characteristics, such as speed, acceleration, handling, and driftability. You can compare the stats of each car and test them on different tracks to find the one that suits you best. -

- Upgrade and tune your car to optimize its performance. You can use coins and golds to buy new parts and accessories for your car, such as tires, engines, brakes, suspensions, and turbos. You can also adjust the tuning of your car, such as camber, toe, caster, differential, and gearbox, to change its behavior on the road. -

- Use the handbrake and the throttle wisely. The handbrake is useful for initiating and maintaining drifts, while the throttle is useful for controlling the speed and angle of your drifts. You can also use the clutch kick and the weight shift techniques to enhance your drifts. -

- Practice on different tracks and game modes. The more you practice, the more you will learn how to drift on different surfaces, curves, and obstacles. You can also try different game modes and challenges to test your skills and earn more rewards. -

- Watch replays and learn from other players. You can watch your own replays or other players' replays to analyze your mistakes and improve your techniques. You can also join a drift club or a drift war to learn from other players and compete with them. -

- Is CarX Drift Racing APK 1.21.1 safe to download and install? -

- How can I get more coins and golds in CarX Drift Racing APK 1.21.1? -

- How can I join a drift club or a drift war in CarX Drift Racing APK 1.21.1? -

- How can I share my replays with my friends in CarX Drift Racing APK 1.21.1? -

- How can I contact the developers of CarX Drift Racing APK 1.21.1? -

- It is free to download and use, with no registration or subscription required. -

- It supports multiple protocols, such as OpenVPN, WireGuard, and IKEv2. -

- It has a large network of servers in over 50 countries. -

- It provides unlimited bandwidth with no speed caps. -

- It has a simple and user-friendly interface. -

- It works with all Android devices running Android 4.0 or higher. -

- Go to the official website of NIC VPN Beta 6 APK and click on the download button. -

- Once the download is complete, locate the APK file on your device and tap on it. -

- If you see a warning message that says "Install blocked", go to your device settings and enable "Unknown sources" under security options. -

- Tap on "Install" and wait for the installation process to finish. -

- Launch the app and select a server location from the list. -

- Tap on "Connect" and enjoy using NIC VPN Beta 6 APK. -

-

-

- - - - - ----

General Info:

- ${info_div.join('\n')} -

- - '; - _update_cache(data); - } else { - console.log('We already have cache!'); - } - - inputs = ulColumns.querySelectorAll('input'); - for (let i = 1; i < inputs.length; i++) { - if (!inputs[i].checked) continue; - _plot(i-1); - } - }; - reader.readAsText(selectedFile); - } else { - console.warn('There is no file!'); - } -} - -function _comeback_options() { - dropdownSearch.style.display = 'none'; - let ids = new Set(); - for (let chart of charts.getElementsByClassName('cols')) { - ids.add(+chart.id.split('-')[1]); - } - - const inputs = ulColumns.querySelectorAll('input'); - inputs[0].checked = false; - for (let i = 1; i < inputs.length; i++) { - inputs[i].checked = ids.has(i-1); - } - - _update_dropdown_text(ids.size, inputs.length-1); -} - -function _apply_options() { - dropdownSearch.style.display = 'none'; - const inputs = ulColumns.querySelectorAll('input'); - for (let i = 1; i < inputs.length; i++) { - _toggle_column(i-1, inputs[i].checked); - } -} - -const showDropdownButton = document.getElementById('showDropdown'); -const dropdownSearch = document.getElementById('dropdownSearch'); -const ulColumns = document.getElementById('ul-columns'); - -showDropdownButton.addEventListener('click', function() { - if (dropdownSearch.style.display === 'none' || dropdownSearch.style.display === '') { - dropdownSearch.style.display = 'block'; - } else { - dropdownSearch.style.display = 'none'; - } -}); - -document.getElementById('cancel').addEventListener('click', _comeback_options); -document.getElementById('apply').addEventListener('click', _apply_options); diff --git a/spaces/ECCV2022/PSG/OpenPSG/configs/_base_/datasets/vg_sg.py b/spaces/ECCV2022/PSG/OpenPSG/configs/_base_/datasets/vg_sg.py deleted file mode 100644 index 5f555ac70bc04c85cbeb9099fd792114ee2ed9a9..0000000000000000000000000000000000000000 --- a/spaces/ECCV2022/PSG/OpenPSG/configs/_base_/datasets/vg_sg.py +++ /dev/null @@ -1,57 +0,0 @@ -# dataset settings -dataset_type = 'SceneGraphDataset' -ann_file = 
'/mnt/ssd/gzj/data/VisualGenome/data_openpsg.json' -img_dir = '/mnt/ssd/gzj/data/VisualGenome/VG_100K' - -img_norm_cfg = dict(mean=[123.675, 116.28, 103.53], - std=[58.395, 57.12, 57.375], - to_rgb=True) -train_pipeline = [ - dict(type='LoadImageFromFile'), - dict(type='LoadSceneGraphAnnotations', with_bbox=True, with_rel=True), - dict(type='Resize', img_scale=(1333, 800), keep_ratio=True), - dict(type='RandomFlip', flip_ratio=0.5), - dict(type='Normalize', **img_norm_cfg), - dict(type='Pad', size_divisor=32), - dict(type='SceneGraphFormatBundle'), - dict(type='Collect', - keys=['img', 'gt_bboxes', 'gt_labels', 'gt_rels', 'gt_relmaps']), -] -test_pipeline = [ - dict(type='LoadImageFromFile'), - # Since the forward process may need gt info, annos must be loaded. - dict(type='LoadSceneGraphAnnotations', with_bbox=True, with_rel=True), - dict( - type='MultiScaleFlipAug', - img_scale=(1333, 800), - flip=False, - transforms=[ - dict(type='Resize', keep_ratio=True), - dict(type='RandomFlip'), - dict(type='Normalize', **img_norm_cfg), - dict(type='Pad', size_divisor=32), - # NOTE: Do not change the img to DC. 
- dict(type='ImageToTensor', keys=['img']), - dict(type='ToTensor', keys=['gt_bboxes', 'gt_labels']), - dict(type='ToDataContainer', - fields=(dict(key='gt_bboxes'), dict(key='gt_labels'))), - dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels']), - ]) -] -data = dict(samples_per_gpu=2, - workers_per_gpu=2, - train=dict(type=dataset_type, - ann_file=ann_file, - img_prefix=img_dir, - pipeline=train_pipeline, - split='train'), - val=dict(type=dataset_type, - ann_file=ann_file, - img_prefix=img_dir, - pipeline=test_pipeline, - split='test'), - test=dict(type=dataset_type, - ann_file=ann_file, - img_prefix=img_dir, - pipeline=test_pipeline, - split='test')) diff --git a/spaces/EronSamez/RVC_HFmeu/infer_batch_rvc.py b/spaces/EronSamez/RVC_HFmeu/infer_batch_rvc.py deleted file mode 100644 index 15c862a3d6bf815fa68003cc7054b694cae50c2a..0000000000000000000000000000000000000000 --- a/spaces/EronSamez/RVC_HFmeu/infer_batch_rvc.py +++ /dev/null @@ -1,215 +0,0 @@ -""" -v1 -runtime\python.exe myinfer-v2-0528.py 0 "E:\codes\py39\RVC-beta\todo-songs" "E:\codes\py39\logs\mi-test\added_IVF677_Flat_nprobe_7.index" harvest "E:\codes\py39\RVC-beta\output" "E:\codes\py39\test-20230416b\weights\mi-test.pth" 0.66 cuda:0 True 3 0 1 0.33 -v2 -runtime\python.exe myinfer-v2-0528.py 0 "E:\codes\py39\RVC-beta\todo-songs" "E:\codes\py39\test-20230416b\logs\mi-test-v2\aadded_IVF677_Flat_nprobe_1_v2.index" harvest "E:\codes\py39\RVC-beta\output_v2" "E:\codes\py39\test-20230416b\weights\mi-test-v2.pth" 0.66 cuda:0 True 3 0 1 0.33 -""" -import os, sys, pdb, torch - -now_dir = os.getcwd() -sys.path.append(now_dir) -import sys -import torch -import tqdm as tq -from multiprocessing import cpu_count - - -class Config: - def __init__(self, device, is_half): - self.device = device - self.is_half = is_half - self.n_cpu = 0 - self.gpu_name = None - self.gpu_mem = None - self.x_pad, self.x_query, self.x_center, self.x_max = self.device_config() - - def device_config(self) -> tuple: - if 
torch.cuda.is_available(): - i_device = int(self.device.split(":")[-1]) - self.gpu_name = torch.cuda.get_device_name(i_device) - if ( - ("16" in self.gpu_name and "V100" not in self.gpu_name.upper()) - or "P40" in self.gpu_name.upper() - or "1060" in self.gpu_name - or "1070" in self.gpu_name - or "1080" in self.gpu_name - ): - print("16-series/10-series GPUs and P40 are forced to single precision") - self.is_half = False - for config_file in ["32k.json", "40k.json", "48k.json"]: - with open(f"configs/{config_file}", "r") as f: - strr = f.read().replace("true", "false") - with open(f"configs/{config_file}", "w") as f: - f.write(strr) - with open("infer/modules/train/preprocess.py", "r") as f: - strr = f.read().replace("3.7", "3.0") - with open("infer/modules/train/preprocess.py", "w") as f: - f.write(strr) - else: - self.gpu_name = None - self.gpu_mem = int( - torch.cuda.get_device_properties(i_device).total_memory - / 1024 - / 1024 - / 1024 - + 0.4 - ) - if self.gpu_mem <= 4: - with open("infer/modules/train/preprocess.py", "r") as f: - strr = f.read().replace("3.7", "3.0") - with open("infer/modules/train/preprocess.py", "w") as f: - f.write(strr) - elif torch.backends.mps.is_available(): - print("No supported NVIDIA GPU found, using MPS for inference") - self.device = "mps" - else: - print("No supported NVIDIA GPU found, using CPU for inference") - self.device = "cpu" - self.is_half = True - - if self.n_cpu == 0: - self.n_cpu = cpu_count() - - if self.is_half: - # Configuration for 6 GB of VRAM - x_pad = 3 - x_query = 10 - x_center = 60 - x_max = 65 - else: - # Configuration for 5 GB of VRAM - x_pad = 1 - x_query = 6 - x_center = 38 - x_max = 41 - - if self.gpu_mem != None and self.gpu_mem <= 4: - x_pad = 1 - x_query = 5 - x_center = 30 - x_max = 32 - - return x_pad, x_query, x_center, x_max - - -f0up_key = sys.argv[1] -input_path = sys.argv[2] -index_path = sys.argv[3] -f0method = sys.argv[4] # harvest or pm -opt_path = sys.argv[5] -model_path = sys.argv[6] -index_rate = float(sys.argv[7]) -device = sys.argv[8] -is_half = sys.argv[9].lower() != "false" -filter_radius = int(sys.argv[10]) -resample_sr = int(sys.argv[11])
-rms_mix_rate = float(sys.argv[12]) -protect = float(sys.argv[13]) -print(sys.argv) -config = Config(device, is_half) -now_dir = os.getcwd() -sys.path.append(now_dir) -from infer.modules.vc.modules import VC -from lib.infer_pack.models import ( - SynthesizerTrnMs256NSFsid, - SynthesizerTrnMs256NSFsid_nono, - SynthesizerTrnMs768NSFsid, - SynthesizerTrnMs768NSFsid_nono, -) -from infer.lib.audio import load_audio -from fairseq import checkpoint_utils -from scipy.io import wavfile - -hubert_model = None - - -def load_hubert(): - global hubert_model - models, saved_cfg, task = checkpoint_utils.load_model_ensemble_and_task( - ["hubert_base.pt"], - suffix="", - ) - hubert_model = models[0] - hubert_model = hubert_model.to(device) - if is_half: - hubert_model = hubert_model.half() - else: - hubert_model = hubert_model.float() - hubert_model.eval() - - -def vc_single(sid, input_audio, f0_up_key, f0_file, f0_method, file_index, index_rate): - global tgt_sr, net_g, vc, hubert_model, version - if input_audio is None: - return "You need to upload an audio", None - f0_up_key = int(f0_up_key) - audio = load_audio(input_audio, 16000) - times = [0, 0, 0] - if hubert_model == None: - load_hubert() - if_f0 = cpt.get("f0", 1) - # audio_opt=vc.pipeline(hubert_model,net_g,sid,audio,times,f0_up_key,f0_method,file_index,file_big_npy,index_rate,if_f0,f0_file=f0_file) - audio_opt = vc.pipeline( - hubert_model, - net_g, - sid, - audio, - input_audio, - times, - f0_up_key, - f0_method, - file_index, - index_rate, - if_f0, - filter_radius, - tgt_sr, - resample_sr, - rms_mix_rate, - version, - protect, - f0_file=f0_file, - ) - print(times) - return audio_opt - - -def get_vc(model_path): - global n_spk, tgt_sr, net_g, vc, cpt, device, is_half, version - print("loading pth %s" % model_path) - cpt = torch.load(model_path, map_location="cpu") - tgt_sr = cpt["config"][-1] - cpt["config"][-3] = cpt["weight"]["emb_g.weight"].shape[0] # n_spk - if_f0 = cpt.get("f0", 1) - version = cpt.get("version", 
"v1") - if version == "v1": - if if_f0 == 1: - net_g = SynthesizerTrnMs256NSFsid(*cpt["config"], is_half=is_half) - else: - net_g = SynthesizerTrnMs256NSFsid_nono(*cpt["config"]) - elif version == "v2": - if if_f0 == 1: # - net_g = SynthesizerTrnMs768NSFsid(*cpt["config"], is_half=is_half) - else: - net_g = SynthesizerTrnMs768NSFsid_nono(*cpt["config"]) - del net_g.enc_q - print(net_g.load_state_dict(cpt["weight"], strict=False)) # Without this line the model is not fully cleaned up; very odd - net_g.eval().to(device) - if is_half: - net_g = net_g.half() - else: - net_g = net_g.float() - vc = VC(tgt_sr, config) - n_spk = cpt["config"][-3] - # return {"visible": True,"maximum": n_spk, "__type__": "update"} - - -get_vc(model_path) -audios = os.listdir(input_path) -for file in tq.tqdm(audios): - if file.endswith(".wav"): - file_path = input_path + "/" + file - wav_opt = vc_single( - 0, file_path, f0up_key, None, f0method, index_path, index_rate - ) - out_path = opt_path + "/" + file - wavfile.write(out_path, tgt_sr, wav_opt) diff --git a/spaces/EronSamez/RVC_HFmeu/tools/calc_rvc_model_similarity.py b/spaces/EronSamez/RVC_HFmeu/tools/calc_rvc_model_similarity.py deleted file mode 100644 index 42496e088e51dc5162d0714470c2226f696e260c..0000000000000000000000000000000000000000 --- a/spaces/EronSamez/RVC_HFmeu/tools/calc_rvc_model_similarity.py +++ /dev/null @@ -1,96 +0,0 @@ -# This code references https://huggingface.co/JosephusCheung/ASimilarityCalculatior/blob/main/qwerty.py -# Fill in the path of the model to be queried and the root directory of the reference models, and this script will return the similarity between the model to be queried and all reference models.
-import os -import logging - -logger = logging.getLogger(__name__) - -import torch -import torch.nn as nn -import torch.nn.functional as F - - -def cal_cross_attn(to_q, to_k, to_v, rand_input): - hidden_dim, embed_dim = to_q.shape - attn_to_q = nn.Linear(hidden_dim, embed_dim, bias=False) - attn_to_k = nn.Linear(hidden_dim, embed_dim, bias=False) - attn_to_v = nn.Linear(hidden_dim, embed_dim, bias=False) - attn_to_q.load_state_dict({"weight": to_q}) - attn_to_k.load_state_dict({"weight": to_k}) - attn_to_v.load_state_dict({"weight": to_v}) - - return torch.einsum( - "ik, jk -> ik", - F.softmax( - torch.einsum("ij, kj -> ik", attn_to_q(rand_input), attn_to_k(rand_input)), - dim=-1, - ), - attn_to_v(rand_input), - ) - - -def model_hash(filename): - try: - with open(filename, "rb") as file: - import hashlib - - m = hashlib.sha256() - - file.seek(0x100000) - m.update(file.read(0x10000)) - return m.hexdigest()[0:8] - except FileNotFoundError: - return "NOFILE" - - -def eval(model, n, input): - qk = f"enc_p.encoder.attn_layers.{n}.conv_q.weight" - uk = f"enc_p.encoder.attn_layers.{n}.conv_k.weight" - vk = f"enc_p.encoder.attn_layers.{n}.conv_v.weight" - atoq, atok, atov = model[qk][:, :, 0], model[uk][:, :, 0], model[vk][:, :, 0] - - attn = cal_cross_attn(atoq, atok, atov, input) - return attn - - -def main(path, root): - torch.manual_seed(114514) - model_a = torch.load(path, map_location="cpu")["weight"] - - logger.info("Query:\t\t%s\t%s" % (path, model_hash(path))) - - map_attn_a = {} - map_rand_input = {} - for n in range(6): - hidden_dim, embed_dim, _ = model_a[ - f"enc_p.encoder.attn_layers.{n}.conv_v.weight" - ].shape - rand_input = torch.randn([embed_dim, hidden_dim]) - - map_attn_a[n] = eval(model_a, n, rand_input) - map_rand_input[n] = rand_input - - del model_a - - for name in sorted(list(os.listdir(root))): - path = "%s/%s" % (root, name) - model_b = torch.load(path, map_location="cpu")["weight"] - - sims = [] - for n in range(6): - attn_a = map_attn_a[n] - 
attn_b = eval(model_b, n, map_rand_input[n]) - - sim = torch.mean(torch.cosine_similarity(attn_a, attn_b)) - sims.append(sim) - - logger.info( - "Reference:\t%s\t%s\t%s" - % (path, model_hash(path), f"{torch.mean(torch.stack(sims)) * 1e2:.2f}%") - ) - - -if __name__ == "__main__": - query_path = r"assets\weights\mi v3.pth" - reference_root = r"assets\weights" - main(query_path, reference_root) diff --git a/spaces/EsoCode/text-generation-webui/modules/models.py b/spaces/EsoCode/text-generation-webui/modules/models.py deleted file mode 100644 index f12e700c2345fc574dcf8274ab3dbdefeba82a3f..0000000000000000000000000000000000000000 --- a/spaces/EsoCode/text-generation-webui/modules/models.py +++ /dev/null @@ -1,334 +0,0 @@ -import gc -import os -import re -import time -from pathlib import Path - -import torch -import transformers -from accelerate import infer_auto_device_map, init_empty_weights -from transformers import ( - AutoConfig, - AutoModel, - AutoModelForCausalLM, - AutoModelForSeq2SeqLM, - AutoTokenizer, - BitsAndBytesConfig, - LlamaTokenizer -) - -import modules.shared as shared -from modules import llama_attn_hijack, sampler_hijack -from modules.logging_colors import logger -from modules.models_settings import infer_loader - -transformers.logging.set_verbosity_error() - -local_rank = None -if shared.args.deepspeed: - import deepspeed - from transformers.deepspeed import ( - HfDeepSpeedConfig, - is_deepspeed_zero3_enabled - ) - - from modules.deepspeed_parameters import generate_ds_config - - # Distributed setup - local_rank = shared.args.local_rank if shared.args.local_rank is not None else int(os.getenv("LOCAL_RANK", "0")) - world_size = int(os.getenv("WORLD_SIZE", "1")) - torch.cuda.set_device(local_rank) - deepspeed.init_distributed() - ds_config = generate_ds_config(shared.args.bf16, 1 * world_size, shared.args.nvme_offload_dir) - dschf = HfDeepSpeedConfig(ds_config) # Keep this object alive for the Transformers integration - 
-sampler_hijack.hijack_samplers() - - -def load_model(model_name, loader=None): - logger.info(f"Loading {model_name}...") - t0 = time.time() - - shared.is_seq2seq = False - load_func_map = { - 'Transformers': huggingface_loader, - 'AutoGPTQ': AutoGPTQ_loader, - 'GPTQ-for-LLaMa': GPTQ_loader, - 'llama.cpp': llamacpp_loader, - 'FlexGen': flexgen_loader, - 'RWKV': RWKV_loader, - 'ExLlama': ExLlama_loader, - 'ExLlama_HF': ExLlama_HF_loader - } - - if loader is None: - if shared.args.loader is not None: - loader = shared.args.loader - else: - loader = infer_loader(model_name) - if loader is None: - logger.error('The path to the model does not exist. Exiting.') - return None, None - - shared.args.loader = loader - output = load_func_map[loader](model_name) - if type(output) is tuple: - model, tokenizer = output - else: - model = output - if model is None: - return None, None - else: - tokenizer = load_tokenizer(model_name, model) - - # Hijack attention with xformers - if any((shared.args.xformers, shared.args.sdp_attention)): - llama_attn_hijack.hijack_llama_attention() - - logger.info(f"Loaded the model in {(time.time()-t0):.2f} seconds.\n") - return model, tokenizer - - -def load_tokenizer(model_name, model): - tokenizer = None - if any(s in model_name.lower() for s in ['gpt-4chan', 'gpt4chan']) and Path(f"{shared.args.model_dir}/gpt-j-6B/").exists(): - tokenizer = AutoTokenizer.from_pretrained(Path(f"{shared.args.model_dir}/gpt-j-6B/")) - elif model.__class__.__name__ in ['LlamaForCausalLM', 'LlamaGPTQForCausalLM', 'ExllamaHF']: - # Try to load an universal LLaMA tokenizer - if not any(s in shared.model_name.lower() for s in ['llava', 'oasst']): - for p in [Path(f"{shared.args.model_dir}/llama-tokenizer/"), Path(f"{shared.args.model_dir}/oobabooga_llama-tokenizer/")]: - if p.exists(): - logger.info(f"Loading the universal LLaMA tokenizer from {p}...") - tokenizer = LlamaTokenizer.from_pretrained(p, clean_up_tokenization_spaces=True) - return tokenizer - - # Otherwise, 
load it from the model folder and hope that these - # are not outdated tokenizer files. - tokenizer = LlamaTokenizer.from_pretrained(Path(f"{shared.args.model_dir}/{model_name}/"), clean_up_tokenization_spaces=True) - try: - tokenizer.eos_token_id = 2 - tokenizer.bos_token_id = 1 - tokenizer.pad_token_id = 0 - except: - pass - else: - path_to_model = Path(f"{shared.args.model_dir}/{model_name}/") - if path_to_model.exists(): - tokenizer = AutoTokenizer.from_pretrained(path_to_model, trust_remote_code=shared.args.trust_remote_code) - - return tokenizer - - -def huggingface_loader(model_name): - path_to_model = Path(f'{shared.args.model_dir}/{model_name}') - if 'chatglm' in model_name.lower(): - LoaderClass = AutoModel - else: - config = AutoConfig.from_pretrained(path_to_model, trust_remote_code=shared.args.trust_remote_code) - if config.to_dict().get("is_encoder_decoder", False): - LoaderClass = AutoModelForSeq2SeqLM - shared.is_seq2seq = True - else: - LoaderClass = AutoModelForCausalLM - - # Load the model in simple 16-bit mode by default - if not any([shared.args.cpu, shared.args.load_in_8bit, shared.args.load_in_4bit, shared.args.auto_devices, shared.args.disk, shared.args.deepspeed, shared.args.gpu_memory is not None, shared.args.cpu_memory is not None]): - model = LoaderClass.from_pretrained(Path(f"{shared.args.model_dir}/{model_name}"), low_cpu_mem_usage=True, torch_dtype=torch.bfloat16 if shared.args.bf16 else torch.float16, trust_remote_code=shared.args.trust_remote_code) - if torch.has_mps: - device = torch.device('mps') - model = model.to(device) - else: - model = model.cuda() - - # DeepSpeed ZeRO-3 - elif shared.args.deepspeed: - model = LoaderClass.from_pretrained(Path(f"{shared.args.model_dir}/{model_name}"), torch_dtype=torch.bfloat16 if shared.args.bf16 else torch.float16) - model = deepspeed.initialize(model=model, config_params=ds_config, model_parameters=None, optimizer=None, lr_scheduler=None)[0] - model.module.eval() # Inference - 
logger.info(f"DeepSpeed ZeRO-3 is enabled: {is_deepspeed_zero3_enabled()}") - - # Custom - else: - params = { - "low_cpu_mem_usage": True, - "trust_remote_code": shared.args.trust_remote_code - } - - if not any((shared.args.cpu, torch.cuda.is_available(), torch.has_mps)): - logger.warning("torch.cuda.is_available() returned False. This means that no GPU has been detected. Falling back to CPU mode.") - shared.args.cpu = True - - if shared.args.cpu: - params["torch_dtype"] = torch.float32 - else: - params["device_map"] = 'auto' - if shared.args.load_in_4bit: - - # See https://github.com/huggingface/transformers/pull/23479/files - # and https://huggingface.co/blog/4bit-transformers-bitsandbytes - quantization_config_params = { - 'load_in_4bit': True, - 'bnb_4bit_compute_dtype': eval("torch.{}".format(shared.args.compute_dtype)) if shared.args.compute_dtype in ["bfloat16", "float16", "float32"] else None, - 'bnb_4bit_quant_type': shared.args.quant_type, - 'bnb_4bit_use_double_quant': shared.args.use_double_quant, - } - - logger.warning("Using the following 4-bit params: " + str(quantization_config_params)) - params['quantization_config'] = BitsAndBytesConfig(**quantization_config_params) - - elif shared.args.load_in_8bit and any((shared.args.auto_devices, shared.args.gpu_memory)): - params['quantization_config'] = BitsAndBytesConfig(load_in_8bit=True, llm_int8_enable_fp32_cpu_offload=True) - elif shared.args.load_in_8bit: - params['quantization_config'] = BitsAndBytesConfig(load_in_8bit=True) - elif shared.args.bf16: - params["torch_dtype"] = torch.bfloat16 - else: - params["torch_dtype"] = torch.float16 - - params['max_memory'] = get_max_memory_dict() - if shared.args.disk: - params["offload_folder"] = shared.args.disk_cache_dir - - checkpoint = Path(f'{shared.args.model_dir}/{model_name}') - if shared.args.load_in_8bit and params.get('max_memory', None) is not None and params['device_map'] == 'auto': - config = AutoConfig.from_pretrained(checkpoint, 
trust_remote_code=shared.args.trust_remote_code) - with init_empty_weights(): - model = LoaderClass.from_config(config, trust_remote_code=shared.args.trust_remote_code) - - model.tie_weights() - params['device_map'] = infer_auto_device_map( - model, - dtype=torch.int8, - max_memory=params['max_memory'], - no_split_module_classes=model._no_split_modules - ) - - model = LoaderClass.from_pretrained(checkpoint, **params) - - return model - - -def flexgen_loader(model_name): - from flexgen.flex_opt import CompressionConfig, ExecutionEnv, OptLM, Policy - - # Initialize environment - env = ExecutionEnv.create(shared.args.disk_cache_dir) - - # Offloading policy - policy = Policy(1, 1, - shared.args.percent[0], shared.args.percent[1], - shared.args.percent[2], shared.args.percent[3], - shared.args.percent[4], shared.args.percent[5], - overlap=True, sep_layer=True, pin_weight=shared.args.pin_weight, - cpu_cache_compute=False, attn_sparsity=1.0, - compress_weight=shared.args.compress_weight, - comp_weight_config=CompressionConfig( - num_bits=4, group_size=64, - group_dim=0, symmetric=False), - compress_cache=False, - comp_cache_config=CompressionConfig( - num_bits=4, group_size=64, - group_dim=2, symmetric=False)) - - model = OptLM(f"facebook/{model_name}", env, shared.args.model_dir, policy) - return model - - -def RWKV_loader(model_name): - from modules.RWKV import RWKVModel, RWKVTokenizer - - model = RWKVModel.from_pretrained(Path(f'{shared.args.model_dir}/{model_name}'), dtype="fp32" if shared.args.cpu else "bf16" if shared.args.bf16 else "fp16", device="cpu" if shared.args.cpu else "cuda") - tokenizer = RWKVTokenizer.from_pretrained(Path(shared.args.model_dir)) - return model, tokenizer - - -def llamacpp_loader(model_name): - from modules.llamacpp_model import LlamaCppModel - - path = Path(f'{shared.args.model_dir}/{model_name}') - if path.is_file(): - model_file = path - else: - model_file = list(Path(f'{shared.args.model_dir}/{model_name}').glob('*ggml*.bin'))[0] - - 
logger.info(f"llama.cpp weights detected: {model_file}\n") - model, tokenizer = LlamaCppModel.from_pretrained(model_file) - return model, tokenizer - - -def GPTQ_loader(model_name): - - # Monkey patch - if shared.args.monkey_patch: - logger.warning("Applying the monkey patch for using LoRAs with GPTQ models. It may cause undefined behavior outside its intended scope.") - from modules.monkey_patch_gptq_lora import load_model_llama - - model, _ = load_model_llama(model_name) - - # No monkey patch - else: - import modules.GPTQ_loader - - model = modules.GPTQ_loader.load_quantized(model_name) - - return model - - -def AutoGPTQ_loader(model_name): - import modules.AutoGPTQ_loader - - return modules.AutoGPTQ_loader.load_quantized(model_name) - - -def ExLlama_loader(model_name): - from modules.exllama import ExllamaModel - - model, tokenizer = ExllamaModel.from_pretrained(model_name) - return model, tokenizer - - -def ExLlama_HF_loader(model_name): - from modules.exllama_hf import ExllamaHF - - return ExllamaHF.from_pretrained(model_name) - - -def get_max_memory_dict(): - max_memory = {} - if shared.args.gpu_memory: - memory_map = list(map(lambda x: x.strip(), shared.args.gpu_memory)) - for i in range(len(memory_map)): - max_memory[i] = f'{memory_map[i]}GiB' if not re.match('.*ib$', memory_map[i].lower()) else memory_map[i] - - max_cpu_memory = shared.args.cpu_memory.strip() if shared.args.cpu_memory is not None else '99GiB' - max_memory['cpu'] = f'{max_cpu_memory}GiB' if not re.match('.*ib$', max_cpu_memory.lower()) else max_cpu_memory - - # If --auto-devices is provided standalone, try to get a reasonable value - # for the maximum memory of device :0 - elif shared.args.auto_devices: - total_mem = (torch.cuda.get_device_properties(0).total_memory / (1024 * 1024)) - suggestion = round((total_mem - 1000) / 1000) * 1000 - if total_mem - suggestion < 800: - suggestion -= 1000 - - suggestion = int(round(suggestion / 1000)) - logger.warning(f"Auto-assiging --gpu-memory 
{suggestion} for your GPU to try to prevent out-of-memory errors. You can manually set other values.") - max_memory = {0: f'{suggestion}GiB', 'cpu': f'{shared.args.cpu_memory or 99}GiB'} - - return max_memory if len(max_memory) > 0 else None - - -def clear_torch_cache(): - gc.collect() - if not shared.args.cpu: - torch.cuda.empty_cache() - - -def unload_model(): - shared.model = shared.tokenizer = None - clear_torch_cache() - - -def reload_model(): - unload_model() - shared.model, shared.tokenizer = load_model(shared.model_name) diff --git a/spaces/EuroPython2022/pulsar-clip/pulsar_clip.py b/spaces/EuroPython2022/pulsar-clip/pulsar_clip.py deleted file mode 100644 index a9ae98c8723f846458b673ef34a8970149441ebd..0000000000000000000000000000000000000000 --- a/spaces/EuroPython2022/pulsar-clip/pulsar_clip.py +++ /dev/null @@ -1,236 +0,0 @@ -from transformers import set_seed -from tqdm.auto import trange -from PIL import Image -import numpy as np -import random -import utils -import torch - - -CONFIG_SPEC = [ - ("General", [ - ("text", "A cloud at dawn", str), - ("iterations", 5000, (0, 7500)), - ("seed", 12, int), - ("show_every", 10, int), - ]), - ("Rendering", [ - ("w", 224, [224, 252]), - ("h", 224, [224, 252]), - ("showoff", 5000, (0, 10000)), - ("turns", 4, int), - ("focal_length", 0.1, float), - ("plane_width", 0.1, float), - ("shade_strength", 0.25, float), - ("gamma", 0.5, float), - ("max_depth", 7, float), - ("offset", 5, float), - ("offset_random", 0.75, float), - ("xyz_random", 0.25, float), - ("altitude_range", 0.3, float), - ("augments", 4, int), - ]), - ("Optimization", [ - ("epochs", 6, int), - ("lr", 0.6, float), - #@markdown CLIP loss type, might improve the results - ("loss_type", "spherical", ["spherical", "cosine"]), - #@markdown CLIP loss weight - ("clip_weight", 1.0, float), #@param {type: "number"} - ]), - ("Elements", [ - ("num_objects", 256, int), - #@markdown Number of dimensions. 
0 is for point clouds (default), 1 will make - #@markdown strokes, 2 will make planes, 3 produces little cubes - ("ndim", 0, [0, 1, 2, 3]), #@param {type: "integer"} - - #@markdown Opacity scale: - ("min_opacity", 1e-4, float), #@param {type: "number"} - ("max_opacity", 1.0, float), #@param {type: "number"} - ("log_opacity", False, bool), #@param {type: "boolean"} - - ("min_radius", 0.030, float), - ("max_radius", 0.170, float), - ("log_radius", False, bool), - - # TODO dynamically decide bezier_res - #@markdown Bezier resolution: how many points a line/plane/cube will have. Not applicable to points - ("bezier_res", 8, int), #@param {type: "integer"} - #@markdown Maximum scale of parameters: position, velocity, acceleration - ("pos_scale", 0.4, float), #@param {type: "number"} - ("vel_scale", 0.15, float), #@param {type: "number"} - ("acc_scale", 0.15, float), #@param {type: "number"} - - #@markdown Scale of each individual 3D object. Master control for velocity and acceleration scale. - ("scale", 1, float), #@param {type: "number"} - ]), -] - - -# TODO: one day separate the config into multiple parts and split this megaobject into multiple objects -# 2022/08/09: halfway done -class PulsarCLIP(object): - def __init__(self, args): - args = DotDict(**args) - set_seed(args.seed) - self.args = args - self.device = args.get("device", "cuda" if torch.cuda.is_available() else "cpu") - # Defer the import so that we can import `pulsar_clip` and then install `pytorch3d` - import pytorch3d.renderer.points.pulsar as ps - self.ndim = int(self.args.ndim) - self.renderer = ps.Renderer(self.args.w, self.args.h, - self.args.num_objects * (self.args.bezier_res ** self.ndim)).to(self.device) - self.bezier_pos = torch.nn.Parameter(torch.randn((args.num_objects, 4)).to(self.device)) - self.bezier_vel = torch.nn.Parameter(torch.randn((args.num_objects, 3 * self.ndim)).to(self.device)) - self.bezier_acc = torch.nn.Parameter(torch.randn((args.num_objects, 3 * self.ndim)).to(self.device)) 
- self.bezier_col = torch.nn.Parameter(torch.randn((args.num_objects, 4 * (1 + self.ndim))).to(self.device)) - self.optimizer = torch.optim.Adam([dict(params=[self.bezier_col], lr=5e-1 * args.lr), - dict(params=[self.bezier_pos], lr=1e-1 * args.lr), - dict(params=[self.bezier_vel, self.bezier_acc], lr=5e-2 * args.lr), - ]) - self.model_clip, self.preprocess_clip = utils.load_clip() - self.model_clip.visual.requires_grad_(False) - self.scheduler = torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(self.optimizer, - int(self.args.iterations - / self.args.augments - / self.args.epochs), - eta_min=args.lr / 100) - import clip - self.txt_emb = self.model_clip.encode_text(clip.tokenize([self.args.text]).to(self.device))[0].detach() - self.txt_emb = torch.nn.functional.normalize(self.txt_emb, dim=-1) - - def get_points(self): - if self.ndim > 0: - bezier_ts = torch.stack(torch.meshgrid( - (torch.linspace(0, 1, self.args.bezier_res, device=self.device),) * self.ndim), dim=0 - ).unsqueeze(1).repeat((1, self.args.num_objects) + (1,) * self.ndim).unsqueeze(-1) - - def interpolate_3D(pos, vel=0.0, acc=0.0, pos_scale=None, vel_scale=None, acc_scale=None, scale=None): - pos_scale = self.args.pos_scale if pos_scale is None else pos_scale - vel_scale = self.args.vel_scale if vel_scale is None else vel_scale - acc_scale = self.args.acc_scale if acc_scale is None else acc_scale - scale = self.args.scale if scale is None else scale - if self.ndim == 0: - return pos * pos_scale - result = 0.0 - s = pos.shape[-1] - assert s * self.ndim == vel.shape[-1] == acc.shape[-1] - # O(dim) sequential lol - for d, bezier_t in zip(range(self.ndim), bezier_ts): # TODO replace with fused dimension operation - result = (result - + torch.tanh(vel[..., d * s:(d + 1) * s]).view( - (-1,) + (1,) * self.ndim + (s,)) * vel_scale * bezier_t - + torch.tanh(acc[..., d * s:(d + 1) * s]).view( - (-1,) + (1,) * self.ndim + (s,)) * acc_scale * bezier_t.pow(2)) - result = (result * scale - + torch.tanh(pos[..., 
:s]).view((-1,) + (1,) * self.ndim + (s,)) * pos_scale).view(-1, s) - return result - - vert_pos = interpolate_3D(self.bezier_pos[..., :3], self.bezier_vel, self.bezier_acc) - vert_col = interpolate_3D(self.bezier_col[..., :4], - self.bezier_col[..., 4:4 + 4 * self.ndim], - self.bezier_col[..., -4 * self.ndim:]) - - to_bezier = lambda x: x.view((-1,) + (1,) * self.ndim + (x.shape[-1],)).repeat( - (1,) + (self.args.bezier_res,) * self.ndim + (1,)).reshape(-1, x.shape[-1]) - rescale = lambda x, a, b, is_log=False: (torch.exp(x - * np.log(b / a) - + np.log(a))) if is_log else x * (b - a) + a - return ( - vert_pos, - torch.sigmoid(vert_col[..., :3]), - rescale( - torch.sigmoid(to_bezier(self.bezier_pos[..., -1:])[..., 0]), - self.args.min_radius, self.args.max_radius, is_log=self.args.log_radius - ), - rescale(torch.sigmoid(vert_col[..., -1]), - self.args.min_opacity, self.args.max_opacity, is_log=self.args.log_opacity)) - - def camera(self, angle, altitude=0.0, offset=None, use_random=True, offset_random=None, - xyz_random=None, focal_length=None, plane_width=None): - if offset is None: - offset = self.args.offset - if xyz_random is None: - xyz_random = self.args.xyz_random - if focal_length is None: - focal_length = self.args.focal_length - if plane_width is None: - plane_width = self.args.plane_width - if offset_random is None: - offset_random = self.args.offset_random - device = self.device - offset = offset + np.random.normal() * offset_random * int(use_random) - position = torch.tensor([0, 0, -offset], dtype=torch.float) - position = utils.rotate_axis(position, altitude, 0) - position = utils.rotate_axis(position, angle, 1) - position = position + torch.randn(3) * xyz_random * int(use_random) - return torch.tensor([position[0], position[1], position[2], - altitude, angle, 0, - focal_length, plane_width], dtype=torch.float, device=device) - - - def render(self, cam_params=None): - if cam_params is None: - cam_params = self.camera(0, 0) - vert_pos, vert_col, 
radius, opacity = self.get_points() - - rgb = self.renderer(vert_pos, vert_col, radius, cam_params, - self.args.gamma, self.args.max_depth, opacity=opacity) - opacity = self.renderer(vert_pos, vert_col * 0, radius, cam_params, - self.args.gamma, self.args.max_depth, opacity=opacity) - return rgb, opacity - - def random_view_render(self): - angle = random.uniform(0, np.pi * 2) - altitude = random.uniform(-self.args.altitude_range / 2, self.args.altitude_range / 2) - cam_params = self.camera(angle, altitude) - result, alpha = self.render(cam_params) - back = torch.zeros_like(result) - s = back.shape - for j in range(s[-1]): - n = random.choice([7, 14, 28]) - back[..., j] = utils.rand_perlin_2d_octaves(s[:-1], (n, n)).clip(-0.5, 0.5) + 0.5 - result = result * (1 - alpha) + back * alpha - return result - - - def generate(self): - self.optimizer.zero_grad() - try: - for i in trange(self.args.iterations + self.args.showoff): - if i < self.args.iterations: - result = self.random_view_render() - img_emb = self.model_clip.encode_image( - self.preprocess_clip(result.permute(2, 0, 1)).unsqueeze(0).clamp(0., 1.)) - img_emb = torch.nn.functional.normalize(img_emb, dim=-1) - if self.args.loss_type == "spherical": - clip_loss = (img_emb - self.txt_emb).norm(dim=-1).div(2).arcsin().pow(2).mul(2).mean() - elif self.args.loss_type == "cosine": - clip_loss = (1 - img_emb @ self.txt_emb.T).mean() - else: - raise NotImplementedError(f"CLIP loss type not supported: {self.args.loss_type}") - loss = clip_loss * self.args.clip_weight + (0 and ...) 
# TODO add more loss types - loss.backward() - if i % self.args.augments == self.args.augments - 1: - self.optimizer.step() - self.optimizer.zero_grad() - try: - self.scheduler.step() - except AttributeError: - pass - if i % self.args.show_every == 0: - cam_params = self.camera(i / self.args.iterations * np.pi * 2 * self.args.turns, use_random=False) - img_show, _ = self.render(cam_params) - img = Image.fromarray((img_show.cpu().detach().numpy() * 255).astype(np.uint8)) - yield img - except KeyboardInterrupt: - pass - - - def save_obj(self, fn): - utils.save_obj(self.get_points(), fn) - - -class DotDict(dict): - def __getattr__(self, item): - return self.__getitem__(item) diff --git a/spaces/Faridmaruf/rvc-Blue-archives/lib/infer_pack/modules.py b/spaces/Faridmaruf/rvc-Blue-archives/lib/infer_pack/modules.py deleted file mode 100644 index c83289df7c79a4810dacd15c050148544ba0b6a9..0000000000000000000000000000000000000000 --- a/spaces/Faridmaruf/rvc-Blue-archives/lib/infer_pack/modules.py +++ /dev/null @@ -1,522 +0,0 @@ -import copy -import math -import numpy as np -import scipy -import torch -from torch import nn -from torch.nn import functional as F - -from torch.nn import Conv1d, ConvTranspose1d, AvgPool1d, Conv2d -from torch.nn.utils import weight_norm, remove_weight_norm - -from lib.infer_pack import commons -from lib.infer_pack.commons import init_weights, get_padding -from lib.infer_pack.transforms import piecewise_rational_quadratic_transform - - -LRELU_SLOPE = 0.1 - - -class LayerNorm(nn.Module): - def __init__(self, channels, eps=1e-5): - super().__init__() - self.channels = channels - self.eps = eps - - self.gamma = nn.Parameter(torch.ones(channels)) - self.beta = nn.Parameter(torch.zeros(channels)) - - def forward(self, x): - x = x.transpose(1, -1) - x = F.layer_norm(x, (self.channels,), self.gamma, self.beta, self.eps) - return x.transpose(1, -1) - - -class ConvReluNorm(nn.Module): - def __init__( - self, - in_channels, - hidden_channels, - out_channels, 
- kernel_size, - n_layers, - p_dropout, - ): - super().__init__() - self.in_channels = in_channels - self.hidden_channels = hidden_channels - self.out_channels = out_channels - self.kernel_size = kernel_size - self.n_layers = n_layers - self.p_dropout = p_dropout - assert n_layers > 1, "Number of layers should be larger than 0." - - self.conv_layers = nn.ModuleList() - self.norm_layers = nn.ModuleList() - self.conv_layers.append( - nn.Conv1d( - in_channels, hidden_channels, kernel_size, padding=kernel_size // 2 - ) - ) - self.norm_layers.append(LayerNorm(hidden_channels)) - self.relu_drop = nn.Sequential(nn.ReLU(), nn.Dropout(p_dropout)) - for _ in range(n_layers - 1): - self.conv_layers.append( - nn.Conv1d( - hidden_channels, - hidden_channels, - kernel_size, - padding=kernel_size // 2, - ) - ) - self.norm_layers.append(LayerNorm(hidden_channels)) - self.proj = nn.Conv1d(hidden_channels, out_channels, 1) - self.proj.weight.data.zero_() - self.proj.bias.data.zero_() - - def forward(self, x, x_mask): - x_org = x - for i in range(self.n_layers): - x = self.conv_layers[i](x * x_mask) - x = self.norm_layers[i](x) - x = self.relu_drop(x) - x = x_org + self.proj(x) - return x * x_mask - - -class DDSConv(nn.Module): - """ - Dialted and Depth-Separable Convolution - """ - - def __init__(self, channels, kernel_size, n_layers, p_dropout=0.0): - super().__init__() - self.channels = channels - self.kernel_size = kernel_size - self.n_layers = n_layers - self.p_dropout = p_dropout - - self.drop = nn.Dropout(p_dropout) - self.convs_sep = nn.ModuleList() - self.convs_1x1 = nn.ModuleList() - self.norms_1 = nn.ModuleList() - self.norms_2 = nn.ModuleList() - for i in range(n_layers): - dilation = kernel_size**i - padding = (kernel_size * dilation - dilation) // 2 - self.convs_sep.append( - nn.Conv1d( - channels, - channels, - kernel_size, - groups=channels, - dilation=dilation, - padding=padding, - ) - ) - self.convs_1x1.append(nn.Conv1d(channels, channels, 1)) - 
self.norms_1.append(LayerNorm(channels)) - self.norms_2.append(LayerNorm(channels)) - - def forward(self, x, x_mask, g=None): - if g is not None: - x = x + g - for i in range(self.n_layers): - y = self.convs_sep[i](x * x_mask) - y = self.norms_1[i](y) - y = F.gelu(y) - y = self.convs_1x1[i](y) - y = self.norms_2[i](y) - y = F.gelu(y) - y = self.drop(y) - x = x + y - return x * x_mask - - -class WN(torch.nn.Module): - def __init__( - self, - hidden_channels, - kernel_size, - dilation_rate, - n_layers, - gin_channels=0, - p_dropout=0, - ): - super(WN, self).__init__() - assert kernel_size % 2 == 1 - self.hidden_channels = hidden_channels - self.kernel_size = (kernel_size,) - self.dilation_rate = dilation_rate - self.n_layers = n_layers - self.gin_channels = gin_channels - self.p_dropout = p_dropout - - self.in_layers = torch.nn.ModuleList() - self.res_skip_layers = torch.nn.ModuleList() - self.drop = nn.Dropout(p_dropout) - - if gin_channels != 0: - cond_layer = torch.nn.Conv1d( - gin_channels, 2 * hidden_channels * n_layers, 1 - ) - self.cond_layer = torch.nn.utils.weight_norm(cond_layer, name="weight") - - for i in range(n_layers): - dilation = dilation_rate**i - padding = int((kernel_size * dilation - dilation) / 2) - in_layer = torch.nn.Conv1d( - hidden_channels, - 2 * hidden_channels, - kernel_size, - dilation=dilation, - padding=padding, - ) - in_layer = torch.nn.utils.weight_norm(in_layer, name="weight") - self.in_layers.append(in_layer) - - # last one is not necessary - if i < n_layers - 1: - res_skip_channels = 2 * hidden_channels - else: - res_skip_channels = hidden_channels - - res_skip_layer = torch.nn.Conv1d(hidden_channels, res_skip_channels, 1) - res_skip_layer = torch.nn.utils.weight_norm(res_skip_layer, name="weight") - self.res_skip_layers.append(res_skip_layer) - - def forward(self, x, x_mask, g=None, **kwargs): - output = torch.zeros_like(x) - n_channels_tensor = torch.IntTensor([self.hidden_channels]) - - if g is not None: - g = 
self.cond_layer(g) - - for i in range(self.n_layers): - x_in = self.in_layers[i](x) - if g is not None: - cond_offset = i * 2 * self.hidden_channels - g_l = g[:, cond_offset : cond_offset + 2 * self.hidden_channels, :] - else: - g_l = torch.zeros_like(x_in) - - acts = commons.fused_add_tanh_sigmoid_multiply(x_in, g_l, n_channels_tensor) - acts = self.drop(acts) - - res_skip_acts = self.res_skip_layers[i](acts) - if i < self.n_layers - 1: - res_acts = res_skip_acts[:, : self.hidden_channels, :] - x = (x + res_acts) * x_mask - output = output + res_skip_acts[:, self.hidden_channels :, :] - else: - output = output + res_skip_acts - return output * x_mask - - def remove_weight_norm(self): - if self.gin_channels != 0: - torch.nn.utils.remove_weight_norm(self.cond_layer) - for l in self.in_layers: - torch.nn.utils.remove_weight_norm(l) - for l in self.res_skip_layers: - torch.nn.utils.remove_weight_norm(l) - - -class ResBlock1(torch.nn.Module): - def __init__(self, channels, kernel_size=3, dilation=(1, 3, 5)): - super(ResBlock1, self).__init__() - self.convs1 = nn.ModuleList( - [ - weight_norm( - Conv1d( - channels, - channels, - kernel_size, - 1, - dilation=dilation[0], - padding=get_padding(kernel_size, dilation[0]), - ) - ), - weight_norm( - Conv1d( - channels, - channels, - kernel_size, - 1, - dilation=dilation[1], - padding=get_padding(kernel_size, dilation[1]), - ) - ), - weight_norm( - Conv1d( - channels, - channels, - kernel_size, - 1, - dilation=dilation[2], - padding=get_padding(kernel_size, dilation[2]), - ) - ), - ] - ) - self.convs1.apply(init_weights) - - self.convs2 = nn.ModuleList( - [ - weight_norm( - Conv1d( - channels, - channels, - kernel_size, - 1, - dilation=1, - padding=get_padding(kernel_size, 1), - ) - ), - weight_norm( - Conv1d( - channels, - channels, - kernel_size, - 1, - dilation=1, - padding=get_padding(kernel_size, 1), - ) - ), - weight_norm( - Conv1d( - channels, - channels, - kernel_size, - 1, - dilation=1, - 
padding=get_padding(kernel_size, 1), - ) - ), - ] - ) - self.convs2.apply(init_weights) - - def forward(self, x, x_mask=None): - for c1, c2 in zip(self.convs1, self.convs2): - xt = F.leaky_relu(x, LRELU_SLOPE) - if x_mask is not None: - xt = xt * x_mask - xt = c1(xt) - xt = F.leaky_relu(xt, LRELU_SLOPE) - if x_mask is not None: - xt = xt * x_mask - xt = c2(xt) - x = xt + x - if x_mask is not None: - x = x * x_mask - return x - - def remove_weight_norm(self): - for l in self.convs1: - remove_weight_norm(l) - for l in self.convs2: - remove_weight_norm(l) - - -class ResBlock2(torch.nn.Module): - def __init__(self, channels, kernel_size=3, dilation=(1, 3)): - super(ResBlock2, self).__init__() - self.convs = nn.ModuleList( - [ - weight_norm( - Conv1d( - channels, - channels, - kernel_size, - 1, - dilation=dilation[0], - padding=get_padding(kernel_size, dilation[0]), - ) - ), - weight_norm( - Conv1d( - channels, - channels, - kernel_size, - 1, - dilation=dilation[1], - padding=get_padding(kernel_size, dilation[1]), - ) - ), - ] - ) - self.convs.apply(init_weights) - - def forward(self, x, x_mask=None): - for c in self.convs: - xt = F.leaky_relu(x, LRELU_SLOPE) - if x_mask is not None: - xt = xt * x_mask - xt = c(xt) - x = xt + x - if x_mask is not None: - x = x * x_mask - return x - - def remove_weight_norm(self): - for l in self.convs: - remove_weight_norm(l) - - -class Log(nn.Module): - def forward(self, x, x_mask, reverse=False, **kwargs): - if not reverse: - y = torch.log(torch.clamp_min(x, 1e-5)) * x_mask - logdet = torch.sum(-y, [1, 2]) - return y, logdet - else: - x = torch.exp(x) * x_mask - return x - - -class Flip(nn.Module): - def forward(self, x, *args, reverse=False, **kwargs): - x = torch.flip(x, [1]) - if not reverse: - logdet = torch.zeros(x.size(0)).to(dtype=x.dtype, device=x.device) - return x, logdet - else: - return x - - -class ElementwiseAffine(nn.Module): - def __init__(self, channels): - super().__init__() - self.channels = channels - self.m = 
nn.Parameter(torch.zeros(channels, 1)) - self.logs = nn.Parameter(torch.zeros(channels, 1)) - - def forward(self, x, x_mask, reverse=False, **kwargs): - if not reverse: - y = self.m + torch.exp(self.logs) * x - y = y * x_mask - logdet = torch.sum(self.logs * x_mask, [1, 2]) - return y, logdet - else: - x = (x - self.m) * torch.exp(-self.logs) * x_mask - return x - - -class ResidualCouplingLayer(nn.Module): - def __init__( - self, - channels, - hidden_channels, - kernel_size, - dilation_rate, - n_layers, - p_dropout=0, - gin_channels=0, - mean_only=False, - ): - assert channels % 2 == 0, "channels should be divisible by 2" - super().__init__() - self.channels = channels - self.hidden_channels = hidden_channels - self.kernel_size = kernel_size - self.dilation_rate = dilation_rate - self.n_layers = n_layers - self.half_channels = channels // 2 - self.mean_only = mean_only - - self.pre = nn.Conv1d(self.half_channels, hidden_channels, 1) - self.enc = WN( - hidden_channels, - kernel_size, - dilation_rate, - n_layers, - p_dropout=p_dropout, - gin_channels=gin_channels, - ) - self.post = nn.Conv1d(hidden_channels, self.half_channels * (2 - mean_only), 1) - self.post.weight.data.zero_() - self.post.bias.data.zero_() - - def forward(self, x, x_mask, g=None, reverse=False): - x0, x1 = torch.split(x, [self.half_channels] * 2, 1) - h = self.pre(x0) * x_mask - h = self.enc(h, x_mask, g=g) - stats = self.post(h) * x_mask - if not self.mean_only: - m, logs = torch.split(stats, [self.half_channels] * 2, 1) - else: - m = stats - logs = torch.zeros_like(m) - - if not reverse: - x1 = m + x1 * torch.exp(logs) * x_mask - x = torch.cat([x0, x1], 1) - logdet = torch.sum(logs, [1, 2]) - return x, logdet - else: - x1 = (x1 - m) * torch.exp(-logs) * x_mask - x = torch.cat([x0, x1], 1) - return x - - def remove_weight_norm(self): - self.enc.remove_weight_norm() - - -class ConvFlow(nn.Module): - def __init__( - self, - in_channels, - filter_channels, - kernel_size, - n_layers, - num_bins=10, - 
tail_bound=5.0, - ): - super().__init__() - self.in_channels = in_channels - self.filter_channels = filter_channels - self.kernel_size = kernel_size - self.n_layers = n_layers - self.num_bins = num_bins - self.tail_bound = tail_bound - self.half_channels = in_channels // 2 - - self.pre = nn.Conv1d(self.half_channels, filter_channels, 1) - self.convs = DDSConv(filter_channels, kernel_size, n_layers, p_dropout=0.0) - self.proj = nn.Conv1d( - filter_channels, self.half_channels * (num_bins * 3 - 1), 1 - ) - self.proj.weight.data.zero_() - self.proj.bias.data.zero_() - - def forward(self, x, x_mask, g=None, reverse=False): - x0, x1 = torch.split(x, [self.half_channels] * 2, 1) - h = self.pre(x0) - h = self.convs(h, x_mask, g=g) - h = self.proj(h) * x_mask - - b, c, t = x0.shape - h = h.reshape(b, c, -1, t).permute(0, 1, 3, 2) # [b, cx?, t] -> [b, c, t, ?] - - unnormalized_widths = h[..., : self.num_bins] / math.sqrt(self.filter_channels) - unnormalized_heights = h[..., self.num_bins : 2 * self.num_bins] / math.sqrt( - self.filter_channels - ) - unnormalized_derivatives = h[..., 2 * self.num_bins :] - - x1, logabsdet = piecewise_rational_quadratic_transform( - x1, - unnormalized_widths, - unnormalized_heights, - unnormalized_derivatives, - inverse=reverse, - tails="linear", - tail_bound=self.tail_bound, - ) - - x = torch.cat([x0, x1], 1) * x_mask - logdet = torch.sum(logabsdet * x_mask, [1, 2]) - if not reverse: - return x, logdet - else: - return x diff --git a/spaces/FelixLuoX/codeformer/CodeFormer/basicsr/ops/dcn/src/deform_conv_cuda.cpp b/spaces/FelixLuoX/codeformer/CodeFormer/basicsr/ops/dcn/src/deform_conv_cuda.cpp deleted file mode 100644 index 5d9424908ed2dbd4ac3cdb98d13e09287a4d2f2d..0000000000000000000000000000000000000000 --- a/spaces/FelixLuoX/codeformer/CodeFormer/basicsr/ops/dcn/src/deform_conv_cuda.cpp +++ /dev/null @@ -1,685 +0,0 @@ -// modify from -// 
https://github.com/chengdazhi/Deformable-Convolution-V2-PyTorch/blob/mmdetection/mmdet/ops/dcn/src/deform_conv_cuda.c - -#include

- Complete seasons from 1998 to 2004 -

- Insane new circuits such as reverse/ramp tracks, Macau, ROC, Top Gear, MARS and Total F1 Circuit -

- Updated high-quality car liveries and custom liveries pack by members of the community -

- HD mirrors pack by alex2106 -

- Default setups, wheel user profiles and FFB guides -

- Latest EXE (v13) with improved performance and 3D config version (no compatibility mode needed) and automatic admin rights -

    - Optimized default profile in the SAVE folder, which must be renamed (SAVE/Rename, containing Rename.gal and Rename.PLR) -
    

- Replay pack of online fun races (2018-2021) -

- MEGA Link: https://mega.nz/file/OgIjkahI#nW3Psm7... -

- MediaFire Link: https://www.mediafire.com/file/9xo7wd... -

- Replay pack: https://drive.google.com/file/d/11PhY... -

- John the Ripper: https://www.openwall.com/john/ -

- Hashcat: https://hashcat.net/hashcat/ -

- CrackStation: https://crackstation.net/ -

- F1 Challenge 99-02 Online 2021 Edition by Bande87: This is the mod we have already mentioned in the previous section. It includes complete seasons from 1998 to 2004 and insane new circuits such as reverse/ramp tracks, Macau, ROC, Top Gear, MARS and Total F1 Circuit. -

- F1 Challenge 99-02 RH by Ripping Corporation: This is a mod that focuses on realism and historical accuracy. It includes seasons from 1988 to 2008 with realistic physics, graphics, sounds, tracks, cars, drivers, teams, rules, etc. -

- F1 Challenge 99-02 CTDP by Cars & Tracks Development Project: This is a mod that aims to provide high-quality content and features. It includes seasons from 2005 to 2009 with high-quality physics, graphics, sounds, tracks, cars, drivers, teams, rules, etc. -

- F1 Challenge 99-02 MMG by MMG Simulations: This is a mod that offers a modern and immersive experience. It includes seasons from 2007 to 2010 with modern physics, graphics, sounds, tracks, cars, drivers, teams, rules, etc. -

- Race4Sim: http://www.race4sim.com/ -

- DrivingItalia: https://www.drivingitalia.net/ -

- RaceDepartment: https://www.racedepartment.com/ -

- SimRacingWorld: http://www.simracingworld.com/ -

- You can customize and change different parts of the app, such as themes, fonts, emoji, icons, colors, etc. -

- You can freeze your last seen status and hide your online status from others. -

- You can hide double ticks, blue ticks, typing status, recording status, etc. from your contacts. -

- You can view deleted statuses and messages from others. -

- You can send more than 90 images at once and video files up to 700 MB. -

- You can increase the quality while sending images and videos. -

- You can lock your chats with a password or fingerprint. -

- You can use multiple accounts on the same device. -

- You can enjoy more privacy and security features than the official app. -

- Anti-ban feature that prevents your account from getting banned by WhatsApp. -

- New base updated to 2.21.4.22 (Play Store). -

- New emojis added from Android 11. -

- New UI for adding a status from camera screen. -

- Added option to change color of "FMWA" in home screen header. -

- Added option to change color of "typing..." in main/chat screen. -

- Added option to change color of voice note play button. -

    
- Added option to change color of forward icon in chat screen. -

- Added option to change color of forward background in chat screen. -

- Added option to change color of participants icon in group chat screen. -

- Added new attachment picker UI. -

- Added option to enable/disable new attachment UI. -

- Added animation to new attachment UI. -

    - Added 5 entry styles. -

    - Added 16 bubble styles. -

    - Added 14 tick styles. -
    

- Re-added option to save profile picture. -

    - Fixed contact online toast not showing on some devices. -
    

- Fixed status seen color not changing. -

- Fixed status downloader status not showing. -

- Fixed unread counter issue for groups. -

- Fixed hidden chats random crash when going back. -

- Fixed app not launching on some devices. -

    - Miscellaneous bug fixes and improvements. -
    

- A new event called "The Final Singularity: Solomon" that concludes the main story arc of the game. -

- A new feature called "Command Code" that allows you to enhance your Servants' cards with special effects. -

- A new feature called "Spiritron Dress" that allows you to change your Servants' outfits and appearances. -

- A new feature called "Support Setup" that allows you to set up different teams for different situations. -

- A new feature called "Auto-Select" that allows you to automatically select your Servants and Craft Essences based on your preferences. -

- Various bug fixes and performance improvements. -

- Q: Is Fate Grand Order APK 2.61 5 safe to download and install? -

- A: Yes, Fate Grand Order APK 2.61 5 is safe to download and install, as long as you use the official link we provided or another trusted source. However, you should always be careful when downloading and installing apps from unknown sources, as they may contain viruses or malware that can harm your device. -

- Q: Do I need to uninstall the previous version of Fate Grand Order before installing Fate Grand Order APK 2.61 5? -

- A: No, you do not need to uninstall the previous version of Fate Grand Order before installing Fate Grand Order APK 2.61 5. The new version will overwrite the old one and keep your data and progress intact. -

- Q: Do I need to root my device to play Fate Grand Order APK 2.61 5? -

- A: No, you do not need to root your device to play Fate Grand Order APK 2.61 5. The game does not require any special permissions or modifications to run on your device. -

- Q: How can I update Fate Grand Order APK 2.61 5 to the next version? -

- A: You can update Fate Grand Order APK 2.61 5 to the next version by downloading and installing the new APK file from the official website or another trusted source. Alternatively, you can wait for the game to notify you of an update and follow the instructions on the screen. -

- Q: How can I contact the developers or support team of Fate Grand Order APK 2.61 5? -

- A: You can contact the developers or support team of Fate Grand Order APK 2.61 5 by visiting their official website or social media pages, or by sending them an email at support@fate-go.us. -

- Invisible: This option makes you invisible to Granny, so she can't see or hear you.

- God Mode: This option makes you invincible, so you won't die even if Granny hits you or you fall from a height.

- No Clip: This option lets you walk through walls and objects, so you can explore the house without any obstacles.

- Clone Granny: This option creates a clone of Granny that follows you around and helps you escape.

- Kill Granny: This option kills Granny instantly, so you don't have to worry about her anymore.

- Freeze Granny: This option freezes Granny in place, so she can't move or attack you.

- Teleport: This option lets you teleport to any location in the house by tapping on the map.

- Speed Hack: This option increases your speed, so you can run faster and escape more easily.

- Is Granny Mod APK safe to download and install?

- Is Granny Mod APK compatible with my device?

- Can I play Granny Mod APK offline?

- Can I play Granny Mod APK with my friends?

- How can I update Granny Mod APK?

- Send end-to-end encrypted messages and files, and make voice and video calls, to anyone on the Matrix network.

- Choose where your messages are stored, or host your own server, instead of being forced to use the app's own server.

- Connect with other apps, directly or via bridges, such as WhatsApp, Signal, Telegram, Facebook Messenger, Google Hangouts, Skype, Discord, and more.

- Create an unlimited number of rooms and communities for private or public groups.

- Verify other users' devices and revoke access for lost or stolen devices.

- Create an account without a phone number, so you don't have to share those details with the outside world.

- End-to-end encryption by default: Element messenger encrypts your messages end to end using Matrix's Olm and Megolm protocols (Olm implements the Double Ratchet algorithm that Signal also uses), so only you and your intended recipients can read them. The servers can't read your messages, and neither can anyone who intercepts or hacks into your communication.

- Decentralized storage: Element messenger lets you choose where your messages are stored. You can use a free public server provided by matrix.org or element.io, or you can host your own server on your own hardware or cloud service.

- Interoperability: Element messenger works with all Matrix-based apps and can even bridge into proprietary messengers. This means you can talk to anyone, regardless of which app they use. You can also integrate bots and widgets into your rooms for extra functionality.

- Cross-platform compatibility: Element messenger is available on Android, iOS, Web, macOS, Linux, and Windows. You can use it on any of these devices and sync your messages across all platforms. You can also access it from a web browser without installing anything.

- You're in control: With Element messenger, you have full control over your data and communication. You can choose where your data lives, who can access it, and how long it stays there. You can also host your own server and customize it to your needs.

- No limits: With Element messenger, you can talk to anyone, anywhere, anytime, without restrictions or fees. You can create as many rooms and communities as you want and invite anyone to join them. You can also send files of any type without compression or loss of quality, up to the upload size limit set by your server.

- Secure and private: With Element messenger, your messages are encrypted end to end, so only you and your intended recipients can read them. You can verify other users' devices and revoke access for lost or stolen devices, and you can create an account without a phone number, so you don't have to share those details with the outside world.

- Q: How do I update Element messenger for Windows?

- A: Go to https://element.io/get-started and download the latest Windows installer. Then run it as administrator and follow the on-screen instructions.

- Q: How do I uninstall Element messenger for Windows?

- A: Go to Control Panel > Programs > Uninstall a program and find Element in the list. Then right-click it and choose Uninstall.

- Q: How do I back up my data from Element messenger for Windows?

- A: Go to Settings > Security & Privacy > Key Backup > Set up backup. Then follow the steps to create a passphrase and a recovery key. This backs up the encryption keys you need to read your encrypted messages.

- Q: How do I restore my data in Element messenger for Windows?

- A: Go to Settings > Security & Privacy > Key Backup > Restore from backup. Then enter your passphrase or recovery key and follow the steps to restore your data.

- Q: How do I contact Element support for Windows?

- A: Go to https://element.io/contact-us and fill out the form with your details and question. You can also join the #element-web:matrix.org room and ask other users and developers for help.

- Save money and power: Dedicated graphics cards are expensive and consume a lot of electricity. By playing games without one, you can save money on hardware and on your energy bills. You can also use your PC for longer without worrying about overheating or battery life.

- Enjoy retro and indie games: A dedicated graphics card is not necessary for older or simpler games with low graphics requirements. These games often focus more on gameplay, story, and atmosphere than on visuals. You can enjoy classics like Doom, Tetris, or Pac-Man, or discover indie gems like Celeste, Hollow Knight, or Cuphead.

- Improve your skills and creativity: Games that run without a graphics card tend to rely more on imagination, logic, and strategy than on reflexes, aim, or muscle memory. You can also learn about game design, programming, or modding by playing or creating such games.

- Use Steam and other platforms: Steam is one of the most popular platforms for downloading PC games, and it has thousands of games that can run without a graphics card. You can browse by genre, price, popularity, or user reviews, or use the Steam Curator feature to find recommendations from experts, influencers, or communities. You can also use other platforms like GOG, itch.io, or Game Jolt.

- Check the system requirements: Before you download a game, check its system requirements and compare them with your PC's specifications. Tools like Can You Run It or PCGameBenchmark can scan your PC automatically and tell you whether it can run a game. You can also look for games with low minimum requirements for CPU speed, RAM, disk space, and DirectX version.

- Adjust the settings and optimize your PC: Even without a graphics card, you can improve gaming performance by adjusting game settings and optimizing your PC. Lower a game's resolution, graphics quality, or frame rate to make it run more smoothly. You can also close unnecessary programs and background processes, update your drivers, or use game booster software to free up resources.

- Q1: Can I play online games without a graphics card?

-A1: Yes, as long as the games have low graphics requirements. Examples of online games you can play without a dedicated graphics card include Brawlhalla, Super Animal Royale, Among Us, and League of Legends.

- Q2: What are some other ways to improve my gaming performance without a graphics card?

-A2: You can upgrade your RAM, CPU, or SSD, clean dust and malware from your PC, or use an external cooling pad or fan.

- Q3: Can I upgrade the graphics card in my laptop?

-A3: It depends on your laptop model and specifications. Some laptops have integrated graphics that are soldered to the motherboard and cannot be upgraded. Some laptops have discrete graphics cards that are removable and replaceable. Some laptops have a Thunderbolt 3 (USB-C) port that lets you connect an external GPU enclosure. Check your laptop's manual or the manufacturer's website to see whether your laptop supports graphics upgrades.

- Q4: What are some of the best graphics cards for gaming on a budget?

-A4: Some of the best budget graphics cards for gaming are the Nvidia GeForce GTX 1650 Super, AMD Radeon RX 5500 XT, Nvidia GeForce GTX 1660 Ti, and AMD Radeon RX 5600 XT. These cards can run most modern games at high settings and 1080p resolution with decent frame rates.

- Q5: What are some upcoming games that will run without a graphics card?

-A5: Some upcoming games that will run without a dedicated graphics card are Hollow Knight: Silksong, Cuphead: The Delicious Last Course, Deltarune Chapter 2, Psychonauts 2, and Age of Empires IV.

-

Advantages of Clash of Clans

-Clash of Clans has many advantages. Some of them are:

--

-

Benefits of Downloading the Latest Clash of Clans APK

-You can download Clash of Clans officially from the Google Play Store or the App Store. However, by downloading the latest Clash of Clans APK, you can enhance the game even further. APK stands for Android Package, the file format that contains the game's installation files. The benefits of downloading the latest Clash of Clans APK are:

--

-

Requirements for Downloading the Latest Clash of Clans APK

-To download the latest Clash of Clans APK, you need the following:

--

-

Steps to Download the Latest Clash of Clans APK

-Follow these steps to download the latest Clash of Clans APK:


-

-

during installation, it may ask you for some permissions. Grant these permissions and complete the installation. You can now start playing the latest Clash of Clans APK on your device.

-What to Watch Out for After Downloading the Latest Clash of Clans APK

-After downloading the latest Clash of Clans APK, pay attention to the following:

-Is the Latest Clash of Clans APK Safe?

-The latest Clash of Clans APK may or may not be safe, depending on the site and the file you download. Some sites and files may contain viruses, malware, spyware, or other harmful software. When downloading the latest Clash of Clans APK, it is therefore important to choose reliable, up-to-date sites, to check the file's size, user comments, and ratings before downloading, to keep an antivirus program on your device, and to scan the downloaded file with it.

-Does the Latest Clash of Clans APK Violate the Game's Rules?

-The latest Clash of Clans APK may or may not violate the game's rules, depending on the contents of the file you download. Some files stay faithful to the original version of the game, while others modify it and may contain cheats, mods, or hacks. If you use such a file, you are breaking the game's rules and risk having your account banned or deleted by the game's developer, Supercell. You are therefore advised to be careful when downloading the latest Clash of Clans APK, to read the game's official policies and terms of service, and to play the game fairly and for fun.

-Enjoy the Game with the Latest Clash of Clans APK

-Here are some things you can do to enjoy the game with the latest Clash of Clans APK: