Once downloaded, run the TeamViewer setup and follow the on-screen instructions to install it on your PC. When asked whether you will run it for personal or commercial use, be sure to select personal use only. This should fix the "TeamViewer trial version expired" issue on Windows 10.

-I am a long time TeamViewer user. I use it personally (for free) and have used the commercial version at each client.

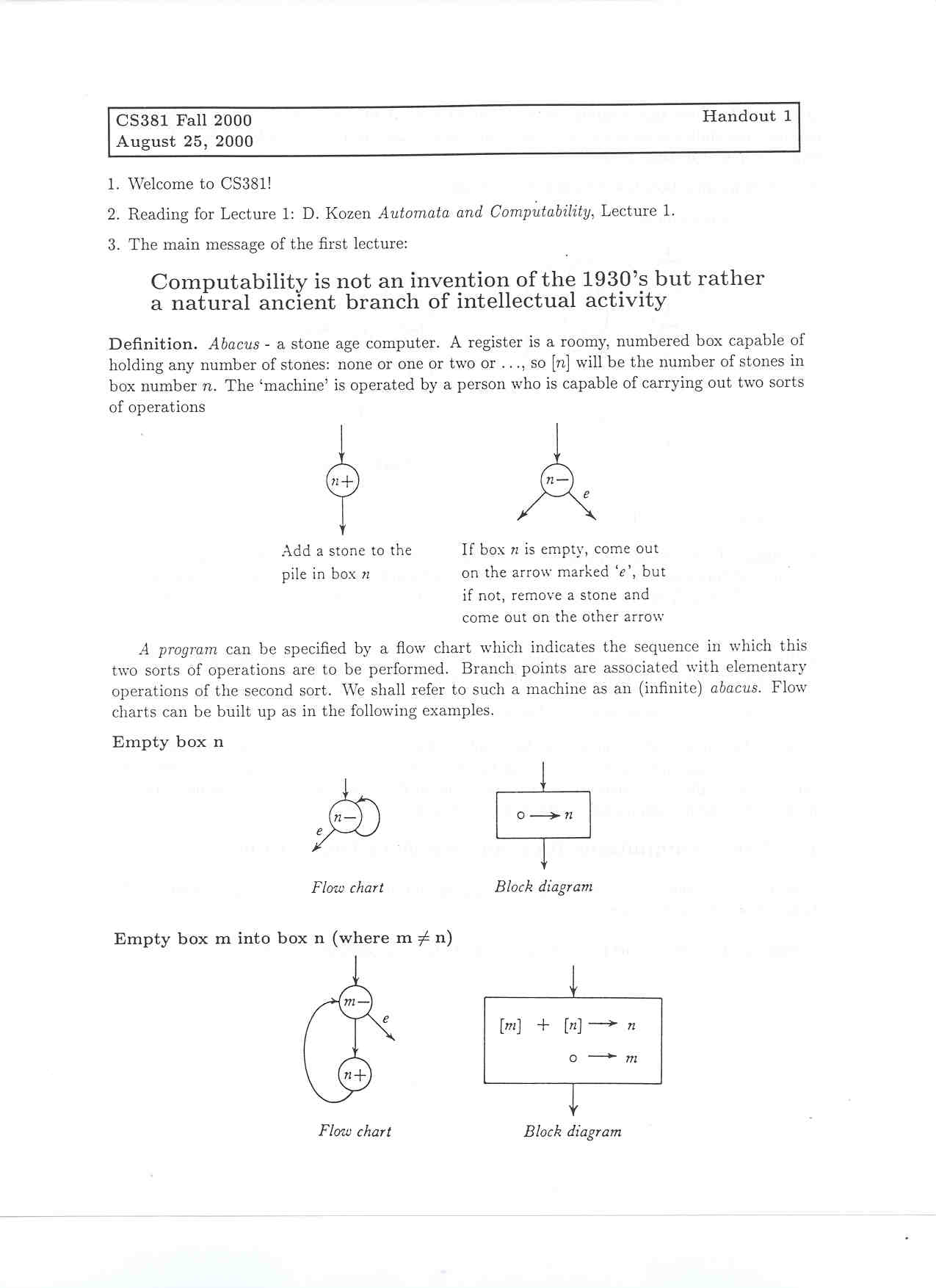

All of a sudden, one of my personal machines could not be connected to and said "your trial expired" or similar. I thought I had to reload, but then a second personal machine did the same. There was no help, and I think they are changing their policy.

Anyway, I am testing AnyDesk and it is working great so far. AnyDesk is being developed by some ex-TeamViewer people. It says it works on all platforms, and I have been trying it on Ubuntu, W10, and W7. I will test on Mac, iOS and Android tonight. Enjoy.

-You might see the annoying warning shown above when you try to connect with your friends through TeamViewer. The instructions below are for advanced users only. We are not responsible for any data loss that occurs when you follow the steps, and we always recommend taking a full registry backup before proceeding.

-The "TeamViewer trial expired" error occurs because you selected Company / Commercial use when installing TeamViewer. Note that you should select only Personal / Non-commercial use.

-Once the trial period has expired, the error persists even if you uninstall TeamViewer and reinstall it, because your computer's MAC address has already been stored on the vendor's site. Your TeamViewer ID is generated from this MAC address. So, to fix the "TeamViewer trial expired" error, you need to change the MAC address or reset the TeamViewer ID.

-TVTools AlterID is a lightweight Windows program that allows you to reset the ID of TeamViewer software. Since there is no installation involved, you are able to drop the executable file in any part of the hard drive and click it to run. There is also the possibility to save the utility to a USB stick or similar storage device as well as to run it on any computer with minimum effort.

-The utility enables you to remove various restrictions. The overall procedure is very straightforward. All you need to do is to install the app on your computer, specify the path to the TeamViewer directory and select the desired settings. You can pick from three available options, such as a 7-day trial with full features, a limited mode with enabled advertisements and return to the original ID received when you started remote control software for the first time.

-Can't store a directory of computers that I connect to. Cumbersome connection requirements for unattended access: why not simply use a computer ID and a high-strength password? No option to start up at computer start so that, if the computer resets (power outage), I don't lose access to the remote PC. Run it as a service.

-You have to actually install the product, and it is not just a one-click install: you need a full installation to remote into a PC. I'm using TeamViewer and ScreenConnect, and I far prefer these products as they are easier for my customers to run. ScreenConnect doesn't need the user to read out 9 digits and a code. As an idea, you could adopt an administrator or team feature, so that there is a special client for the end user that they just click and run (NO INSTALL). It would then pop up on the supporter's desktop that a person needs help, and with one click you are connected.

-I've been looking for a free piece of software to offer support to my customers, and this fits the bill. It is as good as TeamViewer, LogMeIn, etc., and it is freeware. The others are overpriced for small businesses. It does everything I want it to do, and its security features give the support customer confidence.

-Comments: Amazing product, I was looking for something like this... I mean, when you compare this to something like TeamViewer, which is so astronomically overpriced, this is simply a breath of fresh air.

Watch the movie Children of War on the free film streaming website www.onlinemovieshindi.com (new web URL: ). Online streaming or downloading the video file easily. Watch or download Children of War online movie Hindi dubbed here.

-Dear visitor, you can download the movie Children of War on this onlinemovieshindi website. It will download the HD video file by just clicking on the button below. The video file is the same file for the online streaming above when you directly click to play. The decision to download is entirely your choice and your personal responsibility when dealing with the legality of file ownership

-Go Into the Story is the official blog for The Blacklist, the screenwriting community famous for its annual top ten list of unproduced scripts. One useful feature of Go Into the Story is its bank of downloadable movie scripts.

-/*

- *

- * This file is part of FFmpeg.

- *

- * FFmpeg is free software; you can redistribute it and/or

- * modify it under the terms of the GNU Lesser General Public

- * License as published by the Free Software Foundation; either

- * version 2.1 of the License, or (at your option) any later version.

- *

- * FFmpeg is distributed in the hope that it will be useful,

- * but WITHOUT ANY WARRANTY; without even the implied warranty of

- * MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU

- * Lesser General Public License for more details.

- *

- * You should have received a copy of the GNU Lesser General Public

- * License along with FFmpeg; if not, write to the Free Software

- * Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA

- */

-

-static const uint8_t huff_iid_df1_bits[] = {

- 18, 18, 18, 18, 18, 18, 18, 18, 18, 17, 18, 17, 17, 16, 16, 15, 14, 14,

- 13, 12, 12, 11, 10, 10, 8, 7, 6, 5, 4, 3, 1, 3, 4, 5, 6, 7,

- 8, 9, 10, 11, 11, 12, 13, 14, 14, 15, 16, 16, 17, 17, 18, 17, 18, 18,

- 18, 18, 18, 18, 18, 18, 18,

-};

-

-static const uint32_t huff_iid_df1_codes[] = {

- 0x01FEB4, 0x01FEB5, 0x01FD76, 0x01FD77, 0x01FD74, 0x01FD75, 0x01FE8A,

- 0x01FE8B, 0x01FE88, 0x00FE80, 0x01FEB6, 0x00FE82, 0x00FEB8, 0x007F42,

- 0x007FAE, 0x003FAF, 0x001FD1, 0x001FE9, 0x000FE9, 0x0007EA, 0x0007FB,

- 0x0003FB, 0x0001FB, 0x0001FF, 0x00007C, 0x00003C, 0x00001C, 0x00000C,

- 0x000000, 0x000001, 0x000001, 0x000002, 0x000001, 0x00000D, 0x00001D,

- 0x00003D, 0x00007D, 0x0000FC, 0x0001FC, 0x0003FC, 0x0003F4, 0x0007EB,

- 0x000FEA, 0x001FEA, 0x001FD6, 0x003FD0, 0x007FAF, 0x007F43, 0x00FEB9,

- 0x00FE83, 0x01FEB7, 0x00FE81, 0x01FE89, 0x01FE8E, 0x01FE8F, 0x01FE8C,

- 0x01FE8D, 0x01FEB2, 0x01FEB3, 0x01FEB0, 0x01FEB1,

-};

-

-static const uint8_t huff_iid_dt1_bits[] = {

- 16, 16, 16, 16, 16, 16, 16, 16, 16, 15, 15, 15, 15, 15, 15, 14, 14, 13,

- 13, 13, 12, 12, 11, 10, 9, 9, 7, 6, 5, 3, 1, 2, 5, 6, 7, 8,

- 9, 10, 11, 11, 12, 12, 13, 13, 14, 14, 15, 15, 15, 15, 16, 16, 16, 16,

- 16, 16, 16, 16, 16, 16, 16,

-};

-

-static const uint16_t huff_iid_dt1_codes[] = {

- 0x004ED4, 0x004ED5, 0x004ECE, 0x004ECF, 0x004ECC, 0x004ED6, 0x004ED8,

- 0x004F46, 0x004F60, 0x002718, 0x002719, 0x002764, 0x002765, 0x00276D,

- 0x0027B1, 0x0013B7, 0x0013D6, 0x0009C7, 0x0009E9, 0x0009ED, 0x0004EE,

- 0x0004F7, 0x000278, 0x000139, 0x00009A, 0x00009F, 0x000020, 0x000011,

- 0x00000A, 0x000003, 0x000001, 0x000000, 0x00000B, 0x000012, 0x000021,

- 0x00004C, 0x00009B, 0x00013A, 0x000279, 0x000270, 0x0004EF, 0x0004E2,

- 0x0009EA, 0x0009D8, 0x0013D7, 0x0013D0, 0x0027B2, 0x0027A2, 0x00271A,

- 0x00271B, 0x004F66, 0x004F67, 0x004F61, 0x004F47, 0x004ED9, 0x004ED7,

- 0x004ECD, 0x004ED2, 0x004ED3, 0x004ED0, 0x004ED1,

-};

-

-static const uint8_t huff_iid_df0_bits[] = {

- 17, 17, 17, 17, 16, 15, 13, 10, 9, 7, 6, 5, 4, 3, 1, 3, 4, 5,

- 6, 6, 8, 11, 13, 14, 14, 15, 17, 18, 18,

-};

-

-static const uint32_t huff_iid_df0_codes[] = {

- 0x01FFFB, 0x01FFFC, 0x01FFFD, 0x01FFFA, 0x00FFFC, 0x007FFC, 0x001FFD,

- 0x0003FE, 0x0001FE, 0x00007E, 0x00003C, 0x00001D, 0x00000D, 0x000005,

- 0x000000, 0x000004, 0x00000C, 0x00001C, 0x00003D, 0x00003E, 0x0000FE,

- 0x0007FE, 0x001FFC, 0x003FFC, 0x003FFD, 0x007FFD, 0x01FFFE, 0x03FFFE,

- 0x03FFFF,

-};

-

-static const uint8_t huff_iid_dt0_bits[] = {

- 19, 19, 19, 20, 20, 20, 17, 15, 12, 10, 8, 6, 4, 2, 1, 3, 5, 7,

- 9, 11, 13, 14, 17, 19, 20, 20, 20, 20, 20,

-};

-

-static const uint32_t huff_iid_dt0_codes[] = {

- 0x07FFF9, 0x07FFFA, 0x07FFFB, 0x0FFFF8, 0x0FFFF9, 0x0FFFFA, 0x01FFFD,

- 0x007FFE, 0x000FFE, 0x0003FE, 0x0000FE, 0x00003E, 0x00000E, 0x000002,

- 0x000000, 0x000006, 0x00001E, 0x00007E, 0x0001FE, 0x0007FE, 0x001FFE,

- 0x003FFE, 0x01FFFC, 0x07FFF8, 0x0FFFFB, 0x0FFFFC, 0x0FFFFD, 0x0FFFFE,

- 0x0FFFFF,

-};

-

-static const uint8_t huff_icc_df_bits[] = {

- 14, 14, 12, 10, 7, 5, 3, 1, 2, 4, 6, 8, 9, 11, 13,

-};

-

-static const uint16_t huff_icc_df_codes[] = {

- 0x3FFF, 0x3FFE, 0x0FFE, 0x03FE, 0x007E, 0x001E, 0x0006, 0x0000,

- 0x0002, 0x000E, 0x003E, 0x00FE, 0x01FE, 0x07FE, 0x1FFE,

-};

-

-static const uint8_t huff_icc_dt_bits[] = {

- 14, 13, 11, 9, 7, 5, 3, 1, 2, 4, 6, 8, 10, 12, 14,

-};

-

-static const uint16_t huff_icc_dt_codes[] = {

- 0x3FFE, 0x1FFE, 0x07FE, 0x01FE, 0x007E, 0x001E, 0x0006, 0x0000,

- 0x0002, 0x000E, 0x003E, 0x00FE, 0x03FE, 0x0FFE, 0x3FFF,

-};

-

-static const uint8_t huff_ipd_df_bits[] = {

- 1, 3, 4, 4, 4, 4, 4, 4,

-};

-

-static const uint8_t huff_ipd_df_codes[] = {

- 0x01, 0x00, 0x06, 0x04, 0x02, 0x03, 0x05, 0x07,

-};

-

-static const uint8_t huff_ipd_dt_bits[] = {

- 1, 3, 4, 5, 5, 4, 4, 3,

-};

-

-static const uint8_t huff_ipd_dt_codes[] = {

- 0x01, 0x02, 0x02, 0x03, 0x02, 0x00, 0x03, 0x03,

-};

-

-static const uint8_t huff_opd_df_bits[] = {

- 1, 3, 4, 4, 5, 5, 4, 3,

-};

-

-static const uint8_t huff_opd_df_codes[] = {

- 0x01, 0x01, 0x06, 0x04, 0x0F, 0x0E, 0x05, 0x00,

-};

-

-static const uint8_t huff_opd_dt_bits[] = {

- 1, 3, 4, 5, 5, 4, 4, 3,

-};

-

-static const uint8_t huff_opd_dt_codes[] = {

- 0x01, 0x02, 0x01, 0x07, 0x06, 0x00, 0x02, 0x03,

-};

-

-static const int8_t huff_offset[] = {

- 30, 30,

- 14, 14,

- 7, 7,

- 0, 0,

- 0, 0,

-};

-

-///Table 8.48

-const int8_t ff_k_to_i_20[] = {

- 1, 0, 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 14, 15,

- 15, 15, 16, 16, 16, 16, 17, 17, 17, 17, 17, 18, 18, 18, 18, 18, 18, 18, 18,

- 18, 18, 18, 18, 19, 19, 19, 19, 19, 19, 19, 19, 19, 19, 19, 19, 19, 19, 19,

- 19, 19, 19, 19, 19, 19, 19, 19, 19, 19, 19, 19, 19, 19

-};

-///Table 8.49

-const int8_t ff_k_to_i_34[] = {

- 0, 1, 2, 3, 4, 5, 6, 6, 7, 2, 1, 0, 10, 10, 4, 5, 6, 7, 8,

- 9, 10, 11, 12, 9, 14, 11, 12, 13, 14, 15, 16, 13, 16, 17, 18, 19, 20, 21,

- 22, 22, 23, 23, 24, 24, 25, 25, 26, 26, 27, 27, 27, 28, 28, 28, 29, 29, 29,

- 30, 30, 30, 31, 31, 31, 31, 32, 32, 32, 32, 33, 33, 33, 33, 33, 33, 33, 33,

- 33, 33, 33, 33, 33, 33, 33, 33, 33, 33, 33, 33, 33, 33, 33

-};
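The paired `*_bits` and `*_codes` arrays above give, for each table index, a code length and the code value. As a rough illustration of how such a pair can be read back, here is a minimal sketch that matches a bit string against the `huff_icc_df` tables by brute force. The helper name `icc_df_decode_index`, the MSB-first bit order, and the use of a character string as input are assumptions made for this example. FFmpeg itself builds lookup tables for decoding rather than searching linearly.

```c
#include <stddef.h>
#include <stdint.h>

/* Values copied from huff_icc_df_bits / huff_icc_df_codes above. */
static const uint8_t icc_df_bits[15] = {
    14, 14, 12, 10, 7, 5, 3, 1, 2, 4, 6, 8, 9, 11, 13,
};
static const uint16_t icc_df_codes[15] = {
    0x3FFF, 0x3FFE, 0x0FFE, 0x03FE, 0x007E, 0x001E, 0x0006, 0x0000,
    0x0002, 0x000E, 0x003E, 0x00FE, 0x01FE, 0x07FE, 0x1FFE,
};

/*
 * Hypothetical helper (not part of FFmpeg): read '0'/'1' characters from
 * bitstr, most significant bit first, and return the table index whose
 * (length, code) pair matches the bits read so far, or -1 if none matches.
 * *consumed receives the number of bits used (0 on failure).
 */
static int icc_df_decode_index(const char *bitstr, int *consumed)
{
    uint32_t acc = 0;

    for (int len = 1; len <= 14; len++) {   /* 14 is the longest code */
        char c = bitstr[len - 1];
        if (c != '0' && c != '1')
            break;                          /* ran out of input bits */
        acc = (acc << 1) | (uint32_t)(c - '0');

        /* Linear search: acceptable for a 15-entry illustration. */
        for (size_t i = 0; i < 15; i++) {
            if (icc_df_bits[i] == len && icc_df_codes[i] == acc) {
                *consumed = len;
                return (int)i;
            }
        }
    }
    *consumed = 0;
    return -1;
}
```

With these tables, the bit strings `0`, `10` and `110` resolve to indices 7, 8 and 6. If the value 7 in `huff_offset` applies to the ICC tables, as the table order suggests, subtracting it gives deltas of 0, +1 and -1.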

diff --git a/spaces/congsaPfin/Manga-OCR/logs/Bet on Football with M-Bet Plus App - Download the Latest Version for Free.md b/spaces/congsaPfin/Manga-OCR/logs/Bet on Football with M-Bet Plus App - Download the Latest Version for Free.md

deleted file mode 100644

index 43f6b83843bb5efd17696806e51c2e736cbac4da..0000000000000000000000000000000000000000

--- a/spaces/congsaPfin/Manga-OCR/logs/Bet on Football with M-Bet Plus App - Download the Latest Version for Free.md

+++ /dev/null

@@ -1,110 +0,0 @@

-

-M-Bet Plus App: How to Download and Install the Best Betting App in Tanzania

-If you are looking for a reliable, convenient, and rewarding way to bet on your favorite sports in Tanzania, you should consider downloading the M-Bet Plus App. This is a mobile application that allows you to access all the features and services of M-Bet, one of the leading online sports betting platforms in Tanzania. With M-Bet Plus App, you can enjoy betting on various sports, such as football, basketball, tennis, rugby, cricket, and more. You can also take advantage of the generous bonuses and promotions that M-Bet offers to its customers. Whether you have an Android or iOS device, you can easily download and install the M-Bet Plus App on your smartphone or tablet. In this article, we will show you how to do that, as well as explain the features and benefits of this amazing app.

- Features and Benefits of M-Bet Plus App

-M-Bet Plus App is designed to provide you with a seamless and enjoyable betting experience on your mobile device. Here are some of the features and benefits that you can expect from this app:

-

-- User-friendly interface and design: The app has a simple and elegant design that makes it easy to navigate and use. You can find all the options and functions that you need with just a few taps. The app also has a dark mode that reduces eye strain and saves battery life.

-- Wide range of sports and markets: The app covers a variety of sports and markets that cater to different preferences and tastes. You can bet on popular sports like football, basketball, tennis, rugby, cricket, etc., as well as niche sports like darts, snooker, volleyball, etc. You can also bet on different types of markets, such as match result, over/under, handicap, correct score, etc.

-- Fast and secure payments: The app supports multiple payment methods that are fast and secure. You can deposit and withdraw money using mobile money services like Tigo Pesa, Airtel Money, Vodacom M-Pesa, Halo Pesa, etc. You can also use bank cards like Visa or Mastercard. The app uses SSL encryption technology to protect your personal and financial information.

-- Generous bonuses and promotions: The app offers various bonuses and promotions that can boost your winnings and enhance your betting experience. For example, you can get a 100% welcome bonus up to 10,000 TZS when you make your first deposit. You can also get a 10% cashback bonus every week if you lose more than 10 bets. Moreover, you can participate in the M-Bet Perfect 12 jackpot, where you can win up to 200 million TZS by predicting the outcome of 12 matches.

-- Live betting and virtual sports: The app allows you to bet on live events that are happening in real time. You can follow the action and place your bets as the game unfolds. You can also bet on virtual sports, which are simulated games that are based on random outcomes. You can bet on virtual football, horse racing, dog racing, etc.

-- Customer support and responsible gambling: The app has a dedicated customer support team that is available 24/7 to assist you with any issues or queries that you may have. You can contact them via phone, email, or live chat. The app also promotes responsible gambling and provides tools and resources to help you gamble safely. You can set limits on your deposits, bets, and losses, and you can self-exclude from the app if you feel that you have a gambling problem.

-

- How to Download M-Bet Plus App for Android Devices

-If you have an Android device, you can download and install the M-Bet Plus App by following these simple steps:

-

-- Visit the official M-Bet website and click on "Download Android App" at the top of the homepage.

-- Tap on "Download Our App Free" and then "Download" to start the download process. You may see a warning message that says "This type of file can harm your device". Ignore it and tap on "OK".

-- Once the apk file is downloaded, open it and allow installation from unknown sources. You may need to go to your device settings and enable this option.

-- Follow the instructions to install the app on your device. It may take a few minutes to complete.

-

- How to Download M-Bet Plus App for iOS Devices

-If you have an iOS device, you can download and install the M-Bet Plus App by following these simple steps:

-

-- Visit the official M-Bet website and click on "Download iOS App" at the top of the homepage.

-- Tap on "Download Our App Free" and then "Download" to start the download process. You may see a pop-up message that says "M-Bet would like to install 'M-Bet Plus'". Tap on "Install".

-- Once the app is downloaded, open it and trust the developer in your device settings. You may need to go to Settings > General > Device Management > Trust 'M-Bet'.

-- Follow the instructions to install the app on your device. It may take a few minutes to complete.

-

- How to Use M-Bet Plus App

-Once you have downloaded and installed the M-Bet Plus App on your device, you can start using it by following these simple steps:

-

-- Launch the app and log in with your existing account or register a new one. You will need to provide some basic information, such as your name, phone number, email address, etc.

-- Choose your preferred sport and market from the menu or search bar. You can browse through different categories, such as popular, today, tomorrow, etc. You can also filter by country, league, or team.

-- Place your bets by selecting the odds and entering the stake amount. You can place single or multiple bets, as well as pre-match or live bets. You can also use the quick bet feature to place your bets faster.

-- Confirm your bets and wait for the results. You can check your bet history and status in the app. You can also cash out your bets before the event ends if you want to secure a profit or minimize a loss.

-

- Comparison Between M-Bet Plus App and M-Bet Classic App

-M-Bet Plus App is not the only mobile application that M-Bet offers to its customers. There is also another app called M-Bet Classic App, which is an older version of the app. Here is a table showing the differences and similarities between the two apps in terms of features, design, functionality, etc.

-

-| Aspect | M-Bet Plus App | M-Bet Classic App |
-|---|---|---|
-| Version | Newer and improved | Older and outdated |
-| Features and options | More | Fewer |
-| Design and interface | Better | Basic |
-| Performance | Faster and smoother | Slower and less stable |
-| Dark mode | Available | Not available |
-| Compatibility | Android and iOS devices | Android devices only |

-

- Conclusion

-M-Bet Plus App is a great choice for anyone who wants to bet on sports in Tanzania. It has many features and benefits that make it stand out from other betting apps. It is easy to download and install, user-friendly, secure, and rewarding. It also offers a wide range of sports and markets, live betting and virtual sports, bonuses and promotions, customer support and responsible gambling. Whether you are a beginner or an expert, you will find something that suits your needs and preferences. So, what are you waiting for? Download the M-Bet Plus App today and start betting on your favorite sports!

- FAQs

-Here are some of the frequently asked questions about M-Bet Plus App:

-

-- Q: How can I contact M-Bet customer support?

-A: You can contact M-Bet customer support via phone, email, or live chat. The phone number is +255 677 044 444, the email address is info@m-bet.co.tz, and the live chat option is available on the app or website.

-- Q: How can I claim my welcome bonus?

-A: You can claim your welcome bonus by making your first deposit of at least 1,000 TZS. You will receive a 100% bonus up to 10,000 TZS in your account. You will need to wager the bonus amount 4 times on odds of 2.0 or higher before you can withdraw it.

-- Q: How can I participate in the M-Bet Perfect 12 jackpot?

-A: You can participate in the M-Bet Perfect 12 jackpot by predicting the outcome of 12 matches that are selected by M-Bet. You can choose between home win, draw, or away win for each match. The entry fee is 1,000 TZS and the jackpot prize is 200 million TZS. You can also win consolation prizes if you get 11, 10, or 9 correct predictions.

-- Q: How can I cash out my bets?

-A: You can cash out your bets before the event ends if you want to secure a profit or minimize a loss. You can do this by going to your bet history and selecting the cash out option. The amount you will receive depends on the current odds and the status of your bet.

-- Q: How can I gamble responsibly?

-A: You can gamble responsibly by setting limits on your deposits, bets, and losses, and by self-excluding from the app if you feel that you have a gambling problem. You can also seek help from professional organizations like GamCare or Gamblers Anonymous if you need support or advice.
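The welcome-bonus terms stated in the FAQ above (a 100% match on a first deposit of at least 1,000 TZS, capped at 10,000 TZS, with the bonus to be wagered 4 times) reduce to simple arithmetic. The sketch below works it through. The function names are hypothetical, amounts are whole TZS, and any rules not stated in the FAQ (rounding, expiry, the odds check) are left out.

```c
/* All amounts are in whole TZS; figures come from the FAQ text above. */

/* Hypothetical helper: bonus credited for a given first deposit. */
static long welcome_bonus(long first_deposit)
{
    if (first_deposit < 1000)       /* below the stated minimum deposit */
        return 0;
    /* 100% match, capped at 10,000 TZS */
    return first_deposit < 10000 ? first_deposit : 10000;
}

/* Hypothetical helper: total stake needed before the bonus can be withdrawn. */
static long required_wagering(long bonus)
{
    return 4 * bonus;               /* "wager the bonus amount 4 times" */
}
```

Under these assumptions, a first deposit of 4,000 TZS yields a 4,000 TZS bonus and a 16,000 TZS wagering requirement, while any deposit of 10,000 TZS or more yields the maximum 10,000 TZS bonus and a 40,000 TZS requirement.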

-

-

-

\ No newline at end of file

diff --git a/spaces/congsaPfin/Manga-OCR/logs/Enjoy the Best of PES 2018 with Konami APK and OBB Data Files.md b/spaces/congsaPfin/Manga-OCR/logs/Enjoy the Best of PES 2018 with Konami APK and OBB Data Files.md

deleted file mode 100644

index 797b61a16c9d68ec94d562cd366244e5829b8417..0000000000000000000000000000000000000000

--- a/spaces/congsaPfin/Manga-OCR/logs/Enjoy the Best of PES 2018 with Konami APK and OBB Data Files.md

+++ /dev/null

@@ -1,173 +0,0 @@

-

-How to Download and Play PES 2018 Pro Evolution Soccer on Your Android Device

-If you are a fan of soccer games, you might have heard of PES 2018 Pro Evolution Soccer, one of the most popular and realistic soccer games on mobile devices. In this article, we will show you how to download and play PES 2018 Pro Evolution Soccer on your Android device, as well as some tips and tricks to help you enjoy the game more.

- Introduction

-PES 2018 Pro Evolution Soccer is a soccer game developed by Konami Digital Entertainment, the same company behind the famous Metal Gear Solid series. It is the latest entry in the PRO EVOLUTION SOCCER series, which has been running since 2001.

-PES 2018 Pro Evolution Soccer features world famous national and club teams, such as Brazil, France, Japan, FC Barcelona, and Liverpool FC. You can build your own squad from over 10,000 actual players, including legends like Beckham, Maradona, and Zico. You can also live out your childhood soccer fantasy by taking control of these legendary players and creating your dream team.

-PES 2018 Pro Evolution Soccer also boasts of stunning graphics, realistic animations, and smooth gameplay. You can experience authentic soccer on the go by playing as teams from all over the world, with natural player movements, precision passing, and in-depth tactics. You can also face off against your friends anytime, anywhere, by using the online or offline modes.

-Whether you are a casual or hardcore soccer fan, PES 2018 Pro Evolution Soccer will surely satisfy your soccer cravings. It is a game that you can play for hours without getting bored.

- How to Download PES 2018 Pro Evolution Soccer on Your Android Device

-Before you can play PES 2018 Pro Evolution Soccer on your Android device, you need to make sure that your device meets the system requirements and compatibility of the game. According to the official website, you need to have an Android device with at least:

-

-- Android version 5.0 or higher

-- 1.5 GB of RAM or more

-- 1.4 GB of free storage space or more

-- A stable internet connection (Wi-Fi is recommended)

-

-If your device meets these requirements, you can proceed to download PES 2018 Pro Evolution Soccer apk file from a trusted source. One of the sources that we recommend is CNET Download, which is a reputable website that offers safe and secure downloads of various software and apps.

-To download PES 2018 Pro Evolution Soccer apk file from CNET Download, follow these steps:

-

-- Go to the CNET Download website using your browser.

-- Type "PES 2018 Pro Evolution Soccer" in the search box and hit enter.

-- Click on the "PES 2018 Pro Evolution Soccer" result from the list.

-- Click on the "Download Now" button and wait for the apk file to be downloaded to your device.

-

-Alternatively, you can also download PES 2018 Pro Evolution Soccer apk file from other sources, such as APKPure or APKMirror. However, you need to be careful and make sure that the apk file is free from viruses and malware. You can use an antivirus app to scan the apk file before installing it.

-Once you have downloaded PES 2018 Pro Evolution Soccer apk file, you need to install it on your Android device. To do this, follow these steps:

-

-- Go to your device's settings and enable the option to install apps from unknown sources. This will allow you to install apps that are not from the Google Play Store.

-- Locate the PES 2018 Pro Evolution Soccer apk file on your device using a file manager app.

-- Tap on the apk file and follow the instructions to install it on your device.

-- Wait for the installation process to finish and launch PES 2018 Pro Evolution Soccer from your app drawer or home screen.

-

-Congratulations! You have successfully downloaded and installed PES 2018 Pro Evolution Soccer on your Android device. You are now ready to play the game and enjoy its amazing features.

- How to Play PES 2018 Pro Evolution Soccer on Your Android Device

-PES 2018 Pro Evolution Soccer is a game that is easy to learn but hard to master. You need to have some skills and strategies to win matches and tournaments. Here are some basic steps on how to play PES 2018 Pro Evolution Soccer on your Android device:

- How to create your own squad and customize your players

-The first thing you need to do is to create your own squad and customize your players. You can choose from over 10,000 actual players, including legends like Beckham, Maradona, and Zico. You can also edit their appearance, skills, attributes, and positions.

-To create your own squad and customize your players, follow these steps:

-

-- From the main menu, tap on "My Team".

-- Tap on "Squad Management".

-- Tap on "Player List".

-- Select the player you want to edit or replace.

-- Tap on "Edit Player" or "Transfer".

-- Make the changes you want and save them.

-

-You can also create your own original players by tapping on "Create Player" from the player list. You can customize their name, nationality, age, height, weight, face, hair, kit number, position, playing style, skills, and abilities.

- How to control your players and perform actions on the field

-The next thing you need to do is to control your players and perform actions on the field. You can choose between two types of controls: advanced or classic. The advanced controls allow you to use gestures and swipes to perform actions, while the classic controls use virtual buttons and joysticks.

-To control your players and perform actions on the field, follow these steps:

-

-- To move your player, use the left joystick or swipe on the left side of the screen.

-- To pass the ball, tap on a teammate or swipe in their direction.

-- To shoot the ball, tap on the goal or swipe in its direction.

-- To dribble the ball, use the right joystick or swipe on the right side of the screen.

-- To tackle an opponent, tap on them or swipe in their direction.

-- To switch players, tap on the player icon or swipe up or down on the right side of the screen.

-

- How to compete with other players online or offline

-To compete with other players online or offline, follow these steps:

-

-- From the main menu, tap on "Match".

-- Select the mode you want to play, such as Matchday, Online Divisions, Local Match, Friendly Match Lobby, Campaign Mode, Events Mode, Training Mode, and more.

-- Choose your team and your opponent's team.

-- Adjust the match settings, such as difficulty, time limit, stadium, weather, and more.

-- Tap on "Start Match" and enjoy the game.

-

-You can also view your match records, rankings, rewards, and achievements by tapping on "My Profile" from the main menu.

- Tips and Tricks for Playing PES 2018 Pro Evolution Soccer on Your Android Device

-PES 2018 Pro Evolution Soccer is a game that requires some skills and strategies to win matches and tournaments. Here are some tips and tricks that can help you improve your game and have more fun:

- How to master the advanced and classic controls

-One of the most important aspects of playing PES 2018 Pro Evolution Soccer is to master the advanced and classic controls. The advanced controls allow you to use gestures and swipes to perform actions, while the classic controls use virtual buttons and joysticks. You can choose the control type that suits your preference and style.

-To master the advanced and classic controls, follow these tips:

-

-- Practice using the controls in the Training Mode. You can learn how to perform various actions, such as passing, shooting, dribbling, tackling, switching players, and more.

-- Adjust the sensitivity and size of the controls in the Settings. You can make the controls more responsive or comfortable for your fingers.

-- Use the auto-feint option in the Settings. This option will enable your players to perform feints and tricks automatically when you swipe on the screen.

-- Use the one-two pass option in the Settings. This option will enable your players to pass the ball back and forth quickly when you tap on the screen twice.

-- Use the through pass option in the Settings. This option will enable your players to pass the ball ahead of a teammate who is running into space when you swipe on the screen.

-

- How to use scouts, agents, and auctions to acquire the best players

-Another important aspect of playing PES 2018 Pro Evolution Soccer is to use scouts, agents, and auctions to acquire the best players. Scouts, agents, and auctions are ways to obtain new players for your squad. You can use coins or GP (game points) to use these methods.

-To use scouts, agents, and auctions to acquire the best players, follow these tips:

-

-- Use scouts to obtain specific players based on their attributes, such as position, nationality, league, club, skill, etc. You can obtain scouts by playing matches or events. You can also combine scouts to increase your chances of getting better players.

-- Use agents to obtain random players based on their rarity, such as bronze, silver, gold, or black ball. You can obtain agents by playing matches or events. You can also use special agents that offer higher chances of getting rare players.

-- Use auctions to bid for specific players that other users have put up for sale. You can use GP to bid for players. You can also sell your own players in auctions to earn GP.

-

- How to earn coins and GP and use them wisely

-The last important aspect of playing PES 2018 Pro Evolution Soccer is to earn coins and GP and use them wisely. Coins and GP are currencies that you can use to buy scouts, agents, auctions, energy recovery items, contract renewals, etc. You can earn coins and GP by playing matches or events.

-To earn coins and GP and use them wisely, follow these tips:

-

-- Complete daily missions and achievements to earn coins and GP. You can view your missions and achievements by tapping on "My Profile" from the main menu.

-- Participate in events and tournaments to earn coins and GP. You can view the current events and tournaments by tapping on "Match" from the main menu.

-- Play online matches against other users to earn coins and GP. You can play online matches by tapping on "Online Divisions" or "Friendly Match Lobby" from the match menu.

-- Spend your coins and GP on scouts, agents, auctions, energy recovery items, contract renewals, etc. You can buy these items by tapping on "Shop" from the main menu.

-- Save your coins and GP for special occasions, such as when there are special agents or auctions that offer high-quality players.

-

- Conclusion

-PES 2018 Pro Evolution Soccer for Android features world-famous national and club teams, stunning graphics, realistic animations, and smooth gameplay. You can create your own squad, control your players, and compete with other players online or offline. You can also use scouts, agents, and auctions to acquire the best players, and earn coins and GP to buy various items.

-If you are a fan of soccer games, you should not miss PES 2018 Pro Evolution Soccer. It offers hours of fun and excitement and can make you feel like a real soccer star.

-So what are you waiting for? Download PES 2018 Pro Evolution Soccer apk file from CNET Download or other sources, install it on your Android device, and start playing the game. You will not regret it.

- FAQs

-Here are some frequently asked questions and answers about PES 2018 Pro Evolution Soccer:

- Q: How can I update PES 2018 Pro Evolution Soccer to the latest version?

-A: You can update PES 2018 Pro Evolution Soccer to the latest version by downloading and installing the latest apk file from CNET Download or other sources. You can also check for updates by tapping on "Extras" from the main menu and then tapping on "Update".

- Q: How can I transfer my data from one device to another?

-A: You can transfer your data from one device to another by using the data transfer feature. To do this, follow these steps:

-

-- On your old device, tap on "Extras" from the main menu and then tap on "Data Transfer".

-- Tap on "Link Data" and choose a method to link your data, such as Google Play Games or KONAMI ID.

-- On your new device, tap on "Extras" from the main menu and then tap on "Data Transfer".

-- Tap on "Transfer Data" and choose the same method that you used to link your data on your old device.

-- Follow the instructions to complete the data transfer.

-

- Q: How can I contact the customer support of PES 2018 Pro Evolution Soccer?

-A: You can contact the customer support of PES 2018 Pro Evolution Soccer by tapping on "Extras" from the main menu and then tapping on "Support". You can also visit the official website or the official Facebook page of PES 2018 Pro Evolution Soccer for more information and assistance.

- Q: How can I change the language of PES 2018 Pro Evolution Soccer?

-A: You can change the language of PES 2018 Pro Evolution Soccer by tapping on "Extras" from the main menu and then tapping on "Settings". You can then choose the language that you prefer from the list of available languages.

- Q: How can I get more coins and GP for free?

-A: You can get more coins and GP for free by completing daily missions and achievements, participating in events and tournaments, playing online matches against other users, selling your players in auctions, watching ads, and inviting your friends to play PES 2018 Pro Evolution Soccer.


-

-

\ No newline at end of file

diff --git a/spaces/congsaPfin/Manga-OCR/logs/Experience Sonic Mania Plus on Android with Exagear and Gamepad - The Ultimate Way to Play the Game.md b/spaces/congsaPfin/Manga-OCR/logs/Experience Sonic Mania Plus on Android with Exagear and Gamepad - The Ultimate Way to Play the Game.md

deleted file mode 100644

index 3278fdf91f70218ebf37e66c6b5fdcda85458915..0000000000000000000000000000000000000000

--- a/spaces/congsaPfin/Manga-OCR/logs/Experience Sonic Mania Plus on Android with Exagear and Gamepad - The Ultimate Way to Play the Game.md

+++ /dev/null

@@ -1,222 +0,0 @@

-

-< and >). Here is an example of how to create a table with HTML formatting:

-

-

- | Heading 1 | Heading 2 | Heading 3 |
- | --- | --- | --- |
- | Cell 1 | Cell 2 | Cell 3 |
- | Cell 4 | Cell 5 | Cell 6 |

-

-

-
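The markup for a table like the one above is not preserved in this log. What follows is a minimal sketch of how such a three-column table is typically written, assuming the standard `table`, `thead`, `tbody`, `tr`, `th`, and `td` elements; it is an illustration, not the original author's code.

```html
<!-- Illustrative reconstruction only; the original markup was stripped from this log. -->
<table>
  <!-- thead groups the header row(s) -->
  <thead>
    <tr>
      <th>Heading 1</th>
      <th>Heading 2</th>
      <th>Heading 3</th>
    </tr>
  </thead>
  <!-- tbody groups the data rows -->
  <tbody>
    <tr>
      <td>Cell 1</td>
      <td>Cell 2</td>
      <td>Cell 3</td>
    </tr>
    <tr>
      <td>Cell 4</td>
      <td>Cell 5</td>
      <td>Cell 6</td>
    </tr>
  </tbody>
</table>
```

Each `tr` holds one row; `th` cells go in the header row and `td` cells in the data rows. Appearance such as borders or widths is usually set with CSS rather than with attributes on the table itself.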

- The table tag defines the table element, which contains the following tags: thead, tbody, tr, th, and td. The thead tag defines the header of the table, which contains one or more tr tags. The tr tag defines a row of the table, which contains one or more th or td tags. The th tag defines a header cell, which is usually bold and centered. The td tag defines a data cell, which is usually aligned to the left. You can use various attributes and styles to customize the appearance of the table, such as border, width, height, color, font, etc.
-
- Now that you know how to create tables, let me show you how to write an outline of the article and the article itself. Here is an example of how to write an outline of the article using a table:
-
- | H1: Sonic Mania Plus: A Review of Hedgehog's Greatest Adventure |
- | --- |
- | H2: Introduction |
- | - What is Sonic Mania Plus? |
- | - Why is it considered one of the best Sonic games? |
- | - What are its features and content? |
- | H2: Gameplay |
- | - How does it compare to the original Sonic Mania? |
- | - What are the new modes and characters? |
- | - How does it capture the classic Sonic feel? |
- | H2: Graphics and Sound |
- | - How does it look and sound on different platforms? |
- | - What are the references and homages to previous Sonic games? |
- | - How does it use retro-style pixel art and music? |
- | H2: Conclusion |
- | - What are the pros and cons of Sonic Mania Plus? |
- | - Who should play it and who should avoid it? |
- | - How does it rank among other Sonic games? |
-
- Here is an example of how to write an article based on that outline using a table with HTML formatting:

-

-

-Sonic Mania Plus: A Review of Hedgehog's Greatest Adventure

-

-

- Introduction |

-

-

- Sonic Mania Plus is an enhanced version of Sonic Mania, a game that was released in 2017 as a tribute to the classic Sonic games from the Sega Genesis era. It was developed by a team of talented fans who were hired by Sega to create a game that would appeal to both old-school and new-school Sonic fans. |

-

-

- Sonic Mania Plus is widely regarded as one of the best Sonic games ever made, as it delivers a perfect balance of nostalgia and innovation, with stunning graphics, catchy music, and smooth gameplay. It also adds new features and content that make it even more enjoyable and replayable than the original version.


|

-

-

- In this article, we will review Sonic Mania Plus and see why it is a must-play game for any Sonic fan or platformer lover. We will cover its gameplay, graphics, sound, and conclusion, as well as answer some frequently asked questions about the game. |

-

-

- Gameplay |

-

-

- Sonic Mania Plus is a 2D side-scrolling platformer that follows the adventures of Sonic the Hedgehog and his friends as they try to stop the evil Dr. Eggman and his robot army from taking over the world. The game consists of 12 zones, each with two acts and a boss fight. The zones are a mix of remixed stages from previous Sonic games and new stages that are inspired by them. |

-

-

- The gameplay is fast-paced and fun, as you can run, jump, spin, dash, and fly through the levels, collecting rings, power-ups, and secrets along the way. You can also use various gimmicks and obstacles, such as springs, loops, spikes, switches, and more, to spice up the action. The game also features multiple paths and routes that you can take to explore the levels and find different outcomes. |

-

-

- One of the main differences between Sonic Mania Plus and the original Sonic Mania is that it adds two new playable characters: Mighty the Armadillo and Ray the Flying Squirrel. These characters have their own unique abilities and playstyles that change the way you approach the game. Mighty can slam the ground to destroy enemies and obstacles, as well as bounce off spikes without taking damage. Ray can glide in the air by tilting the controller left or right, allowing him to reach high places and avoid hazards. |

-

-

- Another difference is that it adds two new modes: Encore Mode and Competition Mode. Encore Mode is a remixed version of the main game, with different level layouts, color palettes, music tracks, and enemy placements. It also introduces a new feature called Character Switching, which allows you to switch between two characters at any time by hitting a special monitor. You can also find more characters in the levels and swap them with your current ones. However, if you lose all your lives with one character, you lose them for good. |

-

-

- Competition Mode is a multiplayer mode that lets you race against up to three other players on split-screen. You can choose from four zones and customize the rules and settings of the match. You can also play online with other players via GameJolt, a platform that hosts indie games and fan games. To play Sonic Mania Plus online, you need to download the Sonic Mania Plus APK GameJolt file from their website and install it on your device.

- |

-

- Sonic Mania Plus is a game that captures the classic Sonic feel, as it pays homage to the original games and their mechanics, while also adding new twists and surprises. The game is challenging but fair, rewarding skill and exploration. It also has a lot of replay value, as you can try different characters, modes, and paths. The game is a blast to play solo or with friends, as you can share the excitement and fun of the Sonic experience. |

-

-

- Graphics and Sound |

-

-

- Sonic Mania Plus is a game that looks and sounds amazing, as it uses retro-style pixel art and music to create a nostalgic and vibrant atmosphere. The game is colorful and detailed, with smooth animations and dynamic backgrounds. The game also runs at 60 frames per second on all platforms, ensuring a smooth and responsive gameplay. |

-

-

- The game is available on various platforms, such as PC, PlayStation 4, Xbox One, Nintendo Switch, and Android devices. The game looks and sounds great on all of them, with minor differences in resolution and performance. The game also supports cross-play between PC and Android devices, allowing you to play online with other players regardless of the platform. |

-

-

- The game is full of references and homages to previous Sonic games and other Sega titles, such as Streets of Rage, OutRun, and Jet Set Radio. The game features many Easter eggs and secrets that will delight any fan of the Sonic franchise. The game also has a lot of humor and personality, with funny dialogue and expressions from the characters. |

-

-

- The game's soundtrack is composed by Tee Lopes, a fan-turned-professional musician who created remixes and original tracks for the game. The soundtrack is catchy and diverse, featuring various genres and styles that match the mood and theme of each zone. The soundtrack also includes contributions from other Sonic composers, such as Jun Senoue, Masato Nakamura, and Hyper Potions. |

-

- Conclusion |

-

-

- Sonic Mania Plus is a game that deserves all the praise and acclaim it has received, as it is a masterpiece of platforming and fan service. It is a game that celebrates the legacy and history of Sonic the Hedgehog, while also bringing new and fresh ideas to the table. It is a game that appeals to both old and new fans of the blue blur, as well as anyone who enjoys a good platformer. |

-

-

- The game has many pros and cons, depending on your preferences and expectations. Here are some of them: |

-

-

-

-

-

- | Pros | Cons |
- | --- | --- |
- | Amazing graphics and sound | Some levels can be frustrating or confusing |
- | Smooth and fun gameplay | Some bosses can be too easy or too hard |
- | New characters and modes | Some features can be locked behind DLC or online access |
- | High replay value and content | Some glitches and bugs can occur |
- | Nostalgic and innovative | Some references and homages can be obscure or missed |

-

-

- |

-

- So, who should play Sonic Mania Plus and who should avoid it? Well, if you are a fan of Sonic the Hedgehog, or platformers in general, you should definitely give it a try. It is a game that will make you smile and have fun, as well as challenge and impress you. It is a game that will remind you why you love Sonic and why he is still relevant and popular today. |

-

-

- However, if you are not a fan of Sonic the Hedgehog, or platformers in general, you might want to skip it. It is a game that might not appeal to you or suit your tastes, as it is very faithful and loyal to the original games and their mechanics. It is a game that might frustrate or bore you, as it can be very fast and chaotic, or very slow and tedious. |

-

-

- Ultimately, Sonic Mania Plus is a game that deserves your attention and appreciation, as it is one of the best Sonic games ever made. It is a game that ranks among the top platformers of all time, as it is a masterpiece of design and creativity. It is a game that you should play at least once in your life, as it is a game that will make you feel like a kid again. |

-

-

- Frequently Asked Questions |

-

-

- Q: How long is Sonic Mania Plus? |

-

-

- A: Sonic Mania Plus is about 4 to 6 hours long, depending on your skill level and how much you explore the levels. However, the game has a lot of replay value, as you can play with different characters, modes, and paths. |

-

-

- Q: How much does Sonic Mania Plus cost? |

-

-

- A: Sonic Mania Plus costs $29.99 for the physical version, which includes an art book and a reversible cover. The digital version costs $19.99 for the base game, and $4.99 for the Plus DLC, which adds the new features and content. |

-

-

- Q: How do I unlock Super Sonic in Sonic Mania Plus? |

-

-

- A: To unlock Super Sonic in Sonic Mania Plus, you need to collect all seven Chaos Emeralds in the game. You can find them in special stages, which are accessed by finding giant rings hidden in the levels. Once you have all seven Chaos Emeralds, you can transform into Super Sonic by collecting 50 rings and pressing the jump button twice. |

-

- Q: How do I play Sonic Mania Plus online? |

-

-

- A: To play Sonic Mania Plus online, you need to download the Sonic Mania Plus APK GameJolt file from their website and install it on your Android device. You also need to create a GameJolt account and log in to the game. Then, you can join or host online matches with other players using the Competition Mode. |

-

-

- Q: What are the differences between Sonic Mania and Sonic Mania Plus? |

-

-

- A: Sonic Mania Plus is an enhanced version of Sonic Mania, which adds the following features and content: |

-

-

-

- - Two new playable characters: Mighty the Armadillo and Ray the Flying Squirrel

- - Two new modes: Encore Mode and Competition Mode

- - New level layouts, color palettes, music tracks, and enemy placements

- - New feature: Character Switching

- - New option: Angel Island Zone

- - New cutscenes and endings

- - New achievements and trophies

- - Various bug fixes and improvements

- |

-

-

- I hope this article has helped you learn more about Sonic Mania Plus and why it is a game worth playing. If you have any questions or comments, feel free to leave them below. Thank you for reading and have a great day! |

-

-

- |

-

-

-


-

-

\ No newline at end of file

diff --git a/spaces/congsaPfin/Manga-OCR/logs/How to Get Among Us 4.20 for Free on PC Android and iOS.md b/spaces/congsaPfin/Manga-OCR/logs/How to Get Among Us 4.20 for Free on PC Android and iOS.md

deleted file mode 100644

index 867ee10fc75b272bfae8c1936f336f6fa40bf70a..0000000000000000000000000000000000000000

--- a/spaces/congsaPfin/Manga-OCR/logs/How to Get Among Us 4.20 for Free on PC Android and iOS.md

+++ /dev/null

@@ -1,156 +0,0 @@

-

-

- What's new in Among Us 4.20?

- How to download and install Among Us 4.20 on different platforms?

- Conclusion: Summarize the main points and invite the reader to try out the game. | | H2: What is Among Us and why is it so popular? | - Explain the basic premise and gameplay of Among Us.

- Mention some of the features and modes that make it fun and engaging.

- Highlight some of the reasons why it has become a viral sensation among gamers and streamers. | | H2: What's new in Among Us 4.20? | - List some of the new features and improvements that are included in the latest update of Among Us.

- Use a table to compare the differences between Among Us 4.20 and the previous version.

- Provide some screenshots or videos to showcase the new content. | | H2: How to download and install Among Us 4.20 on different platforms? | - Explain how to download and install Among Us 4.20 on Android, iOS, Windows, and Mac devices.

- Provide links to the official sources or trusted third-party websites where the user can get the game.

- Give some tips and warnings on how to avoid scams, malware, or other issues that may arise from downloading or installing the game. | | H2: Conclusion | - Summarize the main points of the article and restate the benefits of playing Among Us 4.20.

- Invite the reader to try out the game and share their feedback or experiences with other players.

- Provide some resources or links where the reader can learn more about Among Us or join its community. |
-
- Table 2: Article with HTML formatting
-
- Among Us 4.20 Download: How to Play the Latest Version of the Popular Social Deduction Game

- If you are looking for a fun and exciting game to play online with friends or strangers, you might want to check out Among Us, one of the most popular social deduction games of recent years. In this article, we will tell you everything you need to know about Among Us 4.20, the latest version of the game, which was released in June 2023. We will also show you how to download and install it on your Android, iOS, Windows, or Mac device.


- What is Among Us and why is it so popular?

- Among Us is a multiplayer game that can be played online or over local WiFi with 4-15 players. The game is set in a spaceship, where each player has a role: either a Crewmate or an Impostor.

- The Crewmates have to work together to complete tasks around the ship and find out who the Impostor is. The Impostor has to sabotage, kill, and deceive the Crewmates without getting caught.

- The game has several features and modes that make it fun and engaging, such as:


-

-- Different maps to play in: The Skeld, MIRA HQ, Polus, and the Airship.

-- Lots of game options: Add more Impostors, more tasks, different roles, and so much more.

-- Different modes to choose from: Classic or Hide n Seek.

-- A chat system that allows players to communicate with each other during meetings or emergencies.

-- A friend system that lets players add and invite their friends to play together.

-

- The game has become a viral sensation among gamers and streamers for several reasons, such as:

-

-- Its simple yet addictive gameplay that can be enjoyed by anyone.

-- Its social aspect that encourages interaction, cooperation, deception, and betrayal among players.

-- Its hilarious and unpredictable moments that can create memorable experiences.

-- Its low system requirements that make it accessible to a wide range of devices.

-

- What's new in Among Us 4.20?

- The latest update of Among Us, version 4.20, was released in June 2023 with new features and improvements. Some of these are:

-- Four new roles: Scientist, Engineer, Guardian Angel, and Shapeshifter.

-- An XP system that rewards players for playing the game and completing tasks.

-- Multiple currencies: Stars, Beans, and Pods that can be used to buy cosmetics and other items.

-- A new store that offers a variety of customization options, including visor cosmetics, name plates, and more.

-- A single Among Us account that lets players save their progress and use their cosmetics across different platforms.

-

- To give you a better idea of what's new in Among Us 4.20, here is a table that compares it with the previous version of the game:

-

-

-| Feature | Among Us 4.19 | Among Us 4.20 |
-| --- | --- | --- |
-| Roles | Crewmate or Impostor | Crewmate (Scientist or Engineer), Impostor (Shapeshifter), or Guardian Angel |
-| XP system | No XP system | XP system that tracks players' level, playtime, tasks completed, and more |
-| Currencies | No currencies | Stars (premium currency), Beans (earned by playing), and Pods (earned by leveling up) |
-| Store | Limited store with only hats, skins, and pets | Expanded store with visor cosmetics, name plates, cosmicubes, and more |
-| Account | No account required | Single account required to save progress and use cosmetics across platforms |

-

-

- [Three embedded screenshots or videos of the new features; media not preserved.]

- How to download and install Among Us 4.20 on different platforms?

- If you are interested in playing Among Us 4.20, you might be wondering how to download and install it on your device. Depending on what platform you are using, the process may vary slightly. Here are the steps for each platform:

- Android

-

-- Go to the Google Play Store and search for Among Us or click on this link: [Among Us - Apps on Google Play].

-- Tap on the Install button and wait for the download to finish.

-- Open the game and enjoy!

-

- iOS

-

-- Go to the App Store and search for Among Us or click on this link: [Among Us! on the App Store].

-- Tap on the Get button and wait for the download to finish.

-- Open the game and enjoy!

-

- Windows

-

-- Go to Steam and search for Among Us or click on this link: [Save 25% on Among Us on Steam].

-- Add the game to your cart and purchase it for $3.74 (25% off until June 27).

-- Download and install the game through Steam.

-- Open the game and enjoy!

-

- Mac

-- Go to the Epic Games Store and search for Among Us or click on this link: [Among Us - Epic Games Store].

-- Add the game to your cart and purchase it for $4.99.

-- Download and install the game through the Epic Games Launcher.

-- Open the game and enjoy!

-

- Before you download and install Among Us 4.20, here are some tips and warnings that you should keep in mind:

-

-- Make sure you have enough storage space on your device to download and install the game.

-- Make sure you have a stable internet connection to download and play the game online.

-- Make sure you have a compatible device that meets the minimum system requirements of the game.

-- Do not download or install the game from unofficial or untrusted sources, as they may contain viruses, malware, or other harmful content.

-- Do not use any cheats, hacks, or mods that may alter the game or give you an unfair advantage, as they may ruin the game experience for yourself and others, or get you banned from the game.

-

- Conclusion

- In conclusion, Among Us 4.20 is the latest version of the popular social deduction game, released in June 2023 with new features and improvements. You can play it online or over local WiFi with 4-15 players, and choose from different roles, maps, modes, and options. You can also customize your character with cosmetics and items bought with the in-game currencies. You can download and install it on your Android, iOS, Windows, or Mac device by following the steps above. We hope you enjoy playing Among Us 4.20 with your friends or strangers online!

- If you want to learn more about Among Us or join its community, you can visit these resources or links:

-

-- The official website of Among Us: [Among Us | InnerSloth].

-- The official Twitter account of Among Us: [@AmongUsGame].

-- The official Discord server of Among Us: [Among Us].

-- The official subreddit of Among Us: [r/AmongUs].

-- The official wiki of Among Us: [Among Us Wiki | Fandom].

-

- Frequently Asked Questions

- Here are some of the frequently asked questions about Among Us 4.20:

- Q: Is Among Us 4.20 free to play?

- A: Among Us 4.20 is free to play on Android and iOS devices, but it costs $3.74 on Steam (until June 27) and $4.99 on Epic Games Store for Windows and Mac devices. However, there are some in-game purchases that require real money, such as Stars (the premium currency) and some cosmetics and items.

- Q: How do I update my Among Us to version 4.20?

- A: If you already have Among Us installed on your device, you can update it to version 4.20 by going to the app store or launcher where you got it from and checking for updates. Alternatively, you can uninstall the previous version of the game and download and install the latest version from the official sources or trusted third-party websites that we have provided above.

- Q: How do I play with my friends in Among Us 4.20?

- A: You can play with your friends in Among Us 4.20 by either creating a private room or joining a public room. To create a private room, you need to select a map, mode, and options, then tap on Host. You will get a code that you can share with your friends so they can join your room. To join a public room, you need to select a map, mode, and options, then tap on Find Game. You will see a list of available rooms that you can join by tapping on them.

- Q: How do I change my role in Among Us 4.20?

- A: You cannot pick your own role directly. Roles are assigned at random when a game starts. Before starting a game, the host can use the game options to set how many of each special role (Scientist, Engineer, Guardian Angel, or Shapeshifter) may appear and how likely each one is. Note that not all roles are available in all modes, and some roles only come into play under certain conditions.

- Q: How do I report a bug or issue in Among Us 4.20?

- A: You can report a bug or issue in Among Us 4.20 by going to the settings menu in the game and tapping on the Report Bug button. You will be redirected to a form where you can fill in the details of the bug or issue, such as the platform, the version, the map, the mode, the role, and the description. You can also attach a screenshot or a video to illustrate the problem. After submitting the form, you will receive a confirmation email and a ticket number that you can use to track the status of your report.

-

-

\ No newline at end of file

diff --git a/spaces/congsaPfin/Manga-OCR/logs/How to Tune Your Car and Win Races in Drag Racing Classic.md b/spaces/congsaPfin/Manga-OCR/logs/How to Tune Your Car and Win Races in Drag Racing Classic.md

deleted file mode 100644

index 844832100de8fcbe4a7bf773ef1507a727fb982c..0000000000000000000000000000000000000000

--- a/spaces/congsaPfin/Manga-OCR/logs/How to Tune Your Car and Win Races in Drag Racing Classic.md

+++ /dev/null

@@ -1,111 +0,0 @@

-

-Drag Racing Classic: A Guide for Beginners

-If you are looking for a racing game that is fun, addictive, and challenging, you might want to check out Drag Racing Classic. This game is one of the most popular racing apps on the App Store, with over 100 million players worldwide. In this game, you can drive 50+ real licensed cars from the world’s hottest car manufacturers, race against other players online, and customize and tune your cars for 1/4 or 1/2 mile drag races. In this article, we will give you a guide on how to play, master, and enjoy Drag Racing Classic.

- What is Drag Racing Classic?

-Drag Racing Classic is a racing game developed by Creative Mobile, a leading mobile game developer based in Estonia. The game was released in 2011 and has since become one of the most downloaded racing apps on the App Store. The game is available for both iPhone and iPad devices.

-Drag Racing Classic is a game that simulates drag racing, which is a type of motor racing that involves two vehicles competing in a straight line over a fixed distance. The objective of the game is to accelerate faster than your opponent and reach the finish line first. The game features realistic physics, graphics, and sound effects that make you feel like you are on a real drag strip.

-The game also offers a variety of features that make it more engaging and enjoyable. Some of these features are:

-

-- 50+ real licensed cars from the world’s hottest car manufacturers including BMW, Dodge, Honda, Nissan, McLaren, Pagani and the officially licensed 1200 bhp Hennessey Venom GT™

-- Performance upgrades, customization options, and tuning tools that allow you to modify your cars according to your preferences and needs

-- Different modes of play that cater to different levels of skill and interest: career mode, online mode, and pro league mode

-- Competitive multiplayer that lets you race against your friends or random racers online, drive your opponent’s car, or participate in real-time 10-player races in pro league mode

-- A team feature that lets you join a team or create your own team to exchange tunes, discuss strategy, and share your achievements with other players

-- An awesome community that connects you with other car game fanatics who enjoy Drag Racing Classic as much as you do

-

- How to play Drag Racing Classic?

-Playing Drag Racing Classic is easy and fun. All you need is your device and an internet connection. Here are the basic steps on how to play the game:

-

-- Download the game from the App Store for free. You can also purchase in-app items to enhance your gaming experience.

-- Launch the game and choose your mode of play: career mode, online mode, or pro league mode.

-- Select your car from the garage. You can buy new cars or upgrade your existing cars using cash or RP (respect points) earned from winning races.

-- Select your race type: 1/4 mile or 1/2 mile. You can also choose to race against an AI opponent or a real player online.

-- Start the race by pressing the gas pedal at the right time. You can also use nitrous oxide for a speed boost by tapping the N2O button on the screen.

-- Shift gears at the right time by tapping the up and down arrows on the screen. You can also adjust your gear ratios in the garage to optimize your acceleration and speed.

-- Cross the finish line before your opponent and win the race. You can also watch a replay of your race or share it with your friends.

-

-That’s it! You have just completed a drag race. You can repeat these steps as many times as you want and enjoy the thrill of drag racing.

- How to master Drag Racing Classic?

-While playing Drag Racing Classic is easy, mastering it is not. It takes practice, skill, and strategy to become a drag racing champion. Here are some tips and tricks that can help you improve your performance and win more races:

-

-- Know your car. Different cars have different strengths and weaknesses, such as power, grip, weight, and nitrous capacity. You should choose a car that suits your style and preference, and learn how to drive it well.

-- Upgrade your car. Upgrading your car can make a big difference in your performance. You can upgrade your engine, turbo, intake, nitrous, weight, tires, and transmission using cash or RP. You can also customize your car’s appearance by changing its color, rims, decals, and license plate.

-- Tune your car. Tuning your car can give you an edge over your opponents. You can tune your car’s gear ratios, final drive, tire pressure, nitrous timing, and launch control using the tuning tools in the garage. You can also test your tune on the dyno or the test track to see how it affects your speed and acceleration.

-- Join a team. Joining a team can help you learn from other players, share tunes and strategies, and compete in team events. You can join an existing team or create your own team using the team feature in the game. You can also chat with your teammates and send them gifts using the team chat function.

-

- Why play Drag Racing Classic?

-Drag Racing Classic is more than just a game. It is a hobby, a passion, and a lifestyle for many people who love cars and racing. Here are some of the reasons why you should play Drag Racing Classic:

-

-- It is fun. Drag Racing Classic is a game that is easy to play but hard to master. It offers a lot of variety and challenge that keep you entertained and engaged. It is also a game that lets you express yourself and unleash your creativity through your car collection.

-- It is addictive. Drag Racing Classic is a game that keeps you coming back for more. It has a lot of features and modes that keep you hooked and motivated. It also has a competitive aspect that makes you want to improve your skills and rank higher on the leaderboards.

-- It is social. Drag Racing Classic is a game that connects you with other people who share your interest and passion for cars and racing. It has a friendly and supportive community that welcomes you and helps you grow as a player. It also has a team feature that lets you collaborate and compete with other players from around the world.

-

- Conclusion

-Drag Racing Classic is a game that offers you an exciting and realistic drag racing experience on your device. It lets you drive 50+ real licensed cars from the world’s hottest car manufacturers, race against other players online, and customize and tune your cars for 1/4 or 1/2 mile drag races. It also gives you tips and tricks on how to play, master, and enjoy the game. Whether you are a casual gamer or a hardcore racer, Drag Racing Classic is a game that you will love.

-So what are you waiting for? Download Drag Racing Classic today and join the millions of players who are already having fun with this game. You will not regret it!

- Frequently Asked Questions

-Here are some of the most common questions that people ask about Drag Racing Classic:

-

-- How do I get more cash or RP in the game?

-You can get more cash or RP by winning races, completing achievements, watching ads, or buying them with real money.

-- How do I unlock new cars or levels in the game?

-You can unlock new cars or levels by earning enough RP or cash to buy them or by reaching certain milestones in the career mode.

-- How do I find other players to race with online?