Commit

·

c1a869e

1

Parent(s):

906f139

Update parquet files (step 63 of 249)

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- spaces/1acneusushi/gradio-2dmoleculeeditor/data/23rd March 1931 Shaheed Mp4 2021 Download.md +0 -20

- spaces/1gistliPinn/ChatGPT4/Examples/Dc Unlocker 2 Client Full [HOT] 12.md +0 -6

- spaces/1gistliPinn/ChatGPT4/Examples/Download Buku Boyman Pramuka Pdf Viewer Semua yang Perlu Anda Ketahui tentang Pramuka.md +0 -6

- spaces/1gistliPinn/ChatGPT4/Examples/Download Commando - A One Man Army Full Movie.md +0 -6

- spaces/1gistliPinn/ChatGPT4/Examples/F.E.A.R 3 ENGLISH LANGUAGE PACK TORRENT.md +0 -7

- spaces/1pelhydcardo/ChatGPT-prompt-generator/Download Triple Frontier.md +0 -100

- spaces/1phancelerku/anime-remove-background/Download Free Fire MAX APK 32 Bits for Android - The Ultimate Battle Royale Experience.md +0 -153

- spaces/232labs/VToonify/app.py +0 -290

- spaces/AIConsultant/MusicGen/scripts/static/style.css +0 -113

- spaces/AIFILMS/audioldm-text-to-audio-generation/audioldm/pipeline.py +0 -78

- spaces/AIGC-Audio/AudioGPT/audio_detection/__init__.py +0 -0

- spaces/ATang0729/Forecast4Muses/Model/Model6/Model6_0_ClothesDetection/mmyolo/configs/yolov5/voc/yolov5_m-v61_fast_1xb64-50e_voc.py +0 -17

- spaces/Abhilashvj/haystack_QA/search.py +0 -60

- spaces/AchyuthGamer/OpenGPT-Chat-UI/src/lib/utils/deepestChild.ts +0 -6

- spaces/AchyuthGamer/OpenGPT/g4f/Provider/Providers/Myshell.py +0 -173

- spaces/AgentVerse/agentVerse/ui/src/phaser3-rex-plugins/templates/ui/gridtable/TableOnCellVisible.js +0 -28

- spaces/AgentVerse/agentVerse/ui/src/phaser3-rex-plugins/templates/ui/lineprogress/Factory.d.ts +0 -13

- spaces/Amrrs/DragGan-Inversion/stylegan_human/bg_white.py +0 -60

- spaces/Androidonnxfork/CivitAi-to-Diffusers/diffusers/src/diffusers/schedulers/scheduling_ddpm.py +0 -513

- spaces/Androidonnxfork/CivitAi-to-Diffusers/diffusers/tests/pipelines/stable_diffusion_2/__init__.py +0 -0

- spaces/Andy1621/uniformer_image_detection/configs/ghm/retinanet_ghm_x101_64x4d_fpn_1x_coco.py +0 -13

- spaces/AngoHF/ANGO-Leaderboard/assets/constant.py +0 -1

- spaces/AnxiousNugget/janitor/README.md +0 -10

- spaces/ArcAhmedEssam/CLIP-Interrogator-2/app.py +0 -177

- spaces/Ataturk-Chatbot/HuggingFaceChat/venv/lib/python3.11/site-packages/setuptools/_vendor/importlib_metadata/_meta.py +0 -48

- spaces/Awiny/Image2Paragraph/models/grit_src/third_party/CenterNet2/tests/structures/test_boxes.py +0 -223

- spaces/Benson/text-generation/Examples/Barbie Apk.md +0 -74

- spaces/BetterAPI/BetterChat_new/src/lib/utils/analytics.ts +0 -39

- spaces/Big-Web/MMSD/env/Lib/site-packages/pip/_vendor/chardet/cp949prober.py +0 -49

- spaces/Big-Web/MMSD/env/Lib/site-packages/setuptools/_distutils/command/build_clib.py +0 -208

- spaces/BilalSardar/Like-Chatgpt-clone/README.md +0 -12

- spaces/BlitzKriegM/argilla/Dockerfile +0 -19

- spaces/CALM/Dashboard/dashboard_utils/bubbles.py +0 -189

- spaces/CNXT/PiX2TXT/app.py +0 -3

- spaces/CVMX-jaca-tonos/YouTube-Video-Streaming-Spanish-ASR/app.py +0 -126

- spaces/CVPR/Dual-Key_Backdoor_Attacks/openvqa/utils/test_engine.py +0 -157

- spaces/CVPR/LIVE/pybind11/tests/constructor_stats.h +0 -275

- spaces/CVPR/LIVE/pybind11/tests/test_call_policies.py +0 -192

- spaces/CVPR/SPOTER_Sign_Language_Recognition/spoter_mod/sweep-agent.sh +0 -18

- spaces/CVPR/regionclip-demo/detectron2/data/transforms/torchvision_transforms/functional.py +0 -1365

- spaces/CVPR/regionclip-demo/detectron2/utils/registry.py +0 -60

- spaces/CofAI/chat/server/backend.py +0 -176

- spaces/CosmoAI/BhagwatGeeta/htmlTemplates.py +0 -44

- spaces/DQChoi/gpt-demo/venv/lib/python3.11/site-packages/PIL/JpegPresets.py +0 -240

- spaces/DQChoi/gpt-demo/venv/lib/python3.11/site-packages/PIL/_util.py +0 -19

- spaces/DQChoi/gpt-demo/venv/lib/python3.11/site-packages/fontTools/ttLib/tables/G_S_U_B_.py +0 -5

- spaces/DQChoi/gpt-demo/venv/lib/python3.11/site-packages/fontTools/varLib/instancer/names.py +0 -380

- spaces/Daniil-plotnikov/Daniil-plotnikov-russian-vision-v5-beta-3/README.md +0 -12

- spaces/Datasculptor/StyleGAN-NADA/README.md +0 -14

- spaces/Detomo/CuteRobot/README.md +0 -10

spaces/1acneusushi/gradio-2dmoleculeeditor/data/23rd March 1931 Shaheed Mp4 2021 Download.md

DELETED

|

@@ -1,20 +0,0 @@

|

|

| 1 |

-

<br />

|

| 2 |

-

<h1>23rd March 1931 Shaheed: A Tribute to the Martyrs of India</h1>

|

| 3 |

-

<p>23rd March 1931 Shaheed is a 2002 Hindi movie that depicts the life and struggle of Bhagat Singh, one of the most influential revolutionaries of the Indian independence movement. The movie, directed by Guddu Dhanoa and produced by Dharmendra, stars Bobby Deol as Bhagat Singh, Sunny Deol as Chandrashekhar Azad, and Amrita Singh as Vidyavati Kaur, Bhagat's mother. The movie also features Aishwarya Rai in a special appearance as Mannewali, Bhagat's love interest.</p>

|

| 4 |

-

<p>The movie portrays the events leading up to the hanging of Bhagat Singh and his comrades Sukhdev Thapar and Shivaram Rajguru on 23rd March 1931, which is observed as Shaheed Diwas or Martyrs' Day in India. The movie shows how Bhagat Singh joined the Hindustan Socialist Republican Association (HSRA) led by Chandrashekhar Azad and participated in various revolutionary activities such as the Lahore Conspiracy Case, the Central Legislative Assembly bombing, and the hunger strike in jail. The movie also highlights the ideological differences between Bhagat Singh and Mahatma Gandhi, who advocated non-violence as the means to achieve freedom.</p>

|

| 5 |

-

<h2>23rd March 1931 Shaheed mp4 download</h2><br /><p><b><b>Download File</b> ===> <a href="https://byltly.com/2uKvvh">https://byltly.com/2uKvvh</a></b></p><br /><br />

|

| 6 |

-

<p>23rd March 1931 Shaheed is a tribute to the courage and sacrifice of the martyrs who gave their lives for the freedom of India. The movie received mixed reviews from critics and audiences, but it has gained a cult status over the years. The movie is available for streaming on Disney+ Hotstar[^3^].</p><p>Another movie based on the life of Bhagat Singh is The Legend of Bhagat Singh, a 2002 Hindi movie directed by Rajkumar Santoshi and starring Ajay Devgan in the lead role. The movie covers Bhagat Singh's journey from his childhood where he witnesses the Jallianwala Bagh massacre to his involvement in the Hindustan Socialist Republican Association (HSRA) and his execution along with Rajguru and Sukhdev.</p>

|

| 7 |

-

<p>The movie focuses on the ideological differences between Bhagat Singh and Mahatma Gandhi, who advocated non-violence as the means to achieve freedom. The movie also shows how Bhagat Singh and his comrades used various tactics such as hunger strike, bomb blast, and court trial to challenge the British rule and inspire the masses. The movie also features Sushant Singh as Sukhdev, D. Santosh as Rajguru, Akhilendra Mishra as Chandrashekhar Azad, and Amrita Rao as Mannewali.</p>

|

| 8 |

-

<p>The Legend of Bhagat Singh received critical acclaim for its direction, story, screenplay, music, and performances. The movie won two National Film Awards â Best Feature Film in Hindi and Best Actor for Devgan â and three Filmfare Awards from eight nominations. However, the movie failed to perform well at the box office, as it faced competition from another movie on Bhagat Singh released on the same day â 23rd March 1931 Shaheed. The movie is available for streaming on Airtel Xstream[^3^].</p><p>Bhagat Singh was not only a brave revolutionary, but also a visionary thinker and writer. He wrote several essays and articles on various topics such as socialism, religion, nationalism, and violence. He also kept a jail notebook where he recorded his thoughts and quotations from various books he read in prison. Some of his famous quotes are:</p>

|

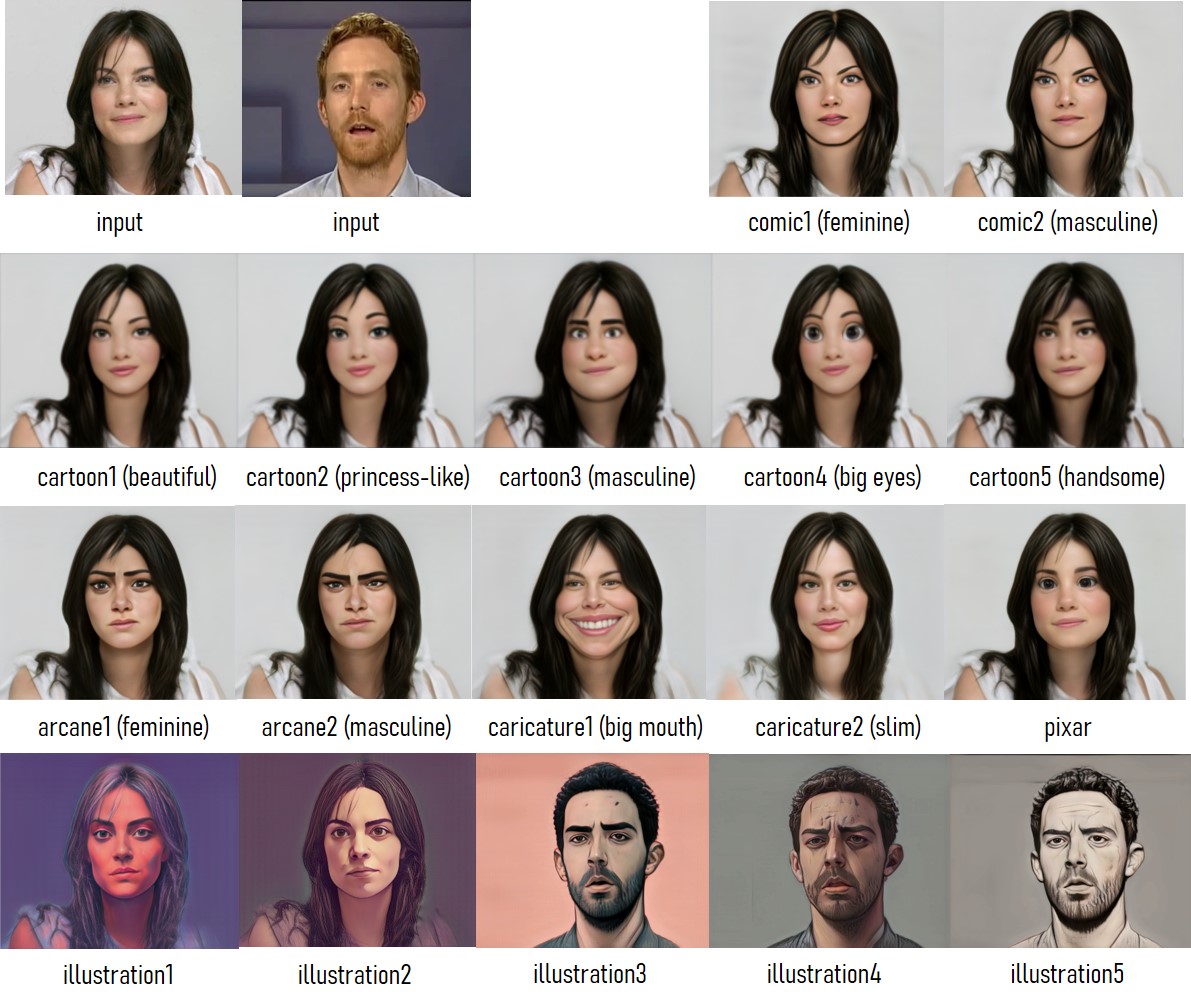

| 9 |

-

<p></p>

|

| 10 |

-

<ul>

|

| 11 |

-

<li>"They may kill me, but they cannot kill my ideas. They can crush my body, but they will not be able to crush my spirit."[^1^]</li>

|

| 12 |

-

<li>"Revolution is an inalienable right of mankind. Freedom is an imperishable birth right of all."[^1^]</li>

|

| 13 |

-

<li>"I am such a lunatic that I am free even in jail."[^2^]</li>

|

| 14 |

-

<li>"Merciless criticism and independent thinking are two traits of revolutionary thinking. Lovers, lunatics and poets are made of the same stuff."[^1^]</li>

|

| 15 |

-

<li>"Bombs and pistols don't make a revolution. The sword of revolution is sharpened on the whetting stone of ideas."[^1^]</li>

|

| 16 |

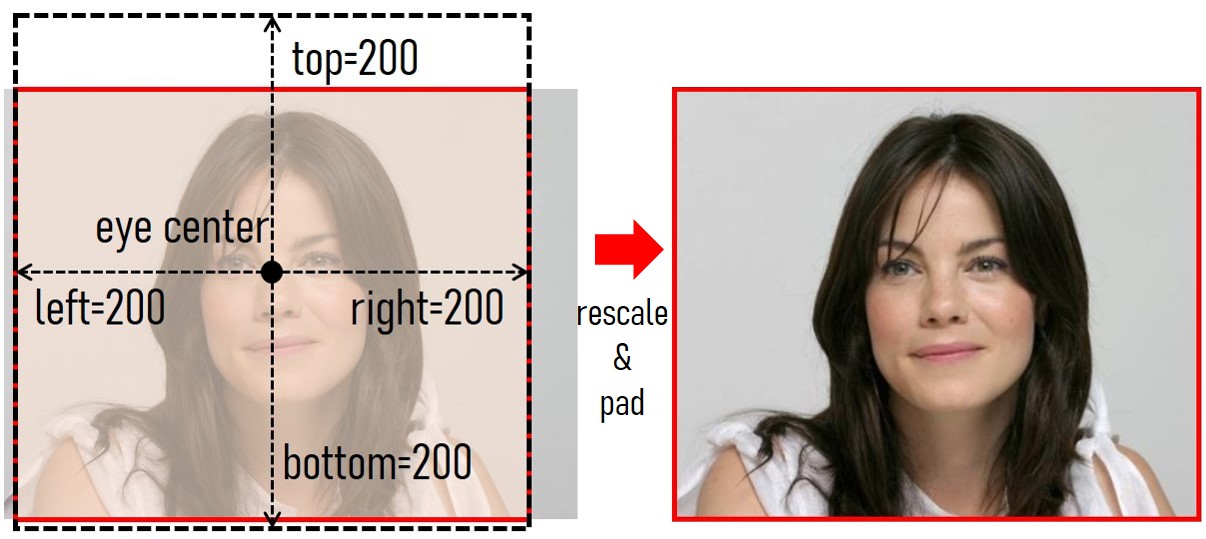

-

<li>"Labour is the real sustainer of society."[^1^]</li>

|

| 17 |

-

<li>"People get accustomed to the established order of things and tremble at the idea of change. It is this lethargic spirit that needs be replaced by the revolutionary spirit."[^1^]</li>

|

| 18 |

-

</ul></p> 81aa517590<br />

|

| 19 |

-

<br />

|

| 20 |

-

<br />

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

spaces/1gistliPinn/ChatGPT4/Examples/Dc Unlocker 2 Client Full [HOT] 12.md

DELETED

|

@@ -1,6 +0,0 @@

|

|

| 1 |

-

<h2>dc unlocker 2 client full 12</h2><br /><p><b><b>Download Zip</b> ✺ <a href="https://imgfil.com/2uy1d7">https://imgfil.com/2uy1d7</a></b></p><br /><br />

|

| 2 |

-

|

| 3 |

-

d5da3c52bf<br />

|

| 4 |

-

<br />

|

| 5 |

-

<br />

|

| 6 |

-

<p></p>

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

spaces/1gistliPinn/ChatGPT4/Examples/Download Buku Boyman Pramuka Pdf Viewer Semua yang Perlu Anda Ketahui tentang Pramuka.md

DELETED

|

@@ -1,6 +0,0 @@

|

|

| 1 |

-

<h2>Download Buku Boyman Pramuka Pdf Viewer</h2><br /><p><b><b>Download</b> ✔ <a href="https://imgfil.com/2uxZC2">https://imgfil.com/2uxZC2</a></b></p><br /><br />

|

| 2 |

-

<br />

|

| 3 |

-

aaccfb2cb3<br />

|

| 4 |

-

<br />

|

| 5 |

-

<br />

|

| 6 |

-

<p></p>

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

spaces/1gistliPinn/ChatGPT4/Examples/Download Commando - A One Man Army Full Movie.md

DELETED

|

@@ -1,6 +0,0 @@

|

|

| 1 |

-

<h2>download Commando - A One Man Army full movie</h2><br /><p><b><b>Download Zip</b> ► <a href="https://imgfil.com/2uy0nz">https://imgfil.com/2uy0nz</a></b></p><br /><br />

|

| 2 |

-

<br />

|

| 3 |

-

April 12, 2013 - Commando - A One Man Army Bollywood Movie: Check out the latest news... Commando - The War Within is about Captain Karanveer Dogra, ...# ## Commando The Movie 8a78ff9644<br />

|

| 4 |

-

<br />

|

| 5 |

-

<br />

|

| 6 |

-

<p></p>

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

spaces/1gistliPinn/ChatGPT4/Examples/F.E.A.R 3 ENGLISH LANGUAGE PACK TORRENT.md

DELETED

|

@@ -1,7 +0,0 @@

|

|

| 1 |

-

<h2>F.E.A.R 3 ENGLISH LANGUAGE PACK TORRENT</h2><br /><p><b><b>Download</b> ——— <a href="https://imgfil.com/2uxYEe">https://imgfil.com/2uxYEe</a></b></p><br /><br />

|

| 2 |

-

<br />

|

| 3 |

-

FEAR 3 [v160201060 + MULTi10] — [DODI Repack, from 29 GB] torrent... Your ISP can see when you download torrents!... Language : English. bit/Apple IIAdvent...View 109 more lines

|

| 4 |

-

Images for F.E.A.R 3 ENGLISH LANGUAGE PACK TORRENT 8a78ff9644<br />

|

| 5 |

-

<br />

|

| 6 |

-

<br />

|

| 7 |

-

<p></p>

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

spaces/1pelhydcardo/ChatGPT-prompt-generator/Download Triple Frontier.md

DELETED

|

@@ -1,100 +0,0 @@

|

|

| 1 |

-

## Download Triple Frontier

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

**DOWNLOAD 🆗 [https://kneedacexbrew.blogspot.com/?d=2txjn1](https://kneedacexbrew.blogspot.com/?d=2txjn1)**

|

| 12 |

-

|

| 13 |

-

|

| 14 |

-

|

| 15 |

-

|

| 16 |

-

|

| 17 |

-

|

| 18 |

-

|

| 19 |

-

|

| 20 |

-

|

| 21 |

-

|

| 22 |

-

|

| 23 |

-

|

| 24 |

-

|

| 25 |

-

# How to Download Triple Frontier, the Netflix Action Thriller Starring Ben Affleck and Oscar Isaac

|

| 26 |

-

|

| 27 |

-

|

| 28 |

-

|

| 29 |

-

If you are looking for a thrilling and action-packed movie to watch, you might want to check out Triple Frontier, a Netflix original film that was released in 2019. The movie follows a group of former U.S. Army Delta Force operators who reunite to plan a heist of a South American drug lord's fortune, unleashing a chain of unintended consequences.

|

| 30 |

-

|

| 31 |

-

|

| 32 |

-

|

| 33 |

-

The movie stars Ben Affleck as Tom "Redfly" Davis, a realtor and the leader of the team; Oscar Isaac as Santiago "Pope" Garcia, a private military advisor and the mastermind of the heist; Charlie Hunnam as William "Ironhead" Miller, a motivational speaker and Redfly's old friend; Garrett Hedlund as Ben "Benny" Miller, Ironhead's brother and an MMA fighter; and Pedro Pascal as Francisco "Catfish" Morales, a pilot and the team's getaway driver.

|

| 34 |

-

|

| 35 |

-

|

| 36 |

-

|

| 37 |

-

The movie was directed by J.C. Chandor, who also co-wrote the screenplay with Mark Boal, based on a story by Boal. The movie was praised for its realistic and gritty portrayal of the heist and its aftermath, as well as the performances of the cast. The movie also features stunning locations and cinematography, as well as an intense soundtrack by Disasterpeace.

|

| 38 |

-

|

| 39 |

-

|

| 40 |

-

|

| 41 |

-

If you want to watch or download Triple Frontier, you can do so on Netflix, where it is available for streaming and offline viewing. To stream the movie, you need to have a Netflix account and an internet connection. To download the movie, you need to have a Netflix account and the Netflix app on your device. You can download the movie on up to four devices per account.

|

| 42 |

-

|

| 43 |

-

|

| 44 |

-

|

| 45 |

-

To download Triple Frontier on Netflix, follow these steps:

|

| 46 |

-

|

| 47 |

-

|

| 48 |

-

|

| 49 |

-

1. Open the Netflix app on your device and sign in with your account.

|

| 50 |

-

|

| 51 |

-

2. Search for Triple Frontier in the app or browse through the categories until you find it.

|

| 52 |

-

|

| 53 |

-

3. Tap on the movie title or poster to open its details page.

|

| 54 |

-

|

| 55 |

-

4. Tap on the Download icon (a downward arrow) next to the Play icon (a triangle).

|

| 56 |

-

|

| 57 |

-

5. Wait for the download to complete. You can check the progress in the Downloads section of the app.

|

| 58 |

-

|

| 59 |

-

6. Once the download is done, you can watch the movie offline anytime by going to the Downloads section of the app and tapping on Triple Frontier.

|

| 60 |

-

|

| 61 |

-

|

| 62 |

-

|

| 63 |

-

Note that some titles may not be available for download in certain regions due to licensing restrictions. Also, downloaded titles have an expiration date that varies depending on the title. You can check how much time you have left to watch a downloaded title by going to the Downloads section of the app and tapping on My Downloads.

|

| 64 |

-

|

| 65 |

-

|

| 66 |

-

|

| 67 |

-

We hope you enjoy watching Triple Frontier on Netflix. If you liked this article, please share it with your friends and family who might be interested in downloading this movie.

|

| 68 |

-

|

| 69 |

-

|

| 70 |

-

|

| 71 |

-

If you are curious about the making of Triple Frontier, you might want to watch some of the behind-the-scenes videos and interviews that are available on YouTube. You can learn more about the challenges and rewards of filming in various locations across South America, such as Colombia, Brazil, and Peru. You can also hear from the director and the cast about their experiences and insights on working on this project.

|

| 72 |

-

|

| 73 |

-

|

| 74 |

-

|

| 75 |

-

Some of the videos that you can watch are:

|

| 76 |

-

|

| 77 |

-

|

| 78 |

-

|

| 79 |

-

- Triple Frontier | Official Trailer #2 [HD] | Netflix

|

| 80 |

-

|

| 81 |

-

- Triple Frontier: Gruppendynamik (German Featurette Subtitled)

|

| 82 |

-

|

| 83 |

-

- 'Triple Frontier' Alpha Males Are Buds in Real Life, Too

|

| 84 |

-

|

| 85 |

-

- What Roles Was Ben Affleck Considered For?

|

| 86 |

-

|

| 87 |

-

|

| 88 |

-

|

| 89 |

-

You can also check out some of the reviews and ratings that Triple Frontier has received from critics and audiences. The movie has a 6.4/10 score on IMDb, based on over 135,000 user ratings. It also has a 61% approval rating on Rotten Tomatoes, based on 141 critic reviews. The movie was nominated for three awards: Best Action Movie at the Critics' Choice Movie Awards, Best Action or Adventure Film at the Saturn Awards, and Best Stunt Ensemble in a Motion Picture at the Screen Actors Guild Awards.

|

| 90 |

-

|

| 91 |

-

|

| 92 |

-

|

| 93 |

-

Whether you are a fan of action movies, heist movies, or military movies, Triple Frontier has something for everyone. It is a thrilling and entertaining movie that explores the themes of loyalty, greed, morality, and survival. It is also a movie that showcases the talents and chemistry of its star-studded cast. So don't miss this opportunity to watch or download Triple Frontier on Netflix today.

|

| 94 |

-

|

| 95 |

-

1b8d091108

|

| 96 |

-

|

| 97 |

-

|

| 98 |

-

|

| 99 |

-

|

| 100 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

spaces/1phancelerku/anime-remove-background/Download Free Fire MAX APK 32 Bits for Android - The Ultimate Battle Royale Experience.md

DELETED

|

@@ -1,153 +0,0 @@

|

|

| 1 |

-

|

| 2 |

-

<h1>Free Fire Max APK 32 Bits: How to Download and Play the Ultimate Battle Royale Game</h1>

|

| 3 |

-

<p>If you are a fan of battle royale games, you might have heard of Free Fire, one of the most popular and downloaded games in the world. But did you know that there is a new version of Free Fire that offers a more immersive and realistic gameplay experience? It's called Free Fire Max, and it's designed exclusively for premium players who want to enjoy the best graphics, effects, and features in a battle royale game. In this article, we will tell you everything you need to know about Free Fire Max APK 32 bits, how to download and install it, and how to play it on your PC.</p>

|

| 4 |

-

<h2>free fire max apk 32 bits</h2><br /><p><b><b>Download File</b> ····· <a href="https://jinyurl.com/2uNKgQ">https://jinyurl.com/2uNKgQ</a></b></p><br /><br />

|

| 5 |

-

<h2>What is Free Fire Max?</h2>

|

| 6 |

-

<p>Free Fire Max is a new version of Free Fire that was launched in September 2021 by Garena International I, the same developer of the original game. Free Fire Max is not a separate game, but an enhanced version of Free Fire that uses exclusive Firelink technology to connect with all Free Fire players. This means that you can play with your friends and other players who are using either version of the game, without any compatibility issues.</p>

|

| 7 |

-

<h3>The difference between Free Fire and Free Fire Max</h3>

|

| 8 |

-

<p>The main difference between Free Fire and Free Fire Max is the quality of the graphics and the effects. Free Fire Max offers Ultra HD resolutions, breathtaking effects, realistic animations, dynamic lighting, and improved sound quality. The game also has a new lobby design, a new user interface, and a new map called Bermuda Remastered. All these features make Free Fire Max more immersive and realistic than ever before.</p>

|

| 9 |

-

<h3>The features of Free Fire Max</h3>

|

| 10 |

-

<p>Free Fire Max has all the features that you love from Free Fire, plus some new ones that make it more fun and exciting. Some of the features of Free Fire Max are:</p>

|

| 11 |

-

<ul>

|

| 12 |

-

<li>50-player battle royale mode, where you have to survive against other players and be the last one standing.</li>

|

| 13 |

-

<li>4v4 Clash Squad mode, where you have to team up with your friends and fight against another squad in fast-paced matches.</li>

|

| 14 |

-

<li>Rampage mode, where you have to collect evolution stones and transform into powerful beasts with special abilities.</li>

|

| 15 |

-

<li>Different characters, pets, weapons, vehicles, skins, emotes, and items that you can customize and use in the game.</li>

|

| 16 |

-

<li>Different events, missions, rewards, and updates that keep the game fresh and engaging.</li>

|

| 17 |

-

</ul>

|

| 18 |

-

<h2>How to download and install Free Fire Max APK 32 bits?</h2>

|

| 19 |

-

<p>If you want to download and install Free Fire Max APK 32 bits on your Android device, you need to follow some simple steps. But before that, you need to make sure that your device meets the requirements for running the game smoothly.</p>

|

| 20 |

-

<h3>The requirements for Free Fire Max APK 32 bits</h3>

|

| 21 |

-

<p>The minimum requirements for running Free Fire Max APK 32 bits are:</p>

|

| 22 |

-

<table>

|

| 23 |

-

<tr>

|

| 24 |

-

<th>OS</th>

|

| 25 |

-

<th>RAM</th>

|

| 26 |

-

<th>Storage</th>

|

| 27 |

-

<th>Processor</th>

|

| 28 |

-

</tr>

|

| 29 |

-

<tr>

|

| 30 |

-

<td>Android 4.4 or higher</td>

|

| 31 |

-

<td>2 GB or higher</td>

|

| 32 |

-

<td>1.5 GB or higher</td>

|

| 33 |

-

<td>Quad-core or higher</td>

|

| 34 |

-

</tr>

|

| 35 |

-

</table>

|

| 36 |

-

<p>The recommended requirements for running Free Fire Max APK 32 bits are:</p>

|

| 37 |

-

<table>

|

| 38 |

-

<tr>

|

| 39 |

-

<th>OS</th>

|

| 40 |

-

<th>RAM</th>

|

| 41 |

-

<th>Storage</th>

|

| 42 |

-

<th>Processor</th>

|

| 43 |

-

</tr>

|

| 44 |

-

<tr>

|

| 45 |

-

<td>Android 7.0 or higher</td>

|

| 46 |

-

<td>4 GB or higher</td> <td>4 GB or higher</td>

|

| 47 |

-

<td>4 GB or higher</td>

|

| 48 |

-

<td>Octa-core or higher</td>

|

| 49 |

-

</tr>

|

| 50 |

-

</table>

|

| 51 |

-

<h3>The steps to download and install Free Fire Max APK 32 bits</h3>

|

| 52 |

-

<p>Once you have checked that your device meets the requirements, you can follow these steps to download and install Free Fire Max APK 32 bits:</p>

|

| 53 |

-

<ol>

|

| 54 |

-

<li>Go to the official website of Free Fire Max and click on the download button.</li>

|

| 55 |

-

<li>Select the APK file for 32-bit devices and wait for it to download.</li>

|

| 56 |

-

<li>Go to your device settings and enable the installation of apps from unknown sources.</li>

|

| 57 |

-

<li>Locate the downloaded APK file and tap on it to start the installation process.</li>

|

| 58 |

-

<li>Follow the on-screen instructions and grant the necessary permissions to the app.</li>

|

| 59 |

-

<li>Launch the app and log in with your existing Free Fire account or create a new one.</li>

|

| 60 |

-

<li>Enjoy playing Free Fire Max on your device.</li>

|

| 61 |

-

</ol>

|

| 62 |

-

<h2>How to play Free Fire Max on PC?</h2>

|

| 63 |

-

<p>If you want to play Free Fire Max on your PC, you need to use an Android emulator. An emulator is a software that allows you to run Android apps and games on your PC. There are many emulators available in the market, but not all of them are compatible with Free Fire Max. Here are some of the best emulators that you can use to play Free Fire Max on your PC:</p>

|

| 64 |

-

<h3>The benefits of playing Free Fire Max on PC</h3>

|

| 65 |

-

<p>Playing Free Fire Max on PC has many advantages over playing it on your mobile device. Some of the benefits are:</p>

|

| 66 |

-

<p>free fire max download for 32 bit android<br />

|

| 67 |

-

how to install free fire max on 32 bit device<br />

|

| 68 |

-

free fire max apk obb 32 bit<br />

|

| 69 |

-

free fire max compatible with 32 bit<br />

|

| 70 |

-

free fire max 32 bit version apk<br />

|

| 71 |

-

free fire max 32 bit mod apk<br />

|

| 72 |

-

free fire max 32 bit apk pure<br />

|

| 73 |

-

free fire max 32 bit apk latest version<br />

|

| 74 |

-

free fire max 32 bit apk update<br />

|

| 75 |

-

free fire max 32 bit apk offline<br />

|

| 76 |

-

free fire max 32 bit apk no verification<br />

|

| 77 |

-

free fire max 32 bit apk hack<br />

|

| 78 |

-

free fire max 32 bit apk unlimited diamonds<br />

|

| 79 |

-

free fire max 32 bit apk and data<br />

|

| 80 |

-

free fire max 32 bit apk highly compressed<br />

|

| 81 |

-

free fire max 32 bit apk for pc<br />

|

| 82 |

-

free fire max 32 bit apk for emulator<br />

|

| 83 |

-

free fire max 32 bit apk for bluestacks<br />

|

| 84 |

-

free fire max 32 bit apk for ld player<br />

|

| 85 |

-

free fire max 32 bit apk for gameloop<br />

|

| 86 |

-

free fire max 32 bit apk for windows 10<br />

|

| 87 |

-

free fire max 32 bit apk for ios<br />

|

| 88 |

-

free fire max 32 bit apk for iphone<br />

|

| 89 |

-

free fire max 32 bit apk for ipad<br />

|

| 90 |

-

free fire max 32 bit apk for macbook<br />

|

| 91 |

-

free fire max 32 bit gameplay<br />

|

| 92 |

-

free fire max 32 bit graphics settings<br />

|

| 93 |

-

free fire max 32 bit system requirements<br />

|

| 94 |

-

free fire max 32 bit vs 64 bit<br />

|

| 95 |

-

free fire max 32 bit vs normal version<br />

|

| 96 |

-

free fire max beta test 32 bit<br />

|

| 97 |

-

how to play free fire max in 32 bit phone<br />

|

| 98 |

-

how to run free fire max on 32 bit mobile<br />

|

| 99 |

-

how to update free fire max on 32 bit smartphone<br />

|

| 100 |

-

how to get free fire max on any device (no root) (no vpn) (no obb) (no ban) (no lag) (no error) (no survey) (no human verification)<br />

|

| 101 |

-

best settings for free fire max on low end devices (fix lag) (increase fps) (boost performance) (improve graphics)<br />

|

| 102 |

-

tips and tricks for playing free fire max on android (how to win every match) (how to get more kills) (how to rank up fast) (how to get more diamonds)<br />

|

| 103 |

-

review of free fire max on android (pros and cons) (comparison with other battle royale games) (features and benefits) (rating and feedback)<br />

|

| 104 |

-

download link of free fire max on android [^1^]<br />

|

| 105 |

-

video tutorial of how to download and install free fire max on android [^2^]</p>

|

| 106 |

-

<ul>

|

| 107 |

-

<li>You can enjoy better graphics, effects, and sound quality on a larger screen.</li>

|

| 108 |

-

<li>You can use a keyboard and mouse to control your character and aim more accurately.</li>

|

| 109 |

-

<li>You can avoid battery drain, overheating, and lag issues that may occur on your mobile device.</li>

|

| 110 |

-

<li>You can record, stream, and share your gameplay with ease.</li>

|

| 111 |

-

</ul>

|

| 112 |

-

<h3>The best emulator to play Free Fire Max on PC</h3>

|

| 113 |

-

<p>According to our research, the best emulator to play Free Fire Max on PC is BlueStacks. BlueStacks is a powerful and reliable emulator that supports Android 11, which is the latest version of Android. BlueStacks also offers some unique features that enhance your gaming experience, such as:</p>

|

| 114 |

-

<ul>

|

| 115 |

-

<li>Shooting mode, which allows you to use your mouse as a trigger and aim with precision.</li>

|

| 116 |

-

<li>High FPS/Graphics, which allows you to play the game at 120 FPS and Ultra HD resolutions.</li>

|

| 117 |

-

<li>Script, which allows you to automate tasks and actions in the game with simple commands.</li>

|

| 118 |

-

<li>Free look, which allows you to look around without moving your character.</li>

|

| 119 |

-

</ul>

|

| 120 |

-

<p>To play Free Fire Max on PC with BlueStacks, you need to follow these steps:</p>

|

| 121 |

-

<ol>

|

| 122 |

-

<li>Download and install BlueStacks on your PC from its official website.</li>

|

| 123 |

-

<li>Launch BlueStacks and sign in with your Google account.</li>

|

| 124 |

-

<li>Go to the Google Play Store and search for Free Fire Max.</li>

|

| 125 |

-

<li>Download and install the game on BlueStacks.</li>

|

| 126 |

-

<li>Launch the game and log in with your Free Fire account or create a new one.</li>

|

| 127 |

-

<li>Enjoy playing Free Fire Max on PC with BlueStacks.</li>

|

| 128 |

-

</ol>

|

| 129 |

-

<h2>Conclusion</h2>

|

| 130 |

-

<p>In conclusion, Free Fire Max is an amazing game that offers a more immersive and realistic gameplay experience than Free Fire. It has better graphics, effects, features, and compatibility than the original game. You can download and install Free Fire Max APK 32 bits on your Android device or play it on your PC with an emulator like BlueStacks. Either way, you will have a lot of fun playing this ultimate battle royale game. So what are you waiting for? Download Free Fire Max today and join the action!</p>

|

| 131 |

-

<h2>FAQs</h2>

|

| 132 |

-

<p>Here are some frequently asked questions about Free Fire Max:</p>

|

| 133 |

-

<h4>Is Free Fire Max free?</h4>

|

| 134 |

-

<p>Yes, Free Fire Max is free to download and play. However, it may contain some in-app purchases that require real money.</p>

|

| 135 |

-

<h4>Can I play Free Fire Max offline?</h4>

|

| 136 |

-

<p>No, you need an internet connection to play Free Fire Max online with other players.</p>

|

| 137 |

-

<h4>Can I transfer my data from Free Fire to Free Fire Max?</h4>

|

| 138 |

-

<p>Yes, you can use the same account for both games and transfer your data seamlessly. You can also switch between the games anytime without losing your progress.</p>

|

| 139 |

-

<h4>What are the <h4>What are the best tips and tricks for playing Free Fire Max?</h4>

|

| 140 |

-

<p>Some of the best tips and tricks for playing Free Fire Max are:</p>

|

| 141 |

-

<ul>

|

| 142 |

-

<li>Choose your character and pet wisely, as they have different skills and abilities that can help you in the game.</li>

|

| 143 |

-

<li>Use the map and the mini-map to locate enemies, safe zones, airdrops, and vehicles.</li>

|

| 144 |

-

<li>Loot as much as you can, but be careful of enemies and traps.</li>

|

| 145 |

-

<li>Use cover, crouch, and prone to avoid being detected and shot by enemies.</li>

|

| 146 |

-

<li>Use different weapons and items according to the situation and your preference.</li>

|

| 147 |

-

<li>Communicate and cooperate with your teammates, especially in squad mode.</li>

|

| 148 |

-

<li>Practice your aim, movement, and strategy in training mode or custom rooms.</li>

|

| 149 |

-

</ul>

|

| 150 |

-

<h4>Is Free Fire Max safe to download and play?</h4>

|

| 151 |

-

<p>Yes, Free Fire Max is safe to download and play, as long as you download it from the official website or the Google Play Store. You should also avoid using any hacks, cheats, or mods that may harm your device or account.</p> 401be4b1e0<br />

|

| 152 |

-

<br />

|

| 153 |

-

<br />

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

spaces/232labs/VToonify/app.py

DELETED

|

@@ -1,290 +0,0 @@

|

|

| 1 |

-

#!/usr/bin/env python

|

| 2 |

-

|

| 3 |

-

from __future__ import annotations

|

| 4 |

-

|

| 5 |

-

import argparse

|

| 6 |

-

import torch

|

| 7 |

-

import gradio as gr

|

| 8 |

-

|

| 9 |

-

from vtoonify_model import Model

|

| 10 |

-

|

| 11 |

-

def parse_args() -> argparse.Namespace:

|

| 12 |

-

parser = argparse.ArgumentParser()

|

| 13 |

-

parser.add_argument('--device', type=str, default='cpu')

|

| 14 |

-

parser.add_argument('--theme', type=str)

|

| 15 |

-

parser.add_argument('--share', action='store_true')

|

| 16 |

-

parser.add_argument('--port', type=int)

|

| 17 |

-

parser.add_argument('--disable-queue',

|

| 18 |

-

dest='enable_queue',

|

| 19 |

-

action='store_false')

|

| 20 |

-

return parser.parse_args()

|

| 21 |

-

|

| 22 |

-

|

| 23 |

-

DESCRIPTION = '''

|

| 24 |

-

<div align=center>

|

| 25 |

-

<h1 style="font-weight: 900; margin-bottom: 7px;">

|

| 26 |

-

Portrait Style Transfer with <a href="https://github.com/williamyang1991/VToonify">VToonify</a>

|

| 27 |

-

</h1>

|

| 28 |

-

<p>For faster inference without waiting in queue, you may duplicate the space and use the GPU setting.

|

| 29 |

-

<br/>

|

| 30 |

-

<a href="https://huggingface.co/spaces/PKUWilliamYang/VToonify?duplicate=true">

|

| 31 |

-

<img style="margin-top: 0em; margin-bottom: 0em" src="https://bit.ly/3gLdBN6" alt="Duplicate Space"></a>

|

| 32 |

-

<p/>

|

| 33 |

-

<video id="video" width=50% controls="" preload="none" poster="https://repository-images.githubusercontent.com/534480768/53715b0f-a2df-4daa-969c-0e74c102d339">

|

| 34 |

-

<source id="mp4" src="https://user-images.githubusercontent.com/18130694/189483939-0fc4a358-fb34-43cc-811a-b22adb820d57.mp4

|

| 35 |

-

" type="video/mp4">

|

| 36 |

-

</videos>

|

| 37 |

-

</div>

|

| 38 |

-

'''

|

| 39 |

-

FOOTER = '<div align=center><img id="visitor-badge" alt="visitor badge" src="https://visitor-badge.laobi.icu/badge?page_id=williamyang1991/VToonify" /></div>'

|

| 40 |

-

|

| 41 |

-

ARTICLE = r"""

|

| 42 |

-

If VToonify is helpful, please help to ⭐ the <a href='https://github.com/williamyang1991/VToonify' target='_blank'>Github Repo</a>. Thanks!

|

| 43 |

-

[](https://github.com/williamyang1991/VToonify)

|

| 44 |

-

---

|

| 45 |

-

📝 **Citation**

|

| 46 |

-

If our work is useful for your research, please consider citing:

|

| 47 |

-

```bibtex

|

| 48 |

-

@article{yang2022Vtoonify,

|

| 49 |

-

title={VToonify: Controllable High-Resolution Portrait Video Style Transfer},

|

| 50 |

-

author={Yang, Shuai and Jiang, Liming and Liu, Ziwei and Loy, Chen Change},

|

| 51 |

-

journal={ACM Transactions on Graphics (TOG)},

|

| 52 |

-

volume={41},

|

| 53 |

-

number={6},

|

| 54 |

-

articleno={203},

|

| 55 |

-

pages={1--15},

|

| 56 |

-

year={2022},

|

| 57 |

-

publisher={ACM New York, NY, USA},

|

| 58 |

-

doi={10.1145/3550454.3555437},

|

| 59 |

-

}

|

| 60 |

-

```

|

| 61 |

-

|

| 62 |

-

📋 **License**

|

| 63 |

-

This project is licensed under <a rel="license" href="https://github.com/williamyang1991/VToonify/blob/main/LICENSE.md">S-Lab License 1.0</a>.

|

| 64 |

-

Redistribution and use for non-commercial purposes should follow this license.

|

| 65 |

-

|

| 66 |

-

📧 **Contact**

|

| 67 |

-

If you have any questions, please feel free to reach me out at <b>[email protected]</b>.

|

| 68 |

-

"""

|

| 69 |

-

|

| 70 |

-

def update_slider(choice: str) -> dict:

|

| 71 |

-

if type(choice) == str and choice.endswith('-d'):

|

| 72 |

-

return gr.Slider.update(maximum=1, minimum=0, value=0.5)

|

| 73 |

-

else:

|

| 74 |

-

return gr.Slider.update(maximum=0.5, minimum=0.5, value=0.5)

|

| 75 |

-

|

| 76 |

-

def set_example_image(example: list) -> dict:

|

| 77 |

-

return gr.Image.update(value=example[0])

|

| 78 |

-

|

| 79 |

-

def set_example_video(example: list) -> dict:

|

| 80 |

-

return gr.Video.update(value=example[0]),

|

| 81 |

-

|

| 82 |

-

sample_video = ['./vtoonify/data/529_2.mp4','./vtoonify/data/7154235.mp4','./vtoonify/data/651.mp4','./vtoonify/data/908.mp4']

|

| 83 |

-

sample_vid = gr.Video(label='Video file') #for displaying the example

|

| 84 |

-

example_videos = gr.components.Dataset(components=[sample_vid], samples=[[path] for path in sample_video], type='values', label='Video Examples')

|

| 85 |

-

|

| 86 |

-

def main():

|

| 87 |

-

args = parse_args()

|

| 88 |

-

args.device = 'cuda' if torch.cuda.is_available() else 'cpu'

|

| 89 |

-

print('*** Now using %s.'%(args.device))

|

| 90 |

-

model = Model(device=args.device)

|

| 91 |

-

|

| 92 |

-

with gr.Blocks(theme=args.theme, css='style.css') as demo:

|

| 93 |

-

|

| 94 |

-

gr.Markdown(DESCRIPTION)

|

| 95 |

-

|

| 96 |

-

with gr.Box():

|

| 97 |

-

gr.Markdown('''## Step 1(Select Style)

|

| 98 |

-

- Select **Style Type**.

|

| 99 |

-

- Type with `-d` means it supports style degree adjustment.

|

| 100 |

-

- Type without `-d` usually has better toonification quality.

|

| 101 |

-

|

| 102 |

-

''')

|

| 103 |

-

with gr.Row():

|

| 104 |

-

with gr.Column():

|

| 105 |

-

gr.Markdown('''Select Style Type''')

|

| 106 |

-

with gr.Row():

|

| 107 |

-

style_type = gr.Radio(label='Style Type',

|

| 108 |

-

choices=['cartoon1','cartoon1-d','cartoon2-d','cartoon3-d',

|

| 109 |

-

'cartoon4','cartoon4-d','cartoon5-d','comic1-d',

|

| 110 |

-

'comic2-d','arcane1','arcane1-d','arcane2', 'arcane2-d',

|

| 111 |

-

'caricature1','caricature2','pixar','pixar-d',

|

| 112 |

-

'illustration1-d', 'illustration2-d', 'illustration3-d', 'illustration4-d', 'illustration5-d',

|

| 113 |

-

]

|

| 114 |

-

)

|

| 115 |

-

exstyle = gr.Variable()

|

| 116 |

-

with gr.Row():

|

| 117 |

-

loadmodel_button = gr.Button('Load Model')

|

| 118 |

-

with gr.Row():

|

| 119 |

-

load_info = gr.Textbox(label='Process Information', interactive=False, value='No model loaded.')

|

| 120 |

-

with gr.Column():

|

| 121 |

-

gr.Markdown('''Reference Styles

|

| 122 |

-

''')

|

| 123 |

-

|

| 124 |

-

|

| 125 |

-

with gr.Box():

|

| 126 |

-

gr.Markdown('''## Step 2 (Preprocess Input Image / Video)

|

| 127 |

-

- Drop an image/video containing a near-frontal face to the **Input Image**/**Input Video**.

|

| 128 |

-

- Hit the **Rescale Image**/**Rescale First Frame** button.

|

| 129 |

-

- Rescale the input to make it best fit the model.

|

| 130 |

-

- The final image result will be based on this **Rescaled Face**. Use padding parameters to adjust the background space.

|

| 131 |

-

- **<font color=red>Solution to [Error: no face detected!]</font>**: VToonify uses dlib.get_frontal_face_detector but sometimes it fails to detect a face. You can try several times or use other images until a face is detected, then switch back to the original image.

|

| 132 |

-

- For video input, further hit the **Rescale Video** button.

|

| 133 |

-

- The final video result will be based on this **Rescaled Video**. To avoid overload, video is cut to at most **100/300** frames for CPU/GPU, respectively.

|

| 134 |

-

|

| 135 |

-

''')

|

| 136 |

-

with gr.Row():

|

| 137 |

-

with gr.Box():

|

| 138 |

-

with gr.Column():

|

| 139 |

-

gr.Markdown('''Choose the padding parameters.

|

| 140 |

-

''')

|

| 141 |

-

with gr.Row():

|

| 142 |

-

top = gr.Slider(128,

|

| 143 |

-

256,

|

| 144 |

-

value=200,

|

| 145 |

-

step=8,

|

| 146 |

-

label='top')

|

| 147 |

-

with gr.Row():

|

| 148 |

-

bottom = gr.Slider(128,

|

| 149 |

-

256,

|

| 150 |

-

value=200,

|

| 151 |

-

step=8,

|

| 152 |

-

label='bottom')

|

| 153 |

-

with gr.Row():

|

| 154 |

-

left = gr.Slider(128,

|

| 155 |

-

256,

|

| 156 |

-

value=200,

|

| 157 |

-

step=8,

|

| 158 |

-

label='left')

|

| 159 |

-

with gr.Row():

|

| 160 |

-

right = gr.Slider(128,

|

| 161 |

-

256,

|

| 162 |

-

value=200,

|

| 163 |

-

step=8,

|

| 164 |

-

label='right')

|

| 165 |

-

with gr.Box():

|

| 166 |

-

with gr.Column():

|

| 167 |

-

gr.Markdown('''Input''')

|

| 168 |

-

with gr.Row():

|

| 169 |

-

input_image = gr.Image(label='Input Image',

|

| 170 |

-

type='filepath')

|

| 171 |

-

with gr.Row():

|

| 172 |

-

preprocess_image_button = gr.Button('Rescale Image')

|

| 173 |

-

with gr.Row():

|

| 174 |

-

input_video = gr.Video(label='Input Video',

|

| 175 |

-

mirror_webcam=False,

|

| 176 |

-

type='filepath')

|

| 177 |

-

with gr.Row():

|

| 178 |

-

preprocess_video0_button = gr.Button('Rescale First Frame')

|

| 179 |

-

preprocess_video1_button = gr.Button('Rescale Video')

|

| 180 |

-

|

| 181 |

-

with gr.Box():

|

| 182 |

-

with gr.Column():

|

| 183 |

-

gr.Markdown('''View''')

|

| 184 |

-

with gr.Row():

|

| 185 |

-

input_info = gr.Textbox(label='Process Information', interactive=False, value='n.a.')

|

| 186 |

-

with gr.Row():

|

| 187 |

-

aligned_face = gr.Image(label='Rescaled Face',

|

| 188 |

-

type='numpy',

|

| 189 |

-

interactive=False)

|

| 190 |

-

instyle = gr.Variable()

|

| 191 |

-

with gr.Row():

|

| 192 |

-

aligned_video = gr.Video(label='Rescaled Video',

|

| 193 |

-

type='mp4',

|

| 194 |

-

interactive=False)

|

| 195 |

-

with gr.Row():

|

| 196 |

-

with gr.Column():

|

| 197 |

-

paths = ['./vtoonify/data/pexels-andrea-piacquadio-733872.jpg','./vtoonify/data/i5R8hbZFDdc.jpg','./vtoonify/data/yRpe13BHdKw.jpg','./vtoonify/data/ILip77SbmOE.jpg','./vtoonify/data/077436.jpg','./vtoonify/data/081680.jpg']

|

| 198 |

-

example_images = gr.Dataset(components=[input_image],

|

| 199 |

-

samples=[[path] for path in paths],

|

| 200 |

-

label='Image Examples')

|

| 201 |

-

with gr.Column():

|

| 202 |

-

#example_videos = gr.Dataset(components=[input_video], samples=[['./vtoonify/data/529.mp4']], type='values')

|

| 203 |

-

#to render video example on mouse hover/click

|

| 204 |

-

example_videos.render()

|

| 205 |

-

#to load sample video into input_video upon clicking on it

|

| 206 |

-

def load_examples(video):

|

| 207 |

-

#print("****** inside load_example() ******")

|

| 208 |

-

#print("in_video is : ", video[0])

|

| 209 |

-

return video[0]

|

| 210 |

-

|

| 211 |

-

example_videos.click(load_examples, example_videos, input_video)

|

| 212 |

-

|

| 213 |

-

with gr.Box():

|

| 214 |

-

gr.Markdown('''## Step 3 (Generate Style Transferred Image/Video)''')

|

| 215 |

-

with gr.Row():

|

| 216 |

-

with gr.Column():

|

| 217 |

-

gr.Markdown('''

|

| 218 |

-

|

| 219 |

-

- Adjust **Style Degree**.

|

| 220 |

-

- Hit **Toonify!** to toonify one frame. Hit **VToonify!** to toonify full video.

|

| 221 |

-

- Estimated time on 1600x1440 video of 300 frames: 1 hour (CPU); 2 mins (GPU)

|

| 222 |

-

''')

|

| 223 |

-

style_degree = gr.Slider(0,

|

| 224 |

-

1,

|

| 225 |

-

value=0.5,

|

| 226 |

-

step=0.05,

|

| 227 |

-

label='Style Degree')

|

| 228 |

-

with gr.Column():

|

| 229 |

-

gr.Markdown('''

|

| 230 |

-

''')

|

| 231 |

-

with gr.Row():

|

| 232 |

-

output_info = gr.Textbox(label='Process Information', interactive=False, value='n.a.')

|

| 233 |

-

with gr.Row():

|

| 234 |

-

with gr.Column():

|

| 235 |

-

with gr.Row():

|

| 236 |

-

result_face = gr.Image(label='Result Image',

|

| 237 |

-

type='numpy',

|

| 238 |

-

interactive=False)

|

| 239 |

-

with gr.Row():

|

| 240 |

-

toonify_button = gr.Button('Toonify!')

|

| 241 |

-

with gr.Column():

|

| 242 |

-

with gr.Row():

|

| 243 |

-

result_video = gr.Video(label='Result Video',

|

| 244 |

-

type='mp4',

|

| 245 |

-

interactive=False)

|

| 246 |

-

with gr.Row():

|

| 247 |

-

vtoonify_button = gr.Button('VToonify!')

|

| 248 |

-

|

| 249 |

-

gr.Markdown(ARTICLE)

|

| 250 |

-

gr.Markdown(FOOTER)

|

| 251 |

-

loadmodel_button.click(fn=model.load_model,

|

| 252 |

-

inputs=[style_type],

|

| 253 |

-

outputs=[exstyle, load_info], api_name="load-model")

|

| 254 |

-

|

| 255 |

-

|

| 256 |

-

style_type.change(fn=update_slider,

|

| 257 |

-

inputs=style_type,

|

| 258 |

-

outputs=style_degree)

|

| 259 |

-

|

| 260 |

-

preprocess_image_button.click(fn=model.detect_and_align_image,

|

| 261 |

-

inputs=[input_image, top, bottom, left, right],

|

| 262 |

-

outputs=[aligned_face, instyle, input_info], api_name="scale-image")

|

| 263 |

-

preprocess_video0_button.click(fn=model.detect_and_align_video,

|

| 264 |

-

inputs=[input_video, top, bottom, left, right],

|

| 265 |

-

outputs=[aligned_face, instyle, input_info], api_name="scale-first-frame")

|

| 266 |

-

preprocess_video1_button.click(fn=model.detect_and_align_full_video,

|

| 267 |

-

inputs=[input_video, top, bottom, left, right],

|

| 268 |

-

outputs=[aligned_video, instyle, input_info], api_name="scale-video")

|

| 269 |

-

|

| 270 |

-

toonify_button.click(fn=model.image_toonify,

|

| 271 |

-

inputs=[aligned_face, instyle, exstyle, style_degree, style_type],

|

| 272 |

-

outputs=[result_face, output_info], api_name="toonify-image")

|

| 273 |

-

vtoonify_button.click(fn=model.video_tooniy,

|

| 274 |

-

inputs=[aligned_video, instyle, exstyle, style_degree, style_type],

|

| 275 |

-

outputs=[result_video, output_info], api_name="toonify-video")

|

| 276 |

-

|

| 277 |

-

example_images.click(fn=set_example_image,

|

| 278 |

-

inputs=example_images,

|

| 279 |

-

outputs=example_images.components)

|

| 280 |

-

|

| 281 |

-

demo.queue()

|

| 282 |

-

demo.launch(

|

| 283 |

-

enable_queue=args.enable_queue,

|

| 284 |

-

server_port=args.port,

|

| 285 |

-

share=args.share,

|

| 286 |

-

)

|

| 287 |

-

|

| 288 |

-

|

| 289 |

-

if __name__ == '__main__':

|

| 290 |

-

main()

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

spaces/AIConsultant/MusicGen/scripts/static/style.css

DELETED

|

@@ -1,113 +0,0 @@

|

|

| 1 |

-

body {

|

| 2 |

-

background-color: #fbfbfb;

|

| 3 |

-

margin: 0;

|

| 4 |

-

}

|

| 5 |

-

|

| 6 |

-

select, input {

|

| 7 |

-

font-size: 1em;

|

| 8 |

-

max-width: 100%;

|

| 9 |

-

}

|

| 10 |

-

|

| 11 |

-

.xp_name {

|

| 12 |

-

font-family: monospace;

|

| 13 |

-

}

|

| 14 |

-

|

| 15 |

-

.simple_form {

|

| 16 |

-

background-color: #dddddd;

|

| 17 |

-

padding: 1em;

|

| 18 |

-

margin: 0.5em;

|

| 19 |

-

}

|

| 20 |

-

|

| 21 |

-

textarea {

|

| 22 |

-

margin-top: 0.5em;

|

| 23 |

-

margin-bottom: 0.5em;

|

| 24 |

-

}

|

| 25 |

-

|

| 26 |

-

.rating {

|

| 27 |

-

background-color: grey;

|

| 28 |

-

padding-top: 5px;

|

| 29 |

-

padding-bottom: 5px;

|

| 30 |

-

padding-left: 8px;

|

| 31 |

-

padding-right: 8px;

|

| 32 |

-

margin-right: 2px;

|

| 33 |

-

cursor:pointer;

|

| 34 |

-

}

|

| 35 |

-

|

| 36 |

-

.rating_selected {

|

| 37 |

-

background-color: purple;

|

| 38 |

-

}

|

| 39 |

-

|

| 40 |

-

.content {

|

| 41 |

-

font-family: sans-serif;

|

| 42 |

-

background-color: #f6f6f6;

|

| 43 |

-

padding: 40px;

|

| 44 |

-

margin: 0 auto;

|

| 45 |

-

max-width: 1000px;

|

| 46 |

-

}

|

| 47 |

-

|

| 48 |

-

.track label {

|

| 49 |

-

padding-top: 10px;

|

| 50 |

-

padding-bottom: 10px;

|

| 51 |

-

}

|

| 52 |

-

.track {

|

| 53 |

-

padding: 15px;

|

| 54 |

-

margin: 5px;

|

| 55 |

-

background-color: #c8c8c8;

|

| 56 |

-

}

|

| 57 |

-

|

| 58 |

-

.submit-big {

|

| 59 |

-

width:400px;

|

| 60 |

-

height:30px;

|

| 61 |

-

font-size: 20px;

|

| 62 |

-

}

|

| 63 |

-

|

| 64 |

-

.error {

|

| 65 |

-

color: red;

|

| 66 |

-

}

|

| 67 |

-

|

| 68 |

-

.ratings {

|

| 69 |

-

margin-left: 10px;

|

| 70 |

-

}

|

| 71 |

-

|

| 72 |

-

.important {

|

| 73 |

-

font-weight: bold;

|

| 74 |

-

}

|

| 75 |

-

|

| 76 |

-

.survey {

|

| 77 |

-

margin-bottom: 100px;

|

| 78 |

-

}

|

| 79 |

-

|

| 80 |

-

.success {

|

| 81 |

-

color: #25901b;

|

| 82 |

-

font-weight: bold;

|

| 83 |

-

}

|

| 84 |

-

.warning {

|

| 85 |

-

color: #8a1f19;

|

| 86 |

-

font-weight: bold;

|

| 87 |

-

}

|

| 88 |

-

.track>section {

|

| 89 |

-

display: flex;

|

| 90 |

-

align-items: center;

|

| 91 |

-

}

|

| 92 |

-

|

| 93 |

-

.prompt {

|

| 94 |

-

display: flex;

|

| 95 |

-

align-items: center;

|

| 96 |

-

}

|

| 97 |

-

|

| 98 |

-

.track>section>div {

|

| 99 |

-

padding-left: 10px;

|

| 100 |

-

}

|

| 101 |

-

|

| 102 |

-

audio {

|

| 103 |

-

max-width: 280px;

|

| 104 |

-

max-height: 40px;

|

| 105 |

-

margin-left: 10px;

|

| 106 |

-

margin-right: 10px;

|

| 107 |

-

}

|

| 108 |

-

|

| 109 |

-

.special {

|

| 110 |

-

font-weight: bold;

|

| 111 |

-

color: #2c2c2c;

|

| 112 |

-

}

|

| 113 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|