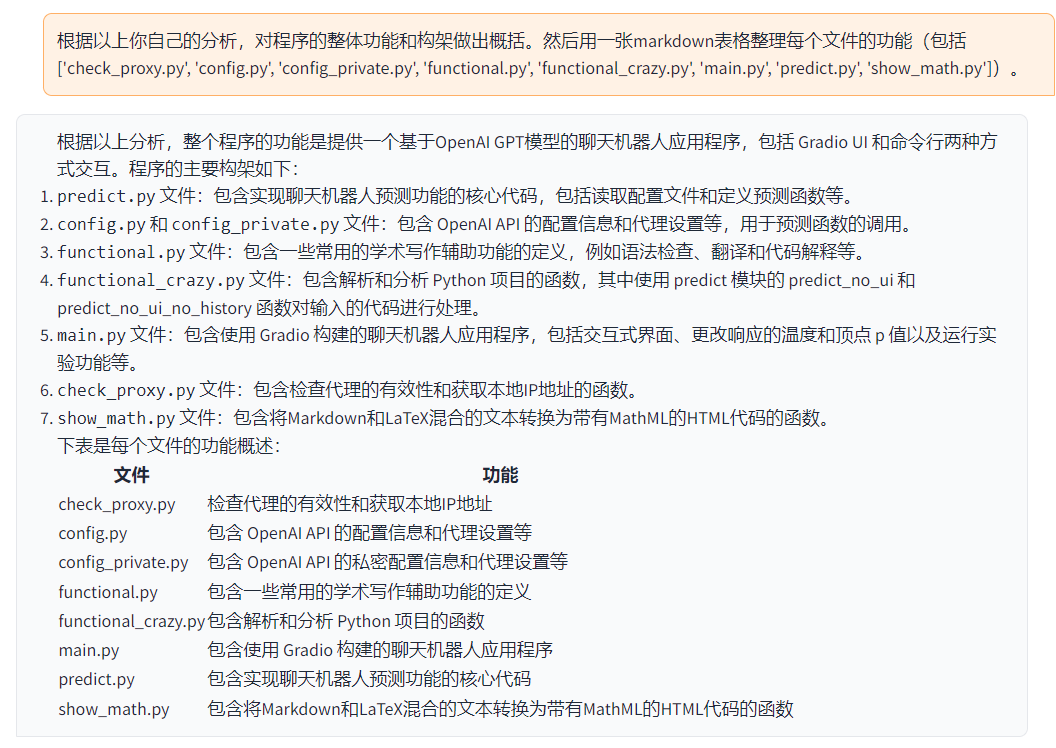
-
-
\ No newline at end of file
diff --git a/spaces/4Taps/SadTalker/src/face3d/util/__init__.py b/spaces/4Taps/SadTalker/src/face3d/util/__init__.py
deleted file mode 100644
index 04eecb58b62f8c9d11d17606c6241d278a48b9b9..0000000000000000000000000000000000000000
--- a/spaces/4Taps/SadTalker/src/face3d/util/__init__.py
+++ /dev/null
@@ -1,3 +0,0 @@
-"""This package includes a miscellaneous collection of useful helper functions."""
-from src.face3d.util import *
-
diff --git a/spaces/801artistry/RVC801/lib/uvr5_pack/lib_v5/layers_33966KB.py b/spaces/801artistry/RVC801/lib/uvr5_pack/lib_v5/layers_33966KB.py
deleted file mode 100644
index a38b7bb3ae3136b07eadfc2db445fef4c2de186b..0000000000000000000000000000000000000000
--- a/spaces/801artistry/RVC801/lib/uvr5_pack/lib_v5/layers_33966KB.py
+++ /dev/null
@@ -1,126 +0,0 @@
-import torch
-from torch import nn
-import torch.nn.functional as F
-
-from . import spec_utils
-
-
-class Conv2DBNActiv(nn.Module):
-    def __init__(self, nin, nout, ksize=3, stride=1, pad=1, dilation=1, activ=nn.ReLU):
-        super(Conv2DBNActiv, self).__init__()
-        self.conv = nn.Sequential(
-            nn.Conv2d(
-                nin,
-                nout,
-                kernel_size=ksize,
-                stride=stride,
-                padding=pad,
-                dilation=dilation,
-                bias=False,
-            ),
-            nn.BatchNorm2d(nout),
-            activ(),
-        )
-
-    def __call__(self, x):
-        return self.conv(x)
-
-
-class SeperableConv2DBNActiv(nn.Module):
-    def __init__(self, nin, nout, ksize=3, stride=1, pad=1, dilation=1, activ=nn.ReLU):
-        super(SeperableConv2DBNActiv, self).__init__()
-        self.conv = nn.Sequential(
-            nn.Conv2d(
-                nin,
-                nin,
-                kernel_size=ksize,
-                stride=stride,
-                padding=pad,
-                dilation=dilation,
-                groups=nin,
-                bias=False,
-            ),
-            nn.Conv2d(nin, nout, kernel_size=1, bias=False),
-            nn.BatchNorm2d(nout),
-            activ(),
-        )
-
-    def __call__(self, x):
-        return self.conv(x)
-
-
-class Encoder(nn.Module):
-    def __init__(self, nin, nout, ksize=3, stride=1, pad=1, activ=nn.LeakyReLU):
-        super(Encoder, self).__init__()
-        self.conv1 = Conv2DBNActiv(nin, nout, ksize, 1, pad, activ=activ)
-        self.conv2 = Conv2DBNActiv(nout, nout, ksize, stride, pad, activ=activ)
-
-    def __call__(self, x):
-        skip = self.conv1(x)
-        h = self.conv2(skip)
-
-        return h, skip
-
-
-class Decoder(nn.Module):
-    def __init__(
-        self, nin, nout, ksize=3, stride=1, pad=1, activ=nn.ReLU, dropout=False
-    ):
-        super(Decoder, self).__init__()
-        self.conv = Conv2DBNActiv(nin, nout, ksize, 1, pad, activ=activ)
-        self.dropout = nn.Dropout2d(0.1) if dropout else None
-
-    def __call__(self, x, skip=None):
-        x = F.interpolate(x, scale_factor=2, mode="bilinear", align_corners=True)
-        if skip is not None:
-            skip = spec_utils.crop_center(skip, x)
-            x = torch.cat([x, skip], dim=1)
-        h = self.conv(x)
-
-        if self.dropout is not None:
-            h = self.dropout(h)
-
-        return h
-
-
-class ASPPModule(nn.Module):
-    def __init__(self, nin, nout, dilations=(4, 8, 16, 32, 64), activ=nn.ReLU):
-        super(ASPPModule, self).__init__()
-        self.conv1 = nn.Sequential(
-            nn.AdaptiveAvgPool2d((1, None)),
-            Conv2DBNActiv(nin, nin, 1, 1, 0, activ=activ),
-        )
-        self.conv2 = Conv2DBNActiv(nin, nin, 1, 1, 0, activ=activ)
-        self.conv3 = SeperableConv2DBNActiv(
-            nin, nin, 3, 1, dilations[0], dilations[0], activ=activ
-        )
-        self.conv4 = SeperableConv2DBNActiv(
-            nin, nin, 3, 1, dilations[1], dilations[1], activ=activ
-        )
-        self.conv5 = SeperableConv2DBNActiv(
-            nin, nin, 3, 1, dilations[2], dilations[2], activ=activ
-        )
-        self.conv6 = SeperableConv2DBNActiv(
-            nin, nin, 3, 1, dilations[2], dilations[2], activ=activ
-        )
-        self.conv7 = SeperableConv2DBNActiv(
-            nin, nin, 3, 1, dilations[2], dilations[2], activ=activ
-        )
-        self.bottleneck = nn.Sequential(
-            Conv2DBNActiv(nin * 7, nout, 1, 1, 0, activ=activ), nn.Dropout2d(0.1)
-        )
-
-    def forward(self, x):
-        _, _, h, w = x.size()
-        feat1 = F.interpolate(
-            self.conv1(x), size=(h, w), mode="bilinear", align_corners=True
-        )
-        feat2 = self.conv2(x)
-        feat3 = self.conv3(x)
-        feat4 = self.conv4(x)
-        feat5 = self.conv5(x)
-        feat6 = self.conv6(x)
-        feat7 = self.conv7(x)
-        out = torch.cat((feat1, feat2, feat3, feat4, feat5, feat6, feat7), dim=1)
-        bottle = self.bottleneck(out)
-        return bottle
diff --git a/spaces/AIConsultant/MusicGen/audiocraft/modules/chroma.py b/spaces/AIConsultant/MusicGen/audiocraft/modules/chroma.py
deleted file mode 100644
index e84fb66b4a4aaefb0b3ccac8a9a44c3b20e48f61..0000000000000000000000000000000000000000
--- a/spaces/AIConsultant/MusicGen/audiocraft/modules/chroma.py
+++ /dev/null
@@ -1,66 +0,0 @@
-# Copyright (c) Meta Platforms, Inc. and affiliates.
-# All rights reserved.
-#
-# This source code is licensed under the license found in the
-# LICENSE file in the root directory of this source tree.
-import typing as tp
-
-from einops import rearrange
-from librosa import filters
-import torch
-from torch import nn
-import torch.nn.functional as F
-import torchaudio
-
-
-class ChromaExtractor(nn.Module):
-    """Chroma extraction and quantization.
-
-    Args:
-        sample_rate (int): Sample rate for the chroma extraction.
-        n_chroma (int): Number of chroma bins for the chroma extraction.
-        radix2_exp (int): Size of stft window for the chroma extraction (power of 2, e.g. 12 -> 2^12).
-        nfft (int, optional): Number of FFT.
-        winlen (int, optional): Window length.
-        winhop (int, optional): Window hop size.
-        argmax (bool, optional): Whether to use argmax. Defaults to False.
-        norm (float, optional): Norm for chroma normalization. Defaults to inf.
-    """
-    def __init__(self, sample_rate: int, n_chroma: int = 12, radix2_exp: int = 12, nfft: tp.Optional[int] = None,
-                 winlen: tp.Optional[int] = None, winhop: tp.Optional[int] = None, argmax: bool = False,
-                 norm: float = torch.inf):
-        super().__init__()
-        self.winlen = winlen or 2 ** radix2_exp
-        self.nfft = nfft or self.winlen
-        self.winhop = winhop or (self.winlen // 4)
-        self.sample_rate = sample_rate
-        self.n_chroma = n_chroma
-        self.norm = norm
-        self.argmax = argmax
-        self.register_buffer('fbanks', torch.from_numpy(filters.chroma(sr=sample_rate, n_fft=self.nfft, tuning=0,
-                                                                       n_chroma=self.n_chroma)), persistent=False)
-        self.spec = torchaudio.transforms.Spectrogram(n_fft=self.nfft, win_length=self.winlen,
-                                                      hop_length=self.winhop, power=2, center=True,
-                                                      pad=0, normalized=True)
-
-    def forward(self, wav: torch.Tensor) -> torch.Tensor:
-        T = wav.shape[-1]
-        # in case we are getting a wav that was dropped out (nullified)
-        # from the conditioner, make sure wav length is no less that nfft
-        if T < self.nfft:
-            pad = self.nfft - T
-            r = 0 if pad % 2 == 0 else 1
-            wav = F.pad(wav, (pad // 2, pad // 2 + r), 'constant', 0)
-            assert wav.shape[-1] == self.nfft, f"expected len {self.nfft} but got {wav.shape[-1]}"
-
-        spec = self.spec(wav).squeeze(1)
-        raw_chroma = torch.einsum('cf,...ft->...ct', self.fbanks, spec)
-        norm_chroma = torch.nn.functional.normalize(raw_chroma, p=self.norm, dim=-2, eps=1e-6)
-        norm_chroma = rearrange(norm_chroma, 'b d t -> b t d')
-
-        if self.argmax:
-            idx = norm_chroma.argmax(-1, keepdim=True)
-            norm_chroma[:] = 0
-            norm_chroma.scatter_(dim=-1, index=idx, value=1)
-
-        return norm_chroma
diff --git a/spaces/AIGC-Audio/AudioGPT/text_to_audio/Make_An_Audio/ldm/modules/discriminator/model.py b/spaces/AIGC-Audio/AudioGPT/text_to_audio/Make_An_Audio/ldm/modules/discriminator/model.py
deleted file mode 100644
index 5263368a5e74d9d07840399469ca12a54e7fecbc..0000000000000000000000000000000000000000
--- a/spaces/AIGC-Audio/AudioGPT/text_to_audio/Make_An_Audio/ldm/modules/discriminator/model.py
+++ /dev/null
@@ -1,295 +0,0 @@
-import functools
-import torch.nn as nn
-
-
-class ActNorm(nn.Module):
-    def __init__(self, num_features, logdet=False, affine=True,
-                 allow_reverse_init=False):
-        assert affine
-        super().__init__()
-        self.logdet = logdet
-        self.loc = nn.Parameter(torch.zeros(1, num_features, 1, 1))
-        self.scale = nn.Parameter(torch.ones(1, num_features, 1, 1))
-        self.allow_reverse_init = allow_reverse_init
-
-        self.register_buffer('initialized', torch.tensor(0, dtype=torch.uint8))
-
-    def initialize(self, input):
-        with torch.no_grad():
-            flatten = input.permute(1, 0, 2, 3).contiguous().view(input.shape[1], -1)
-            mean = (
-                flatten.mean(1)
-                .unsqueeze(1)
-                .unsqueeze(2)
-                .unsqueeze(3)
-                .permute(1, 0, 2, 3)
-            )
-            std = (
-                flatten.std(1)
-                .unsqueeze(1)
-                .unsqueeze(2)
-                .unsqueeze(3)
-                .permute(1, 0, 2, 3)
-            )
-
-            self.loc.data.copy_(-mean)
-            self.scale.data.copy_(1 / (std + 1e-6))
-
-    def forward(self, input, reverse=False):
-        if reverse:
-            return self.reverse(input)
-        if len(input.shape) == 2:
-            input = input[:, :, None, None]
-            squeeze = True
-        else:
-            squeeze = False
-
-        _, _, height, width = input.shape
-
-        if self.training and self.initialized.item() == 0:
-            self.initialize(input)
-            self.initialized.fill_(1)
-
-        h = self.scale * (input + self.loc)
-
-        if squeeze:
-            h = h.squeeze(-1).squeeze(-1)
-
-        if self.logdet:
-            log_abs = torch.log(torch.abs(self.scale))
-            logdet = height * width * torch.sum(log_abs)
-            logdet = logdet * torch.ones(input.shape[0]).to(input)
-            return h, logdet
-
-        return h
-
-    def reverse(self, output):
-        if self.training and self.initialized.item() == 0:
-            if not self.allow_reverse_init:
-                raise RuntimeError(
-                    "Initializing ActNorm in reverse direction is "
-                    "disabled by default. Use allow_reverse_init=True to enable."
-                )
-            else:
-                self.initialize(output)
-                self.initialized.fill_(1)
-
-        if len(output.shape) == 2:
-            output = output[:, :, None, None]
-            squeeze = True
-        else:
-            squeeze = False
-
-        h = output / self.scale - self.loc
-
-        if squeeze:
-            h = h.squeeze(-1).squeeze(-1)
-        return h
-
-def weights_init(m):
-    classname = m.__class__.__name__
-    if classname.find('Conv') != -1:
-        nn.init.normal_(m.weight.data, 0.0, 0.02)
-    elif classname.find('BatchNorm') != -1:
-        nn.init.normal_(m.weight.data, 1.0, 0.02)
-        nn.init.constant_(m.bias.data, 0)
-
-
-class NLayerDiscriminator(nn.Module):
-    """Defines a PatchGAN discriminator as in Pix2Pix
-        --> see https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/models/networks.py
-    """
-    def __init__(self, input_nc=3, ndf=64, n_layers=3, use_actnorm=False):
-        """Construct a PatchGAN discriminator
-        Parameters:
-            input_nc (int)  -- the number of channels in input images
-            ndf (int)       -- the number of filters in the last conv layer
-            n_layers (int)  -- the number of conv layers in the discriminator
-            norm_layer      -- normalization layer
-        """
-        super(NLayerDiscriminator, self).__init__()
-        if not use_actnorm:
-            norm_layer = nn.BatchNorm2d
-        else:
-            norm_layer = ActNorm
-        if type(norm_layer) == functools.partial:  # no need to use bias as BatchNorm2d has affine parameters
-            use_bias = norm_layer.func != nn.BatchNorm2d
-        else:
-            use_bias = norm_layer != nn.BatchNorm2d
-
-        kw = 4
-        padw = 1
-        sequence = [nn.Conv2d(input_nc, ndf, kernel_size=kw, stride=2, padding=padw), nn.LeakyReLU(0.2, True)]
-        nf_mult = 1
-        nf_mult_prev = 1
-        for n in range(1, n_layers):  # gradually increase the number of filters
-            nf_mult_prev = nf_mult
-            nf_mult = min(2 ** n, 8)
-            sequence += [
-                nn.Conv2d(ndf * nf_mult_prev, ndf * nf_mult, kernel_size=kw, stride=2, padding=padw, bias=use_bias),
-                norm_layer(ndf * nf_mult),
-                nn.LeakyReLU(0.2, True)
-            ]
-
-        nf_mult_prev = nf_mult
-        nf_mult = min(2 ** n_layers, 8)
-        sequence += [
-            nn.Conv2d(ndf * nf_mult_prev, ndf * nf_mult, kernel_size=kw, stride=1, padding=padw, bias=use_bias),
-            norm_layer(ndf * nf_mult),
-            nn.LeakyReLU(0.2, True)
-        ]
-        # output 1 channel prediction map
-        sequence += [nn.Conv2d(ndf * nf_mult, 1, kernel_size=kw, stride=1, padding=padw)]
-        self.main = nn.Sequential(*sequence)
-
-    def forward(self, input):
-        """Standard forward."""
-        return self.main(input)
-
-class NLayerDiscriminator1dFeats(NLayerDiscriminator):
-    """Defines a PatchGAN discriminator as in Pix2Pix
-        --> see https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/models/networks.py
-    """
-    def __init__(self, input_nc=3, ndf=64, n_layers=3, use_actnorm=False):
-        """Construct a PatchGAN discriminator
-        Parameters:
-            input_nc (int)  -- the number of channels in input feats
-            ndf (int)       -- the number of filters in the last conv layer
-            n_layers (int)  -- the number of conv layers in the discriminator
-            norm_layer      -- normalization layer
-        """
-        super().__init__(input_nc=input_nc, ndf=64, n_layers=n_layers, use_actnorm=use_actnorm)
-
-        if not use_actnorm:
-            norm_layer = nn.BatchNorm1d
-        else:
-            norm_layer = ActNorm
-        if type(norm_layer) == functools.partial:  # no need to use bias as BatchNorm has affine parameters
-            use_bias = norm_layer.func != nn.BatchNorm1d
-        else:
-            use_bias = norm_layer != nn.BatchNorm1d
-
-        kw = 4
-        padw = 1
-        sequence = [nn.Conv1d(input_nc, input_nc//2, kernel_size=kw, stride=2, padding=padw), nn.LeakyReLU(0.2, True)]
-        nf_mult = input_nc//2
-        nf_mult_prev = 1
-        for n in range(1, n_layers):  # gradually decrease the number of filters
-            nf_mult_prev = nf_mult
-            nf_mult = max(nf_mult_prev // (2 ** n), 8)
-            sequence += [
-                nn.Conv1d(nf_mult_prev, nf_mult, kernel_size=kw, stride=2, padding=padw, bias=use_bias),
-                norm_layer(nf_mult),
-                nn.LeakyReLU(0.2, True)
-            ]
-
-        nf_mult_prev = nf_mult
-        nf_mult = max(nf_mult_prev // (2 ** n), 8)
-        sequence += [
-            nn.Conv1d(nf_mult_prev, nf_mult, kernel_size=kw, stride=1, padding=padw, bias=use_bias),
-            norm_layer(nf_mult),
-            nn.LeakyReLU(0.2, True)
-        ]
-        nf_mult_prev = nf_mult
-        nf_mult = max(nf_mult_prev // (2 ** n), 8)
-        sequence += [
-            nn.Conv1d(nf_mult_prev, nf_mult, kernel_size=kw, stride=1, padding=padw, bias=use_bias),
-            norm_layer(nf_mult),
-            nn.LeakyReLU(0.2, True)
-        ]
-        # output 1 channel prediction map
-        sequence += [nn.Conv1d(nf_mult, 1, kernel_size=kw, stride=1, padding=padw)]
-        self.main = nn.Sequential(*sequence)
-
-
-class NLayerDiscriminator1dSpecs(NLayerDiscriminator):
-    """Defines a PatchGAN discriminator as in Pix2Pix
-        --> see https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/models/networks.py
-    """
-    def __init__(self, input_nc=80, ndf=64, n_layers=3, use_actnorm=False):
-        """Construct a PatchGAN discriminator
-        Parameters:
-            input_nc (int)  -- the number of channels in input specs
-            ndf (int)       -- the number of filters in the last conv layer
-            n_layers (int)  -- the number of conv layers in the discriminator
-            norm_layer      -- normalization layer
-        """
-        super().__init__(input_nc=input_nc, ndf=64, n_layers=n_layers, use_actnorm=use_actnorm)
-
-        if not use_actnorm:
-            norm_layer = nn.BatchNorm1d
-        else:
-            norm_layer = ActNorm
-        if type(norm_layer) == functools.partial:  # no need to use bias as BatchNorm has affine parameters
-            use_bias = norm_layer.func != nn.BatchNorm1d
-        else:
-            use_bias = norm_layer != nn.BatchNorm1d
-
-        kw = 4
-        padw = 1
-        sequence = [nn.Conv1d(input_nc, ndf, kernel_size=kw, stride=2, padding=padw), nn.LeakyReLU(0.2, True)]
-        nf_mult = 1
-        nf_mult_prev = 1
-        for n in range(1, n_layers):  # gradually decrease the number of filters
-            nf_mult_prev = nf_mult
-            nf_mult = min(2 ** n, 8)
-            sequence += [
-                nn.Conv1d(ndf * nf_mult_prev, ndf * nf_mult, kernel_size=kw, stride=2, padding=padw, bias=use_bias),
-                norm_layer(ndf * nf_mult),
-                nn.LeakyReLU(0.2, True)
-            ]
-
-        nf_mult_prev = nf_mult
-        nf_mult = min(2 ** n_layers, 8)
-        sequence += [
-            nn.Conv1d(ndf * nf_mult_prev, ndf * nf_mult, kernel_size=kw, stride=1, padding=padw, bias=use_bias),
-            norm_layer(ndf * nf_mult),
-            nn.LeakyReLU(0.2, True)
-        ]
-        # output 1 channel prediction map
-        sequence += [nn.Conv1d(ndf * nf_mult, 1, kernel_size=kw, stride=1, padding=padw)]
-        self.main = nn.Sequential(*sequence)
-
-    def forward(self, input):
-        """Standard forward."""
-        # (B, C, L)
-        input = input.squeeze(1)
-        input = self.main(input)
-        return input
-
-
-if __name__ == '__main__':
-    import torch
-
-    ## FEATURES
-    disc_in_channels = 2048
-    disc_num_layers = 2
-    use_actnorm = False
-    disc_ndf = 64
-    discriminator = NLayerDiscriminator1dFeats(input_nc=disc_in_channels, n_layers=disc_num_layers,
-                                               use_actnorm=use_actnorm, ndf=disc_ndf).apply(weights_init)
-    inputs = torch.rand((6, 2048, 212))
-    outputs = discriminator(inputs)
-    print(outputs.shape)
-
-    ## AUDIO
-    disc_in_channels = 1
-    disc_num_layers = 3
-    use_actnorm = False
-    disc_ndf = 64
-    discriminator = NLayerDiscriminator(input_nc=disc_in_channels, n_layers=disc_num_layers,
-                                        use_actnorm=use_actnorm, ndf=disc_ndf).apply(weights_init)
-    inputs = torch.rand((6, 1, 80, 848))
-    outputs = discriminator(inputs)
-    print(outputs.shape)
-
-    ## IMAGE
-    disc_in_channels = 3
-    disc_num_layers = 3
-    use_actnorm = False
-    disc_ndf = 64
-    discriminator = NLayerDiscriminator(input_nc=disc_in_channels, n_layers=disc_num_layers,
-                                        use_actnorm=use_actnorm, ndf=disc_ndf).apply(weights_init)
-    inputs = torch.rand((6, 3, 256, 256))
-    outputs = discriminator(inputs)
-    print(outputs.shape)
diff --git a/spaces/AIGC-Audio/Make_An_Audio_inpaint/ldm/modules/encoders/open_clap/pretrained.py b/spaces/AIGC-Audio/Make_An_Audio_inpaint/ldm/modules/encoders/open_clap/pretrained.py
deleted file mode 100644
index 723619a9fd511cf8619def49c4631ec701891b93..0000000000000000000000000000000000000000
--- a/spaces/AIGC-Audio/Make_An_Audio_inpaint/ldm/modules/encoders/open_clap/pretrained.py
+++ /dev/null
@@ -1,147 +0,0 @@
-import hashlib
-import os
-import urllib
-import warnings
-
-from tqdm import tqdm
-
-_RN50 = dict(
-    openai="https://openaipublic.azureedge.net/clip/models/afeb0e10f9e5a86da6080e35cf09123aca3b358a0c3e3b6c78a7b63bc04b6762/RN50.pt",
-    yfcc15m="https://github.com/mlfoundations/open_clip/releases/download/v0.2-weights/rn50-quickgelu-yfcc15m-455df137.pt",
-    cc12m="https://github.com/mlfoundations/open_clip/releases/download/v0.2-weights/rn50-quickgelu-cc12m-f000538c.pt"
-)
-
-_RN50_quickgelu = dict(
-    openai="https://openaipublic.azureedge.net/clip/models/afeb0e10f9e5a86da6080e35cf09123aca3b358a0c3e3b6c78a7b63bc04b6762/RN50.pt",
-    yfcc15m="https://github.com/mlfoundations/open_clip/releases/download/v0.2-weights/rn50-quickgelu-yfcc15m-455df137.pt",
-    cc12m="https://github.com/mlfoundations/open_clip/releases/download/v0.2-weights/rn50-quickgelu-cc12m-f000538c.pt"
-)
-
-_RN101 = dict(
-    openai="https://openaipublic.azureedge.net/clip/models/8fa8567bab74a42d41c5915025a8e4538c3bdbe8804a470a72f30b0d94fab599/RN101.pt",
-    yfcc15m="https://github.com/mlfoundations/open_clip/releases/download/v0.2-weights/rn101-quickgelu-yfcc15m-3e04b30e.pt"
-)
-
-_RN101_quickgelu = dict(
-    openai="https://openaipublic.azureedge.net/clip/models/8fa8567bab74a42d41c5915025a8e4538c3bdbe8804a470a72f30b0d94fab599/RN101.pt",
-    yfcc15m="https://github.com/mlfoundations/open_clip/releases/download/v0.2-weights/rn101-quickgelu-yfcc15m-3e04b30e.pt"
-)
-
-_RN50x4 = dict(
-    openai="https://openaipublic.azureedge.net/clip/models/7e526bd135e493cef0776de27d5f42653e6b4c8bf9e0f653bb11773263205fdd/RN50x4.pt",
-)
-
-_RN50x16 = dict(
-    openai="https://openaipublic.azureedge.net/clip/models/52378b407f34354e150460fe41077663dd5b39c54cd0bfd2b27167a4a06ec9aa/RN50x16.pt",
-)
-
-_RN50x64 = dict(
-    openai="https://openaipublic.azureedge.net/clip/models/be1cfb55d75a9666199fb2206c106743da0f6468c9d327f3e0d0a543a9919d9c/RN50x64.pt",
-)
-
-_VITB32 = dict(
-    openai="https://openaipublic.azureedge.net/clip/models/40d365715913c9da98579312b702a82c18be219cc2a73407c4526f58eba950af/ViT-B-32.pt",
-    laion400m_e31="https://github.com/mlfoundations/open_clip/releases/download/v0.2-weights/vit_b_32-quickgelu-laion400m_e31-d867053b.pt",
-    laion400m_e32="https://github.com/mlfoundations/open_clip/releases/download/v0.2-weights/vit_b_32-quickgelu-laion400m_e32-46683a32.pt",
-    laion400m_avg="https://github.com/mlfoundations/open_clip/releases/download/v0.2-weights/vit_b_32-quickgelu-laion400m_avg-8a00ab3c.pt",
-)
-
-_VITB32_quickgelu = dict(
-    openai="https://openaipublic.azureedge.net/clip/models/40d365715913c9da98579312b702a82c18be219cc2a73407c4526f58eba950af/ViT-B-32.pt",
-    laion400m_e31="https://github.com/mlfoundations/open_clip/releases/download/v0.2-weights/vit_b_32-quickgelu-laion400m_e31-d867053b.pt",
-    laion400m_e32="https://github.com/mlfoundations/open_clip/releases/download/v0.2-weights/vit_b_32-quickgelu-laion400m_e32-46683a32.pt",
-    laion400m_avg="https://github.com/mlfoundations/open_clip/releases/download/v0.2-weights/vit_b_32-quickgelu-laion400m_avg-8a00ab3c.pt",
-)
-
-_VITB16 = dict(
-    openai="https://openaipublic.azureedge.net/clip/models/5806e77cd80f8b59890b7e101eabd078d9fb84e6937f9e85e4ecb61988df416f/ViT-B-16.pt",
-)
-
-_VITL14 = dict(
-    openai="https://openaipublic.azureedge.net/clip/models/b8cca3fd41ae0c99ba7e8951adf17d267cdb84cd88be6f7c2e0eca1737a03836/ViT-L-14.pt",
-)
-
-_PRETRAINED = {
-    "RN50": _RN50,
-    "RN50-quickgelu": _RN50_quickgelu,
-    "RN101": _RN101,
-    "RN101-quickgelu": _RN101_quickgelu,
-    "RN50x4": _RN50x4,
-    "RN50x16": _RN50x16,
-    "ViT-B-32": _VITB32,
-    "ViT-B-32-quickgelu": _VITB32_quickgelu,
-    "ViT-B-16": _VITB16,
-    "ViT-L-14": _VITL14,
-}
-
-
-def list_pretrained(as_str: bool = False):
-    """ returns list of pretrained models
-    Returns a tuple (model_name, pretrain_tag) by default or 'name:tag' if as_str == True
-    """
-    return [':'.join([k, t]) if as_str else (k, t) for k in _PRETRAINED.keys() for t in _PRETRAINED[k].keys()]
-
-
-def list_pretrained_tag_models(tag: str):
-    """ return all models having the specified pretrain tag """
-    models = []
-    for k in _PRETRAINED.keys():
-        if tag in _PRETRAINED[k]:
-            models.append(k)
-    return models
-
-
-def list_pretrained_model_tags(model: str):
-    """ return all pretrain tags for the specified model architecture """
-    tags = []
-    if model in _PRETRAINED:
-        tags.extend(_PRETRAINED[model].keys())
-    return tags
-
-
-def get_pretrained_url(model: str, tag: str):
-    if model not in _PRETRAINED:
-        return ''
-    model_pretrained = _PRETRAINED[model]
-    if tag not in model_pretrained:
-        return ''
-    return model_pretrained[tag]
-
-
-def download_pretrained(url: str, root: str = os.path.expanduser("~/.cache/clip")):
-    os.makedirs(root, exist_ok=True)
-    filename = os.path.basename(url)
-
-    if 'openaipublic' in url:
-        expected_sha256 = url.split("/")[-2]
-    else:
-        expected_sha256 = ''
-
-    download_target = os.path.join(root, filename)
-
-    if os.path.exists(download_target) and not os.path.isfile(download_target):
-        raise RuntimeError(f"{download_target} exists and is not a regular file")
-
-    if os.path.isfile(download_target):
-        if expected_sha256:
-            if hashlib.sha256(open(download_target, "rb").read()).hexdigest() == expected_sha256:
-                return download_target
-            else:
-                warnings.warn(f"{download_target} exists, but the SHA256 checksum does not match; re-downloading the file")
-        else:
-            return download_target
-
-    with urllib.request.urlopen(url) as source, open(download_target, "wb") as output:
-        with tqdm(total=int(source.info().get("Content-Length")), ncols=80, unit='iB', unit_scale=True) as loop:
-            while True:
-                buffer = source.read(8192)
-                if not buffer:
-                    break
-
-                output.write(buffer)
-                loop.update(len(buffer))
-
-    if expected_sha256 and hashlib.sha256(open(download_target, "rb").read()).hexdigest() != expected_sha256:
-        raise RuntimeError(f"Model has been downloaded but the SHA256 checksum does not not match")
-
-    return download_target
diff --git a/spaces/AgentVerse/agentVerse/agentverse/agentverse.py b/spaces/AgentVerse/agentVerse/agentverse/agentverse.py
deleted file mode 100644
index 8b1505075ccd942f0859b779eff6b6e3a2915aa1..0000000000000000000000000000000000000000
--- a/spaces/AgentVerse/agentVerse/agentverse/agentverse.py
+++ /dev/null
@@ -1,65 +0,0 @@
-import asyncio
-import logging
-from typing import List
-
-# from agentverse.agents import Agent
-from agentverse.agents.conversation_agent import BaseAgent
-from agentverse.environments import BaseEnvironment
-from agentverse.initialization import load_agent, load_environment, prepare_task_config
-
-logging.basicConfig(
-    format="%(asctime)s - %(levelname)s - %(name)s - %(message)s",
-    datefmt="%m/%d/%Y %H:%M:%S",
-    level=logging.INFO,
-)
-
-openai_logger = logging.getLogger("openai")
-openai_logger.setLevel(logging.WARNING)
-
-
-class AgentVerse:
-    def __init__(self, agents: List[BaseAgent], environment: BaseEnvironment):
-        self.agents = agents
-        self.environment = environment
-
-    @classmethod
-    def from_task(cls, task: str, tasks_dir: str):
-        """Build an AgentVerse from a task name.
-        The task name should correspond to a directory in `tasks` directory.
-        Then this method will load the configuration from the yaml file in that directory.
-        """
-        # Prepare the config of the task
-        task_config = prepare_task_config(task, tasks_dir)
-
-        # Build the agents
-        agents = []
-        for agent_configs in task_config["agents"]:
-            agent = load_agent(agent_configs)
-            agents.append(agent)
-
-        # Build the environment
-        env_config = task_config["environment"]
-        env_config["agents"] = agents
-        environment = load_environment(env_config)
-
-        return cls(agents, environment)
-
-    def run(self):
-        """Run the environment from scratch until it is done."""
-        self.environment.reset()
-        while not self.environment.is_done():
-            asyncio.run(self.environment.step())
-
-    def reset(self):
-        self.environment.reset()
-        for agent in self.agents:
-            agent.reset()
-
-    def next(self, *args, **kwargs):
-        """Run the environment for one step and return the return message."""
-        return_message = asyncio.run(self.environment.step(*args, **kwargs))
-        return return_message
-
-    def update_state(self, *args, **kwargs):
-        """Run the environment for one step and return the return message."""
-        self.environment.update_state(*args, **kwargs)
diff --git a/spaces/AgentVerse/agentVerse/agentverse/tasksolving.py b/spaces/AgentVerse/agentVerse/agentverse/tasksolving.py
deleted file mode 100644
index 2bd2bd9b7793d21de6cc4f0089c33eaed955e721..0000000000000000000000000000000000000000
--- a/spaces/AgentVerse/agentVerse/agentverse/tasksolving.py
+++ /dev/null
@@ -1,91 +0,0 @@
-import asyncio
-import os
-import copy
-
-import logging
-
-from agentverse.environments.tasksolving_env.basic import BasicEnvironment
-from agentverse.initialization import load_agent, load_environment, prepare_task_config
-from agentverse.utils import AGENT_TYPES
-
-
-openai_logger = logging.getLogger("openai")
-openai_logger.setLevel(logging.WARNING)
-
-
-class TaskSolving:
-    environment: BasicEnvironment
-    task: str = ""
-    logs: list = []
-
-    def __init__(self, environment: BasicEnvironment, task: str = ""):
-        self.environment = environment
-        self.task = task
-
-    @classmethod
-    def from_task(cls, task: str, tasks_dir: str):
-        """Build an AgentVerse from a task name.
-        The task name should correspond to a directory in `tasks` directory.
-        Then this method will load the configuration from the yaml file in that directory.
-        """
-        # Prepare the config of the task
-        task_config = prepare_task_config(task, tasks_dir)
-
-        # Build the environment
-        env_config = task_config["environment"]
-
-        # Build agents for all pipeline (task)
-        agents = {}
-        for i, agent_config in enumerate(task_config["agents"]):
-            agent_type = AGENT_TYPES(i)
-            if i == 2 and agent_config.get("agent_type", "") == "critic":
-                agent = load_agent(agent_config)
-                agents[agent_type] = [
-                    copy.deepcopy(agent)
-                    for _ in range(task_config.get("cnt_agents", 1) - 1)
-                ]
-            else:
-                agents[agent_type] = load_agent(agent_config)
-
-        env_config["agents"] = agents
-
-        env_config["task_description"] = task_config.get("task_description", "")
-        env_config["max_rounds"] = task_config.get("max_rounds", 3)
-
-        environment: BasicEnvironment = load_environment(env_config)
-
-        return cls(environment=environment, task=task)
-
-    def run(self):
-        """Run the environment from scratch until it is done."""
-        self.environment.reset()
-        self.logs = []
-        advice = "No advice yet."
-        previous_plan = "No solution yet."
-        while not self.environment.is_done():
-            result, advice, previous_plan, logs, success = asyncio.run(
-                self.environment.step(advice, previous_plan)
-            )
-            self.logs += logs
-        self.environment.report_metrics()
-        self.save_result(previous_plan, result, self.environment.get_spend())
-        return previous_plan, result, self.logs
-
-    def singleagent_thinking(self, preliminary_solution, advice) -> str:
-        preliminary_solution = self.environment.solve(
-            former_solution=preliminary_solution,
-            critic_opinions=[(self.environment.evaluator, advice)],
-        )
-        return preliminary_solution
-
-    def reset(self):
-        self.environment.reset()
-
-    def save_result(self, plan: str, result: str, spend: float):
-        """Save the result to the result file"""
-        result_file_path = "./results/" + self.task + ".txt"
-        os.makedirs(os.path.dirname(result_file_path), exist_ok=True)
-        with open(result_file_path, "w") as f:
-            f.write("[Final Plan]\n" + plan + "\n\n")
-            f.write("[Result]\n" + result)
-            f.write(f"[Spent]\n${spend}")
diff --git a/spaces/AgentVerse/agentVerse/ui/src/phaser3-rex-plugins/templates/ui/namevaluelabel/Factory.js b/spaces/AgentVerse/agentVerse/ui/src/phaser3-rex-plugins/templates/ui/namevaluelabel/Factory.js
deleted file mode 100644
index db094ab8b8ad86b74fcc5419879e69ef4e599031..0000000000000000000000000000000000000000
--- a/spaces/AgentVerse/agentVerse/ui/src/phaser3-rex-plugins/templates/ui/namevaluelabel/Factory.js
+++ /dev/null
@@ -1,13 +0,0 @@
-import NameValueLabel from './NameValueLabel.js';
-import ObjectFactory from '../ObjectFactory.js';
-import SetValue from '../../../plugins/utils/object/SetValue.js';
-
-ObjectFactory.register('nameValueLabel', function (config) {
-    var gameObject = new NameValueLabel(this.scene, config);
-    this.scene.add.existing(gameObject);
-    return gameObject;
-});
-
-SetValue(window, 'RexPlugins.UI.NameValueLabel', NameValueLabel);
-
-export default NameValueLabel;
\ No newline at end of file
diff --git a/spaces/AkitoP/umamusume_bert_vits2/text/cleaner.py b/spaces/AkitoP/umamusume_bert_vits2/text/cleaner.py
deleted file mode 100644
index 3ba3739816aabbe16663b68c74fcda0588c14bab..0000000000000000000000000000000000000000
--- a/spaces/AkitoP/umamusume_bert_vits2/text/cleaner.py
+++ /dev/null
@@ -1,28 +0,0 @@
-from text import chinese, japanese, cleaned_text_to_sequence
-
-
-language_module_map = {"ZH": chinese, "JP": japanese}
-
-
-def clean_text(text, language):
-    language_module = language_module_map[language]
-    norm_text = language_module.text_normalize(text)
-    phones, tones, word2ph = language_module.g2p(norm_text)
-    return norm_text, phones, tones, word2ph
-
-
-def clean_text_bert(text, language):
-    language_module = language_module_map[language]
-    norm_text = language_module.text_normalize(text)
-    phones, tones, word2ph = language_module.g2p(norm_text)
-    bert = language_module.get_bert_feature(norm_text, word2ph)
-    return phones, tones, bert
-
-
-def text_to_sequence(text, language):
-    norm_text, phones, tones, word2ph = clean_text(text, language)
-    return cleaned_text_to_sequence(phones, tones, language)
-
-
-if __name__ == "__main__":
-    pass
diff --git a/spaces/AlekseyKorshuk/model-evaluation/tabs/arena_battle.py b/spaces/AlekseyKorshuk/model-evaluation/tabs/arena_battle.py
deleted file mode 100644
index b5996af803e55759018000c19a35eb940dde2a79..0000000000000000000000000000000000000000
--- a/spaces/AlekseyKorshuk/model-evaluation/tabs/arena_battle.py
+++ /dev/null
@@ -1,260 +0,0 @@
-import time
-
-import gradio as gr
-import random
-from conversation import Conversation
-from utils import get_matchmaking
-
-
-def get_tab_arena_battle(download_bot_config, get_bot_profile, model_mapping, client):
-    gr.Markdown("""
-    # ⚔️ Chatbot Arena (battle) ⚔️
-    ## Rules
-    * Chat with two anonymous models side-by-side and vote for which one is better!
-    * You can do multiple rounds of conversations before voting or vote for each message.
-    * The names of the models will be revealed of the top after your voted and pressed "Show models".
-    * Click “Restart” to start a new round with new models.
-    """)
-    default_bot_id = "_bot_e21de304-6151-4a04-b025-4c553ae8cbca"
-    bot_config = download_bot_config(default_bot_id)
-    user_state = gr.State(
-        bot_config
-    )
-    with gr.Row():
-        bot_id = gr.Textbox(label="Chai bot ID", value=default_bot_id, interactive=True)
-        reload_bot_button = gr.Button("Reload bot")
-    bot_profile = gr.HTML(get_bot_profile(bot_config))
-    with gr.Accordion("Bot config:", open=False):
-        bot_config_text = gr.Markdown(f"# Memory\n{bot_config['memory']}\n# Prompt\n{bot_config['prompt']}\n")
-
-    with gr.Row():
-        values = list(model_mapping.keys())
-        first_message = (None, bot_config["firstMessage"])
-        height = 450
-        model_a_value, model_b_value = get_matchmaking(client, values, is_anonymous=True)
-        with gr.Column():
-            model_a = gr.Textbox(value=model_a_value, label="Model A", interactive=False, visible=False)
-            chatbot_a = gr.Chatbot([first_message])
-            chatbot_a.style(height=height)
-        with gr.Column():
-            model_b = gr.Textbox(value=model_b_value, label="Model B", interactive=False, visible=False)
-            chatbot_b = gr.Chatbot([first_message])
-            chatbot_b.style(height=height)
-
-    with gr.Row():
-        with gr.Column(scale=3):
-            msg = gr.Textbox(show_label=False, value="Hi there!", interactive=True)
-        with gr.Column(scale=3):
-            send = gr.Button("Send")
-    with gr.Row():
-        vote_a = gr.Button("👈 A is better", interactive=False)
-        vote_b = gr.Button("👉 B is better", interactive=False)
-        vote_tie = gr.Button("🤝 Tie", interactive=False)
-        vote_bad = gr.Button("💩 Both are bad", interactive=False)
-        show_models_button = gr.Button("Show models", interactive=False)
-    with gr.Row():
-        regenerate = gr.Button("Regenerate", interactive=False)
-        clear = gr.Button("Restart")
-
-    with gr.Accordion("Generation parameters for model A", open=False):
-        model = model_mapping[model_a.value]
-        temperature_model_a = gr.Slider(minimum=0.0, maximum=1.0, value=model.generation_params["temperature"],
-                                        interactive=True, label="Temperature")
-        repetition_penalty_model_a = gr.Slider(minimum=0.0, maximum=2.0,
-                                               value=model.generation_params["repetition_penalty"],
-                                               interactive=True, label="Repetition penalty")
-        max_new_tokens_model_a = gr.Slider(minimum=1, maximum=512, value=model.generation_params["max_new_tokens"],
-                                           interactive=True, label="Max new tokens")
-        top_k_model_a = gr.Slider(minimum=1, maximum=100, value=model.generation_params["top_k"],
-                                  interactive=True, label="Top-K")
-        top_p_model_a = gr.Slider(minimum=0.0, maximum=1.0, value=model.generation_params["top_p"],
-                                  interactive=True, label="Top-P")
-
-    with gr.Accordion("Generation parameters for model B", open=False):
-        model = model_mapping[model_b.value]
-        temperature_model_b = gr.Slider(minimum=0.0, maximum=1.0, value=model.generation_params["temperature"],
-                                        interactive=True, label="Temperature")
-        repetition_penalty_model_b = gr.Slider(minimum=0.0, maximum=2.0,
-                                               value=model.generation_params["repetition_penalty"],
-                                               interactive=True, label="Repetition penalty")
-        max_new_tokens_model_b = gr.Slider(minimum=1, maximum=512, value=model.generation_params["max_new_tokens"],
-                                           interactive=True, label="Max new tokens")
-        top_k_model_b = gr.Slider(minimum=1, maximum=100, value=model.generation_params["top_k"],
-                                  interactive=True, label="Top-K")
-        top_p_model_b = gr.Slider(minimum=0.0, maximum=1.0, value=model.generation_params["top_p"],
-                                  interactive=True, label="Top-P")
-
-    def clear_chat(user_state):
-        return "", [(None, user_state["firstMessage"])], [(None, user_state["firstMessage"])]
-
-    def reload_bot(bot_id):
-        bot_config = download_bot_config(bot_id)
-        bot_profile = get_bot_profile(bot_config)
-        return bot_profile, [(None, bot_config["firstMessage"])], [(None, bot_config[
-            "firstMessage"])], bot_config, f"# Memory\n{bot_config['memory']}\n# Prompt\n{bot_config['prompt']}"
-
-    def get_generation_args(model_tag):
- model = model_mapping[model_tag] - return ( - model.generation_params["temperature"], - model.generation_params["repetition_penalty"], - model.generation_params["max_new_tokens"], - model.generation_params["top_k"], - model.generation_params["top_p"], - ) - - def respond(message, chat_history, user_state, model_tag, - temperature, repetition_penalty, max_new_tokens, top_k, top_p): - custom_generation_params = { - 'temperature': temperature, - 'repetition_penalty': repetition_penalty, - 'max_new_tokens': max_new_tokens, - 'top_k': top_k, - 'top_p': top_p, - } - conv = Conversation(user_state) - conv.set_chat_history(chat_history) - conv.add_user_message(message) - model = model_mapping[model_tag] - bot_message = model.generate_response(conv, custom_generation_params) - chat_history.append( - (message, bot_message) - ) - return "", chat_history - - def record_vote(user_state, vote, - chat_history_a, model_tag_a, - chat_history_b, model_tag_b): - conv_a = Conversation(user_state) - conv_a.set_chat_history(chat_history_a) - conv_b = Conversation(user_state) - conv_b.set_chat_history(chat_history_b) - if "A is better" in vote: - vote_str = "model_a" - elif "B is better" in vote: - vote_str = "model_b" - elif "Tie" in vote: - vote_str = "tie" - else: - vote_str = "tie (bothbad)" - row = { - "timestamp": time.time(), - "bot_id": user_state["bot_id"], - "vote": vote_str, - "model_a": model_tag_a, - "model_b": model_tag_b, - "is_anonymous": int(True) - } - sheet = client.open("Chat Arena").sheet1 - num_rows = len(sheet.get_all_records()) - sheet.insert_row(list(row.values()), index=num_rows + 2) - return gr.Button.update(interactive=True) - - def regenerate_response(chat_history, user_state, model_tag, - temperature, repetition_penalty, max_new_tokens, top_k, top_p): - if len(chat_history) == 1: - return "", chat_history - custom_generation_params = { - 'temperature': temperature, - 'repetition_penalty': repetition_penalty, - 'max_new_tokens': max_new_tokens, - 'top_k': 
top_k, - 'top_p': top_p, - } - last_row = chat_history.pop(-1) - chat_history.append((last_row[0], None)) - model = model_mapping[model_tag] - conv = Conversation(user_state) - conv.set_chat_history(chat_history) - bot_message = model.generate_response(conv, custom_generation_params) - chat_history[-1] = (last_row[0], bot_message) - return "", chat_history - - def disable_voting(): - return [gr.Button.update(interactive=False)] * 4 - - def enable_voting(): - return [gr.Button.update(interactive=True)] * 4 - - def show_models(): - return [gr.Textbox.update(visible=True)] * 2 - - def hide_models(): - model_a_value, model_b_value = get_matchmaking(client, values, is_anonymous=True) - return [gr.Textbox.update(visible=False, value=model_a_value), - gr.Textbox.update(visible=False, value=model_b_value)] - - def disable_send(): - return [gr.Button.update(interactive=False)] * 3 - - def enable_send(): - return [gr.Button.update(interactive=True), gr.Button.update(interactive=False)] - - def enable_regenerate(): - return gr.Button.update(interactive=True) - - for vote in [vote_a, vote_b, vote_tie, vote_bad]: - vote.click(record_vote, - [user_state, vote, chatbot_a, model_a, chatbot_b, model_b], - [show_models_button], - queue=False) - vote.click(disable_voting, None, [vote_a, vote_b, vote_tie, vote_bad], queue=False) - - show_models_button.click(show_models, None, [model_a, model_b], queue=False) - clear.click(hide_models, None, [model_a, model_b], queue=False) - reload_bot_button.click(hide_models, None, [model_a, model_b], queue=False) - show_models_button.click(disable_voting, None, [vote_a, vote_b, vote_tie, vote_bad], queue=False) - show_models_button.click(disable_send, None, [send, regenerate, show_models_button], queue=False) - clear.click(enable_send, None, [send, regenerate], queue=False) - reload_bot_button.click(enable_send, None, [send, regenerate], queue=False) - - model_a.change(get_generation_args, [model_a], - [temperature_model_a, 
repetition_penalty_model_a, max_new_tokens_model_a, top_k_model_a, - top_p_model_a], queue=False) - model_b.change(get_generation_args, [model_b], - [temperature_model_b, repetition_penalty_model_b, max_new_tokens_model_b, top_k_model_b, - top_p_model_b], queue=False) - - clear.click(clear_chat, [user_state], [msg, chatbot_a, chatbot_b], queue=False) - model_a.change(clear_chat, [user_state], [msg, chatbot_a, chatbot_b], queue=False) - model_b.change(clear_chat, [user_state], [msg, chatbot_a, chatbot_b], queue=False) - - # model_a.change(enable_voting, None, [vote_a, vote_b, vote_tie, vote_bad], queue=False) - # model_b.change(enable_voting, None, [vote_a, vote_b, vote_tie, vote_bad], queue=False) - reload_bot_button.click(disable_voting, None, [vote_a, vote_b, vote_tie, vote_bad], queue=False) - reload_bot_button.click(reload_bot, [bot_id], [bot_profile, chatbot_a, chatbot_b, user_state, bot_config_text], - queue=False) - send.click(enable_voting, None, [vote_a, vote_b, vote_tie, vote_bad], queue=False) - clear.click(disable_voting, None, [vote_a, vote_b, vote_tie, vote_bad], queue=False) - regenerate.click(enable_voting, None, [vote_a, vote_b, vote_tie, vote_bad], queue=False) - msg.submit(enable_voting, None, [vote_a, vote_b, vote_tie, vote_bad], queue=False) - - send.click(respond, - [msg, chatbot_a, user_state, model_a, temperature_model_a, repetition_penalty_model_a, - max_new_tokens_model_a, top_k_model_a, top_p_model_a], [msg, chatbot_a], - queue=False) - msg.submit(respond, - [msg, chatbot_a, user_state, model_a, temperature_model_a, repetition_penalty_model_a, - max_new_tokens_model_a, top_k_model_a, top_p_model_a], [msg, chatbot_a], - queue=False) - - send.click(respond, - [msg, chatbot_b, user_state, model_b, temperature_model_b, repetition_penalty_model_b, - max_new_tokens_model_b, top_k_model_b, top_p_model_b], [msg, chatbot_b], - queue=False) - msg.submit(respond, - [msg, chatbot_b, user_state, model_b, temperature_model_b, 
repetition_penalty_model_b, - max_new_tokens_model_b, top_k_model_b, top_p_model_b], [msg, chatbot_b], - queue=False) - - send.click(enable_regenerate, None, [regenerate], queue=False) - msg.submit(enable_regenerate, None, [regenerate], queue=False) - - regenerate.click(regenerate_response, - [chatbot_a, user_state, model_a, temperature_model_a, repetition_penalty_model_a, - max_new_tokens_model_a, top_k_model_a, - top_p_model_a], [msg, chatbot_a], queue=False) - regenerate.click(regenerate_response, - [chatbot_b, user_state, model_b, temperature_model_b, repetition_penalty_model_b, - max_new_tokens_model_b, top_k_model_b, - top_p_model_b], [msg, chatbot_b], queue=False) diff --git a/spaces/Alfasign/dIFFU/style.css b/spaces/Alfasign/dIFFU/style.css deleted file mode 100644 index 3bdfc5ebc1422c5ed2ca6dce9c38c543e68299f1..0000000000000000000000000000000000000000 --- a/spaces/Alfasign/dIFFU/style.css +++ /dev/null @@ -1,59 +0,0 @@ -h1 { - text-align: center; - font-size: 10vw; /* relative to the viewport width */ -} - -h2 { - text-align: center; - font-size: 10vw; /* relative to the viewport width */ -} - -#duplicate-button { - margin: auto; - color: #fff; - background: #1565c0; - border-radius: 100vh; -} - -#component-0 { - max-width: 80%; /* relative to the parent element's width */ - margin: auto; - padding-top: 1.5rem; -} - -/* You can also use media queries to adjust your style for different screen sizes */ -@media (max-width: 600px) { - #component-0 { - max-width: 90%; - padding-top: 1rem; - } -} - -#gallery .grid-wrap{ - min-height: 25%; -} - -#title-container { - display: flex; - justify-content: center; - align-items: center; - height: 100vh; /* Adjust this value to position the title vertically */ - } - -#title { - font-size: 3em; - text-align: center; - color: #333; - font-family: 'Helvetica Neue', sans-serif; - text-transform: uppercase; - background: transparent; - } - -#title span { - background: -webkit-linear-gradient(45deg, #4EACEF, #28b485); - 
-webkit-background-clip: text; - -webkit-text-fill-color: transparent; -} - -#subtitle { - text-align: center; \ No newline at end of file diff --git a/spaces/Altinas/vits-uma-genshin-honkais/modules.py b/spaces/Altinas/vits-uma-genshin-honkais/modules.py deleted file mode 100644 index 56ea4145eddf19dd330a3a41ab0183efc1686d83..0000000000000000000000000000000000000000 --- a/spaces/Altinas/vits-uma-genshin-honkais/modules.py +++ /dev/null @@ -1,388 +0,0 @@ -import math -import numpy as np -import torch -from torch import nn -from torch.nn import functional as F - -from torch.nn import Conv1d, ConvTranspose1d, AvgPool1d, Conv2d -from torch.nn.utils import weight_norm, remove_weight_norm - -import commons -from commons import init_weights, get_padding -from transforms import piecewise_rational_quadratic_transform - - -LRELU_SLOPE = 0.1 - - -class LayerNorm(nn.Module): - def __init__(self, channels, eps=1e-5): - super().__init__() - self.channels = channels - self.eps = eps - - self.gamma = nn.Parameter(torch.ones(channels)) - self.beta = nn.Parameter(torch.zeros(channels)) - - def forward(self, x): - x = x.transpose(1, -1) - x = F.layer_norm(x, (self.channels,), self.gamma, self.beta, self.eps) - return x.transpose(1, -1) - - -class ConvReluNorm(nn.Module): - def __init__(self, in_channels, hidden_channels, out_channels, kernel_size, n_layers, p_dropout): - super().__init__() - self.in_channels = in_channels - self.hidden_channels = hidden_channels - self.out_channels = out_channels - self.kernel_size = kernel_size - self.n_layers = n_layers - self.p_dropout = p_dropout - assert n_layers > 1, "Number of layers should be larger than 0." 
- - self.conv_layers = nn.ModuleList() - self.norm_layers = nn.ModuleList() - self.conv_layers.append(nn.Conv1d(in_channels, hidden_channels, kernel_size, padding=kernel_size//2)) - self.norm_layers.append(LayerNorm(hidden_channels)) - self.relu_drop = nn.Sequential( - nn.ReLU(), - nn.Dropout(p_dropout)) - for _ in range(n_layers-1): - self.conv_layers.append(nn.Conv1d(hidden_channels, hidden_channels, kernel_size, padding=kernel_size//2)) - self.norm_layers.append(LayerNorm(hidden_channels)) - self.proj = nn.Conv1d(hidden_channels, out_channels, 1) - self.proj.weight.data.zero_() - self.proj.bias.data.zero_() - - def forward(self, x, x_mask): - x_org = x - for i in range(self.n_layers): - x = self.conv_layers[i](x * x_mask) - x = self.norm_layers[i](x) - x = self.relu_drop(x) - x = x_org + self.proj(x) - return x * x_mask - - -class DDSConv(nn.Module): - """ - Dialted and Depth-Separable Convolution - """ - def __init__(self, channels, kernel_size, n_layers, p_dropout=0.): - super().__init__() - self.channels = channels - self.kernel_size = kernel_size - self.n_layers = n_layers - self.p_dropout = p_dropout - - self.drop = nn.Dropout(p_dropout) - self.convs_sep = nn.ModuleList() - self.convs_1x1 = nn.ModuleList() - self.norms_1 = nn.ModuleList() - self.norms_2 = nn.ModuleList() - for i in range(n_layers): - dilation = kernel_size ** i - padding = (kernel_size * dilation - dilation) // 2 - self.convs_sep.append(nn.Conv1d(channels, channels, kernel_size, - groups=channels, dilation=dilation, padding=padding - )) - self.convs_1x1.append(nn.Conv1d(channels, channels, 1)) - self.norms_1.append(LayerNorm(channels)) - self.norms_2.append(LayerNorm(channels)) - - def forward(self, x, x_mask, g=None): - if g is not None: - x = x + g - for i in range(self.n_layers): - y = self.convs_sep[i](x * x_mask) - y = self.norms_1[i](y) - y = F.gelu(y) - y = self.convs_1x1[i](y) - y = self.norms_2[i](y) - y = F.gelu(y) - y = self.drop(y) - x = x + y - return x * x_mask - - -class 
WN(torch.nn.Module): - def __init__(self, hidden_channels, kernel_size, dilation_rate, n_layers, gin_channels=0, p_dropout=0): - super(WN, self).__init__() - assert(kernel_size % 2 == 1) - self.hidden_channels =hidden_channels - self.kernel_size = kernel_size, - self.dilation_rate = dilation_rate - self.n_layers = n_layers - self.gin_channels = gin_channels - self.p_dropout = p_dropout - - self.in_layers = torch.nn.ModuleList() - self.res_skip_layers = torch.nn.ModuleList() - self.drop = nn.Dropout(p_dropout) - - if gin_channels != 0: - cond_layer = torch.nn.Conv1d(gin_channels, 2*hidden_channels*n_layers, 1) - self.cond_layer = torch.nn.utils.weight_norm(cond_layer, name='weight') - - for i in range(n_layers): - dilation = dilation_rate ** i - padding = int((kernel_size * dilation - dilation) / 2) - in_layer = torch.nn.Conv1d(hidden_channels, 2*hidden_channels, kernel_size, - dilation=dilation, padding=padding) - in_layer = torch.nn.utils.weight_norm(in_layer, name='weight') - self.in_layers.append(in_layer) - - # last one is not necessary - if i < n_layers - 1: - res_skip_channels = 2 * hidden_channels - else: - res_skip_channels = hidden_channels - - res_skip_layer = torch.nn.Conv1d(hidden_channels, res_skip_channels, 1) - res_skip_layer = torch.nn.utils.weight_norm(res_skip_layer, name='weight') - self.res_skip_layers.append(res_skip_layer) - - def forward(self, x, x_mask, g=None, **kwargs): - output = torch.zeros_like(x) - n_channels_tensor = torch.IntTensor([self.hidden_channels]) - - if g is not None: - g = self.cond_layer(g) - - for i in range(self.n_layers): - x_in = self.in_layers[i](x) - if g is not None: - cond_offset = i * 2 * self.hidden_channels - g_l = g[:,cond_offset:cond_offset+2*self.hidden_channels,:] - else: - g_l = torch.zeros_like(x_in) - - acts = commons.fused_add_tanh_sigmoid_multiply( - x_in, - g_l, - n_channels_tensor) - acts = self.drop(acts) - - res_skip_acts = self.res_skip_layers[i](acts) - if i < self.n_layers - 1: - res_acts = 
res_skip_acts[:,:self.hidden_channels,:] - x = (x + res_acts) * x_mask - output = output + res_skip_acts[:,self.hidden_channels:,:] - else: - output = output + res_skip_acts - return output * x_mask - - def remove_weight_norm(self): - if self.gin_channels != 0: - torch.nn.utils.remove_weight_norm(self.cond_layer) - for l in self.in_layers: - torch.nn.utils.remove_weight_norm(l) - for l in self.res_skip_layers: - torch.nn.utils.remove_weight_norm(l) - - -class ResBlock1(torch.nn.Module): - def __init__(self, channels, kernel_size=3, dilation=(1, 3, 5)): - super(ResBlock1, self).__init__() - self.convs1 = nn.ModuleList([ - weight_norm(Conv1d(channels, channels, kernel_size, 1, dilation=dilation[0], - padding=get_padding(kernel_size, dilation[0]))), - weight_norm(Conv1d(channels, channels, kernel_size, 1, dilation=dilation[1], - padding=get_padding(kernel_size, dilation[1]))), - weight_norm(Conv1d(channels, channels, kernel_size, 1, dilation=dilation[2], - padding=get_padding(kernel_size, dilation[2]))) - ]) - self.convs1.apply(init_weights) - - self.convs2 = nn.ModuleList([ - weight_norm(Conv1d(channels, channels, kernel_size, 1, dilation=1, - padding=get_padding(kernel_size, 1))), - weight_norm(Conv1d(channels, channels, kernel_size, 1, dilation=1, - padding=get_padding(kernel_size, 1))), - weight_norm(Conv1d(channels, channels, kernel_size, 1, dilation=1, - padding=get_padding(kernel_size, 1))) - ]) - self.convs2.apply(init_weights) - - def forward(self, x, x_mask=None): - for c1, c2 in zip(self.convs1, self.convs2): - xt = F.leaky_relu(x, LRELU_SLOPE) - if x_mask is not None: - xt = xt * x_mask - xt = c1(xt) - xt = F.leaky_relu(xt, LRELU_SLOPE) - if x_mask is not None: - xt = xt * x_mask - xt = c2(xt) - x = xt + x - if x_mask is not None: - x = x * x_mask - return x - - def remove_weight_norm(self): - for l in self.convs1: - remove_weight_norm(l) - for l in self.convs2: - remove_weight_norm(l) - - -class ResBlock2(torch.nn.Module): - def __init__(self, channels, 
kernel_size=3, dilation=(1, 3)): - super(ResBlock2, self).__init__() - self.convs = nn.ModuleList([ - weight_norm(Conv1d(channels, channels, kernel_size, 1, dilation=dilation[0], - padding=get_padding(kernel_size, dilation[0]))), - weight_norm(Conv1d(channels, channels, kernel_size, 1, dilation=dilation[1], - padding=get_padding(kernel_size, dilation[1]))) - ]) - self.convs.apply(init_weights) - - def forward(self, x, x_mask=None): - for c in self.convs: - xt = F.leaky_relu(x, LRELU_SLOPE) - if x_mask is not None: - xt = xt * x_mask - xt = c(xt) - x = xt + x - if x_mask is not None: - x = x * x_mask - return x - - def remove_weight_norm(self): - for l in self.convs: - remove_weight_norm(l) - - -class Log(nn.Module): - def forward(self, x, x_mask, reverse=False, **kwargs): - if not reverse: - y = torch.log(torch.clamp_min(x, 1e-5)) * x_mask - logdet = torch.sum(-y, [1, 2]) - return y, logdet - else: - x = torch.exp(x) * x_mask - return x - - -class Flip(nn.Module): - def forward(self, x, *args, reverse=False, **kwargs): - x = torch.flip(x, [1]) - if not reverse: - logdet = torch.zeros(x.size(0)).to(dtype=x.dtype, device=x.device) - return x, logdet - else: - return x - - -class ElementwiseAffine(nn.Module): - def __init__(self, channels): - super().__init__() - self.channels = channels - self.m = nn.Parameter(torch.zeros(channels,1)) - self.logs = nn.Parameter(torch.zeros(channels,1)) - - def forward(self, x, x_mask, reverse=False, **kwargs): - if not reverse: - y = self.m + torch.exp(self.logs) * x - y = y * x_mask - logdet = torch.sum(self.logs * x_mask, [1,2]) - return y, logdet - else: - x = (x - self.m) * torch.exp(-self.logs) * x_mask - return x - - -class ResidualCouplingLayer(nn.Module): - def __init__(self, - channels, - hidden_channels, - kernel_size, - dilation_rate, - n_layers, - p_dropout=0, - gin_channels=0, - mean_only=False): - assert channels % 2 == 0, "channels should be divisible by 2" - super().__init__() - self.channels = channels - 
self.hidden_channels = hidden_channels - self.kernel_size = kernel_size - self.dilation_rate = dilation_rate - self.n_layers = n_layers - self.half_channels = channels // 2 - self.mean_only = mean_only - - self.pre = nn.Conv1d(self.half_channels, hidden_channels, 1) - self.enc = WN(hidden_channels, kernel_size, dilation_rate, n_layers, p_dropout=p_dropout, gin_channels=gin_channels) - self.post = nn.Conv1d(hidden_channels, self.half_channels * (2 - mean_only), 1) - self.post.weight.data.zero_() - self.post.bias.data.zero_() - - def forward(self, x, x_mask, g=None, reverse=False): - x0, x1 = torch.split(x, [self.half_channels]*2, 1) - h = self.pre(x0) * x_mask - h = self.enc(h, x_mask, g=g) - stats = self.post(h) * x_mask - if not self.mean_only: - m, logs = torch.split(stats, [self.half_channels]*2, 1) - else: - m = stats - logs = torch.zeros_like(m) - - if not reverse: - x1 = m + x1 * torch.exp(logs) * x_mask - x = torch.cat([x0, x1], 1) - logdet = torch.sum(logs, [1,2]) - return x, logdet - else: - x1 = (x1 - m) * torch.exp(-logs) * x_mask - x = torch.cat([x0, x1], 1) - return x - - -class ConvFlow(nn.Module): - def __init__(self, in_channels, filter_channels, kernel_size, n_layers, num_bins=10, tail_bound=5.0): - super().__init__() - self.in_channels = in_channels - self.filter_channels = filter_channels - self.kernel_size = kernel_size - self.n_layers = n_layers - self.num_bins = num_bins - self.tail_bound = tail_bound - self.half_channels = in_channels // 2 - - self.pre = nn.Conv1d(self.half_channels, filter_channels, 1) - self.convs = DDSConv(filter_channels, kernel_size, n_layers, p_dropout=0.) 
- self.proj = nn.Conv1d(filter_channels, self.half_channels * (num_bins * 3 - 1), 1) - self.proj.weight.data.zero_() - self.proj.bias.data.zero_() - - def forward(self, x, x_mask, g=None, reverse=False): - x0, x1 = torch.split(x, [self.half_channels]*2, 1) - h = self.pre(x0) - h = self.convs(h, x_mask, g=g) - h = self.proj(h) * x_mask - - b, c, t = x0.shape - h = h.reshape(b, c, -1, t).permute(0, 1, 3, 2) # [b, cx?, t] -> [b, c, t, ?] - - unnormalized_widths = h[..., :self.num_bins] / math.sqrt(self.filter_channels) - unnormalized_heights = h[..., self.num_bins:2*self.num_bins] / math.sqrt(self.filter_channels) - unnormalized_derivatives = h[..., 2 * self.num_bins:] - - x1, logabsdet = piecewise_rational_quadratic_transform(x1, - unnormalized_widths, - unnormalized_heights, - unnormalized_derivatives, - inverse=reverse, - tails='linear', - tail_bound=self.tail_bound - ) - - x = torch.cat([x0, x1], 1) * x_mask - logdet = torch.sum(logabsdet * x_mask, [1,2]) - if not reverse: - return x, logdet - else: - return x diff --git a/spaces/Aman30577/imageTool1/app.py b/spaces/Aman30577/imageTool1/app.py deleted file mode 100644 index 1fdb09ec4d86c46a38b04ad4e3b79f3cf6f7e6ca..0000000000000000000000000000000000000000 --- a/spaces/Aman30577/imageTool1/app.py +++ /dev/null @@ -1,144 +0,0 @@ -import gradio as gr -# import os -# import sys -# from pathlib import Path -import time - -models =[ - "digiplay/polla_mix_2.3D", - "kayteekay/jordan-generator-v1", - "Meina/Unreal_V4.1", - "Meina/MeinaMix_V11", - "Erlalex/dominikof-v1-5-1", - "hearmeneigh/sd21-e621-rising-v1", - "Anna11/heera", - "kanu03/my-cat", - "Kernel/sd-nsfw", - "lodestones/P.A.W.F.E.C.T-Alpha", -] - - -model_functions = {} -model_idx = 1 -for model_path in models: - try: - model_functions[model_idx] = gr.Interface.load(f"models/{model_path}", live=False, preprocess=True, postprocess=False) - except Exception as error: - def the_fn(txt): - return None - model_functions[model_idx] = gr.Interface(fn=the_fn, 
inputs=["text"], outputs=["image"]) - model_idx+=1 - - -def send_it_idx(idx): - def send_it_fn(prompt): - output = (model_functions.get(str(idx)) or model_functions.get(str(1)))(prompt) - return output - return send_it_fn - -def get_prompts(prompt_text): - return prompt_text - -def clear_it(val): - if int(val) != 0: - val = 0 - else: - val = 0 - pass - return val - -def all_task_end(cnt,t_stamp): - to = t_stamp + 60 - et = time.time() - if et > to and t_stamp != 0: - d = gr.update(value=0) - tog = gr.update(value=1) - #print(f'to: {to} et: {et}') - else: - if cnt != 0: - d = gr.update(value=et) - else: - d = gr.update(value=0) - tog = gr.update(value=0) - #print (f'passing: to: {to} et: {et}') - pass - return d, tog - -def all_task_start(): - print("\n\n\n\n\n\n\n") - t = time.gmtime() - t_stamp = time.time() - current_time = time.strftime("%H:%M:%S", t) - return gr.update(value=t_stamp), gr.update(value=t_stamp), gr.update(value=0) - -def clear_fn(): - nn = len(models) - return tuple([None, *[None for _ in range(nn)]]) - - - -with gr.Blocks(title="SD Models") as my_interface: - with gr.Column(scale=12): - # with gr.Row(): - # gr.Markdown("""- Primary prompt: 你想画的内容(英文单词,如 a cat, 加英文逗号效果更好;点 Improve 按钮进行完善)\n- Real prompt: 完善后的提示词,出现后再点右边的 Run 按钮开始运行""") - with gr.Row(): - with gr.Row(scale=6): - primary_prompt=gr.Textbox(label="Prompt", value="") - # real_prompt=gr.Textbox(label="Real prompt") - with gr.Row(scale=6): - # improve_prompts_btn=gr.Button("Improve") - with gr.Row(): - run=gr.Button("Run",variant="primary") - clear_btn=gr.Button("Clear") - with gr.Row(): - sd_outputs = {} - model_idx = 1 - for model_path in models: - with gr.Column(scale=3, min_width=320): - with gr.Box(): - sd_outputs[model_idx] = gr.Image(label=model_path) - pass - model_idx += 1 - pass - pass - - with gr.Row(visible=False): - start_box=gr.Number(interactive=False) - end_box=gr.Number(interactive=False) - tog_box=gr.Textbox(value=0,interactive=False) - - start_box.change( - 
all_task_end, - [start_box, end_box], - [start_box, tog_box], - every=1, - show_progress=False) - - primary_prompt.submit(all_task_start, None, [start_box, end_box, tog_box]) - run.click(all_task_start, None, [start_box, end_box, tog_box]) - runs_dict = {} - model_idx = 1 - for model_path in models: - runs_dict[model_idx] = run.click(model_functions[model_idx], inputs=[primary_prompt], outputs=[sd_outputs[model_idx]]) - model_idx += 1 - pass - pass - - # improve_prompts_btn_clicked=improve_prompts_btn.click( - # get_prompts, - # inputs=[primary_prompt], - # outputs=[primary_prompt], - # cancels=list(runs_dict.values())) - clear_btn.click( - clear_fn, - None, - [primary_prompt, *list(sd_outputs.values())], - cancels=[*list(runs_dict.values())]) - tog_box.change( - clear_it, - tog_box, - tog_box, - cancels=[*list(runs_dict.values())]) - -my_interface.queue(concurrency_count=600, status_update_rate=1) -my_interface.launch(inline=True, show_api=False) \ No newline at end of file diff --git "a/spaces/Ameaou/academic-chatgpt3.1/crazy_functions/\350\257\242\351\227\256\345\244\232\344\270\252\345\244\247\350\257\255\350\250\200\346\250\241\345\236\213.py" "b/spaces/Ameaou/academic-chatgpt3.1/crazy_functions/\350\257\242\351\227\256\345\244\232\344\270\252\345\244\247\350\257\255\350\250\200\346\250\241\345\236\213.py" deleted file mode 100644 index 1d37b8b6520dc5649cca1797c9084ef6b41c2724..0000000000000000000000000000000000000000 --- "a/spaces/Ameaou/academic-chatgpt3.1/crazy_functions/\350\257\242\351\227\256\345\244\232\344\270\252\345\244\247\350\257\255\350\250\200\346\250\241\345\236\213.py" +++ /dev/null @@ -1,30 +0,0 @@ -from toolbox import CatchException, update_ui -from .crazy_utils import request_gpt_model_in_new_thread_with_ui_alive -import datetime -@CatchException -def 同时问询(txt, llm_kwargs, plugin_kwargs, chatbot, history, system_prompt, web_port): - """ - txt 输入栏用户输入的文本,例如需要翻译的一段话,再例如一个包含了待处理文件的路径 - llm_kwargs gpt模型参数,如温度和top_p等,一般原样传递下去就行 - plugin_kwargs 
插件模型的参数,如温度和top_p等,一般原样传递下去就行 - chatbot 聊天显示框的句柄,用于显示给用户 - history 聊天历史,前情提要 - system_prompt 给gpt的静默提醒 - web_port 当前软件运行的端口号 - """ - history = [] # 清空历史,以免输入溢出 - chatbot.append((txt, "正在同时咨询gpt-3.5(openai)和gpt-4(api2d)……")) - yield from update_ui(chatbot=chatbot, history=history) # 刷新界面 # 由于请求gpt需要一段时间,我们先及时地做一次界面更新 - - # llm_kwargs['llm_model'] = 'chatglm&gpt-3.5-turbo&api2d-gpt-3.5-turbo' # 支持任意数量的llm接口,用&符号分隔 - llm_kwargs['llm_model'] = 'gpt-3.5-turbo&api2d-gpt-4' # 支持任意数量的llm接口,用&符号分隔 - gpt_say = yield from request_gpt_model_in_new_thread_with_ui_alive( - inputs=txt, inputs_show_user=txt, - llm_kwargs=llm_kwargs, chatbot=chatbot, history=history, - sys_prompt=system_prompt, - retry_times_at_unknown_error=0 - ) - - history.append(txt) - history.append(gpt_say) - yield from update_ui(chatbot=chatbot, history=history) # 刷新界面 # 界面更新 \ No newline at end of file diff --git a/spaces/Androidonnxfork/CivitAi-to-Diffusers/diffusers/docs/source/en/api/pipelines/text_to_video.md b/spaces/Androidonnxfork/CivitAi-to-Diffusers/diffusers/docs/source/en/api/pipelines/text_to_video.md deleted file mode 100644 index 6d28fb0e29d0086f772d481bd2f9445c3c9e605b..0000000000000000000000000000000000000000 --- a/spaces/Androidonnxfork/CivitAi-to-Diffusers/diffusers/docs/source/en/api/pipelines/text_to_video.md +++ /dev/null @@ -1,180 +0,0 @@ - - -

3utools Download 2019: A Complete Guide

-If you are looking for a free and easy way to manage your iOS device's data on your Windows PC, you may want to check out 3utools. 3utools is a comprehensive program that lets you access and control many aspects of your iPhone, iPad, or iPod touch. You can back up and restore your data, download apps, ringtones, and wallpapers, flash and jailbreak your device, and use many other features. This article shows how to download and install 3utools on your PC, and how to use it to manage your iOS device.

-What is 3utools and why do you need it?

-3utools is an all-in-one tool that lets iOS users view and manage their device information, files, media, apps, and more. It also supports flashing and jailbreaking, two processes that modify the device's operating system: flashing installs a different firmware version, and jailbreaking removes the software restrictions Apple places on the device. With 3utools, you can easily perform tasks that would otherwise require several programs or complex procedures.

3utools features

-Here are some of the main features that make 3utools a powerful and versatile tool for iOS users:

-Data management

-With 3utools, you can easily back up and restore the data on your iOS device. You can back up or restore all data types or only selected ones, such as contacts, messages, photos, music, videos, and books. You can also view and edit the backup files on your PC, and manage the data files on your device by deleting, copying, moving, or renaming them.

-Apps, ringtones, and wallpapers

-Flashing and jailbreaking

-3utools can flash and jailbreak your iOS device with a single click. Flashing is the process of installing a different firmware version on your device, which can improve its performance or fix certain problems. Jailbreaking is the process of removing the restrictions Apple imposes on your device, which lets you install unauthorized apps, tweaks, and themes. 3utools automatically matches the available firmware to your device model and iOS version. It supports flashing in normal mode, DFU mode, and recovery mode, as well as several jailbreak tools and methods.

-Other useful tools

-In addition to the features above, 3utools offers many other useful tools for iOS users, such as:

- Data migration: Transfer data from one iOS device to another.

- Video converter: Convert video files to formats compatible with your device.

- Audio converter: Convert audio files to formats compatible with your device.

- Audio editor: Edit audio files by trimming, cutting, merging, and so on.

- Image converter: Convert image files to formats compatible with your device.

- Image editor: Edit image files by cropping, rotating, resizing, and so on.

- Screen recorder: Record your device's screen and save the recording as a video file.

- Screenshot: Take screenshots of your device's screen and save them as image files.

- Real-time screen: View your device's screen on your PC in real time.

- Reboot: Restart your device in normal mode, recovery mode, or DFU mode.

- Shut down: Power off your device from your PC.

- Clean junk: Clear the cache and junk files on your device to free up space and improve performance.

- Check battery: Check your device's battery status and health.

- Check iCloud status: Check your device's iCloud Activation Lock status.

-How do you download and install 3utools on a Windows PC?

-Downloading and installing 3utools on your Windows PC is quick and easy. Just follow these simple steps:

-Step 1: Visit the official 3utools website

-The first thing to do is visit the official 3utools website at http://www.3u.com/. This is the only safe and reliable source for downloading 3utools. Do not download 3utools from any other website, because copies from other sources may contain viruses or malware.

-Step 2: Click the download button and save the file

-On the website's home page, you will see a large green download button labeled "Download 3uTools". Click it and a pop-up window will appear. Choose a location on your PC where you want to save the file and click "Save". The file will be named something like "3uTools_v2.53_Setup.exe" and will be about 100 MB in size.

-Step 3: Run the setup file and follow the instructions

-Step 4: Connect your iOS device to your PC via a USB cable or Wi-Fi

-The final step is to connect your iOS device to your PC with a USB cable or over Wi-Fi. If you use a USB cable, make sure it is original and in good condition. Plug one end of the cable into your device and the other end into your PC. If you use Wi-Fi, make sure your device and your PC are connected to the same Wi-Fi network. Then open 3utools on your PC and wait for it to detect your device. You may need to unlock your device and tap "Trust" on the pop-up message that asks "Trust This Computer?". Once your device is connected, you will see its basic information on the main 3utools interface, such as the device name, model, iOS version, and serial number.

-How do you use 3utools to manage your iOS device?

-Now that you have downloaded and installed 3utools on your PC and connected your iOS device to it, you can start using it to manage your device. Here are some of the common tasks you can do with 3utools:

-Back up and restore your data

-To back up and restore the data on your iOS device, click the "Backup/Restore" tab in the top menu of 3utools. You will see two buttons: "Back Up Now" and "Restore Data". To back up your data, click "Back Up Now" and choose a backup mode: full backup or custom backup. A full backup saves all of your data types, while a custom backup lets you select which data types to back up. Then click "Start Backup" and wait for the process to finish. You can view the backup files on your PC by clicking "View Backup Data". To restore your data, click "Restore Data" and select the backup file you want to restore from the list. Then click "Start Restore" and wait for the process to finish. You can also restore your data from iTunes or iCloud backups by clicking "Import Backup Data".

-Download and install apps, ringtones, and wallpapers

-Flash and jailbreak your device

-To flash and jailbreak your iOS device, click the "Flash & JB" tab in the top menu of 3utools. You will see two sub-tabs: "Easy Flash" and "Pro Flash". To flash your device, click "Easy Flash" and choose the firmware version you want to flash from the list. You can also check the box labeled "Retain user's data while flashing" if you want to keep your data after flashing. Then click "Flash" and follow the on-screen instructions. To jailbreak your device, click "Pro Flash" and choose the jailbreak tool you want to use from the list. You can also check the box labeled "Activate device after jailbreak" if you want the device to be activated after jailbreaking. Then click "Jailbreak" and follow the on-screen instructions.

-Use other useful features

-To use other useful features of 3utools, click the "Toolbox" tab in the top menu. You will see a list of icons representing the different tools you can use. For example, you can click "Data Migration" to transfer data from one iOS device to another, or "Screen Recorder" to record your device's screen and save it as a video file. You can hover the mouse over each icon to see the tool's name and description. To use a tool, simply click its icon and follow the on-screen instructions.

-Conclusion

-3utools is a powerful and versatile program that can help you manage your iOS device's data on your Windows PC. You can back up and restore your data; download and install apps, ringtones, and wallpapers; flash and jailbreak your device; and use many other useful features. 3utools is free, easy to download and install, and compatible with all iOS devices and versions. If you are looking for an all-in-one iOS device management solution, you should give 3utools a try.

-Frequently asked questions

-Here are some of the most frequently asked questions about 3utools:

-Yes, 3utools is safe to use as long as you download it from the official website at http://www.3u.com/. Do not download 3utools from any other website, because copies from other sources may contain viruses or malware. Also, make sure you have an antivirus program on your PC and scan the file before running it.

-No, 3utools only works on Windows PCs. No Mac version of 3utools is available at this time.

-No, 3utools does not require iTunes to work. However, you may need to install the iTunes drivers on your PC if you want to connect your iOS device with a USB cable. You can download the iTunes drivers from https://support.apple.com/downloads/itunes.

-No, 3utools will not erase your data unless you choose to. For example, if you flash your device with a different firmware version, you may lose your data if you have not backed it up first. Also, if you jailbreak your device, you may void its warranty and lose some of its features. For these reasons, you should always back up your data before using 3utools.

-If you have any questions or problems with 3utools, you can contact 3utools support by emailing support@3u.com. You can also visit the official 3utools forum at http://forum.3u.com/ and post your questions there.

\ No newline at end of file
diff --git a/spaces/Benson/text-generation/Examples/Descargar Apk Juego Sigma.md b/spaces/Benson/text-generation/Examples/Descargar Apk Juego Sigma.md
deleted file mode 100644
index afbec1847afcd3eb3dbf1fbf0a1f4cbf34c03c85..0000000000000000000000000000000000000000
--- a/spaces/Benson/text-generation/Examples/Descargar Apk Juego Sigma.md
+++ /dev/null
@@ -1,92 +0,0 @@

-Download APK Game Sigma: A Stylized Survival Shooter for Mobile Phones

-If you are looking for a new and exciting survival shooter to play on your mobile phone, you might want to check out APK Game Sigma. It is a stylized survival shooter that offers two different modes: Classic Battle Royale and 4v4 Fight Out. In this article, we will explain what APK Game Sigma is, what features it has, and how to download and install it on your device.

-What is APK Game Sigma?

-APK Game Sigma is a game developed by Studio Arm Private Limited, a company based in India. It is a survival shooter available on Android devices. The game was released in November 2022 and has been downloaded more than 500,000 times since then.

-The game is inspired by the popular battle royale genre, in which players fight each other on an ever-shrinking map until only one remains. However, APK Game Sigma also adds some unique features and elements that set it apart from similar games.

-Features of APK Game Sigma

-Here are some of the features that APK Game Sigma offers:

-- Classic Battle Royale mode

-In this mode, you can join 50 other players in a quick, lightweight match. You choose your starting point by parachute and explore the vast map. You need to find weapons, items, and vehicles to survive and fight. You also need to stay inside the safe zone for as long as possible, because the map shrinks over time. The last player or team standing wins the match.

-- 4v4 Fight Out mode

-In this mode, you team up with three other players and compete against another team in a tense, strategic match. You need to allocate resources, buy weapons, and outlast your enemies. You can choose from different maps, each with its own layout and challenges. A match lasts seven minutes, and the team with the most kills wins.

-- Stylized graphics

-- Easy-to-use controls

-The game also has easy-to-use controls that promise an unforgettable survival experience on mobile. You can customize the controls to suit your preferences and comfort. You can also use voice chat to communicate with your teammates and coordinate your strategies.

-How do you download and install APK Game Sigma?

-If you are interested in playing APK Game Sigma, you have several options for downloading and installing it on your device. Here are some of them:

-Download from APKCombo

-APKCombo is a website that provides free download links for various Android games and apps. You can download APK Game Sigma from APKCombo by following these steps:

-- Steps to download

- Go to APKCombo.com and search for "Sigma" in the search bar.

- Select the game from the results and click the "Download" button.

- Choose the version that is compatible with your device and click the "Download" button again.

- Wait for the download to finish and locate the APK file in your device's storage.

- Tap the APK file and allow installation from unknown sources if prompted.

- Follow the on-screen instructions and wait for the installation to complete.

- Launch the game and enjoy playing.

-- Advantages of APKCombo

- It is fast and easy to use.

- It offers multiple versions of the game, including both the latest and older ones.

- It is safe and secure, since it scans APK files for viruses and malware.

-Download from CCM

-CCM is another website that provides free download links for various Android games and apps. You can download APK Game Sigma from CCM by following these steps:

-- Steps to download

-- Advantages of CCM

- It is reliable and trustworthy, having provided download links for more than 20 years.

- It offers a variety of games and apps, both popular and niche.

- It is easy to use and has a simple interface.

-Download and install on PC using the BlueStacks emulator

-If you want to play APK Game Sigma on your PC, you can use an emulator such as BlueStacks. BlueStacks is software that lets you run Android games and apps on your PC. You can download and install APK Game Sigma on your PC using BlueStacks by following these steps:

-- Steps to download and install the BlueStacks emulator

-- Steps to install APK Game Sigma on PC using the BlueStacks emulator

- In BlueStacks, go to the "My Games" tab and click the "Install apk" button in the lower-right corner.

- Browse your PC's storage and select the APK Game Sigma APK file you downloaded from APKCombo or CCM.

- Wait for BlueStacks to install the game, then open it from the "My Games" tab.

- Enjoy playing APK Game Sigma on your PC with a larger screen and better controls.

-Conclusion

-Frequently asked questions (FAQs)

-Here are some of the most common questions people ask about APK Game Sigma:

- Is APK Game Sigma free?

- Is APK Game Sigma online or offline?

- Is APK Game Sigma safe?

- What are the minimum requirements to play APK Game Sigma?

- How can I contact the developer of APK Game Sigma?

- How can I update APK Game Sigma?