-

-Black Clover Mobile 2022 APK: Everything You Need to Know

-If you are a fan of anime, manga, or RPGs, you might have heard of Black Clover Mobile, a new mobile game based on the popular series by Yuki Tabata. The game is set to launch globally in 2022, but you can already download its APK file and try it on your Android device. In this article, we give a brief overview of the game and its features, show you how to download and install it, share some tips and tricks to help you get started, and finish with a conclusion and some FAQs.


-What is Black Clover Mobile?

-Black Clover Mobile is a 3D open-world action RPG developed by VIC Game Studios and published by Bandai Namco Entertainment. It is based on the anime and manga series Black Clover, which follows Asta, a boy who dreams of becoming the Wizard King in a world where magic is everything. In the game, you create your own custom character and join one of the nine Magic Knight squads, each with its own theme and style. You can also collect and customize over 50 characters from the original series, each with their own skills, abilities, and voice actor. You can switch between your custom character and your collected characters at any time to build your dream team.

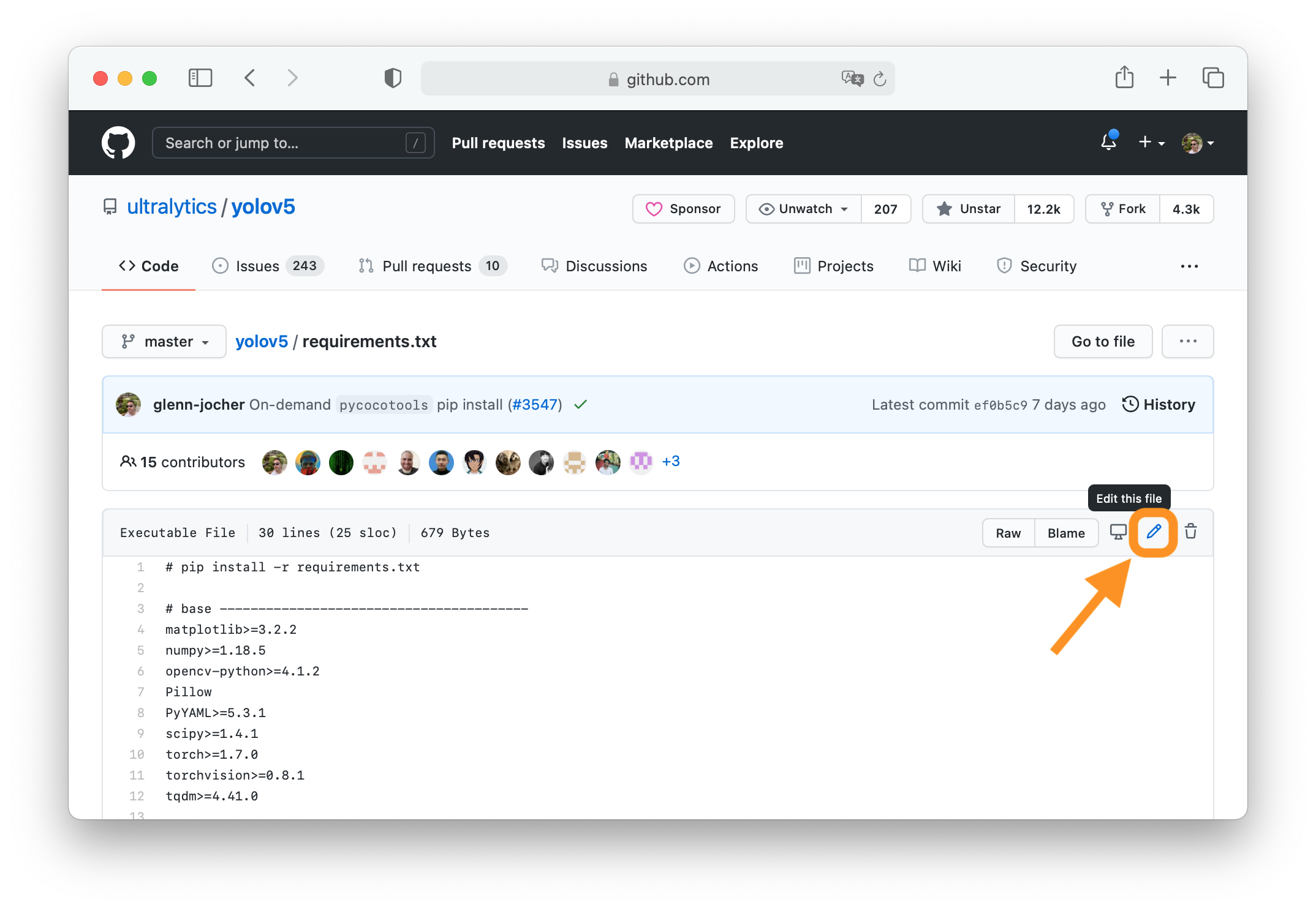

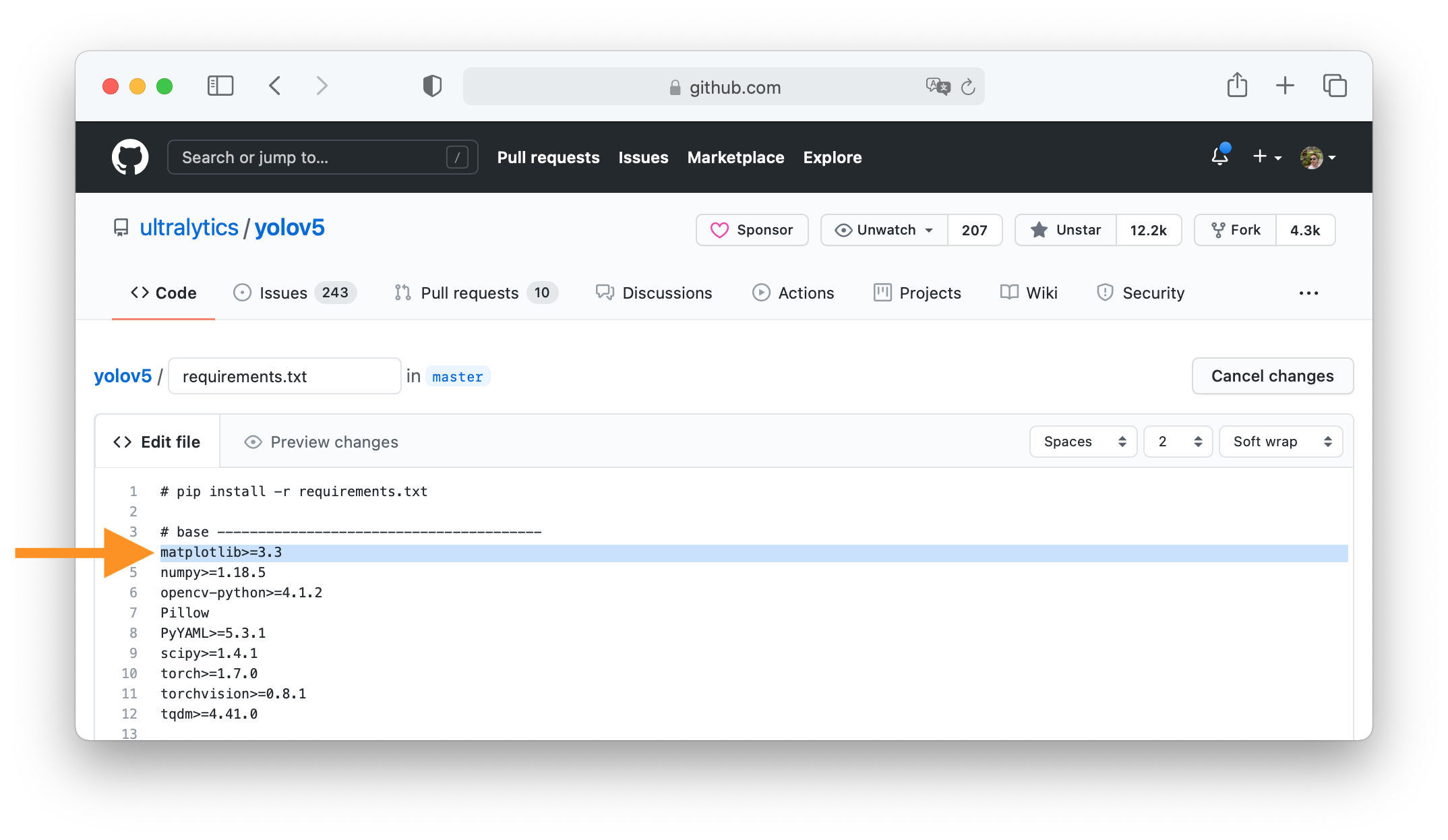

-How to download Black Clover Mobile APK?

-If you want to play Black Clover Mobile before its official global release, you can download its APK file from a trusted source and install it on your Android device. Here are the steps you need to follow:

-

-- Go to [this link](^1^) and download the Black Clover Mobile APK file. Make sure you have enough storage space on your device.

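-- Go to your device settings and allow the installation of apps from unknown sources so that the APK file can be installed. On Android 8.0 and later, this is a per-app permission ("Install unknown apps") that you grant to the browser or file manager you use to open the APK.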

-- Locate the downloaded APK file on your device and tap on it to start the installation process. Follow the instructions on the screen and wait for the installation to finish.

-- Launch the game and enjoy!

-

-Note: You may need a VPN app to access the game servers if you are not in the supported regions. You can also use an emulator like BlueStacks to play the game on your PC.

-What are the system requirements for Black Clover Mobile?

-Black Clover Mobile is a high-quality game that requires a decent device to run smoothly. Here are the minimum and recommended system requirements for the game:


-

-| Minimum | Recommended |
-|---|---|
-| Android 6.0 or higher | Android 8.0 or higher |
-| 2 GB of RAM | 4 GB of RAM or more |
-| 2 GB of free storage space | 4 GB of free storage space or more |
-| Quad-core processor | Octa-core processor or better |
-| Adreno 506 GPU or equivalent | Adreno 630 GPU or better |

-

-If your device meets these requirements, you should be able to play the game without any major problems. However, you may still experience some lag or crashes depending on your network connection and device performance.
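As a rough self-check against the requirements table, the numeric rows can be compared in a few lines of Python. This is a minimal sketch under stated assumptions. The `DeviceSpec` class and the `meets` and `classify` helpers are hypothetical names. Quad-core and octa-core are treated as 4 and 8 cores. The GPU row is skipped because GPU models cannot be ranked as plain numbers.

```python
from dataclasses import dataclass


@dataclass
class DeviceSpec:
    android_version: float  # major.minor, e.g. 8.0 (assumed representation)
    ram_gb: float
    free_storage_gb: float
    cpu_cores: int


# Thresholds copied from the requirements table; the GPU row is omitted
# because GPU models cannot be compared as simple numbers.
MINIMUM = DeviceSpec(android_version=6.0, ram_gb=2, free_storage_gb=2, cpu_cores=4)
RECOMMENDED = DeviceSpec(android_version=8.0, ram_gb=4, free_storage_gb=4, cpu_cores=8)


def meets(device: DeviceSpec, target: DeviceSpec) -> bool:
    """Return True if every numeric field of device is at least the target value."""
    return (
        device.android_version >= target.android_version
        and device.ram_gb >= target.ram_gb
        and device.free_storage_gb >= target.free_storage_gb
        and device.cpu_cores >= target.cpu_cores
    )


def classify(device: DeviceSpec) -> str:
    """Label a device as meeting the recommended spec, the minimum spec, or neither."""
    if meets(device, RECOMMENDED):
        return "recommended"
    if meets(device, MINIMUM):
        return "minimum"
    return "below minimum"


if __name__ == "__main__":
    # Hypothetical phone: Android 7.0 and 3 GB RAM fall short of the recommended spec.
    phone = DeviceSpec(android_version=7.0, ram_gb=3, free_storage_gb=5, cpu_cores=8)
    print(classify(phone))  # prints: minimum
```

Treat the result only as a rough guide, since network quality and real-world device performance still affect how smoothly the game runs.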

-How to play Black Clover Mobile?

-Black Clover Mobile is a game that offers a lot of content and features for players to enjoy. Here is a summary of the gameplay mechanics and modes:

-

-- The game follows the main story of the anime and manga series, with some original scenarios and events added. You can experience the story through quests, cutscenes, and dialogues with various characters.

-- The game features an open-world map that you can explore freely, with different regions, landmarks, enemies, and secrets to discover. You can also interact with other players in real-time, chat with them, trade with them, or fight them in PvP battles.

-- The game has a gacha system that allows you to summon new characters using magic stones, which are the premium currency of the game. You can also earn magic stones by completing missions, achievements, events, and more.

-- The game has a character customization system that lets you change the appearance, equipment, skills, and stats of your characters. You can also upgrade your characters by leveling them up, awakening them, enhancing them, and more.

-- The game has a combat system that is based on real-time action and strategy. You can control your character using virtual buttons or gestures, and use various skills and abilities depending on your character class and element. You can also switch between your custom character and your collected characters during battle.

-- The game has various modes that offer different challenges and rewards. Some of these modes are story mode, adventure mode, boss mode, guild mode, arena mode, tower mode, raid mode, and more.

-Why should you play Black Clover Mobile?

-Now that you know what Black Clover Mobile is and how to play it, you might be wondering whether it is worth your time. Here are some reasons to give it a try:

-Enjoy an immersive open-world adventure

-One of the main attractions of Black Clover Mobile is its open-world map, which is based on the anime and manga series. You can explore regions such as the Clover Kingdom, the Diamond Kingdom, and the Spade Kingdom, and visit landmarks such as the Royal Capital, the Black Bulls' base, and the Dungeon. High-quality graphics, sound effects, and voice acting make you feel like part of the story. Along the way, you encounter enemies such as bandits, monsters, and rival Magic Knights in dynamic battles, and you can uncover hidden secrets, treasures, and Easter eggs.

-Collect and customize your favorite characters

-Another reason to play Black Clover Mobile is its gacha system, which lets you collect and customize over 50 characters from the original series. You summon characters with magic stones, the game's premium currency, which you can also earn by completing missions, achievements, and events. Characters come in different rarities (from common to legendary), classes (such as fighter, shooter, healer, and support), and elements (such as fire, water, wind, earth, light, and dark). Each has their own skills, abilities, and voice actor, which makes them unique and fun to use. You can change their appearance, equipment, skills, and stats, and upgrade them by leveling them up, awakening them, and enhancing them.

-Challenge yourself with epic boss battles

-A third reason is the combat system, which combines real-time action with strategy. You control your character with virtual buttons or gestures, use skills and abilities that depend on your character's class and element, and switch between your custom character and your collected characters mid-battle. Modes such as story, adventure, boss, guild, arena, tower, and raid each offer different challenges and rewards, and they pit you against enemies and bosses that test your skills and tactics. Some bosses come from the original series, such as Vetto, Fana, Licht, and Zagred. A difficulty system scales enemy levels to match yours, and a reward system grants resources and items for completing battles.

-What are some tips and tricks for Black Clover Mobile?

-Black Clover Mobile can be enjoyed by beginners and veterans alike. However, if you want an edge over your enemies and want to make the most of your playtime, the following tips and tricks will help you improve your skills and strategies:

-Follow the main story quests

-One of the best ways to progress through the game and unlock new features is to follow the main story quests. These quests guide you through the plot of the anime and manga series, with some original scenarios and events added. Along the way, you meet various characters, learn more about the world, and earn rewards. The main story quests also help you level up your characters, unlock new regions, and access new modes. You can find them in the top-left corner of the screen; tap a quest to start it.

-Join a guild and cooperate with other players

-Another way to enhance your gameplay experience is to join a guild and cooperate with other players. A guild is a group of players who share a common interest and goal in the game. You can join a guild or create your own guild once you reach level 10. By joining a guild, you can chat with other members, trade items, request help, and participate in guild events. Guild events are special missions that require teamwork and coordination among guild members. They offer various rewards, such as magic stones, gold, equipment, and more. You can also challenge other guilds in guild wars, which are competitive battles that rank guilds based on their performance.

-Upgrade your equipment and enhance your stats

-A third way to improve your performance is to upgrade your equipment and enhance your stats. Equipment consists of items that you equip on your characters to boost attributes such as attack, defense, and speed. You can obtain equipment from quests, the gacha, shops, and events, and upgrade it with materials and gold to raise its level and quality. You can also use magic crystals to increase your characters' base stats. Like equipment, magic crystals come from quests, the gacha, shops, and events.

-Complete daily missions and achievements

-A fourth way to earn more resources and rewards is to complete daily missions and achievements. Daily missions are tasks that you can complete every day, such as logging in, playing certain modes, summoning characters, and more. They offer various rewards, such as magic stones, gold, stamina, and more. Achievements are goals that you can achieve by playing the game, such as reaching certain levels, collecting certain characters, completing certain quests, and more. They offer various rewards, such as magic stones, gold, equipment, and more.

-Conclusion

-Black Clover Mobile offers a lot of fun and excitement for fans of anime, manga, and RPGs. You can create your own custom character, join one of the nine Magic Knight squads, and collect and customize over 50 characters from the original series, each with their own skills, abilities, and voice actor. You can explore an immersive open world with high-quality graphics, sound effects, and voice acting. You can take on epic boss battles that blend real-time action and strategy, and you can join a guild to cooperate with other players in various modes and events. Along the way, upgrading your equipment, enhancing your stats, and completing daily missions and achievements keep your characters growing and the rewards coming.

-If you want to play Black Clover Mobile before its official global release in 2022, you can download its APK file from [this link] and install it on your Android device. If you are outside the supported regions, you may need a VPN app to reach the game servers. You can also use an emulator to play on PC.

-We hope this article has given you everything you need to know about Black Clover Mobile. If you have any questions or feedback about the game or this article, feel free to leave a comment below. We would love to hear from you!

-FAQs

-Here are some frequently asked questions and answers about Black Clover Mobile:

-

-- Is Black Clover Mobile free to play?

-Yes, Black Clover Mobile is free to play with optional in-app purchases.

-- What are the supported regions for Black Clover Mobile?

-Black Clover Mobile is currently available in Japan, Korea, Taiwan, Hong Kong, and Macau. It will be released globally in 2022.

-- Can I play Black Clover Mobile on PC?

-Yes, you can play Black Clover Mobile on PC using an emulator like BlueStacks. Download the APK file from [this link] and install it in the emulator. You will also need a VPN app to access the game servers if you are not in a supported region.

-- How can I get more magic stones?

-Magic stones are the premium currency of Black Clover Mobile. You can earn them by completing missions, achievements, and events, or buy them with real money through in-app purchases.

-- Who are the best characters in Black Clover Mobile?

-The best characters depend on your personal preference and playstyle. However, some of the most popular and powerful are Asta, Yuno, Noelle, Yami, Julius, and Mereoleona.


-

-

\ No newline at end of file

diff --git a/spaces/congsaPfin/Manga-OCR/logs/Bubble Shooter Classic Geniee die Grafik und die Musik in diesem Knobelspiel.md b/spaces/congsaPfin/Manga-OCR/logs/Bubble Shooter Classic Geniee die Grafik und die Musik in diesem Knobelspiel.md

deleted file mode 100644

index 0e07ce8ab0c11e341887ff265416e5bf548a869b..0000000000000000000000000000000000000000

--- a/spaces/congsaPfin/Manga-OCR/logs/Bubble Shooter Classic Geniee die Grafik und die Musik in diesem Knobelspiel.md

+++ /dev/null

@@ -1,114 +0,0 @@

-

-Bubble Shooter Free Full-Version Download in German: The Best Games for PC and Smartphone

-Bubble Shooter is one of the most popular and addictive games of all time. There are countless variants and versions of this classic that you can download and play for free. Whether on a PC or a smartphone, Bubble Shooter offers fun and excitement for young and old. In this article, we introduce some of the best Bubble Shooter games you can download for free. We also explain what Bubble Shooter is, how it works, and why it is so good for your brain.


-What is Bubble Shooter?

-Bubble Shooter is a simple but clever puzzle game in which you pop colorful bubbles on the screen. You fire bubbles from a cannon at the bottom of the screen and try to bring together at least three bubbles of the same color. The more bubbles you pop at once, the more points you earn. The game ends when the bubbles reach the bottom of the screen or when you have cleared all the bubbles.

-How the game works

-The rules of Bubble Shooter are easy to understand and learn. All you need is a mouse or a finger to aim the cannon and fire the bubbles. Move the mouse or your finger to set the cannon's direction, then click or tap to shoot. Try to place each bubble so that it forms a group of at least three bubbles of the same color. That group then pops and disappears from the screen. If you hit several groups at once, you earn bonus points. Some versions also have special bubbles with extra effects, such as bombs that blast several bubbles at once or rainbow bubbles that can take on any color.
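The matching rule (pop a group of at least three same-colored bubbles) is essentially a flood fill from the cell where the new bubble lands. The sketch below makes several simplifying assumptions. It uses a square grid with four neighbors per cell, whereas most Bubble Shooter versions use an offset hexagonal grid with six neighbors. The grid representation and the `find_cluster` and `pop_if_match` names are illustrative. Special bubbles and bonus scoring are left out.

```python
from collections import deque
from typing import List, Optional, Set, Tuple

# Simplified square grid (assumption): each cell holds a color string or None if empty.
Grid = List[List[Optional[str]]]


def find_cluster(grid: Grid, row: int, col: int) -> Set[Tuple[int, int]]:
    """Breadth-first search for all cells connected to (row, col) with the same color."""
    color = grid[row][col]
    if color is None:
        return set()
    seen = {(row, col)}
    queue = deque([(row, col)])
    while queue:
        r, c = queue.popleft()
        # Four neighbors on a square grid; a hex grid would use six offsets instead.
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nr, nc = r + dr, c + dc
            if (
                0 <= nr < len(grid)
                and 0 <= nc < len(grid[nr])
                and (nr, nc) not in seen
                and grid[nr][nc] == color
            ):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return seen


def pop_if_match(grid: Grid, row: int, col: int, min_size: int = 3) -> int:
    """Clear the cluster at (row, col) if it has at least min_size bubbles; return how many popped."""
    cluster = find_cluster(grid, row, col)
    if len(cluster) < min_size:
        return 0
    for r, c in cluster:
        grid[r][c] = None
    return len(cluster)


if __name__ == "__main__":
    board = [
        ["R", "R", "B"],
        ["G", "R", "B"],
        [None, None, None],
    ]
    board[2][1] = "R"  # the newly shot red bubble lands below the red group
    print(pop_if_match(board, 2, 1))  # prints 4
    print(board)
```

Adapting this sketch to a hexagonal grid only changes the neighbor offsets, which then depend on whether the row is even or odd.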

-History

-Bubble Shooter has a long history. It began in 1994 as Puzzle Bobble, an arcade game from the company Taito. The game was a big success and was soon ported to platforms such as PC, PlayStation, Game Boy, and others. In 2002, a web version appeared under the name Bubble Shooter. It quickly went viral and won over millions of players around the world. Since then, many imitations and variations have appeared that keep the same basic principle but offer different graphics, music, levels, and features.

-Benefits

-Bubble Shooter is not only an entertaining game but also good training for your brain. It exercises various cognitive skills such as concentration, logic, strategy, memory, and reaction speed. It also helps you relieve stress and relax. Bubble Shooter is a game that is fun and good for you at the same time.

-How can you download Bubble Shooter for free?

-If you feel like playing Bubble Shooter, there are many ways to get the game for free. Many websites offer different versions of Bubble Shooter that you can play directly in your browser; all you need is an internet connection and Flash Player. If you would rather install the game on your PC or smartphone, there are also many apps and programs you can download for free. Here are some examples of the best Bubble Shooter games for Windows PCs and Android smartphones.

-For Windows PC

-If you have a Windows PC, you can choose from many Bubble Shooter games that you can download for free. Here are some of the most popular:

-Bubble Shooter Classic

-Bubble Shooter Classic is a free app that you can download from the Microsoft Store. It is a classic version of Bubble Shooter with simple graphics and music. The game has more than 1000 levels to master. You can also create your own levels and share them with other players. The game is easy to play but hard to master. You can compare your score with your friends and try to collect all the stars.

-Bubble Shooter by Netzwelt

-Bubble Shooter by Netzwelt is a free web version of Bubble Shooter that you can play directly in your browser. All you need is an internet connection and Flash Player. The game has colorful graphics and cheerful music. It offers several modes, such as Arcade, Puzzle, and Time, and you can choose between different difficulty levels. The game is very entertaining and challenging.


-Bubble-Shooter from the Microsoft Store

-Bubble-Shooter from the Microsoft Store is another free app that you can download from the Microsoft Store. It is a modern version of Bubble Shooter with attractive graphics and relaxing music. The game has more than 3000 levels to unlock. You can also use various power-ups to help you. The game is very addictive and fun.

-For Android smartphones

-If you have an Android smartphone, you can also download many Bubble Shooter games for free. Here are some of the most popular:

-Bubble Shooter by Ilyon Dynamics

-Bubble Shooter by Ilyon Dynamics is a free app that you can download from the Google Play Store. It is one of the most popular Bubble Shooter apps, with more than 100 million downloads. The game has great graphics, fun music, and more than 3000 levels to play. You can also use various boosters and power-ups to raise your score. The game is very entertaining and exciting.

-Bubble Witch 3 Saga by King

-Bubble Witch 3 Saga by King is another free app that you can download from the Google Play Store. It is one of the most successful Bubble Shooter apps, with more than 50 million downloads. The game has fantastic graphics, magical music, and an interesting storyline in which you help the witch Stella defeat evil. There are more than 2000 levels to explore. You can also challenge your friends and send them gifts. The game is very fascinating and adventurous.

-Panda Pop by Jam City

-Panda Pop by Jam City is yet another free app that you can download from the Google Play Store. It is one of the cutest Bubble Shooter apps, with more than 10 million downloads. The game has sweet graphics, cheerful music, and a touching story in which you help the pandas rescue their babies. There are more than 3000 levels to enjoy. You can also use various power-ups to make your mission easier. The game is very lovable and funny.

-Conclusion: Bubble Shooter is fun and trains your brain

-Bubble Shooter is a game you should not miss if you like puzzle games. It can entertain and challenge you for hours, and it also trains your brain and lifts your mood. Many Bubble Shooter games can be downloaded for free, for both PC and smartphone, in versions with different graphics, music, levels, and features. You can also play with your friends and share your score with them. In short, Bubble Shooter offers you a lot of fun and real benefits.

-Why should you play Bubble Shooter?

-Bubble Shooter has many advantages. Here are some reasons why you should play it:

-

-- It is a simple game that you can learn and play quickly.

-- It is an exciting game that challenges and motivates you again and again.

-- It is an entertaining game that relieves boredom and stress.

-- It is a good game for your brain that improves your cognitive skills.

-- It is a free game that you can play anytime, anywhere.

-

-Which game is the best?

-There are many Bubble Shooter games you can download for free. Which one is best depends on your personal taste and preferences. You can try different games and see which one you like most. Here are some criteria to help you find the best game for you:

-

-- Graphics: A game's graphics can have a big influence on your experience. Choose a game with attractive, clear graphics that you like.

-- Music: A game's music can affect your mood and concentration. Choose a game with pleasant, fitting music that does not distract you.

-- Levels: A game's levels determine its difficulty and variety. Choose a game with enough levels to keep you busy and challenged.

-- Features: A game's features can add fun and challenge. Choose a game with interesting features, such as power-ups, special bubbles, or different modes.

-

-FAQs

-Here are some frequently asked questions about Bubble Shooter:

-

-- How can I increase my score?

-You can increase your score by popping more bubbles at once, collecting bonus points, or using power-ups.

-- How can I stop the bubbles from reaching the bottom?

-You can stop the bubbles from reaching the bottom by shooting quickly and accurately, making use of gaps, or using bombs and other special bubbles.

-- How can I play with my friends?

-You can play with your friends by choosing an app that has a multiplayer mode or social features. You can then invite or challenge your friends, or send them gifts.

-- How can I train my brain?

-You can train your brain by playing Bubble Shooter regularly. The game helps you improve your concentration, logic, strategy, memory, and reaction speed.

-- Where can I learn more about Bubble Shooter?

-You can learn more about Bubble Shooter by researching online or asking other players. You can also visit blogs or forums, or watch videos or listen to podcasts that share tips and tricks.

-That was our article about free full-version Bubble Shooter downloads in German. We hope you found it useful and interesting. If you want to try Bubble Shooter, pick one of the apps or websites we have introduced. Have fun playing and popping!

-

-

\ No newline at end of file

diff --git a/spaces/congsaPfin/Manga-OCR/logs/Download Supreme Duelist Stickman A Funny and Addictive Stickman Game with New Features.md b/spaces/congsaPfin/Manga-OCR/logs/Download Supreme Duelist Stickman A Funny and Addictive Stickman Game with New Features.md

deleted file mode 100644

index e6b50c064c82b2aa2c93ee69bb96519042daa7ef..0000000000000000000000000000000000000000

--- a/spaces/congsaPfin/Manga-OCR/logs/Download Supreme Duelist Stickman A Funny and Addictive Stickman Game with New Features.md

+++ /dev/null

@@ -1,112 +0,0 @@

-

-Download Supreme Duelist Stickman New Version: A Fun and Crazy Stickman Game

-If you are looking for a fun and crazy stickman game, try Supreme Duelist Stickman. This popular action game lets you fight, shoot, and race with stickman characters across various modes and maps. You can also customize your own stickman warriors and challenge your friends or other players online. In this article, we explain what Supreme Duelist Stickman is, how to download the new version, and why you should play it, and we share some tips and tricks to help you win.

- What is Supreme Duelist Stickman?

-Supreme Duelist Stickman is an action game developed by Neron's Brother. It was released in 2019 and has been updated regularly with new features and improvements. The game has over 100 million downloads on Google Play Store and over 10 million downloads on Microsoft Store. You can also play it on your PC using BlueStacks, an Android emulator that lets you enjoy mobile games on a bigger screen.

- Features of Supreme Duelist Stickman

-Different modes and maps

-Supreme Duelist Stickman offers a lot of gameplay variety. You can choose from different modes, such as 1-player, 2-player, and 3-player matches, 4-player matches with CPU opponents, survival mode, a boss fight tournament, and the new map editor. You can also choose from different maps, such as city, forest, desert, space, ice land, lava land, and more. Each map has its own obstacles and challenges to overcome.

- Simple controls and realistic physics

-The game has simple controls that are easy to learn. You move your stickman with the joystick, jump with the jump button, and attack with the weapon button. You can switch weapons by tapping the weapon icon. The game uses realistic physics to simulate the movement and collisions of the stickman characters, so you can see them fly over cliffs, bounce off walls, or fall from heights.

- Customizable stickman characters

-You can also create your own stickman warriors by choosing from different skins, weapons, hats, and accessories. You can unlock new items by playing the game or by watching ads. You can also edit the size, color, and shape of your stickman to make it more unique. You can save your custom stickman in the gallery and use it in any mode or map.

- How to download Supreme Duelist Stickman new version?

-For Android devices

-If you have an Android device, you can download Supreme Duelist Stickman new version from Google Play Store. Just follow these steps:

-

-- Open Google Play Store on your device.

-- Search for Supreme Duelist Stickman.

-- Tap on Install to download the game.

-- Wait for the installation to finish.

-- Tap on Open to launch the game.

-

- For Windows PC

-If you have a Windows PC, you can download Supreme Duelist Stickman new version from Microsoft Store or from BlueStacks[^3^]. BlueStacks is a free and safe Android emulator that lets you play mobile games on your PC. To use BlueStacks, follow these steps:

-

-- Download and install BlueStacks.

-- Launch BlueStacks and sign in with your Google account.

-- Search for Supreme Duelist Stickman in the search bar.

-- Click on Install to download the game.

-- Wait for the installation to finish.

-- Click on Supreme Duelist Stickman icon to launch the game.

-

- Why should you play Supreme Duelist Stickman?

-Supreme Duelist Stickman is not just a simple stickman game. It is a fun and crazy game that will keep you entertained for hours. Here are some of the benefits of playing Supreme Duelist Stickman:

- Benefits of playing Supreme Duelist Stickman

-Fun and addictive gameplay

-The game has a fun and addictive gameplay that will make you want to play more. You can enjoy the thrill of fighting, shooting, and racing with stickman characters in different modes and maps. You can also use different weapons, such as guns, swords, axes, hammers, and more. You can also use gravity and instant KO to defeat your enemies or make them fly away. The game has a lot of humor and surprises that will make you laugh.

- Offline and online modes

-The game has both offline and online modes, so you can play the way you prefer. You can play offline without an internet connection, or play online with other players from around the world. Online, you can join or create rooms, chat with other players, and compete on leaderboards. You can also invite your friends to play with you online or offline.

- Earn rewards and achievements

-The game also rewards you for playing well. You can earn coins, gems, stars, and trophies by completing levels, missions, and challenges. You can use these rewards to unlock new items, skins, weapons, and maps. You can also earn achievements by reaching certain milestones or performing certain actions in the game. You can view your achievements in the menu and share them with your friends.

- Tips and tricks for playing Supreme Duelist Stickman

-Use gravity and instant KO wisely

-One of the unique features of the game is the gravity and instant KO buttons. These buttons can help you win or lose the game depending on how you use them. The gravity button lets you change the direction of gravity in the map. You can use it to make your enemies fall off cliffs or into traps. The instant KO button lets you knock out your enemies instantly. You can use it to finish them off quickly or to escape from a tight situation. However, be careful not to use these buttons too often or too randomly as they can also affect you or your allies.

- Experiment with different weapons and skins

-The game has a lot of weapons and skins that you can choose from. Each weapon has its own advantages and disadvantages. For example, guns have long range but low damage, swords have high damage but short range, axes have high damage but slow speed, etc. You should experiment with different weapons and find the ones that suit your style and strategy. You should also try different skins and customize your stickman characters. Each skin has its own personality and animation. For example, ninja skin has fast movement and stealthy attacks, clown skin has funny gestures and sounds, etc. You should find the skins that match your mood and preference.

- Challenge your friends or other players online

-The game is more fun when you play with other people. You can challenge your friends or other players online in different modes and maps. You can join or create rooms, chat with other players, and compete in leaderboards. You can also invite your friends to play with you online or offline. You can have fun together or test your skills against each other.

- Conclusion

-Supreme Duelist Stickman is a fun and crazy stickman game that you should try. It has a lot of features that will keep you entertained for hours. You can download the new version of the game from Google Play Store or Microsoft Store for free. You can also play it on your PC using BlueStacks, an Android emulator that lets you enjoy mobile games on a bigger screen. You can also follow these tips and tricks to help you win the game:

-

-- Use gravity and instant KO wisely.

-- Experiment with different weapons and skins.

-- Challenge your friends or other players online.

-

-If you are looking for a fun and crazy stickman game, you should download Supreme Duelist Stickman new version and enjoy the thrill of fighting, shooting, and racing with stickman characters in various modes and maps. You can also customize your own stickman warriors and challenge your friends or other players online. Supreme Duelist Stickman is a game that will make you laugh and have fun.

- FAQs

-Here are some of the frequently asked questions about Supreme Duelist Stickman:

-

-- What is the latest version of Supreme Duelist Stickman?

-The latest version of Supreme Duelist Stickman is 3.0.1, which was released on June 15, 2023. It added new weapons, skins, maps, and bug fixes.

-- How can I play Supreme Duelist Stickman with my friends?

-You can play Supreme Duelist Stickman with your friends online or offline. To play online, you need an internet connection. You can then join or create rooms, chat with other players, and compete on leaderboards. To play offline, you need two devices with the game installed. Connect them via Bluetooth or a hotspot and play in 2-player mode.

-- How can I get more coins, gems, stars, and trophies in Supreme Duelist Stickman?

-You can get more coins, gems, stars, and trophies by playing the game or by watching ads. You can use these rewards to unlock new items, skins, weapons, and maps. You can also earn achievements by reaching certain milestones or performing certain actions in the game.

-- How can I change the language of Supreme Duelist Stickman?

-You can change the language of Supreme Duelist Stickman by going to the settings menu and tapping on the language option. You can choose from English, Spanish, Portuguese, French, German, Russian, Turkish, Arabic, Indonesian, Vietnamese, Thai, Japanese, Korean, Chinese (Simplified), and Chinese (Traditional).

-- Is Supreme Duelist Stickman safe to download and play?

-Yes, Supreme Duelist Stickman is safe to download and play. It does not contain any viruses or malware. It also does not require any personal information or permissions from your device. However, you should be careful not to download the game from untrusted sources or websites as they may contain harmful files or links.


-

-

\ No newline at end of file

diff --git a/spaces/congsaPfin/Manga-OCR/logs/FIFA Mobile Play the Official FIFA World Cup 2022 Game with Your Favorite Soccer Stars and Teams.md b/spaces/congsaPfin/Manga-OCR/logs/FIFA Mobile Play the Official FIFA World Cup 2022 Game with Your Favorite Soccer Stars and Teams.md

deleted file mode 100644

index 77267953572b38ad24edd3ad235a90834c2dbbd6..0000000000000000000000000000000000000000

--- a/spaces/congsaPfin/Manga-OCR/logs/FIFA Mobile Play the Official FIFA World Cup 2022 Game with Your Favorite Soccer Stars and Teams.md

+++ /dev/null

@@ -1,110 +0,0 @@

-

-FIFA Soccer Mobile APK: Everything You Need to Know

-If you are a soccer fan and you want to experience the thrill of playing with your favorite soccer stars on your mobile device, then you should check out FIFA Soccer Mobile APK. This is a free-to-play soccer game developed by Electronic Arts that lets you build your dream team, compete in various modes, and relive the world's greatest soccer tournament, the FIFA World Cup 2022™. In this article, we will tell you everything you need to know about FIFA Soccer Mobile APK, including its features, how to download and install it, how to play it, and its pros and cons.

- What is FIFA Soccer Mobile APK?

-FIFA Soccer Mobile APK is an Android game that is based on the popular FIFA franchise. It is a soccer simulation game that allows you to create your own ultimate team of soccer players from over 15,000 authentic soccer stars, including world-class talent like Kylian Mbappé, Christian Pulisic, Vinicius Jr and Son Heung-min. You can also choose from over 600 teams, including Chelsea, Paris SG, Real Madrid, Liverpool and Juventus. You can play in various modes, such as Head-to-Head, VS Attack, and Manager Mode. You can also relive the official tournament brackets of the FIFA World Cup 2022™ with any of the 32 qualified nations. FIFA Soccer Mobile APK is updated regularly with new players, kits, clubs and leagues to reflect the real world 22/23 soccer season.

- Features of FIFA Soccer Mobile APK

-FIFA Soccer Mobile APK has many features that make it one of the best soccer games on mobile devices. Here are some of the main features that you can enjoy in this game:

- FIFA World Cup 2022™ Mode

-This is a special mode that lets you relive the official tournament brackets of the FIFA World Cup 2022™ with any of the 32 qualified nations. You can play with authentic World Cup national team kits and badges, the official match ball, and in World Cup stadiums (Al Bayt and Lusail). You can also enjoy localized World Cup commentary to bring the most immersive match atmosphere.

- Soccer ICONs and Heroes

-This feature allows you to collect and play with over 100 soccer Heroes and ICONs from different leagues and eras. You can score big with world soccer ICONs like Paolo Maldini, Ronaldinho, and more. You can also level up your team with soccer legends from more than 30 leagues.

- Immersive Next-Level Soccer Simulation

-This feature gives you a realistic and thrilling soccer experience on your mobile device. You can play in new, upgraded soccer stadiums, including several classic FIFA venues, at up to 60 fps. You can also hear realistic stadium sound effects and live on-field audio commentary.

- Manager Mode

-This feature lets you be the soccer manager of your own dream team. You can plan your strategy and adjust your tactics in real time or choose auto-play to enjoy an idle soccer manager game experience.

- How to Download and Install FIFA Soccer Mobile APK?

-If you want to download and install FIFA Soccer Mobile APK on your Android device, you need to follow these steps:

- Requirements for FIFA Soccer Mobile APK

-

-- Your Android device must run Android 6.0 or higher.

-- Your Android device must have at least 1 GB of RAM.

-- Your Android device must have at least 100 MB of free storage space.

-- You must have a stable internet connection to download and play the game.

-
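You can turn the requirements above into a quick self-check. The following is a minimal sketch that assumes a hypothetical `DeviceSpec` record, which you fill in by hand from your phone's settings. The thresholds are the ones listed above. The function only compares numbers and does not query a real device.

```python
from dataclasses import dataclass


@dataclass
class DeviceSpec:
    # Hypothetical record: fill these in from Settings > About phone.
    android_version: tuple[int, int]  # e.g. (6, 0) for Android 6.0
    ram_gb: float
    free_storage_mb: int
    has_internet: bool


# Minimums taken from the requirements list above.
MIN_ANDROID = (6, 0)
MIN_RAM_GB = 1.0
MIN_FREE_MB = 100


def unmet_requirements(spec: DeviceSpec) -> list[str]:
    """Return the requirements the device fails; an empty list means it qualifies."""
    problems = []
    # Tuples compare element by element, so (5, 1) < (6, 0) is True.
    if spec.android_version < MIN_ANDROID:
        problems.append("Android 6.0 or higher is required")
    if spec.ram_gb < MIN_RAM_GB:
        problems.append("at least 1 GB of RAM is required")
    if spec.free_storage_mb < MIN_FREE_MB:
        problems.append("at least 100 MB of free storage is required")
    if not spec.has_internet:
        problems.append("a stable internet connection is required")
    return problems


if __name__ == "__main__":
    phone = DeviceSpec(android_version=(5, 1), ram_gb=2.0, free_storage_mb=500, has_internet=True)
    print(unmet_requirements(phone))
```

In this example, only the Android version check fails, so the list contains a single message.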

- Steps to Download and Install FIFA Soccer Mobile APK

-

-- Go to the official FIFA Soccer Mobile website and tap the download button.

-- Wait for the download to finish and then locate the APK file on your device.

-- Tap on the APK file and allow the installation from unknown sources if prompted.

-- Follow the on-screen instructions and wait for the installation to complete.

-- Launch the game and enjoy playing FIFA Soccer Mobile APK on your device.

-

- How to Play FIFA Soccer Mobile APK?

-Playing FIFA Soccer Mobile APK is easy and fun. You just need to follow these steps:

- Build Your Ultimate Team

-The first thing you need to do is to create your own ultimate team of soccer players. You can choose from over 15,000 authentic soccer stars, including world-class talent like Kylian Mbappé, Christian Pulisic, Vinicius Jr and Son Heung-min. You can also customize your team's formation, tactics, kits, and badges. You can earn coins and rewards by playing matches, completing objectives, and participating in events. You can use these coins and rewards to buy new players, upgrade your team, and unlock new features.

- Compete in PVP Modes

-The next thing you need to do is to compete in various PVP modes against other players from around the world. You can play in Head-to-Head mode, where you can control your team on the pitch and show your skills in real time. You can also play in VS Attack mode, where you can take turns attacking and defending with your opponent in a fast-paced match. You can also join a League and team up with other players to compete in tournaments and events. You can climb the leaderboards, earn trophies, and win exclusive rewards by competing in PVP modes.

- Train Your Players and Level Up Your Team

-The last thing you need to do is to train your players and level up your team. You can improve your players' attributes, skills, and abilities by using training items and XP. You can also unlock new skill moves, traits, and specialties for your players by leveling them up. You can also boost your team's overall rating by increasing your team chemistry. You can increase your team chemistry by using players from the same club, league, nation, or region. You can also use soccer ICONs and Heroes to boost your team chemistry with any player.
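The linking rule described above can be illustrated with a toy score. This is a hypothetical sketch, not EA's actual chemistry formula, which the article does not give. It assumes that two players are "linked" if they share a club, league, nation, or region, and that an ICON or Hero links with anyone. The score is the fraction of player pairs that are linked.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Player:
    # Hypothetical player record for illustration only.
    name: str
    club: str
    league: str
    nation: str
    region: str
    is_icon_or_hero: bool = False


def linked(a: Player, b: Player) -> bool:
    # Assumption: ICONs and Heroes link with any player.
    if a.is_icon_or_hero or b.is_icon_or_hero:
        return True
    # Otherwise, any shared club, league, nation, or region forms a link.
    return (a.club == b.club or a.league == b.league
            or a.nation == b.nation or a.region == b.region)


def chemistry_score(squad: list[Player]) -> float:
    """Fraction of player pairs that are linked, from 0.0 to 1.0 (toy metric)."""
    pairs = list(combinations(squad, 2))
    if not pairs:
        return 0.0
    return sum(linked(a, b) for a, b in pairs) / len(pairs)
```

Under this toy rule, adding one ICON to a three-player squad with a single league link raises the score from 1/3 to 4/6, because the ICON links with all three existing players.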

- Pros and Cons of FIFA Soccer Mobile APK

-FIFA Soccer Mobile APK has many pros and cons that you should consider before playing it. Here are some of them:

- Pros of FIFA Soccer Mobile APK

-

-- It is free-to-play and does not require any subscription or registration.

-- It has high-quality graphics, sound effects, and commentary that create an immersive soccer experience.

-- It has a large variety of players, teams, modes, and features that offer endless gameplay possibilities.

-- It is updated regularly with new content that reflects the real world 22/23 soccer season.

-- It is compatible with most Android devices and does not require a lot of storage space or battery power.

-

- Cons of FIFA Soccer Mobile APK

-

-- It requires a stable internet connection to download and play the game.

-- It may have some bugs or glitches that affect the game performance or functionality.

-- It may have some ads or in-app purchases that may interrupt the game flow or affect the game balance.

-- It may have some compatibility issues with some Android devices or operating systems.

-- It may have some content or features that are restricted by region or age rating.

-

- Conclusion

-FIFA Soccer Mobile APK is a great soccer game that lets you build your dream team, compete in various modes, and relive the world's greatest soccer tournament, the FIFA World Cup 2022™. Its many features make it one of the best soccer games on mobile devices, and it is free-to-play, easy to download and install, and fun to play. However, it also has drawbacks. It requires a stable internet connection and may have bugs or glitches. It may also include ads or in-app purchases, have compatibility issues on some devices, and restrict some content or features by region or age rating. Weigh these pros and cons before you start. If you are a soccer fan who wants to play with your favorite soccer stars on your mobile device, give FIFA Soccer Mobile APK a try.

- FAQs

-Here are some FAQs that you might have about FIFA Soccer Mobile APK:

-

-- Is FIFA Soccer Mobile APK safe to download and install?

-Yes, as long as you download it from the official website or another trusted source. Avoid unknown or suspicious sources, because their files might contain viruses or malware that can harm your device or steal your personal information.

-- Is FIFA Soccer Mobile APK compatible with iOS devices?

-No, the APK only works on Android devices. If you have an iOS device, download FIFA Soccer from the App Store instead.

-- How can I contact the developers or support team of FIFA Soccer Mobile APK?

-Use the in-game help center, visit the official website, or follow the game's official social media accounts.

-- How can I get more coins and rewards in FIFA Soccer Mobile APK?

-Play matches, complete objectives, participate in events, watch ads, or make in-app purchases.

-- How can I update FIFA Soccer Mobile APK to the latest version?

-Use the in-game update feature, visit the official website, or check the Google Play Store for updates.

-

-

\ No newline at end of file

diff --git a/spaces/congsaPfin/Manga-OCR/logs/Google Go APK The Best Way to Search Translate and Discover on the Web.md b/spaces/congsaPfin/Manga-OCR/logs/Google Go APK The Best Way to Search Translate and Discover on the Web.md

deleted file mode 100644

index c5954275aedbabfc2ae9a5b0dbe35368cdbf4504..0000000000000000000000000000000000000000

--- a/spaces/congsaPfin/Manga-OCR/logs/Google Go APK The Best Way to Search Translate and Discover on the Web.md

+++ /dev/null

@@ -1,120 +0,0 @@

-

-Google Go APK: A Lighter and Faster Way to Search

-Have you ever wished for a simpler and faster way to search the web on your Android device? If so, you might want to try Google Go APK, a lighter version of the classic Google Search app that is designed for countries with slow connections and low-end smartphones. In this article, we will tell you what Google Go APK is, how to download and install it, and what benefits it offers.

- What is Google Go APK?

-Google Go APK (Android App) is a reduced version of the Google Search app that is optimized to save up to 40% data and run smoothly on devices with low space and memory. It is only 12MB in size, which makes it fast to download and easy to store on your phone. It also has a simple and intuitive interface that lets you access your favorite apps and websites, as well as images, videos, news, and more, with just a few taps or voice commands.

- Features of Google Go APK

-Google Go APK has many features that make it a great alternative to the standard Google Search app. Here are some of them:

- Type less, discover more

-With Google Go APK, you can save time by tapping your way through trending queries and topics, or by using your voice to say what you're looking for. You can also use Google Lens to point your camera at text or objects and get instant information or translations.

- Make Google read it

-If you don't feel like reading a web page, you can make Google read it for you. Just tap the speaker icon and listen to any web page in your preferred language. The words are highlighted as they are read, so you can easily follow along.

- Search and translate with your camera

-Google Go APK also lets you use your camera to search and translate anything you see. Whether it's a sign, a form, a product, or a word you don't understand, you can just point your camera at it and get instant results or translations.

- Everything you need in one app

-Google Go APK is more than just a search app. It also gives you easy and quick access to your favorite apps and websites, as well as images, videos, and information on the things you care about. You can also customize your home screen with wallpapers and shortcuts to suit your preferences.

- Don't miss out on what's popular and trending

-With Google Go APK, you can always stay updated on what's happening in the world. You can explore the latest trending topics by tapping the search bar, or find the perfect greetings to share with your loved ones by tapping on "Images" or "GIFs". You can also discover new content based on your interests and location.

- Easily switch between languages

-If you want to search in another language, you don't have to change your settings or use a separate app. With Google Go APK, you can set a second language to switch your search results to or from at any time. You can also translate any web page or text with just one tap.

- How to download and install Google Go APK?

-If you want to try Google Go APK on your Android device, there are two ways to download and install it:

- Download from Google Play Store

-The easiest way to get Google Go APK is to download it from the official Google Play Store. Just follow these steps:

-

-- Open the Google Play Store app on your device

-- Search for "Google Go" (the listing is titled "Google Go: A lighter, faster way to search")

-- Tap on the "Install" button and wait for the app to download and install on your device

-- Open the app and enjoy its features

-

- Download from APK websites

-If you can't access the Google Play Store or want to download the APK file directly, you can also get Google Go APK from various APK websites. However, be careful to choose a reputable and safe source, as some APK files may contain malware or viruses. Here are the steps to follow:

-

-- Go to a trusted APK website, such as APKPure or APKMirror

-- Search for "Google Go" and open its download page (on APKMirror it is listed as "Google Go: A lighter, faster way to search" by Google LLC, at the time of writing version 3.39.397679726.release)

-- Download the latest version of the APK file to your device

-- Allow installation from unknown sources in your device settings (on Android 8.0 and later, this is granted per app through the "Install unknown apps" permission for your browser or file manager)

-- Locate the downloaded APK file and tap on it to install it on your device

-- Open the app and enjoy its features

-
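When you download an APK from a third-party site, one common precaution is to compare the file's SHA-256 hash with a checksum published by the site, if it provides one. Below is a minimal sketch using Python's standard `hashlib`. The script name, file name, and expected hash are placeholders you supply, not real values for Google Go. A match only shows that the file is identical to what the site published. It does not prove that the site itself is trustworthy.

```python
import hashlib
import sys
from pathlib import Path
from typing import Iterable, Iterator


def read_chunks(path: Path, chunk_size: int = 1 << 20) -> Iterator[bytes]:
    """Yield the file in 1 MiB chunks so a large APK never has to fit in memory."""
    with path.open("rb") as f:
        while chunk := f.read(chunk_size):
            yield chunk


def sha256_hex(chunks: Iterable[bytes]) -> str:
    """Hash a stream of byte chunks and return the hex digest."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def matches_checksum(actual_hex: str, published_hex: str) -> bool:
    # Sites may publish the digest in upper or lower case, sometimes with stray whitespace.
    return actual_hex.lower() == published_hex.strip().lower()


if __name__ == "__main__":
    # Usage (placeholder names): python verify_apk.py <downloaded.apk> <published-sha256>
    apk_path, published = Path(sys.argv[1]), sys.argv[2]
    actual = sha256_hex(read_chunks(apk_path))
    print("Checksum matches" if matches_checksum(actual, published) else "Checksum MISMATCH: do not install")
```

Reading the file in chunks keeps memory use flat regardless of APK size. Separating hashing from file reading also makes the hash function easy to test on in-memory bytes.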

- Benefits of using Google Go APK

-Google Go APK is not just a lighter and faster version of the Google Search app. It also has many benefits that make it a better choice for some users. Here are some of them:

- Save data and space

-One of the main advantages of Google Go APK is that it helps you save data and space on your device. It uses up to 40% less data than the standard Google Search app, which means you can browse more with less data charges. It also only takes up 12MB of space on your device, which is much less than the 100MB+ of the Google Search app. This means you can free up more space for other apps and files.
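The savings claims above are simple arithmetic. In this sketch, "up to 40%" is treated as a best case, so real savings may be smaller. The 12 MB and 100 MB defaults are the figures quoted in this article, not measured values, and the 500 MB monthly usage is a made-up example.

```python
def go_data_estimate_mb(standard_app_mb: float, savings: float = 0.40) -> float:
    """Best-case data use with Google Go, given what the standard app would use.

    'Up to 40% less data' is an upper bound, so actual use may be higher.
    """
    if not 0.0 <= savings <= 1.0:
        raise ValueError("savings must be a fraction between 0 and 1")
    return standard_app_mb * (1.0 - savings)


def storage_saved_mb(standard_app_mb: float = 100.0, go_app_mb: float = 12.0) -> float:
    """Approximate storage freed by installing Google Go instead of the standard app."""
    return standard_app_mb - go_app_mb


if __name__ == "__main__":
    # Hypothetical month: 500 MB of searching with the standard app.
    print(go_data_estimate_mb(500))
    print(storage_saved_mb())
```

For example, 500 MB of monthly use with the standard app would drop to roughly 300 MB in the best case. Installing Google Go instead of the standard app would free at least about 88 MB of storage.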

- Get answers quickly and reliably

-Another benefit of Google Go APK is that it delivers fast and reliable results, even on slow or unstable connections. It loads web pages quickly and optimizes them for your device, so you can see more content with less scrolling and tapping. It also works well offline, as it remembers your recent searches and shows them to you when you have no connection. You can also use voice search or Google Lens to get answers without typing.
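Offline recall of recent searches can be pictured as a small, bounded history. This is a hypothetical sketch of the general idea, not how Google Go actually stores its history. It keeps the N most recent distinct queries and moves a repeated query to the front instead of duplicating it. The newest searches are returned first, ready to show when there is no connection.

```python
from collections import OrderedDict


class RecentSearches:
    """Keep the N most recent distinct queries, newest first (hypothetical sketch)."""

    def __init__(self, capacity: int = 10) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        # Insertion order tracks recency: the last key is the newest query.
        self._queries = OrderedDict()

    def record(self, query: str) -> None:
        key = query.strip()
        if not key:
            return  # ignore blank searches
        # Re-searching a query moves it to the newest position instead of duplicating it.
        self._queries.pop(key, None)
        self._queries[key] = None
        # Drop the oldest entries once the history is over capacity.
        while len(self._queries) > self.capacity:
            self._queries.popitem(last=False)

    def recent(self) -> list[str]:
        # Newest first, for display when the device is offline.
        return list(reversed(self._queries))
```

Using `OrderedDict` gives constant-time updates and cheap eviction of the oldest entry, which suits a short history shown on a low-end device.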

- Explore the web with ease

-A third benefit of Google Go APK is that it makes it easy for you to explore the web and find what you need. It has a simple and intuitive interface that lets you access your favorite apps and websites with just a few taps. You can also discover new content based on your interests and location, such as images, videos, news, and more. You can also customize your home screen with wallpapers and shortcuts to suit your preferences.

- Conclusion

-In conclusion, Google Go APK is a great alternative to the standard Google Search app for Android users who want a lighter and faster way to search the web. It has many features that make it convenient and enjoyable to use, such as voice search, Google Lens, web page reading, language switching, and more. It also helps you save data and space on your device, as well as get answers quickly and reliably, even on slow or unstable connections. If you want to try Google Go APK on your device, you can download it from the Google Play Store or from various APK websites.

- Frequently Asked Questions (FAQs)

-Here are some common questions and answers about Google Go APK:

-

-- Is Google Go APK safe?

-Yes, Google Go APK is safe to use, as long as you download it from a trusted source, such as the Google Play Store or a reputable APK website. It is developed by Google LLC, which is a well-known and reliable company that creates many popular apps and services.

- - What is the difference between Google Go APK and Google Search app?

-The main difference between Google Go APK and Google Search app is that Google Go APK is a lighter and faster version of the Google Search app that is optimized for low-end devices and slow connections. It has fewer features than the Google Search app, but it also uses less data and space on your device.

- - Can I use Google Go APK on any Android device?

-Yes, you can use Google Go APK on any Android device that runs Android 5.0 (Lollipop) or higher. However, it is designed especially for devices with limited storage and memory, as well as for slow or unstable connections.

- - How can I update Google Go APK?

-If you downloaded Google Go APK from the Google Play Store, you can update it automatically or manually through the app store. If you downloaded it from an APK website, you can check for updates on the same website and download the latest version of the APK file.

- - How can I uninstall Google Go APK?

-If you want to uninstall Google Go APK from your device, you can do so by following these steps:

-

-- Go to your device settings and tap on "Apps" or "Applications"

-- Find and tap on "Google Go" in the list of apps

-- Tap on "Uninstall" and confirm your action

-

- I hope this article was helpful and informative for you. If you have any questions or feedback, please feel free to leave a comment below. Thank you for reading!

-

-

\ No newline at end of file

diff --git a/spaces/congsaPfin/Manga-OCR/logs/Mi Remote APK Old Version - Free Download for Android.md b/spaces/congsaPfin/Manga-OCR/logs/Mi Remote APK Old Version - Free Download for Android.md

deleted file mode 100644

index 6ea6fedf0a4d6c198a2006405f87f4355437bec1..0000000000000000000000000000000000000000

--- a/spaces/congsaPfin/Manga-OCR/logs/Mi Remote APK Old Version - Free Download for Android.md

+++ /dev/null

@@ -1,101 +0,0 @@

-

-Mi Remote APK Download Old Version: How to Control Your TV, AC, and More with Your Phone

-Do you want to turn your phone into a universal remote control for your TV, air conditioner, set-top box, projector, and other devices? If you have a Xiaomi phone, you can do that with the Mi Remote app. But what if you don't like the latest version of the app, or it doesn't work well with your phone or devices? Don't worry: you can still download an old version of the Mi Remote APK and enjoy its features. In this article, we explain what Mi Remote APK is, why you might want to download an old version, how to download and install it, and how to use it to control your devices.

-What is Mi Remote APK?

-Mi Remote is an app developed by Xiaomi that lets you use your phone as a remote control for devices that support infrared (IR) or Wi-Fi connections. You can control your TV, air conditioner, set-top box, projector, fan, camera, DVD player, and more with just one app. You can also customize the buttons and layout of the remote to your preference. Mi Remote is compatible with most brands and models of devices, so you don't need to worry about finding the right remote for each device.

-Features of Mi Remote APK

-Some of the features of Mi Remote APK are:

-

-- Supports IR and Wi-Fi connections for different devices

-- Compatible with most brands and models of devices

-- Allows customization of the remote's buttons and layout

-- Provides enhanced TV guide and program recommendations

-- Supports voice control and smart gestures

-- Integrates with Mi Home app for smart home devices

-

-Benefits of Mi Remote APK

-Some of the benefits of using Mi Remote APK are:

-

-- You can save space and avoid clutter by using one app instead of multiple remotes

-- You can easily switch between different devices without changing remotes

-- You can access more functions and settings than a regular remote

-- You get a richer, easier-to-use interface than a regular remote offers

-- You can control Wi-Fi devices from anywhere in your home or office (IR devices still require the phone to point at them with a clear line of sight)

-

-Why Download Mi Remote APK Old Version?

-While the latest version of Mi Remote APK may have some improvements and bug fixes, it may also have some drawbacks that make you want to download the old version instead. Here are some possible reasons why you might prefer the old version:

-Compatibility Issues with New Version

-The new version of Mi Remote APK may not be compatible with some older models of phones or devices. You may experience crashes, glitches, or errors when using the app. The old version may work better with your phone or devices and offer more stability and performance.

-Preference for Old Interface and Design

-The new version of Mi Remote APK may have changed the interface and design of the app. You may not like the new look or feel of the app. You may find it harder to navigate or use the app. The old version may have a simpler or more familiar interface and design that you are used to and prefer.

-Security and Privacy Concerns with New Version

-The new version of Mi Remote APK may have added some features or permissions that may compromise your security and privacy. You may not want to share your personal data or location with the app or third parties. You may not trust the app to protect your information from hackers or malware. The old version may have fewer or more transparent features and permissions that you are comfortable with and trust.

-How to Download Mi Remote APK Old Version?

-If you have decided to download the old version of Mi Remote APK, you need to follow some steps to do it safely and successfully. Here are the steps you need to take:

-Step 1: Find a Reliable Source for the APK File

-The APK file is the installation file for Android apps. You need to find a reliable source that offers the old version of Mi Remote APK that you want. You can search online for websites that provide APK files for various apps, but be careful to avoid untrusted or malicious sources that may bundle viruses or malware. Check the reviews, ratings, and comments of other users to verify the credibility and quality of the source. You can also scan the downloaded APK file with antivirus software before installing it.

-Step 2: Enable Unknown Sources on Your Phone Settings

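-By default, your phone does not allow you to install apps from sources other than the Google Play Store, so you need to allow installs from unknown sources. On older Android versions, go to Settings > Security > Unknown Sources and toggle it on. On Android 8.0 (Oreo) and later, this permission is granted per app instead: when you open the APK, follow the prompt and enable "Allow from this source" for the app you downloaded it with, such as your browser or file manager. You may see a warning that installing apps from unknown sources may harm your device. Tap OK to proceed.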

-Step 3: Download and Install the APK File

-Once you have found a reliable source and enabled unknown sources, you can download the old version of the Mi Remote APK file. You can use your browser or a download manager app to download the file. After downloading, locate the file in your phone storage and tap it to install it. You may see a confirmation message asking whether you want to install the app. Tap Install to continue.

-Step 4: Launch the App and Grant Permissions

-After installing, you can launch the app from your app drawer or home screen. You may see a message that asks you to grant permissions to the app. Tap Allow to grant the necessary permissions for the app to function properly. You may also see a message that asks you to update the app to the latest version. Tap Cancel or Skip to ignore it and use the old version.

-How to Use Mi Remote APK to Control Your Devices?

-Now that you have downloaded and installed the old version of Mi Remote APK, you can use it to control your devices with your phone. Here are the steps you need to take:

-Step 1: Select the Device Type and Brand

-Open the app and tap on the Add Remote button at the bottom of the screen. You will see a list of device types that you can control with the app, such as TV, AC, Set-top Box, Projector, etc. Tap on the device type that you want to control. You will then see a list of brands that are compatible with the app, such as Samsung, LG, Sony, etc. Tap on the brand of your device.

-Step 2: Pair Your Phone with the Device via IR or Wi-Fi