Commit 6aa2b53 · Parent(s): 4f92e8e

Update parquet files (step 55 of 476)

This view is limited to 50 files because it contains too many changes.

- spaces/1acneusushi/gradio-2dmoleculeeditor/data/B Ampr Automation Studio 4 Download Crack.md +0 -22

- spaces/1acneusushi/gradio-2dmoleculeeditor/data/Datem Summit Evolution Crack Para How to Get the Latest Version of the 3D Stereo Software.md +0 -121

- spaces/1gistliPinn/ChatGPT4/Examples/Autodesk Revit 2018 Crack WORK Keygen XForce Free Download.md +0 -6

- spaces/1gistliPinn/ChatGPT4/Examples/Fireflies Movie English Subtitles Download !!LINK!! Torrent.md +0 -22

- spaces/1phancelerku/anime-remove-background/Download Free and Unlimited Android Mods with APKMODEL.md +0 -75

- spaces/1phancelerku/anime-remove-background/Download Nada Dering WA Tiktok Suara Google BTS Chagiya dan Lainnya.md +0 -76

- spaces/ADOPLE/Multi-Doc-Virtual-Chatbot/app.py +0 -202

- spaces/AIConsultant/MusicGen/audiocraft/grids/compression/encodec_base_24khz.py +0 -28

- spaces/AIGC-Audio/AudioGPT/audio_to_text/captioning/utils/build_vocab_ltp.py +0 -150

- spaces/AIGC-Audio/Make_An_Audio/ldm/modules/encoders/open_clap/openai.py +0 -129

- spaces/AIGText/GlyphControl/ldm/modules/image_degradation/bsrgan.py +0 -730

- spaces/AIlexDev/Einfach.Hintergrund/app.py +0 -154

- spaces/ATang0729/Forecast4Muses/Model/Model6/Model6_1_ClothesKeyPoint/mmpose_1_x/configs/fashion_2d_keypoint/topdown_heatmap/deepfashion2/td_hm_res50_4xb32-120e_deepfashion2_sling_256x192.py +0 -172

- spaces/AgentVerse/agentVerse/ui/src/phaser3-rex-plugins/templates/ui/click/Factory.d.ts +0 -7

- spaces/AgentVerse/agentVerse/ui/src/phaser3-rex-plugins/templates/ui/dynamictext/Factory.d.ts +0 -5

- spaces/Ajaymekala/gradiolangchainChatBotOpenAI-1/app.py +0 -34

- spaces/Alpaca233/SadTalker/src/face3d/models/arcface_torch/docs/speed_benchmark.md +0 -93

- spaces/Androidonnxfork/CivitAi-to-Diffusers/diffusers/docs/source/en/api/schedulers/ddim_inverse.md +0 -21

- spaces/Androidonnxfork/CivitAi-to-Diffusers/diffusers/utils/check_copies.py +0 -213

- spaces/Andy1621/uniformer_image_detection/mmdet/core/bbox/assigners/hungarian_assigner.py +0 -145

- spaces/Andy1621/uniformer_image_segmentation/configs/deeplabv3plus/deeplabv3plus_r50-d8_480x480_40k_pascal_context.py +0 -10

- spaces/AnnonSubmission/xai-cl/README.md +0 -12

- spaces/Annotation-AI/fast-segment-everything-with-image-prompt/app.py +0 -17

- spaces/Anonymous-sub/Rerender/ControlNet/annotator/uniformer/mmcv/ops/ball_query.py +0 -55

- spaces/Ariharasudhan/YoloV5/models/common.py +0 -860

- spaces/Arnx/MusicGenXvAKN/Makefile +0 -21

- spaces/Augustya/ai-subject-answer-generator/app.py +0 -7

- spaces/Awiny/Image2Paragraph/models/grit_src/third_party/CenterNet2/detectron2/utils/analysis.py +0 -188

- spaces/Benson/text-generation/Examples/Bloons Td 6 Apk Download Android.md +0 -49

- spaces/Benson/text-generation/Examples/Creality Ender 3 S1 Pro Cura Perfil Descargar.md +0 -84

- spaces/Big-Web/MMSD/env/Lib/site-packages/pip/_vendor/pygments/__main__.py +0 -17

- spaces/Big-Web/MMSD/env/Lib/site-packages/pip/_vendor/resolvelib/reporters.py +0 -43

- spaces/Big-Web/MMSD/env/Lib/site-packages/pkg_resources/_vendor/importlib_resources/__init__.py +0 -36

- spaces/Big-Web/MMSD/env/Lib/site-packages/setuptools/_distutils/py38compat.py +0 -8

- spaces/Big-Web/MMSD/env/Lib/site-packages/setuptools/extension.py +0 -148

- spaces/CVPR/Dual-Key_Backdoor_Attacks/datagen/grid-feats-vqa/train_net.py +0 -128

- spaces/CVPR/DualStyleGAN/README.md +0 -13

- spaces/CVPR/LIVE/thrust/thrust/detail/complex/csinhf.h +0 -142

- spaces/CVPR/LIVE/thrust/thrust/detail/preprocessor.h +0 -1182

- spaces/CVPR/LIVE/thrust/thrust/detail/use_default.h +0 -27

- spaces/CVPR/LIVE/thrust/thrust/system/omp/detail/execution_policy.h +0 -107

- spaces/CVPR/LIVE/thrust/thrust/system/omp/detail/transform_scan.h +0 -23

- spaces/CVPR/regionclip-demo/detectron2/modeling/test_time_augmentation.py +0 -307

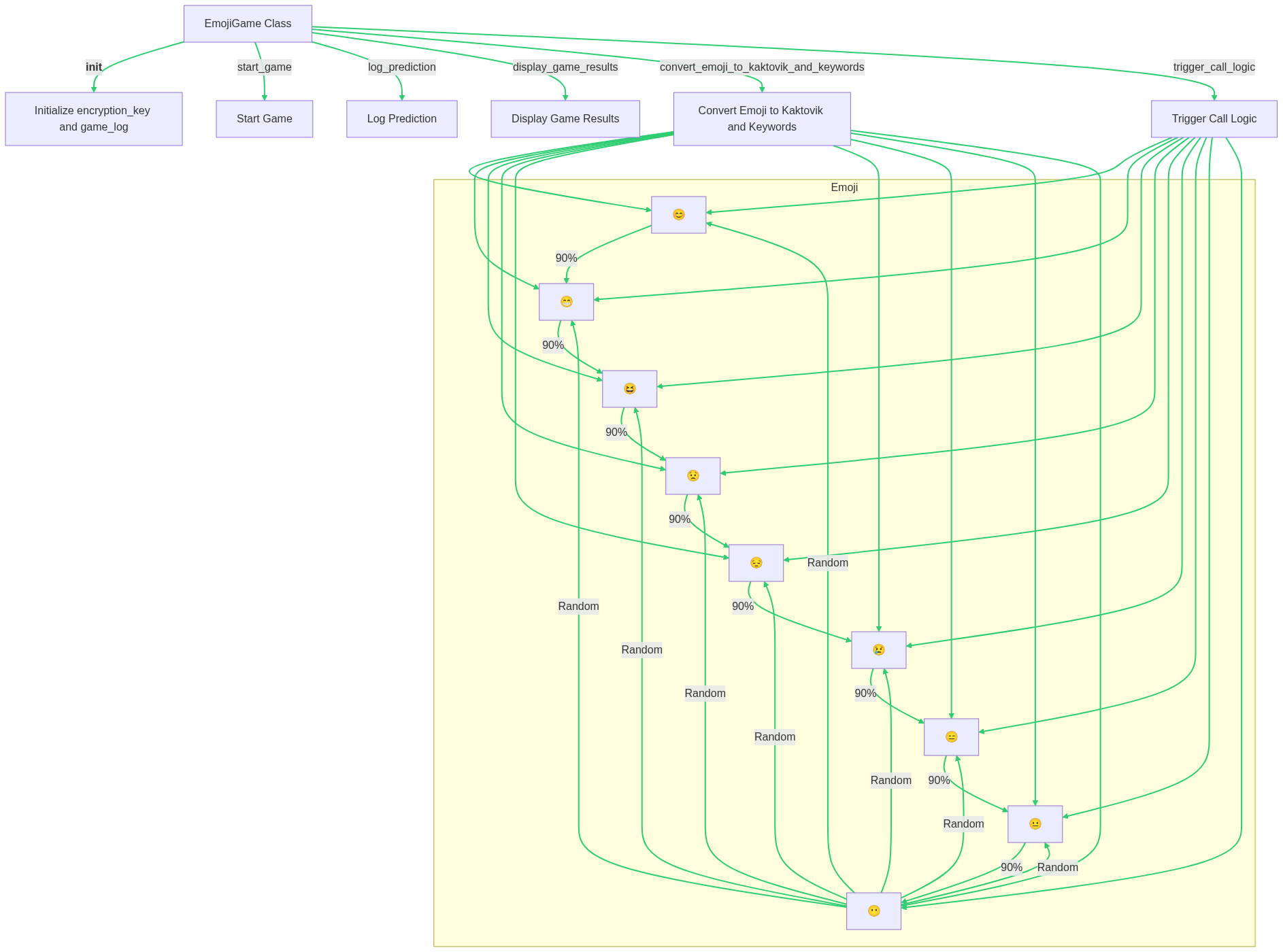

- spaces/CaliforniaHealthCollaborative/Emoji2KaktovicEncryptKey/EMOJILOGIC.md +0 -11

- spaces/Chomkwoy/Nilkessye/syllable_model.py +0 -55

- spaces/Cicooo/vits-uma-genshin-honkai/text/symbols.py +0 -39

- spaces/CikeyQI/Yunzai/Yunzai/plugins/other/version.js +0 -27

- spaces/CikeyQI/meme-api/meme_generator/memes/cover_face/__init__.py +0 -19

- spaces/Cong723/gpt-academic-public/check_proxy.py +0 -151

- spaces/DQChoi/gpt-demo/venv/lib/python3.11/site-packages/gradio/templates/frontend/assets/module-447425fe.js +0 -9

spaces/1acneusushi/gradio-2dmoleculeeditor/data/B Ampr Automation Studio 4 Download Crack.md

DELETED

|

@@ -1,22 +0,0 @@

|

|

| 1 |

-

|

| 2 |

-

<h1>How to Download and Install B&R Automation Studio 4</h1>

|

| 3 |

-

<p>B&R Automation Studio 4 is a software tool that allows you to design, program, test and debug automation systems. It supports a wide range of hardware platforms, such as PLCs, industrial PCs, servo drives, HMIs and more. With B&R Automation Studio 4, you can create modular and reusable software components, use graphical editors for logic and motion control, simulate your system before deployment, and benefit from integrated diagnostics and troubleshooting features.</p>

|

| 4 |

-

<h2>b amp;r automation studio 4 download crack</h2><br /><p><b><b>Download</b> ===== <a href="https://byltly.com/2uKz3Z">https://byltly.com/2uKz3Z</a></b></p><br /><br />

|

| 5 |

-

<p>If you want to download and install B&R Automation Studio 4 on your computer, you need to follow these steps:</p>

|

| 6 |

-

<ol>

|

| 7 |

-

<li>Go to the official website of B&R Industrial Automation at <a href="https://www.br-automation.com/">https://www.br-automation.com/</a> and click on the "Downloads" tab.</li>

|

| 8 |

-

<li>Under the "Software" section, find the link for "B&R Automation Studio 4" and click on it.</li>

|

| 9 |

-

<li>You will be redirected to a page where you can choose the version and language of B&R Automation Studio 4 that you want to download. You can also check the system requirements and the release notes for each version.</li>

|

| 10 |

-

<li>After selecting your preferences, click on the "Download" button and save the file to your computer.</li>

|

| 11 |

-

<li>Once the download is complete, run the file and follow the instructions on the screen to install B&R Automation Studio 4 on your computer.</li>

|

| 12 |

-

<li>You may need to restart your computer after the installation is finished.</li>

|

| 13 |

-

<li>To launch B&R Automation Studio 4, go to the Start menu and look for the B&R folder. Then, click on the "B&R Automation Studio 4" icon.</li>

|

| 14 |

-

</ol>

|

| 15 |

-

<p>Congratulations! You have successfully downloaded and installed B&R Automation Studio 4 on your computer. You can now start creating your own automation projects with this powerful software tool.</p>

|

| 16 |

-

|

| 17 |

-

<p>B&R Automation Studio 4 is based on the IEC 61131-3 standard, which defines five programming languages for automation systems: Ladder Diagram (LD), Function Block Diagram (FBD), Structured Text (ST), Instruction List (IL) and Sequential Function Chart (SFC). You can use any of these languages or combine them to create your software components. You can also use C/C++ or ANSI C for more complex tasks.</p>

|

| 18 |

-

<p>B&R Automation Studio 4 also provides graphical editors for motion control, such as Motion Chart and CAM Editor. These editors allow you to define the motion profiles and trajectories of your servo axes, as well as synchronize them with other axes or events. You can also use the integrated PLCopen motion function blocks to implement standard motion functions, such as homing, positioning, gearing and camming.</p>

|

| 19 |

-

<p></p>

|

| 20 |

-

<p>B&R Automation Studio 4 enables you to simulate your system before deploying it to the hardware. You can use the Simulation Runtime feature to run your software components on your computer and test their functionality and performance. You can also use the Simulation View feature to visualize the behavior of your system in a 3D environment. You can import CAD models of your machine or plant and connect them to your software components. This way, you can verify the kinematics and dynamics of your system and detect any errors or collisions.</p> ddb901b051<br />

|

| 21 |

-

<br />

|

| 22 |

-

<br />

|

spaces/1acneusushi/gradio-2dmoleculeeditor/data/Datem Summit Evolution Crack Para How to Get the Latest Version of the 3D Stereo Software.md

DELETED

|

@@ -1,121 +0,0 @@

|

|

| 1 |

-

|

| 2 |

-

<h1>How to Crack DAT/EM Summit Evolution for Free</h1>

|

| 3 |

-

<p>DAT/EM Summit Evolution is a powerful software that allows you to discover and capture 3D information from stereo data. The software includes CAD and GIS interfaces, 3D stereo vector superimposition, automated feature editing, contour generation, and many more tools. It is used by professionals in various fields such as mapping, surveying, engineering, geology, forestry, archaeology, etc.</p>

|

| 4 |

-

<p>However, DAT/EM Summit Evolution is not a cheap software. Depending on the product level and the modules you need, it can cost you thousands of dollars. That's why some people may want to crack it and use it for free. Cracking is the process of modifying or bypassing the protection mechanisms of a software to make it work without a license or a dongle.</p>

|

| 5 |

-

<h2>datem summit evolution crack para</h2><br /><p><b><b>DOWNLOAD</b> ►►►►► <a href="https://byltly.com/2uKvQF">https://byltly.com/2uKvQF</a></b></p><br /><br />

|

| 6 |

-

<p>But cracking DAT/EM Summit Evolution is not an easy task. It requires advanced skills in reverse engineering, programming, debugging, etc. It also involves many risks and challenges such as legal issues, malware infections, compatibility problems, functionality limitations, etc. On the other hand, using a cracked version of DAT/EM Summit Evolution can also have some benefits such as saving money, testing the software before buying it, accessing features that are not available in your product level, etc.</p>

|

| 7 |

-

<p>In this article, we will show you how to find and download a crack for DAT/EM Summit Evolution, how to use a cracked version of the software, and what are the pros and cons of doing so. We will also provide some alternatives and recommendations for legal and ethical use of the software. Please note that this article is for educational purposes only and we do not condone or encourage piracy or illegal use of any software.</p>

|

| 8 |

-

<h2>How to Find and Download a Crack for Summit Evolution</h2>

|

| 9 |

-

<p>The first step to crack DAT/EM Summit Evolution is to find and download a crack for it. A crack is usually a file or a program that modifies or replaces some parts of the original software to make it work without a license or a dongle. There are many websites that offer cracks for various software online, but not all of them are trustworthy or reliable.</p>

|

| 10 |

-

<p>Some websites may try to scam you by asking you to pay money or provide personal information before downloading a crack. Some websites may infect your computer with malware or viruses that can harm your system or steal your data. Some websites may provide fake or outdated cracks that do not work or cause errors.</p>

|

| 11 |

-

<p>Therefore, you need to be careful and cautious when looking for cracks online. Here are some tips on how to avoid scams and malware when searching for cracks:</p>

|

| 12 |

-

<ul>

|

| 13 |

-

<li>Use a reputable search engine such as Google or Bing to find cracks.</li>

|

| 14 |

-

<li>Use keywords such as "DAT/EM Summit Evolution crack", "DAT/EM Summit Evolution dongle emulator", "DAT/EM Summit Evolution keygen", etc.</li>

|

| 15 |

-

<li>Check the domain name, URL, and design of the website. Avoid websites that have suspicious or unfamiliar domain names or URLs such as .ru, .cn, .tk, .biz, etc. Avoid websites that have poor design or layout such as broken links, pop-ups, ads, etc.</li>

|

| 16 |

-

<li>Read the comments, reviews, ratings, feedbacks, etc. of other users who have downloaded or used the crack. Avoid websites that have negative or no comments at all.</li>

|

| 17 |

-

<li>Scan the crack file or program with an antivirus or anti-malware software before downloading or opening it. Avoid files or programs that have suspicious extensions such as .exe, .bat, .com, .scr, etc.</li>

|

| 18 |

-

<li>Backup your important data before installing or running a crack on your computer.</li>

|

| 19 |

-

</ul>

|

| 20 |

-

<p>One example of a website that claims to provide a crack for DAT/EM Summit Evolution is Brain Studio (https://www.brstudio.com/wf/news/summit-evolution-dongle-emulator.html). According to this website, they offer a Sentinel SuperPro/UltraPro Dongle Emulator that can emulate the dongle protection of DAT/EM Summit Evolution v6.3 - v8.0. They also claim that their emulator can include all possible modules of the software.</p>

|

| 21 |

-

<p>We cannot verify the authenticity or safety of this website or their crack. Therefore, we advise you to use it at your own risk and discretion. If you decide to download their crack, you need to follow their instructions on how to install and run it on your computer.</p>

|

| 22 |

-

<h2>How to Use a Cracked Version of Summit Evolution</h2>

|

| 23 |

-

<p>The second step to crack DAT/EM Summit Evolution is to use a cracked version of the software. A cracked version of DAT/EM Summit Evolution is a modified version of the original software that works without a license or a dongle. Depending on the type and quality of the crack you have downloaded, you may be able to access different features and modules of the software.</p>

|

| 24 |

-

<p>datem summit evolution dongle emulator<br />

|

| 25 |

-

datem summit evolution stereo data capture<br />

|

| 26 |

-

datem summit evolution professional edition<br />

|

| 27 |

-

datem summit evolution orthorectification tools<br />

|

| 28 |

-

datem summit evolution 3d vector superimposition<br />

|

| 29 |

-

datem summit evolution contour generation features<br />

|

| 30 |

-

datem summit evolution v8.0 x64 bit download<br />

|

| 31 |

-

datem summit evolution v7.6 patch update<br />

|

| 32 |

-

datem summit evolution v7.4 sentinel superpro<br />

|

| 33 |

-

datem summit evolution v6.3 user manual<br />

|

| 34 |

-

datem summit evolution lite edition free trial<br />

|

| 35 |

-

datem summit evolution mobile edition for field work<br />

|

| 36 |

-

datem summit evolution uas edition for drone imagery<br />

|

| 37 |

-

datem summit evolution point cloud application<br />

|

| 38 |

-

datem summit evolution sample data elevation model<br />

|

| 39 |

-

datem summit evolution propack bundle offer<br />

|

| 40 |

-

datem summit evolution cad and gis interfaces<br />

|

| 41 |

-

datem summit evolution automated feature editing<br />

|

| 42 |

-

datem summit evolution terrain visualization options<br />

|

| 43 |

-

datem summit evolution model generator tutorial<br />

|

| 44 |

-

datem summit evolution stereo viewer operation guide<br />

|

| 45 |

-

datem summit evolution capture interface for autocad<br />

|

| 46 |

-

datem summit evolution superimposition for microstation<br />

|

| 47 |

-

datem summit evolution arcgis integration tips<br />

|

| 48 |

-

datem summit evolution global mapper compatibility<br />

|

| 49 |

-

datem summit evolution 3d information discovery<br />

|

| 50 |

-

datem summit evolution feature collection level<br />

|

| 51 |

-

datem summit evolution orientation measurement module<br />

|

| 52 |

-

datem summit evolution feature verification process<br />

|

| 53 |

-

datem summit evolution release notes and brochures<br />

|

| 54 |

-

datem summit evolution help and troubleshooting support<br />

|

| 55 |

-

datem summit evolution drivers and manuals download<br />

|

| 56 |

-

datem summit evolution license activation code<br />

|

| 57 |

-

datem summit evolution system requirements and specifications<br />

|

| 58 |

-

datem summit evolution customer reviews and testimonials<br />

|

| 59 |

-

datem summit evolution product comparison and pricing<br />

|

| 60 |

-

datem summit evolution training and certification courses<br />

|

| 61 |

-

datem summit evolution online demo and webinar registration<br />

|

| 62 |

-

datem summit evolution case studies and success stories<br />

|

| 63 |

-

datem summit evolution news and events updates</p>

|

| 64 |

-

<p>DAT/EM Summit Evolution is available in five product levels: Professional, Feature Collection, Lite, Mobile, and UAS. Each product level has different capabilities and functionalities depending on your needs and preferences.</p>

|

| 65 |

-

<table>

|

| 66 |

-

<tr><th>Product Level</th><th>Description</th></tr>

|

| 67 |

-

<tr><td>Professional</td><td>The most comprehensive product level that includes orientation measurement, orthorectification, terrain visualization, contour generation, point translation, DTM collection, and more.</td></tr>

|

| 68 |

-

<tr><td>Feature Collection</td><td>A product level that focuses on feature collection from stereo data using CAD and GIS interfaces. It does not include orientation measurement, orthorectification, or terrain visualization.</td></tr>

|

| 69 |

-

<tr><td>Lite</td><td>A product level that provides 3D stereo viewing capabilities for resource specialists, GIS technicians, and QA professionals. It does not include feature collection tools.</td></tr>

|

| 70 |

-

<tr><td>Mobile</td><td>A product level that optimizes 3D stereo viewing capabilities for field applications using laptops or tablets. It also works on desktop computers.</td></tr>

|

| 71 |

-

<tr><td>UAS</td><td>A product level that specializes in 3D viewing and simple 3D digitizing from UAS orthophotos. It does not include orientation measurement, orthorectification, or terrain visualization.</td></tr>

|

| 72 |

-

</table>

|

| 73 |

-

<p>If you have downloaded a crack that can include all possible modules of DAT/EM Summit Evolution, you may be able to use any product level you want. However, if you have downloaded a crack that only works for a specific product level, you may be limited by its features and functions.</p>

|

| 74 |

-

<p>To use a cracked version of DAT/EM Summit Evolution, you need to follow these steps:</p>

|

| 75 |

-

<ol>

|

| 76 |

-

<li>Launch the crack file or program on your computer. This may require administrator privileges or password depending on your system settings.</li>

|

| 77 |

-

<li>Select the product level and modules you want to use from the crack interface. This may vary depending on the type and quality of the crack you have downloaded.</li>

|

| 78 |

-

<li>Launch DAT/EM Summit Evolution from your desktop shortcut or start menu. The software should start without asking for a license or dongle verification.</li>

|

| 79 |

-

<li>Access and manipulate stereo data from various sources such as aerial photos, satellite images, lidar data, etc. You can use various tools such as Capture™ interface, DAT/EM SuperImposition™, Summit Model Generator™, etc. to digitize features directly into AutoCAD®, MicroStation®, ArcGIS®, or Global Mapper®.</li>

|

| 80 |

-

<p>Summit Evolution Feature Collection is a product level that focuses on feature collection from stereo data using CAD and GIS interfaces. It does not include orientation measurement, orthorectification, or terrain visualization.</p>

|

| 81 |

-

<p>Summit Evolution Lite is a product level that provides 3D stereo viewing capabilities for resource specialists, GIS technicians, and QA professionals. It does not include feature collection tools.</p>

|

| 82 |

-

<p>Summit Evolution Mobile is a product level that optimizes 3D stereo viewing capabilities for field applications using laptops or tablets. It also works on desktop computers.</p>

|

| 83 |

-

<p>Summit Evolution UAS is a product level that specializes in 3D viewing and simple 3D digitizing from UAS orthophotos. It does not include orientation measurement, orthorectification, or terrain visualization.</p>

|

| 84 |

-

<li><b>How does Summit Evolution compare to other stereo photogrammetry software?</b></li>

|

| 85 |

-

<p>Summit Evolution is one of the leading stereo photogrammetry software in the market. It has many advantages over other software such as:</p>

|

| 86 |

-

<ul>

|

| 87 |

-

<li>It supports a wide range of stereo data sources such as aerial photos, satellite images, lidar data, etc.</li>

|

| 88 |

-

<li>It integrates seamlessly with popular CAD and GIS applications such as AutoCAD®, MicroStation®, ArcGIS®, or Global Mapper®.</li>

|

| 89 |

-

<li>It offers various tools for 3D stereo vector superimposition, automated feature editing, contour generation, and more.</li>

|

| 90 |

-

<li>It has a user-friendly interface and a customizable keypad that enhance the workflow and productivity.</li>

|

| 91 |

-

<li>It has a high-quality technical support team that provides assistance and guidance to the users.</li>

|

| 92 |

-

</ul>

|

| 93 |

-

<p>However, Summit Evolution also has some disadvantages compared to other software such as:</p>

|

| 94 |

-

<ul>

|

| 95 |

-

<li>It is expensive and requires a license or a dongle to run.</li>

|

| 96 |

-

<li>It may not be compatible with some operating systems or hardware configurations.</li>

|

| 97 |

-

<li>It may have some bugs or errors that affect its performance or functionality.</li>

|

| 98 |

-

</ul>

|

| 99 |

-

<li><b>What are the system requirements for running Summit Evolution?</b></li>

|

| 100 |

-

<p>The system requirements for running Summit Evolution vary depending on the product level and modules you use. However, the minimum system requirements for running any product level of Summit Evolution are:</p>

|

| 101 |

-

<ul>

|

| 102 |

-

<li>A Windows 10 operating system (64-bit).</li>

|

| 103 |

-

<li>A quad-core processor with a speed of 2.5 GHz or higher.</li>

|

| 104 |

-

<li>A RAM memory of 8 GB or higher.</li>

|

| 105 |

-

<li>A graphics card with a dedicated memory of 2 GB or higher.</li>

|

| 106 |

-

<li>A monitor with a resolution of 1920 x 1080 pixels or higher.</li>

|

| 107 |

-

<li>A mouse with a scroll wheel and at least three buttons.</li>

|

| 108 |

-

<li>A DAT/EM Keypad (optional but recommended).</li>

|

| 109 |

-

</ul>

|

| 110 |

-

<li><b>How can I get technical support for Summit Evolution?</b></li>

|

| 111 |

-

<p>If you have any questions or issues with Summit Evolution, you can contact the technical support team of DAT/EM Systems International by:</p>

|

| 112 |

-

<ul>

|

| 113 |

-

<li>Emailing them at [email protected]</li>

|

| 114 |

-

<li>Calling them at +1 (907) 522-3681</li>

|

| 115 |

-

<li>Filling out an online form at https://www.datem.com/support/</li>

|

| 116 |

-

</ul>

|

| 117 |

-

<li><b>Where can I learn more about Summit Evolution and its applications?</b></li>

|

| 118 |

-

<p>If you want to learn more about Summit Evolution and its applications, you can visit the official website of DAT/EM Systems International at https://www.datem.com/. There you can find more information about the software features, product levels, modules, pricing, etc. You can also download the official documentation, tutorials, webinars, etc. that can help you understand and use the software better.</p>

|

| 119 |

-

</p> 0a6ba089eb<br />

|

| 120 |

-

<br />

|

| 121 |

-

<br />

|

spaces/1gistliPinn/ChatGPT4/Examples/Autodesk Revit 2018 Crack WORK Keygen XForce Free Download.md

DELETED

|

@@ -1,6 +0,0 @@

|

|

| 1 |

-

<h2>Autodesk Revit 2018 Crack Keygen XForce Free Download</h2><br /><p><b><b>Download Zip</b> >>>>> <a href="https://imgfil.com/2uxYIB">https://imgfil.com/2uxYIB</a></b></p><br /><br />

|

| 2 |

-

<br />

|

| 3 |

-

3cee63e6c2<br />

|

| 4 |

-

<br />

|

| 5 |

-

<br />

|

| 6 |

-

<p></p>

|

spaces/1gistliPinn/ChatGPT4/Examples/Fireflies Movie English Subtitles Download !!LINK!! Torrent.md

DELETED

|

@@ -1,22 +0,0 @@

|

|

| 1 |

-

|

| 2 |

-

<h1>How to Watch Fireflies Movie with English Subtitles Online</h1>

|

| 3 |

-

<p>Fireflies is a 2022 animated film directed by Hayao Miyazaki and produced by Studio Ghibli. It tells the story of a young boy who befriends a mysterious girl who can communicate with fireflies. The film has received critical acclaim and has been nominated for several awards, including the Academy Award for Best Animated Feature.</p>

|

| 4 |

-

<h2>Fireflies Movie English Subtitles Download Torrent</h2><br /><p><b><b>Download Zip</b> ☆☆☆ <a href="https://imgfil.com/2uy1ve">https://imgfil.com/2uy1ve</a></b></p><br /><br />

|

| 5 |

-

<p>If you want to watch Fireflies movie with English subtitles online, you have a few options. One of them is to download the torrent file from a reliable source and use a torrent client to stream or download the movie. However, this method may be illegal in some countries and may expose you to malware or viruses. Therefore, we do not recommend this option.</p>

|

| 6 |

-

<p>A safer and more legal way to watch Fireflies movie with English subtitles online is to use a streaming service that offers the film. Some of the streaming services that have Fireflies movie with English subtitles are:</p>

|

| 7 |

-

<ul>

|

| 8 |

-

<li>Netflix: Netflix is a popular streaming platform that has a large library of movies and shows, including many Studio Ghibli films. You can watch Fireflies movie with English subtitles on Netflix with a subscription plan that starts from $8.99 per month.</li>

|

| 9 |

-

<li>Hulu: Hulu is another streaming service that has a variety of content, including anime and animation. You can watch Fireflies movie with English subtitles on Hulu with a subscription plan that starts from $5.99 per month.</li>

|

| 10 |

-

<li>Amazon Prime Video: Amazon Prime Video is a streaming service that is part of the Amazon Prime membership. You can watch Fireflies movie with English subtitles on Amazon Prime Video with a Prime membership that costs $12.99 per month or $119 per year.</li>

|

| 11 |

-

</ul>

|

| 12 |

-

<p>These are some of the best ways to watch Fireflies movie with English subtitles online. We hope you enjoy this beautiful and touching film.</p>

|

| 13 |

-

<p></p>

|

| 14 |

-

|

| 15 |

-

<p>If you are looking for a more in-depth analysis of Fireflies movie, you may want to read some of the reviews that have been written by critics and fans. One of the reviews that we found helpful is from The Hollywood Reporter, which praises the film's visuals and themes. According to the review[^1^], Fireflies does a good job of rendering port locations that are vast and unfriendly by day and depopulated and ghostly by night, both moods being entirely appropriate. The review also notes that the film explores the themes of exile, identity, and belonging with sensitivity and nuance.</p>

|

| 16 |

-

<p>Fireflies movie is a masterpiece of animation that will touch your heart and make you think. Whether you watch it online or in a theater, you will not regret spending your time on this film. We hope you enjoy Fireflies movie with English subtitles as much as we did.</p>

|

| 17 |

-

|

| 18 |

-

<p>Fireflies movie also boasts an impressive cast of voice actors who bring the characters to life. The film features the voices of Ryan Reynolds, Willem Dafoe, Emily Watson, Carrie-Anne Moss, Julia Roberts, Ioan Gruffudd and Kate Mara[^1^]. They deliver emotional and nuanced performances that capture the personalities and struggles of their roles.</p>

|

| 19 |

-

<p>Another aspect of Fireflies movie that deserves praise is the music. The film features a beautiful and haunting score composed by Joe Hisaishi, who has collaborated with Hayao Miyazaki on many of his previous films. The music enhances the mood and atmosphere of the film, creating a sense of wonder and melancholy. The film also features a song by Yoko Ono, who wrote it specifically for Fireflies movie.</p>

|

| 20 |

-

<p>Fireflies movie is a rare gem of animation that will stay with you long after you watch it. It is a film that celebrates the power of imagination, friendship and love in the face of adversity. It is a film that challenges you to think about the meaning of life and the value of human connection. It is a film that will make you laugh, cry and smile.</p> d5da3c52bf<br />

|

| 21 |

-

<br />

|

| 22 |

-

<br />

|

spaces/1phancelerku/anime-remove-background/Download Free and Unlimited Android Mods with APKMODEL.md

DELETED

|

@@ -1,75 +0,0 @@

|

|

| 1 |

-

|

| 2 |

-

<h1>APKMODEL: The Ultimate Source for Modded Games and Apps for Android</h1>

|

| 3 |

-

<p>If you are an Android user who loves playing games and using apps on your device, you might have heard of apkmodel. But what is apkmodel and why should you use it? In this article, we will answer these questions and show you how apkmodel can enhance your gaming and app experience.</p>

|

| 4 |

-

<h2>What is APKMODEL?</h2>

|

| 5 |

-

<h3>APKMODEL is a website that offers modded games and apps for Android devices.</h3>

|

| 6 |

-

<p>Modded games and apps are modified versions of the original ones that have extra features, unlocked content, unlimited resources, or other enhancements. For example, you can play a modded version of Subway Surfers with unlimited coins and keys, or a modded version of Spotify with premium features for free.</p>

|

| 7 |

-

<h2>apkmodel</h2><br /><p><b><b>Download</b> === <a href="https://jinyurl.com/2uNKDW">https://jinyurl.com/2uNKDW</a></b></p><br /><br />

|

| 8 |

-

<h3>Modded games and apps are not available on the official Google Play Store, but you can download them from apkmodel.</h3>

|

| 9 |

-

<p>Apkmodel is a website that hosts thousands of modded games and apps from various categories and genres, such as action, adventure, arcade, puzzle, simulation, sports, music, photography, social media, and more. You can find popular titles like Minecraft, Clash of Clans, Candy Crush Saga, TikTok, Instagram, Netflix, and many others on apkmodel.</p>

|

| 10 |

-

<h2>Why use APKMODEL?</h2>

|

| 11 |

-

<h3>APKMODEL has many benefits for Android users who want to enjoy their favorite games and apps without any limitations or restrictions.</h3>

|

| 12 |

-

<h4>APKMODEL provides a large collection of modded games and apps from various categories and genres.</h4>

|

| 13 |

-

<p>Whether you are looking for a game to kill some time, an app to enhance your productivity, or a tool to customize your device, you can find it on apkmodel. You can also discover new games and apps that you might not have heard of before.</p>

|

| 14 |

-

<h4>APKMODEL updates its content regularly and ensures that the mods are safe, tested, and working.</h4>

|

| 15 |

-

<p>Apkmodel keeps up with the latest trends and releases in the gaming and app industry and adds new mods every day. You can also request mods that are not available on the website and they will try to provide them as soon as possible. Moreover, apkmodel checks all the mods for viruses, malware, and compatibility issues before uploading them to the website.</p>

|

| 16 |

-

<h4>APKMODEL has a user-friendly interface and easy download process.</h4>

|

| 17 |

-

<p>Apkmodel has a simple and intuitive design that makes it easy to navigate and find what you are looking for. You can also use the search bar or filter by category to narrow down your options. To download a modded game or app, you just need to click on the download button and wait for the file to be downloaded to your device. You don't need to sign up, log in, or provide any personal information.</p>

|

| 18 |

-

<p>apkmodel modded games<br />

|

| 19 |

-

apkmodel android apps<br />

|

| 20 |

-

apkmodel free download<br />

|

| 21 |

-

apkmodel latest version<br />

|

| 22 |

-

apkmodel premium apk<br />

|

| 23 |

-

apkmodel mod menu<br />

|

| 24 |

-

apkmodel unlimited money<br />

|

| 25 |

-

apkmodel pro apk<br />

|

| 26 |

-

apkmodel hacked games<br />

|

| 27 |

-

apkmodel cracked apps<br />

|

| 28 |

-

apkmodel online games<br />

|

| 29 |

-

apkmodel offline games<br />

|

| 30 |

-

apkmodel action games<br />

|

| 31 |

-

apkmodel adventure games<br />

|

| 32 |

-

apkmodel arcade games<br />

|

| 33 |

-

apkmodel casual games<br />

|

| 34 |

-

apkmodel puzzle games<br />

|

| 35 |

-

apkmodel racing games<br />

|

| 36 |

-

apkmodel role playing games<br />

|

| 37 |

-

apkmodel simulation games<br />

|

| 38 |

-

apkmodel sports games<br />

|

| 39 |

-

apkmodel strategy games<br />

|

| 40 |

-

apkmodel social apps<br />

|

| 41 |

-

apkmodel entertainment apps<br />

|

| 42 |

-

apkmodel productivity apps<br />

|

| 43 |

-

apkmodel photography apps<br />

|

| 44 |

-

apkmodel video apps<br />

|

| 45 |

-

apkmodel music apps<br />

|

| 46 |

-

apkmodel education apps<br />

|

| 47 |

-

apkmodel health apps<br />

|

| 48 |

-

apkmodel lifestyle apps<br />

|

| 49 |

-

apkmodel shopping apps<br />

|

| 50 |

-

apkmodel travel apps<br />

|

| 51 |

-

apkmodel news apps<br />

|

| 52 |

-

apkmodel books apps<br />

|

| 53 |

-

apkmodel communication apps<br />

|

| 54 |

-

apkmodel finance apps<br />

|

| 55 |

-

apkmodel personalization apps<br />

|

| 56 |

-

apkmodel tools apps<br />

|

| 57 |

-

apkmodel weather apps</p>

|

| 58 |

-

<h4>APKMODEL respects the privacy and security of its users and does not require any registration or personal information.</h4>

|

| 59 |

-

<p>Apkmodel does not collect, store, or share any data from its users. You can use the website anonymously and safely without worrying about your privacy or security. Apkmodel also does not host any ads or pop-ups that might annoy you or harm your device.</p>

|

| 60 |

-

<h2>How to use APKMODEL?</h2>

|

| 61 |

-

<h3>Using APKMODEL is simple and straightforward. Here are the steps to follow:</h3>

|

| 62 |

-

<h4>Step 1: Visit the APKMODEL website and browse through the categories or use the search bar to find the game or app you want.</h4>

|

| 63 |

-

<p>Apkmodel has a well-organized and easy-to-use website that allows you to find your desired modded game or app in no time. You can explore the different categories, such as action, arcade, casual, strategy, role-playing, etc., or use the search bar to type in the name of the game or app you are looking for.</p>

|

| 64 |

-

<h4>Step 2: Click on the download button and wait for the file to be downloaded to your device.</h4>

|

| 65 |

-

<p>Once you have found the modded game or app you want, you can click on the download button and choose the version you prefer. Some mods may have different versions with different features or compatibility options. You can also read the description, features, installation guide, and user reviews of the mod before downloading it. The download process is fast and easy, and you don't need to go through any surveys or verification steps.</p>

|

| 66 |

-

<h4>Step 3: Install the modded game or app by enabling the unknown sources option in your settings.</h4>

|

| 67 |

-

<p>After downloading the modded game or app, you need to install it on your device. To do that, you need to enable the unknown sources option in your settings. This option allows you to install apps from sources other than the Google Play Store. To enable it, go to Settings > Security > Unknown Sources and toggle it on. Then, locate the downloaded file in your file manager and tap on it to install it.</p>

|

| 68 |

-

<h4>Step 4: Enjoy your modded game or app with all the features and benefits.</h4>

|

| 69 |

-

<p>Now you are ready to enjoy your modded game or app with all the features and benefits that it offers. You can play unlimited levels, unlock premium content, get unlimited resources, remove ads, and more. You can also update your modded game or app whenever a new version is available on apkmodel.</p>

|

| 70 |

-

<h2>Conclusion</h2>

|

| 71 |

-

<h3>APKMODEL is a great source for modded games and apps for Android users who want to have more fun and convenience with their devices.</h3>

|

| 72 |

-

<p>Apkmodel is a website that provides thousands of modded games and apps for Android devices that have extra features, unlocked content, unlimited resources, or other enhancements. Apkmodel has many benefits for Android users, such as a large collection of mods from various categories and genres, regular updates, safe and tested mods, user-friendly interface, easy download process, privacy and security protection, and no ads or pop-ups. Using apkmodel is simple and straightforward; you just need to visit the website, find the modded game or app you want, download it, install it, and enjoy it. Apkmodel is the ultimate source for modded games and apps for Android users who want to have more fun and convenience with their devices.</p>

|

| 73 |

-

FAQs Q: Is apkmodel legal? A: Apkmodel is legal as long as you use it for personal and educational purposes only. However, some modded games and apps may violate the terms and conditions of the original developers or publishers. Therefore, we advise you to use apkmodel at your own risk and discretion. Q: Is apkmodel safe? A: Apkmodel is safe as long as you download mods from its official website only. Apkmodel checks all the mods for viruses, malware, and compatibility issues before uploading them to the website. However, some mods may require additional permissions or access to your device's functions or data. Therefore, we advise you to read the description, features, installation guide, and user reviews of the mod before downloading it. Q: How can I request a mod that is not available on apkmodel? A: Apkmodel welcomes requests from its users for mods that are not available on its website. You can request a mod by filling out a form on its website or by contacting its support team via email or social media. Q: How can I update my modded game or app? A: Apkmodel updates its mods regularly and notifies its users whenever a new version is available. You can update your modded game or app by downloading the latest version from apkmodel and installing it over the previous one. You can also check the update history and changelog of the mod on its website. Q: How can I uninstall my modded game or app? A: You can uninstall your modded game or app by following the same steps as you would for any other app on your device. Go to Settings > Apps > Select the modded game or app > Uninstall. You can also delete the downloaded file from your file manager. Q: How can I contact apkmodel or give feedback? A: Apkmodel values the opinions and suggestions of its users and welcomes any feedback or questions. You can contact apkmodel or give feedback by using the contact form on its website or by emailing them at [email protected]. 
You can also follow them on Facebook, Twitter, Instagram, and YouTube for the latest news and updates.</p> 401be4b1e0<br />

|

| 74 |

-

<br />

|

| 75 |

-

<br />


spaces/1phancelerku/anime-remove-background/Download Nada Dering WA Tiktok Suara Google BTS Chagiya dan Lainnya.md

DELETED

|

@@ -1,76 +0,0 @@

|

|

| 1 |

-

|

| 2 |

-

<h1>How to Download and Use TikTok Sounds as WhatsApp Notifications</h1>

|

| 3 |

-

<p>TikTok is a popular social media app that allows users to create and share short videos with various effects and sounds. WhatsApp is a widely used messaging app that lets users send text, voice, image, video, and audio messages. If you are a fan of both apps, you might want to use some of the catchy or funny sounds from TikTok as your WhatsApp notifications. This way, you can spice up your chats and calls with your friends and family.</p>

|

| 4 |

-

<p>In this article, we will show you how to download and use TikTok sounds as WhatsApp notifications in a few simple steps. You will need a smartphone, an internet connection, a TikTok downloader website, and of course, both TikTok and WhatsApp apps installed on your phone.</p>

|

| 5 |

-

<h2>download notifikasi wa tiktok</h2><br /><p><b><b>DOWNLOAD</b> ->>> <a href="https://jinyurl.com/2uNMAd">https://jinyurl.com/2uNMAd</a></b></p><br /><br />

|

| 6 |

-

<h2>How to Download TikTok Sounds</h2>

|

| 7 |

-

<h3>Find and Copy the Link of the TikTok Video</h3>

|

| 8 |

-

<p>The first step is to find a TikTok video that has a sound that you like and want to use as your WhatsApp notification. You can browse through different categories, hashtags, or trends on TikTok, or search for specific keywords or users. Once you find a video that you like, tap on the share icon at the bottom right corner of the screen. Then, tap on Copy link to copy the link of the video to your clipboard.</p>

|

| 9 |

-

<h3>Paste the Link into a TikTok Downloader Website</h3>

|

| 10 |

-

<p>The next step is to use a TikTok downloader website to download the video as an MP3 file. There are many websites that offer this service for free, such as <a href="(^1^)">TiktokDownloader</a>, <a href="(^2^)">MusicallyDown</a>, or <a href="(^3^)">SnapTik</a>. All you have to do is paste the link of the video that you copied into the input box on these websites and click on Download. Then, choose Download MP3 from the options that appear.</p>

|

| 11 |

-

<h3>Save the MP3 File to Your Phone</h3>

|

| 12 |

-

<p>The final step is to save the downloaded MP3 file to your phone's storage. Depending on your browser settings, you might be asked where you want to save the file or it might be saved automatically in your Downloads folder. You can also rename the file if you want.</p>

|

| 13 |

-

<h2>How to Use TikTok Sounds as WhatsApp Notifications</h2>

|

| 14 |

-

<h3>Move the MP3 File to the Ringtones Folder</h3>

|

| 15 |

-

<p>Before you can use the TikTok sound as your WhatsApp notification, you need to move it to the Ringtones folder on your phone so that it can be used as a notification sound. To do this, you can use a file manager app on your phone, such as <a href="">Files by Google</a>, <a href="">ES File Explorer</a>, or <a href="">File Manager</a>. Open the app and locate the MP3 file that you downloaded. Then, long-press on the file and select Move or Cut. Navigate to the Ringtones folder on your phone, which is usually under Internal storage > Ringtones. Then, tap on Paste or Move here to move the file to the Ringtones folder.</p>

|

| 16 |

-

<h3>Open WhatsApp and Go to Settings</h3>

|

| 17 |

-

<p>Now that you have moved the TikTok sound to the Ringtones folder, you can use it as your WhatsApp notification. To do this, open WhatsApp and tap on the three dots icon at the top right corner of the screen. Then, tap on Settings from the menu that appears. This will open the Settings menu of WhatsApp.</p>

|

| 18 |

-

<p>Download nada dering wa tiktok viral<br />

|

| 19 |

-

Cara download sound tiktok ke wa jadi nada dering lucu<br />

|

| 20 |

-

Download notifikasi wa chagiya tiktok viral lucu dan imut<br />

|

| 21 |

-

Download kumpulan nada dering wa pendek dari tiktok<br />

|

| 22 |

-

Download nada dering wa bts dari lagu-lagu tiktok<br />

|

| 23 |

-

Download nada dering wa suara google dari tiktok<br />

|

| 24 |

-

Download nada dering wa doraemon baling-baling bambu dari tiktok<br />

|

| 25 |

-

Download nada dering wa ayam dj lucu jawa dari tiktok<br />

|

| 26 |

-

Download nada dering wa minion beatbox dari tiktok<br />

|

| 27 |

-

Download nada dering wa lel funny dari tiktok<br />

|

| 28 |

-

Download nada dering wa bahasa sunda dari tiktok<br />

|

| 29 |

-

Download nada dering wa bahasa jawa dari tiktok<br />

|

| 30 |

-

Download nada dering wa hihi hahah dari tiktok<br />

|

| 31 |

-

Download nada dering wa intro dari tiktok<br />

|

| 32 |

-

Download nada dering wa suara air jatuh dari tiktok<br />

|

| 33 |

-

Download nada dering wa ketuk pintu dari tiktok<br />

|

| 34 |

-

Download nada dering wa lucu super mario dari tiktok<br />

|

| 35 |

-

Download nada dering wa lucu orang batuk dari tiktok<br />

|

| 36 |

-

Download nada dering wa sahur suara google dari tiktok<br />

|

| 37 |

-

Download nada dering wa nani ohayo yang viral di tiktok<br />

|

| 38 |

-

Download nada dering wa dynamite bts yang viral di tiktok<br />

|

| 39 |

-

Download nada dering wa morning call bts yang viral di tiktok<br />

|

| 40 |

-

Download nada dering wa jungkook bts yang viral di tiktok<br />

|

| 41 |

-

Download nada dering wa v bts yang viral di tiktok<br />

|

| 42 |

-

Download nada dering wa jimin bts yang viral di tiktok<br />

|

| 43 |

-

Download nada dering wa rm bts yang viral di tiktok<br />

|

| 44 |

-

Download nada dering wa jin bts yang viral di tiktok<br />

|

| 45 |

-

Download nada dering wa suga bts yang viral di tiktok<br />

|

| 46 |

-

Download nada dering wa j-hope bts yang viral di tiktok<br />

|

| 47 |

-

Download nada dering wa korea imut yang viral di tiktok<br />

|

| 48 |

-

Download nada dering wa mobile legends yang viral di tiktok<br />

|

| 49 |

-

Download nada dering wa harvest moon yang viral di tiktok<br />

|

| 50 |

-

Download nada dering wa kata sayang yang viral di tiktok<br />

|

| 51 |

-

Download nada dering wa 1 detik yang viral di tiktok<br />

|

| 52 |

-

Cara membuat notifikasi wa pakai suara sendiri dari tiktok<br />

|

| 53 |

-

Cara mengganti notifikasi wa dengan mp3 dari tiktok<br />

|

| 54 |

-

Cara download notifikasi wa di jalantikus dari tiktok<br />

|

| 55 |

-

Aplikasi download notifikasi wa terbaik dari tiktok<br />

|

| 56 |

-

Kumpulan ringtone wa terbaik lainnya dari tiktok<br />

|

| 57 |

-

Tips memilih notifikasi wa yang sesuai dengan kepribadian dari tiktok</p>

|

| 58 |

-

<h3>Choose the Notification Sound that You Want to Change</h3>

|

| 59 |

-

<p>In the Settings menu, tap on Notifications to access the notification settings of WhatsApp. Here, you can choose between message, call, or group notifications and customize them according to your preferences. For example, if you want to change the notification sound for messages, tap on Notification tone under Message notifications. This will open a list of available notification tones on your phone.</p>

|

| 60 |

-

<h3>Select the TikTok Sound from the List</h3>

|

| 61 |

-

<p>In the list of notification tones, scroll down until you find the TikTok sound that you downloaded and moved to the Ringtones folder. It should have the same name as the MP3 file that you saved. Tap on it to select it as your notification tone for messages. You can also preview the sound by tapping on the play icon next to it. Once you are satisfied with your choice, tap on OK to save it.</p>

|

| 62 |

-

<h2>Conclusion</h2>

|

| 63 |

-

<p>Congratulations! You have successfully downloaded and used a TikTok sound as your WhatsApp notification. You can repeat the same steps for any other TikTok sound that you like and use it for different types of notifications on WhatsApp. You can also share your TikTok sounds with your friends and family by sending them the MP3 files or the links of the videos. This way, you can have fun and express yourself with TikTok sounds on WhatsApp.</p>

|

| 64 |

-

<h2>FAQs</h2>

|

| 65 |

-

<h4>Q: Can I use TikTok sounds as my phone's ringtone?</h4>

|

| 66 |

-

<p>A: Yes, you can use TikTok sounds as your phone's ringtone by following the same steps as above, but instead of choosing Notification tone, choose Phone ringtone in the Settings menu of WhatsApp.</p>

|

| 67 |

-

<h4>Q: Can I use TikTok sounds as my alarm sound?</h4>

|

| 68 |

-

<p>A: Yes, you can use TikTok sounds as your alarm sound by following the same steps as above, but instead of moving the MP3 file to the Ringtones folder, move it to the Alarms folder on your phone.</p>

|

| 69 |

-

<h4>Q: How can I delete a TikTok sound from my phone?</h4>

|

| 70 |

-

<p>A: If you want to delete a TikTok sound from your phone, you can use a file manager app to locate and delete the MP3 file from your phone's storage. You can also go to the Settings menu of WhatsApp and choose Reset notification settings to restore the default notification sounds.</p>

|

| 71 |

-

<h4>Q: How can I edit a TikTok sound before using it as my WhatsApp notification?</h4>

|

| 72 |

-

<p>A: If you want to edit a TikTok sound before using it as your WhatsApp notification, you can use an audio editor app on your phone, such as <a href="">MP3 Cutter and Ringtone Maker</a>, <a href="">Ringtone Maker</a>, or <a href="">Audio MP3 Cutter Mix Converter and Ringtone Maker</a>. These apps allow you to trim, cut, merge, mix, or add effects to your audio files.</p>

|

| 73 |

-

<h4>Q: How can I find more TikTok sounds that I like?</h4>

|

| 74 |

-

<p>A: If you want to find more TikTok sounds that you like, you can explore different categories, hashtags, or trends on TikTok, or search for specific keywords or users. You can also follow your favorite creators or celebrities on TikTok and see what sounds they use in their videos.</p> 401be4b1e0<br />

|

| 75 |

-

<br />

|

| 76 |

-

<br />


spaces/ADOPLE/Multi-Doc-Virtual-Chatbot/app.py

DELETED

|

@@ -1,202 +0,0 @@

|

|

| 1 |

-

from pydantic import NoneStr

|

| 2 |

-

import os

|

| 3 |

-

from langchain.chains.question_answering import load_qa_chain

|

| 4 |

-

from langchain.document_loaders import UnstructuredFileLoader

|

| 5 |

-

from langchain.embeddings.openai import OpenAIEmbeddings

|

| 6 |

-

from langchain.llms import OpenAI

|

| 7 |

-

from langchain.text_splitter import CharacterTextSplitter

|

| 8 |

-

from langchain.vectorstores import FAISS

|

| 9 |

-

from langchain.vectorstores import Chroma

|

| 10 |

-

from langchain.chains import ConversationalRetrievalChain

|

| 11 |

-

import gradio as gr

|

| 12 |

-

import openai

|

| 13 |

-

from langchain import PromptTemplate, OpenAI, LLMChain

|

| 14 |

-

import validators

|

| 15 |

-

import requests

|

| 16 |

-

import mimetypes

|

| 17 |

-

import tempfile

|

| 18 |

-

|

| 19 |

-

class Chatbot:

|

| 20 |

-

def __init__(self):

|

| 21 |

-

openai.api_key = os.getenv("OPENAI_API_KEY")

|

| 22 |

-

def get_empty_state(self):

|

| 23 |

-

|

| 24 |

-

""" Create empty Knowledge base"""

|

| 25 |

-

|

| 26 |

-

return {"knowledge_base": None}

|

| 27 |

-

|

| 28 |

-

def create_knowledge_base(self,docs):

|

| 29 |

-

|

| 30 |

-

"""Create a knowledge base from the given documents.

|

| 31 |

-

Args:

|

| 32 |

-

docs (List[Document]): List of loaded LangChain documents.

|

| 33 |

-

Returns:

|

| 34 |

-

Chroma: Knowledge base built from the documents.

|

| 35 |

-

"""

|

| 36 |

-

|

| 37 |

-

# Initialize a CharacterTextSplitter to split the documents into chunks

|

| 38 |

-

# Each chunk has a maximum length of 1000 characters

|

| 39 |

-

# Consecutive chunks overlap by 200 characters

|

| 40 |

-

text_splitter = CharacterTextSplitter(

|

| 41 |

-

separator="\n", chunk_size=1000, chunk_overlap=200, length_function=len

|

| 42 |

-

)

|

| 43 |

-

|

| 44 |

-

# Split the documents into chunks using the text_splitter

|

| 45 |

-

chunks = text_splitter.split_documents(docs)

|

| 46 |

-

|

| 47 |

-

# Initialize an OpenAIEmbeddings model to compute embeddings of the chunks

|

| 48 |

-

embeddings = OpenAIEmbeddings()

|

| 49 |

-

|

| 50 |

-

# Build a knowledge base using Chroma from the chunks and their embeddings

|

| 51 |

-

knowledge_base = Chroma.from_documents(chunks, embeddings)

|

| 52 |

-

|

| 53 |

-

# Return the resulting knowledge base

|

| 54 |

-

return knowledge_base

|

| 55 |

-

|

| 56 |

-

|

| 57 |

-

def upload_file(self,file_paths):

|

| 58 |

-

"""Upload a file and create a knowledge base from its contents.

|

| 59 |

-

Args:

|

| 60 |

-

file_paths: The uploaded files.

|

| 61 |

-

Returns:

|

| 62 |

-

tuple: A tuple containing the file paths and a state dict holding the knowledge base.

|

| 63 |

-

"""

|

| 64 |

-

|

| 65 |

-

file_paths = [i.name for i in file_paths]

|

| 66 |

-

print(file_paths)

|

| 67 |

-

|

| 68 |

-

|

| 69 |

-

loaders = [UnstructuredFileLoader(file_obj, strategy="fast") for file_obj in file_paths]

|

| 70 |

-

|

| 71 |

-

# Load the contents of the file using the loader

|

| 72 |

-

docs = []

|

| 73 |

-

for loader in loaders:

|

| 74 |

-

docs.extend(loader.load())

|

| 75 |

-

|

| 76 |

-

# Create a knowledge base from the loaded documents using the create_knowledge_base() method

|

| 77 |

-

knowledge_base = self.create_knowledge_base(docs)

|

| 78 |

-

|

| 79 |

-

|

| 80 |

-

# Return a tuple containing the file paths and a state dict holding the knowledge base

|

| 81 |

-

return file_paths, {"knowledge_base": knowledge_base}

|

| 82 |

-

|

| 83 |

-

def add_text(self,history, text):

|

| 84 |

-

history = history + [(text, None)]

|

| 85 |

-

print("History for Add text : ",history)

|

| 86 |

-

return history, gr.update(value="", interactive=False)

|

| 87 |

-

|

| 88 |

-

|

| 89 |

-

|

| 90 |

-

def upload_multiple_urls(self,urls):

|

| 91 |

-

urlss = [url.strip() for url in urls.split(',')]

|

| 92 |

-

all_docs = []

|

| 93 |

-

file_paths = []

|

| 94 |

-

for url in urlss:

|

| 95 |

-

if validators.url(url):

|

| 96 |

-

headers = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36',}

|

| 97 |

-

r = requests.get(url,headers=headers)

|

| 98 |

-

if r.status_code != 200:

|

| 99 |

-

raise ValueError("Check the url of your file; returned status code %s" % r.status_code)

|

| 100 |

-

content_type = r.headers.get("content-type")

|

| 101 |

-

file_extension = mimetypes.guess_extension(content_type)

|

| 102 |

-

temp_file = tempfile.NamedTemporaryFile(suffix=file_extension, delete=False)

|

| 103 |

-

temp_file.write(r.content)

|

| 104 |

-

file_path = temp_file.name

|

| 105 |

-

file_paths.append(file_path)

|

| 106 |

-

|

| 107 |

-

loaders = [UnstructuredFileLoader(file_obj, strategy="fast") for file_obj in file_paths]

|

| 108 |

-

|

| 109 |

-

# Load the contents of the file using the loader

|

| 110 |

-

docs = []

|

| 111 |

-

for loader in loaders:

|

| 112 |

-

docs.extend(loader.load())

|

| 113 |

-

|

| 114 |

-

# Create a knowledge base from the loaded documents using the create_knowledge_base() method

|

| 115 |

-

knowledge_base = self.create_knowledge_base(docs)

|

| 116 |

-

|

| 117 |

-

return file_paths,{"knowledge_base":knowledge_base}

|

| 118 |

-

|

| 119 |

-

def answer_question(self, question,history,state):

|

| 120 |

-

"""Answer a question based on the current knowledge base.

|

| 121 |

-

Args:

|

| 122 |

-

state (dict): The current state containing the knowledge base.

|

| 123 |

-

Returns:

|

| 124 |

-

list: The chat history, with the answer filled in for the latest question.

|

| 125 |

-

"""

|

| 126 |

-

|

| 127 |

-

# Retrieve the knowledge base from the state dictionary

|

| 128 |

-

knowledge_base = state["knowledge_base"]

|

| 129 |

-

retriever = knowledge_base.as_retriever()

|

| 130 |

-

qa = ConversationalRetrievalChain.from_llm(

|

| 131 |

-

llm=OpenAI(temperature=0.1),

|

| 132 |

-

retriever=retriever,

|

| 133 |

-

return_source_documents=False)

|

| 134 |

-

# Set the question for which we want to find the answer

|

| 135 |

-

res = []

|

| 136 |

-

question = history[-1][0]

|

| 137 |

-

for human, ai in history[:-1]:

|

| 138 |

-

pair = (human, ai)

|

| 139 |

-

res.append(pair)

|

| 140 |

-

|

| 141 |

-

chat_history = res

|

| 142 |

-

|

| 143 |

-

query = question

|

| 144 |

-

result = qa({"question": query, "chat_history": chat_history})

|

| 145 |

-

# Extract the answer text from the chain result

|

| 146 |

-

response = result["answer"]

|

| 147 |

-

# Return the response as the answer to the question

|

| 148 |

-

history[-1][1] = response

|

| 149 |

-

print("History for QA : ",history)

|

| 150 |

-

return history

|

| 151 |

-

|

| 152 |

-

|

| 153 |

-

def clear_function(self,state):

|

| 154 |

-

state.clear()

|

| 155 |

-

# state = gr.State(self.get_empty_state())

|

| 156 |

-

|

| 157 |

-

def gradio_interface(self):

|

| 158 |

-

|

| 159 |

-

"""Create the Gradio interface for the Virtual Assistant Chatbot."""

|

| 160 |

-

|

| 161 |

-

with gr.Blocks(css="style.css",theme='karthikeyan-adople/hudsonhayes-gray') as demo:

|

| 162 |

-

gr.HTML("""<center class="darkblue" style='background-color:rgb(0,1,36); text-align:center;padding:25px;'>

|

| 163 |

-

<center>

|

| 164 |

-

<h1 class ="center" style="color:#fff">

|

| 165 |

-

ADOPLE AI

|

| 166 |

-

</h1>

|

| 167 |

-

</center>

|

| 168 |

-

<br>

|

| 169 |

-

<h1 style="color:#fff">

|

| 170 |

-

Virtual Assistant Chatbot

|

| 171 |

-

</h1>

|

| 172 |

-

</center>""")

|

| 173 |

-

state = gr.State(self.get_empty_state())

|

| 174 |

-

with gr.Column(elem_id="col-container"):

|

| 175 |

-

with gr.Accordion("Upload Files", open = False):

|

| 176 |

-

with gr.Row(elem_id="row-flex"):

|

| 177 |

-

with gr.Row(elem_id="row-flex"):

|

| 178 |

-

with gr.Column(scale=1,):

|

| 179 |

-

file_url = gr.Textbox(label='file url :',show_label=True, placeholder="")

|

| 180 |

-

with gr.Row(elem_id="row-flex"):

|

| 181 |

-

with gr.Column(scale=1):

|

| 182 |

-

file_output = gr.File()

|

| 183 |

-

with gr.Column(scale=1):

|

| 184 |

-

upload_button = gr.UploadButton("Browse File", file_types=[".txt", ".pdf", ".doc", ".docx"],file_count = "multiple")

|

| 185 |

-

with gr.Row():

|

| 186 |

-

chatbot = gr.Chatbot([], elem_id="chatbot")

|

| 187 |

-

with gr.Row():

|

| 188 |

-

txt = gr.Textbox(label = "Question",show_label=True,placeholder="Enter text and press Enter")

|

| 189 |

-

with gr.Row():

|

| 190 |

-

clear_btn = gr.Button(value="Clear")

|

| 191 |

-

|

| 192 |

-

txt_msg = txt.submit(self.add_text, [chatbot, txt], [chatbot, txt], queue=False).then(self.answer_question, [txt, chatbot, state], chatbot)

|

| 193 |

-

txt_msg.then(lambda: gr.update(interactive=True), None, [txt], queue=False)

|

| 194 |

-

file_url.submit(self.upload_multiple_urls, file_url, [file_output, state])

|

| 195 |

-

clear_btn.click(self.clear_function,[state],[])

|

| 196 |

-

clear_btn.click(lambda: None, None, chatbot, queue=False)

|

| 197 |

-

upload_button.upload(self.upload_file, upload_button, [file_output,state])

|

| 198 |

-

demo.queue().launch(debug=True)

|

| 199 |

-

|

| 200 |

-

if __name__=="__main__":

|

| 201 |

-

chatbot = Chatbot()

|

| 202 |

-

chatbot.gradio_interface()
