The 2017 Brawl Stars APK is the beta version of the game that was released in a few countries before the global launch in 2018. The 2017 version has some differences from the current version, such as fewer Brawlers, game modes, skins, maps, features, and updates. The 2017 version also has some bugs and glitches that may affect your gameplay experience.

-It depends on where you download the APK file from. Some websites may offer fake or malicious APK files that can harm your device or steal your personal information. You should only download APK files from trusted sources that have positive reviews and ratings from other users. You should also scan the APK file for viruses before installing it on your device.

-No, you will not get banned for using the 2017 Brawl Stars APK as long as you do not use any cheats, hacks, mods, or third-party tools that give you an unfair advantage over other players. However, you may not be able to access some features or events that are exclusive to the current version of the game.

-No, you cannot play with friends who have the current version of the game, because the two versions run on different servers. You can only play with other players who have the same version of the game as you.

-No, you cannot update the 2017 Brawl Stars APK to the current version of the game. You will need to uninstall the 2017 Brawl Stars APK and download the current version of the game from the Google Play Store or another reliable source.


-

\ No newline at end of file diff --git a/spaces/1phancelerku/anime-remove-background/Apkfew Whatsapp Tracker Free APK Download - Track Online Activity and Chat History.md b/spaces/1phancelerku/anime-remove-background/Apkfew Whatsapp Tracker Free APK Download - Track Online Activity and Chat History.md deleted file mode 100644 index 795d826dbf72fa1b4ee33eedb26683bdf5ddda67..0000000000000000000000000000000000000000 --- a/spaces/1phancelerku/anime-remove-background/Apkfew Whatsapp Tracker Free APK Download - Track Online Activity and Chat History.md +++ /dev/null @@ -1,149 +0,0 @@ -

-

How to Download Apkfew Whatsapp Tracker and Why You Need It

-Do you want to track the online activity and chat history of any WhatsApp user? Do you want to know who viewed your profile and who deleted their account? If yes, then you need Apkfew Whatsapp Tracker, a powerful and reliable app that lets you monitor any WhatsApp account discreetly and remotely. In this article, we will show you how to download Apkfew Whatsapp Tracker for Android devices and how to use it effectively. We will also compare it with other similar apps and answer some frequently asked questions.


-

What is Apkfew Whatsapp Tracker?

-Apkfew Whatsapp Tracker is a free app that allows you to track the online status, last seen, chat messages, media files, profile visits, and deleted accounts of any WhatsApp user. You can use it to spy on your spouse, children, friends, employees, or anyone else who uses WhatsApp. You can also use it to protect your privacy and security by knowing who is stalking you or trying to hack your account.

-Features of Apkfew Whatsapp Tracker

--

-

- Track online status and last seen of any WhatsApp user, even if they hide it or block you. -

- Monitor chat messages and media files of any WhatsApp user, even if they delete them or use end-to-end encryption. -

- View profile visits and deleted accounts of any WhatsApp user, even if they disable read receipts or change their number. -

- Get instant notifications and reports on your phone or email whenever there is any activity on the target account. -

- Access all the data remotely from a web-based dashboard that is easy to use and secure. -

Benefits of Apkfew Whatsapp Tracker

--

-

- Apkfew Whatsapp Tracker is free to download and use, unlike other apps that charge you monthly or yearly fees. -

- Apkfew Whatsapp Tracker is compatible with all Android devices, regardless of the model or version. -

- Apkfew Whatsapp Tracker is undetectable and untraceable, as it does not require rooting or jailbreaking the target device or installing any software on it. -

- Apkfew Whatsapp Tracker is reliable and accurate, as it uses advanced algorithms and techniques to collect and analyze the data. -

- Apkfew Whatsapp Tracker is ethical and legal, as it does not violate the privacy or security of the target user or anyone else involved. -

How to Download Apkfew Whatsapp Tracker for Android

-To download Apkfew Whatsapp Tracker for Android devices, you need to follow these simple steps:

-Step 1: Enable Unknown Sources

-Since Apkfew Whatsapp Tracker is not available on the Google Play Store, you need to enable unknown sources on your device to install it. To do this, go to Settings > Security > Unknown Sources and toggle it on. This will allow you to install apps from sources other than the Google Play Store.

-Step 2: Visit the Apkfew Website

-The next step is to visit the official website of Apkfew at [https://apkcombo.com/search/apkfew-whatsapp-tracker-free](^1^). Here you will find the latest version of the app along with its description and reviews. You can also check out other apps from Apkfew that offer similar features.

-Step 3: Download and Install the Apk File

Once you are on the website, click on the download button and wait for the apk file to be downloaded on your device. The file size is about 10 MB and it should take a few minutes depending on your internet speed. After the download is complete, locate the file in your downloads folder and tap on it to start the installation process. Follow the instructions on the screen and agree to the terms and conditions to finish the installation.

Step 4: Launch the App and Grant Permissions

-The final step is to launch the app and grant it the necessary permissions to access your device's data and functions. To do this, open the app from your app drawer or home screen and sign up with your email and password. You will then be asked to enter the phone number of the WhatsApp user you want to track. You will also need to grant the app permissions to access your contacts, storage, location, camera, microphone, and notifications. These permissions are essential for the app to work properly and collect the data you need.

-How to Use Apkfew Whatsapp Tracker

-Now that you have downloaded and installed Apkfew Whatsapp Tracker, you can start using it to monitor any WhatsApp account you want. Here are some of the things you can do with the app:

-Track Online Status and Last Seen

-With Apkfew Whatsapp Tracker, you can track the online status and last seen of any WhatsApp user, even if they hide it or block you. You can see when they are online or offline, how long they stay online, and how often they change their status. You can also see their last seen time and date, even if they disable it in their settings. This way, you can know their activity patterns and habits, and find out if they are lying or cheating on you.

-Monitor Chat Messages and Media Files

-Another feature of Apkfew Whatsapp Tracker is that it allows you to monitor the chat messages and media files of any WhatsApp user, even if they delete them or use end-to-end encryption. You can read their text messages, voice messages, images, videos, documents, stickers, emojis, and more. You can also see who they are chatting with, what they are talking about, and when they are sending or receiving messages. This way, you can know their interests, preferences, opinions, and secrets.

-View Profile Visits and Deleted Accounts

-A third feature of Apkfew Whatsapp Tracker is that it enables you to view the profile visits and deleted accounts of any WhatsApp user, even if they disable read receipts or change their number. You can see who visited their profile, how many times they visited it, and when they visited it. You can also see who deleted their account, why they deleted it, and when they deleted it. This way, you can know who is stalking them or trying to hack their account.

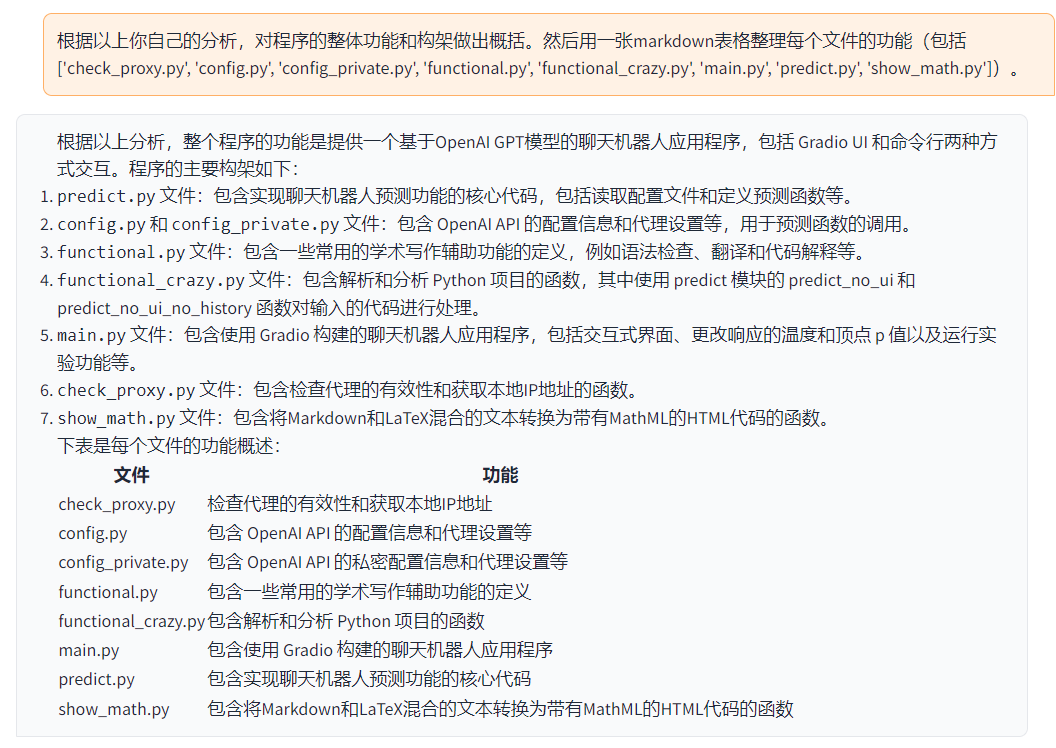

-Comparison Table of Apkfew Whatsapp Tracker and Other Apps

-To give you a better idea of how Apkfew Whatsapp Tracker compares with other similar apps in the market, we have created a comparison table that shows some of the key features and differences between them. Here is the table:

| App Name | Price | Compatibility | Detectability | Rooting/Jailbreaking Required | Data Collected |
|---|---|---|---|---|---|
| Apkfew Whatsapp Tracker | Free | All Android devices | Undetectable | No | Online status, last seen, chat messages, media files, profile visits, deleted accounts |
| mSpy | $29.99/month | All Android devices (rooted), all iOS devices (jailbroken) | Detectable | Yes | Online status, last seen, chat messages, media files |
| Spyzie | $39.99/month | All Android devices (rooted), all iOS devices (jailbroken) | Detectable | Yes | Online status, last seen, chat messages, media files |
| FoneMonitor | $29.99/month | All Android devices (rooted), all iOS devices (jailbroken) | Detectable | Yes | Online status, last seen, chat messages, media files |
| Cocospy | $39.99/month | All Android devices (rooted), all iOS devices (jailbroken) | Detectable | Yes | Online status, last seen, chat messages, media files |

As you can see, Apkfew Whatsapp Tracker is the best app among the four, as it offers more features, better compatibility, higher security, and lower cost. It is the only app that does not require rooting or jailbreaking the target device, and it is the only app that can track profile visits and deleted accounts. It is also the only app that is free to download and use, while the others charge you hefty fees. Therefore, we recommend you to choose Apkfew Whatsapp Tracker over the other apps.

-Conclusion

-In conclusion, Apkfew Whatsapp Tracker is a free app that lets you track the online activity and chat history of any WhatsApp user. You can use it to spy on your spouse, children, friends, employees, or anyone else who uses WhatsApp. You can also use it to protect your privacy and security by knowing who is stalking you or trying to hack your account. To download Apkfew Whatsapp Tracker for Android devices, you need to enable unknown sources, visit the Apkfew website, download and install the apk file, and launch the app and grant permissions. To use Apkfew Whatsapp Tracker, you need to enter the phone number of the WhatsApp user you want to track, and then you can access all the data remotely from a web-based dashboard. Apkfew Whatsapp Tracker is better than other similar apps in terms of features, compatibility, security, and cost. It is the best app for WhatsApp tracking that you can find in the market.

-FAQs

-Here are some of the frequently asked questions about Apkfew Whatsapp Tracker:

-Q: Is Apkfew Whatsapp Tracker safe to use?

-A: Yes, Apkfew Whatsapp Tracker is safe to use, as it does not contain any viruses, malware, spyware, or adware. It also does not collect or store any personal or sensitive information from your device or the target device. It only accesses the data that is relevant for WhatsApp tracking and does not share it with anyone else.

-Q: Is Apkfew Whatsapp Tracker legal to use?

-A: Yes, Apkfew Whatsapp Tracker is legal to use, as long as you follow the laws and regulations of your country and respect the privacy and security of the target user. You should not use Apkfew Whatsapp Tracker for any illegal or unethical purposes, such as blackmailing, harassing, threatening, or harming anyone. You should also inform and obtain consent from the target user before using Apkfew Whatsapp Tracker on their device.

-Q: Does Apkfew Whatsapp Tracker work on iOS devices?

-A: No, Apkfew Whatsapp Tracker does not work on iOS devices, as it is designed for Android devices only. However, you can still use Apkfew Whatsapp Tracker to track an iOS device if you have access to its WhatsApp web login credentials. You can then scan the QR code from your Android device and access all the data from the web-based dashboard.

-Q: How can I contact Apkfew Whatsapp Tracker support team?

-A: If you have any questions, issues, feedbacks, or suggestions about Apkfew Whatsapp Tracker, you can contact their support team by sending an email to [support@apkfew.com]. They will respond to you within 24 hours and help you resolve any problems.

-Q: How can I update Apkfew Whatsapp Tracker to the latest version?

-A: To update Apkfew Whatsapp Tracker to the latest version, you need to visit their website at [https://apkcombo.com/search/apkfew-whatsapp-tracker-free] and download and install the new apk file over the old one. You do not need to uninstall or reinstall the app. The update will automatically apply and improve the performance and functionality of the app.


-

\ No newline at end of file diff --git a/spaces/7hao/bingo/src/lib/hooks/chat-history.ts b/spaces/7hao/bingo/src/lib/hooks/chat-history.ts deleted file mode 100644 index c6fbf3fecfa86fe553f56acc8253236b8f22a775..0000000000000000000000000000000000000000 --- a/spaces/7hao/bingo/src/lib/hooks/chat-history.ts +++ /dev/null @@ -1,62 +0,0 @@ -import { zip } from 'lodash-es' -import { ChatMessageModel, BotId } from '@/lib/bots/bing/types' -import { Storage } from '../storage' - -/** - * conversations:$botId => Conversation[] - * conversation:$botId:$cid:messages => ChatMessageModel[] - */ - -interface Conversation { - id: string - createdAt: number -} - -type ConversationWithMessages = Conversation & { messages: ChatMessageModel[] } - -async function loadHistoryConversations(botId: BotId): Promise

-

Bus Simulator Indonesia: How to Download and Enjoy New Maps

-Bus Simulator Indonesia (aka BUSSID) is a popular simulation game that lets you experience what it is like to be a bus driver in Indonesia in a fun and authentic way. BUSSID may not be the first bus simulator game, but it is probably one of the few with this many features and the most authentic Indonesian environment.

-Some of the main features of BUSSID are:


-

-

-

- Design your own livery

- Very easy and intuitive controls

- Authentic Indonesian cities and places

- Indonesian buses

- Cool and fun honks, including the iconic "Om Telolet Om!" honk

- High-quality, detailed 3D graphics

- No obstructive ads while driving

- Leaderboard and online data saving

- Use your own 3D model through the vehicle mod system

- Online multiplayer convoy

To play BUSSID, you choose a bus, a livery, and a route. You then drive your bus along the route, pick up and drop off passengers, earn money, and avoid accidents. You can also customize your bus, upgrade your garage, and join online convoys with other players.

-One benefit of playing BUSSID is that you can download new maps for the game, which can add more variety, challenge, and fun to your driving experience. New maps can have different themes, such as extreme, off-road, or scenic. They can also have different features, such as sharp curves, steep hills, or realistic landmarks. New maps can make you feel as if you are driving in different regions of Indonesia or even in other countries.

-But how do you download new maps for BUSSID? And how do you enjoy them? In this article, we will show you how to do both in easy steps. Let's get started!

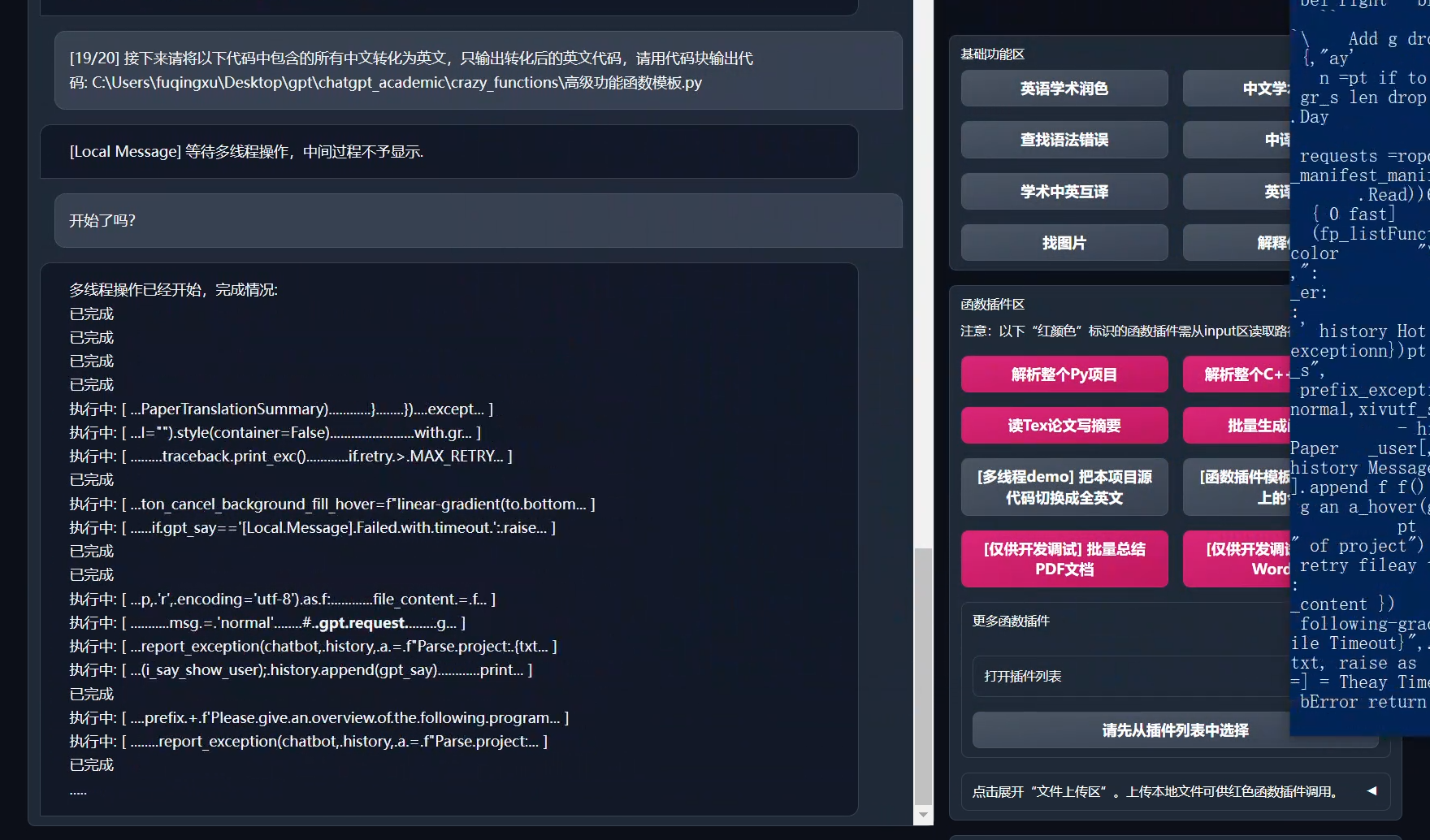

-How to Download New Maps for Bus Simulator Indonesia

-One of the best sources of mod maps for BUSSID is MediaRale, a website that provides various mods for games, including BUSSID. MediaRale has a section dedicated to BUSSID mod maps, where you can find many options to choose from. You can browse by category, such as extreme, off-road, or scenic. You can also see screenshots, descriptions, ratings, and download links for each mod map.

-Once you have found a mod map you like, download it to your device. The mod map file will usually be in ZIP or RAR format, which means you need to extract it with a file manager app or a ZIP extractor app. You can find many free apps for this purpose on the Google Play Store or the App Store.

-After extracting the mod map file, copy it into the BUSSID mod folder. The mod folder is in your device's internal storage, under Android/data/com.maleo.bussimulatorid/files/mod. You can use a file manager app to navigate to this folder and paste the mod map file there.
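For readers who prefer to do the extract-and-copy step from a computer, the following is a minimal Python sketch rather than an official tool. It assumes the device's internal storage is mounted at a path you pass in as `storage_root`, that the download is a ZIP archive (RAR is not handled), and that the mod files should sit directly in the mod folder. The function name `install_mod_archive` is hypothetical; only the folder path comes from the article.

```python
import shutil
import zipfile
from pathlib import Path

# Folder path quoted in the article, relative to the internal storage root.
MOD_SUBDIR = Path("Android/data/com.maleo.bussimulatorid/files/mod")


def install_mod_archive(archive: Path, storage_root: Path) -> list[Path]:
    """Extract every file in a ZIP mod archive into the BUSSID mod folder.

    Hypothetical helper: `storage_root` is assumed to be wherever the
    device's internal storage is mounted on this machine.
    """
    mod_dir = storage_root / MOD_SUBDIR
    mod_dir.mkdir(parents=True, exist_ok=True)

    installed = []
    with zipfile.ZipFile(archive) as zf:
        for info in zf.infolist():
            if info.is_dir():
                continue  # skip directory entries, copy files only
            # Use only the base file name so nested or unsafe paths in the
            # archive cannot write outside the mod folder.
            target = mod_dir / Path(info.filename).name
            with zf.open(info) as src, open(target, "wb") as dst:
                shutil.copyfileobj(src, dst)
            installed.append(target)
    return installed
```

Flattening the archive to base file names is a design choice made for safety and simplicity; if a particular mod expects a subfolder layout, that layout would need to be preserved instead.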

-The last step is to launch the game and select the mod map from the map menu. To do this, tap the map icon in the top-right corner of the screen, then scroll down to find the mod map you downloaded. Tap it to select it, then tap the start button to begin your trip.

-How to Enjoy New Maps for Bus Simulator Indonesia

-Now that you have downloaded and installed a new map for BUSSID, you can enjoy it by driving your bus on it. Here are some tips to help you get the most out of the experience:

--

-

- Tip 1: Choose a suitable bus and livery for the map.

- Tip 2: Follow the traffic rules and respect other drivers. Even though you are playing on a mod map, you still need to follow the traffic rules and respect other drivers on the road. This means obeying the speed limit, stopping at red lights, signaling before turning, and avoiding collisions. This makes your driving more realistic and safer, and also more enjoyable and rewarding.

- Tip 3: Use the horn and other features to interact with the environment. One of the most fun aspects of BUSSID is that you can use the horn and other features to interact with the environment. For example, you can honk to greet other drivers, pedestrians, or animals. You can also use the wipers, headlights, indicators, and doors to communicate with others or express yourself. You can even sound the "Om Telolet Om!" horn to make people cheer for you.

- Tip 4: Explore different routes and landmarks on the map. Another way to enjoy new maps for BUSSID is to explore their routes and landmarks. You can do this by following the GPS navigation or by choosing your own path. You may discover places, landscapes, or challenges you have not seen before. You may also find hidden secrets or Easter eggs that the map creator left for you.

- Tip 5: Join online multiplayer convoys with other players. The best way to enjoy new maps for BUSSID is to join online multiplayer convoys. To do this, tap the convoy icon in the top-left corner of the screen, then choose a convoy that is playing on the same map as you. You can also create your own convoy and invite friends or other players to join. In a convoy, you can chat with other players, share your experiences, and have fun together.

Conclusion

-To download new maps for BUSSID, follow these steps:

- Find a mod map you like on MediaRale

- Download the mod map file and extract it if necessary

- Copy the mod map file to the BUSSID mod folder

- Launch the game and select the mod map from the map menu

To enjoy new maps for BUSSID, you can follow these tips:

--

-

- Choose a suitable bus and livery for the map

- Follow the traffic rules and respect other drivers

- Use the horn and other features to interact with the environment

- Explore different routes and landmarks on the map

- Join online multiplayer convoys with other players

By following these steps and tips, you can download and enjoy new maps for BUSSID and have fun driving your bus on them. If you have not tried BUSSID yet, you can download it for free from the Google Play Store or the App Store. You can also visit the official BUSSID website to learn more about the game and its features. Happy driving!

-Frequently Asked Questions

-Here are some frequently asked questions about new maps for BUSSID:

--

-

- Q: How many new maps are available for BUSSID?

- A: There is no exact number of new maps for BUSSID, because users are constantly creating and uploading new mod maps. However, you can find hundreds of mod maps for BUSSID on MediaRale, ranging from extreme, off-road, and scenic maps to realistic ones.

- Q: How do I know whether a mod map is compatible with my version of BUSSID?

- A: You can check a mod map's compatibility by looking at its description, rating, and comments on MediaRale. You can also compare the mod map's upload date with the date of the latest BUSSID update. In general, mod maps uploaded after the latest BUSSID update are more likely to be compatible.

- Q: How can I uninstall a mod map from BUSSID?

- Q: How can I report a problem or a bug with a mod map?

- A: If you find a problem or a bug in a mod map, you can report it to the mod map's creator or to MediaRale. The creator's contact information is on their MediaRale profile page. You can also leave a comment or a rating on the mod map's page on MediaRale to share your feedback.

- Q: How can I create my own mod map for BUSSID?

- A: To create your own mod map for BUSSID, you need 3D modeling software such as Blender, SketchUp, or Maya. You also need to follow the BUSSID guidelines and specifications for creating mod maps. You can find more information and tutorials on creating mod maps for BUSSID on the official BUSSID website or on YouTube.

-

-