Commit 3985e99

Parent(s): 88ad4d3

Update parquet files (step 59 of 476)

This view is limited to 50 files because it contains too many changes.

- spaces/12Venusssss/text_generator/app.py +0 -11

- spaces/1acneusushi/gradio-2dmoleculeeditor/data/Aggiornamento Software Di Controllo Uniemens Individuale Recensioni e Opinioni degli Utenti sulla Versione 3.9.6.md +0 -139

- spaces/1phancelerku/anime-remove-background/ .md +0 -92

- spaces/1phancelerku/anime-remove-background/Askies I 39m Sorry De Mthuda Mp3 Download Fakaza [Extra Quality].md +0 -90

- spaces/1phancelerku/anime-remove-background/Brawl Stars Hack APK 2022 A Simple Guide to Install and Use.md +0 -121

- spaces/1phancelerku/anime-remove-background/Candy Crush Saga MOD APK 1.141 0.4 Unlocked Download Now for Android Devices.md +0 -87

- spaces/1phancelerku/anime-remove-background/Cars Daredevil Garage APK The Ultimate Racing Game for Cars Fans.md +0 -99

- spaces/1phancelerku/anime-remove-background/Free Download Educational Games for Kids-6 Years Old - Engaging Creative and Safe.md +0 -168

- spaces/801artistry/RVC801/infer/lib/uvr5_pack/utils.py +0 -121

- spaces/801artistry/RVC801/julius/resample.py +0 -216

- spaces/801artistry/RVC801/tools/dlmodels.bat +0 -348

- spaces/AI-Hobbyist/Hoyo-RVC/uvr5_pack/lib_v5/layers_33966KB.py +0 -126

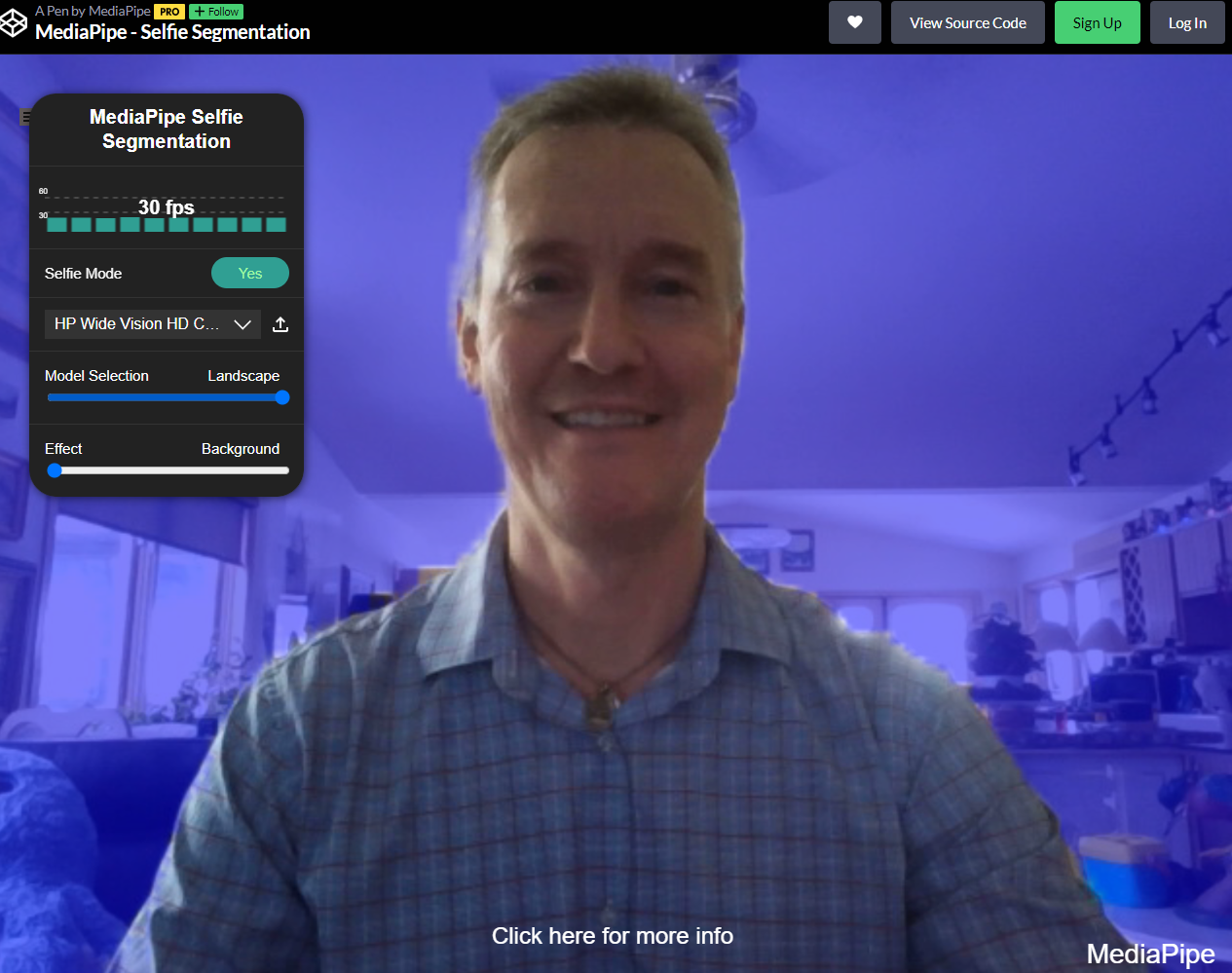

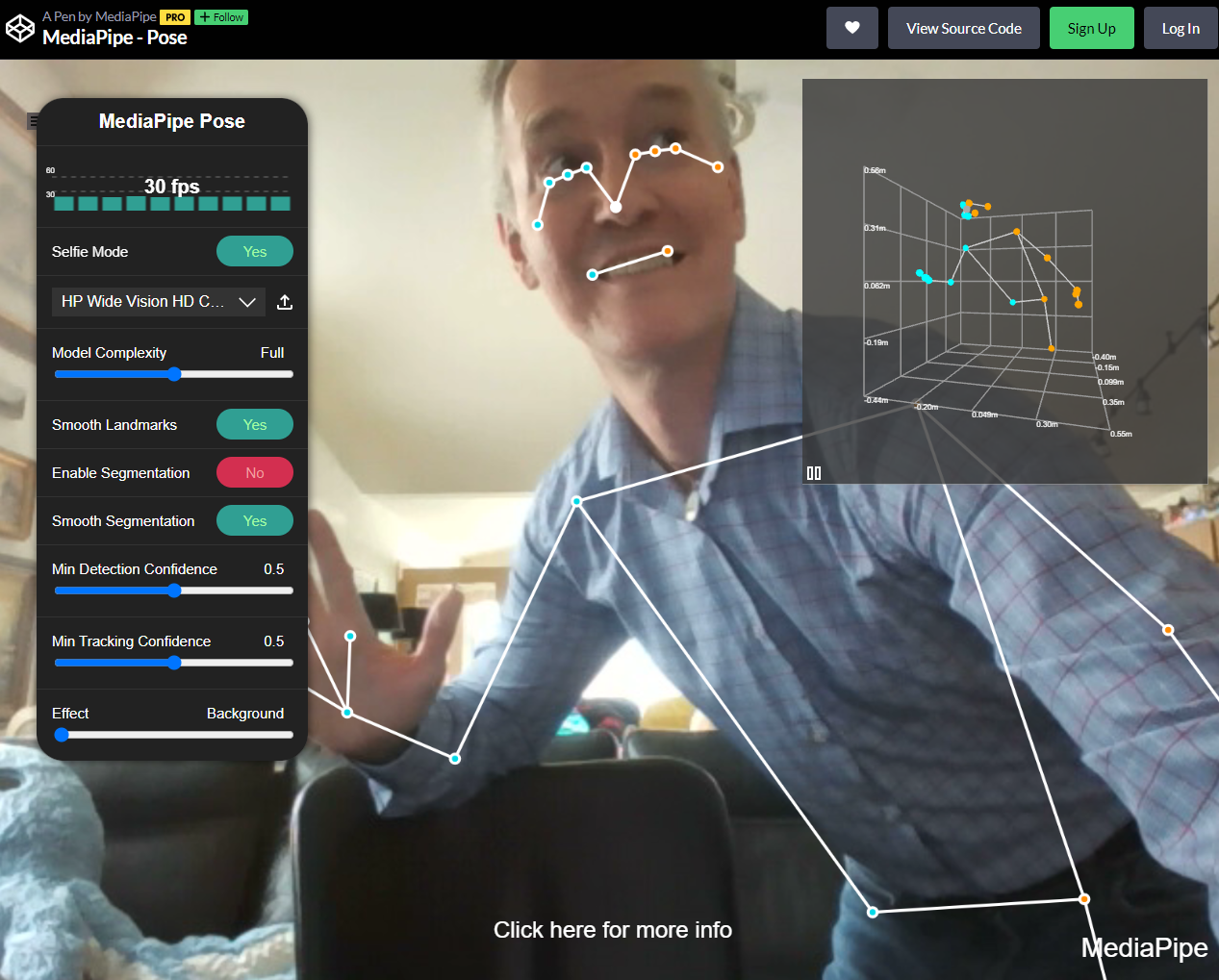

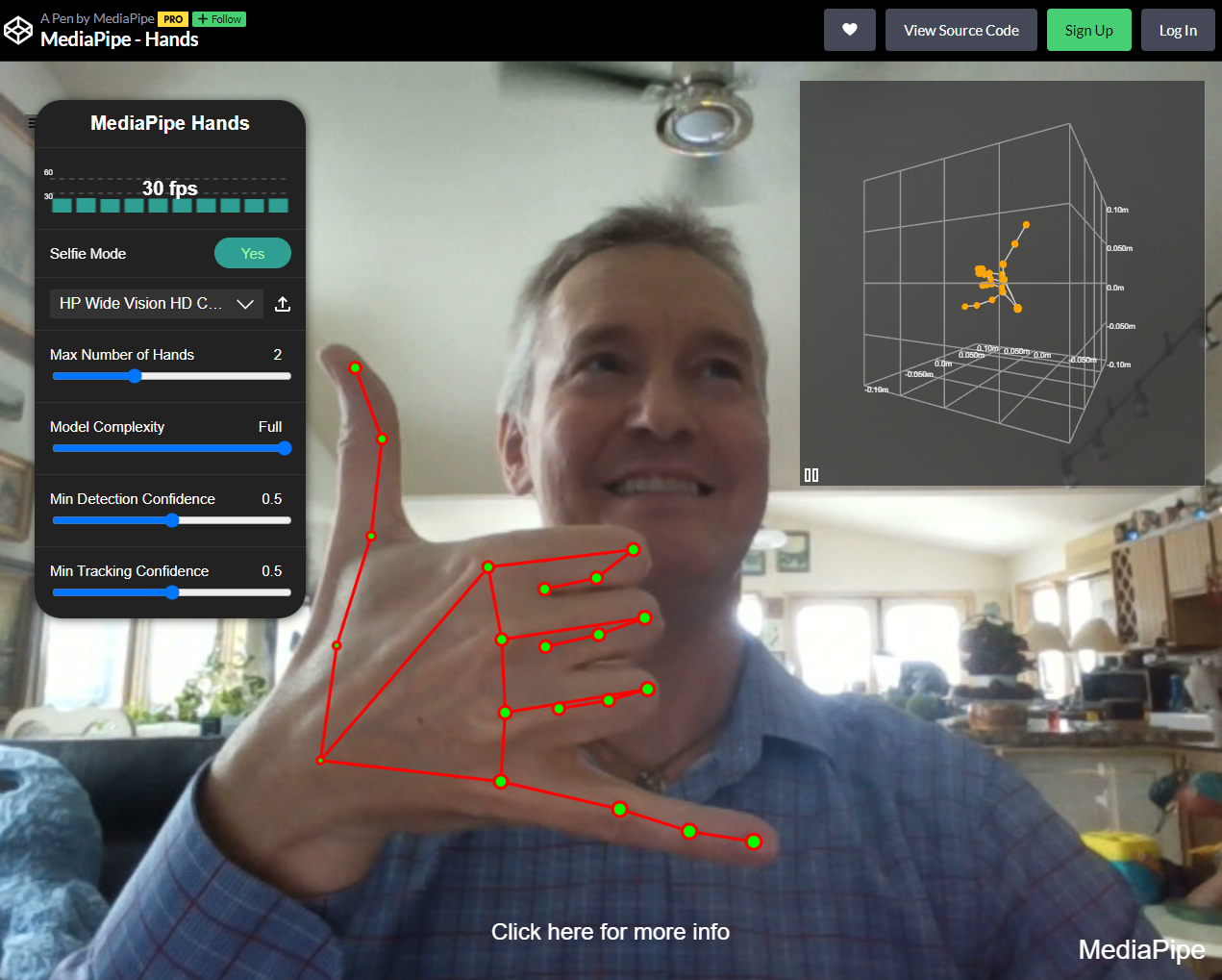

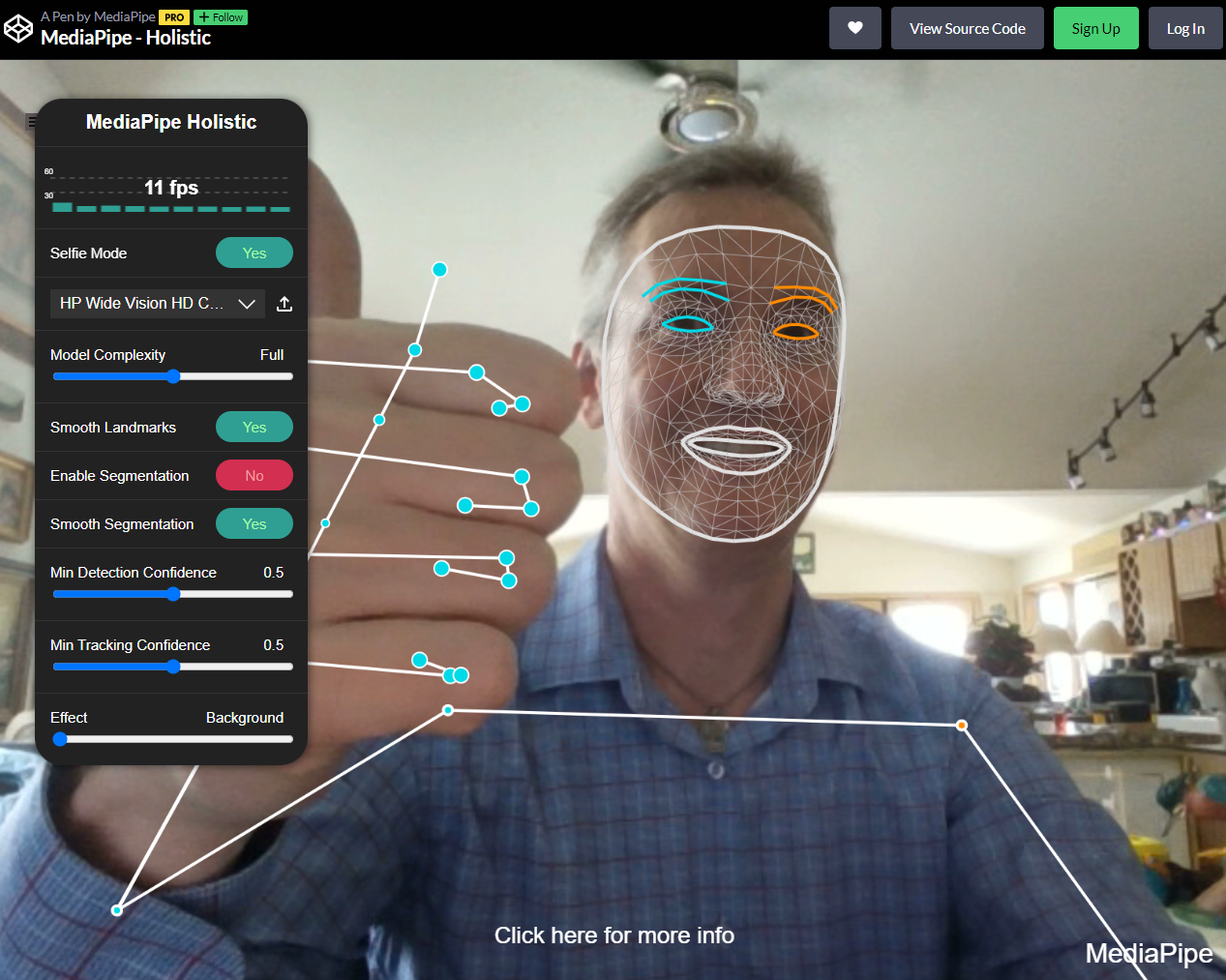

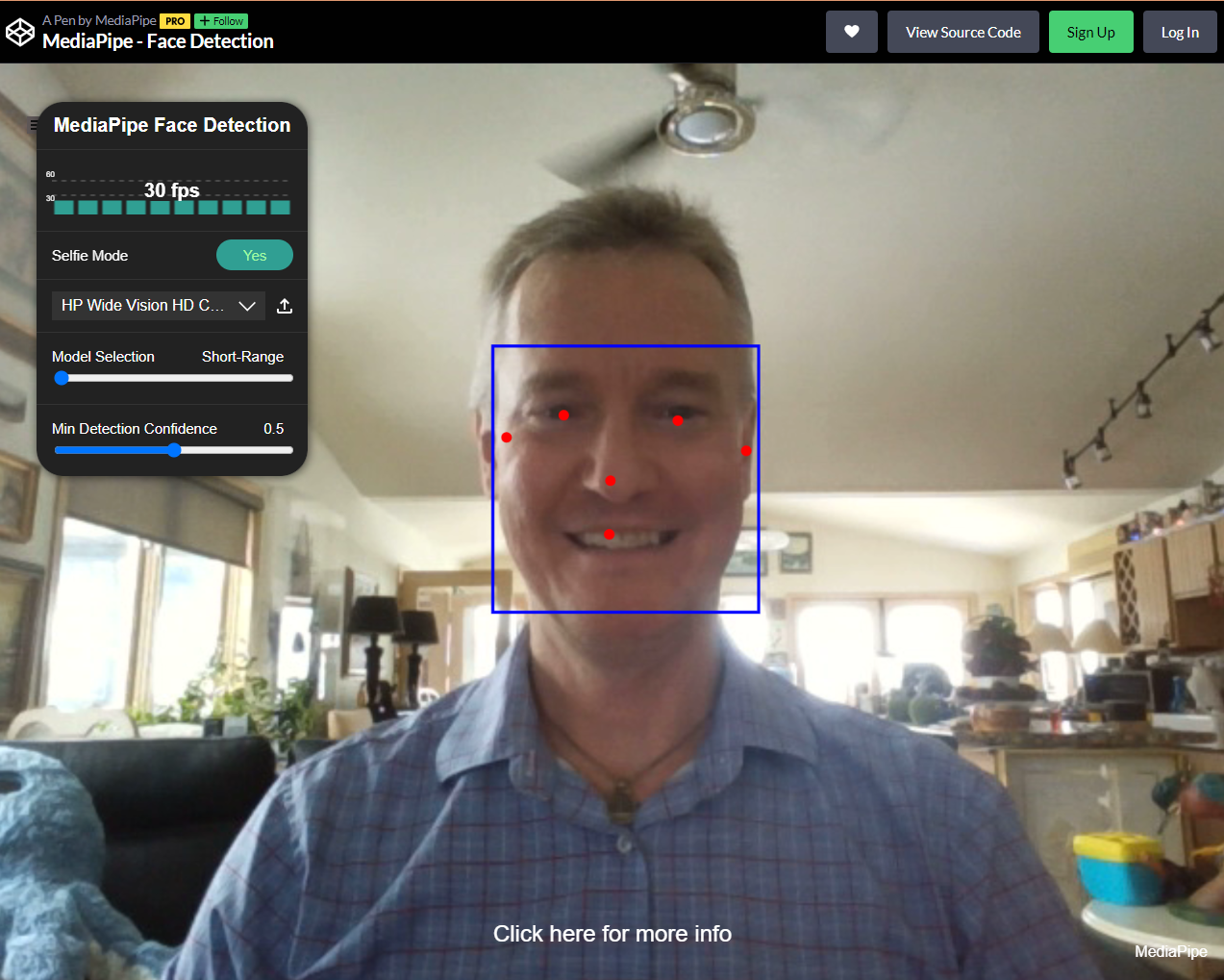

- spaces/AI-ZTH-03-23/4.RealTime-MediaPipe-AI-From-Video-On-Any-Device/app.py +0 -59

- spaces/AIGC-Audio/AudioGPT/NeuralSeq/modules/parallel_wavegan/utils/utils.py +0 -169

- spaces/AIGC-Audio/AudioGPT/text_to_audio/Make_An_Audio/ldm/modules/losses_audio/lpaps.py +0 -152

- spaces/AIGC-Audio/AudioGPT/text_to_speech/modules/commons/wavenet.py +0 -97

- spaces/ATang0729/Forecast4Muses/Model/Model6/Model6_1_ClothesKeyPoint/work_dirs_1-x/td_hm_res50_4xb64-120e_deepfashion2_long_sleeved_shirt_256x192/td_hm_res50_4xb64-120e_deepfashion2_long_sleeved_shirt_256x192.py +0 -2861

- spaces/AchyuthGamer/OpenGPT/g4f/Provider/Yqcloud.py +0 -59

- spaces/Adapting/TrendFlow/mypages/charts.py +0 -80

- spaces/AgentVerse/agentVerse/agentverse/agents/tasksolving_agent/__init__.py +0 -6

- spaces/AlanMars/QYL-AI-Space/modules/models/tokenization_moss.py +0 -368

- spaces/AlexWang/lama/saicinpainting/training/visualizers/__init__.py +0 -15

- spaces/AlexWelcing/MusicLM/README.md +0 -14

- spaces/Aloento/9Nine-VITS/to_wave.py +0 -82

- spaces/Alpaca233/ChatPDF-GUI/gpt_reader/paper.py +0 -20

- spaces/Andy1621/uniformer_image_detection/configs/fcos/fcos_center-normbbox-centeronreg-giou_r50_caffe_fpn_gn-head_dcn_1x_coco.py +0 -54

- spaces/Andy1621/uniformer_image_detection/configs/mask_rcnn/mask_rcnn_r50_fpn_1x_coco.py +0 -5

- spaces/Andy1621/uniformer_image_detection/mmdet/models/dense_heads/base_dense_head.py +0 -59

- spaces/Andy1621/uniformer_image_segmentation/configs/deeplabv3/deeplabv3_r101-d8_480x480_80k_pascal_context_59.py +0 -2

- spaces/AnishKumbhar/ChatBot/text-generation-webui-main/extensions/superboogav2/parameters.py +0 -369

- spaces/ApathyINC/CustomGPT/baidu_translate/module.py +0 -106

- spaces/AshtonIsNotHere/xlmr-longformer_comparison/README.md +0 -13

- spaces/Ataturk-Chatbot/HuggingFaceChat/venv/lib/python3.11/site-packages/pip/_vendor/pyparsing/testing.py +0 -331

- spaces/Awiny/Image2Paragraph/models/grit_src/third_party/CenterNet2/configs/new_baselines/mask_rcnn_regnety_4gf_dds_FPN_400ep_LSJ.py +0 -14

- spaces/Benson/text-generation/Examples/Colina Subida De Carreras De Descarga Para PC Ventanas 10 64 Bits.md +0 -89

- spaces/Benson/text-generation/Examples/Descargar Fifa 21 Descargar.md +0 -91

- spaces/Benson/text-generation/Examples/Descargar Gratis Aplicaciones De Desbloqueo Para Android.md +0 -106

- spaces/Big-Web/MMSD/env/Lib/site-packages/pip/_vendor/tomli/_re.py +0 -107

- spaces/Boadiwaa/Recipes/openai/_openai_scripts.py +0 -74

- spaces/CVPR/Dual-Key_Backdoor_Attacks/datagen/detectron2/tests/test_model_zoo.py +0 -29

- spaces/CVPR/LIVE/aabb.h +0 -67

- spaces/CVPR/LIVE/thrust/cmake/ThrustBuildTargetList.cmake +0 -283

- spaces/CVPR/LIVE/thrust/dependencies/cub/cmake/CubBuildTargetList.cmake +0 -261

- spaces/CVPR/LIVE/thrust/dependencies/cub/test/mersenne.h +0 -162

- spaces/CVPR/WALT/mmdet/models/dense_heads/ssd_head.py +0 -265

- spaces/CVPR/WALT/walt/train.py +0 -188

- spaces/Cecil8352/vits-models/text/symbols.py +0 -39

- spaces/DQChoi/gpt-demo/venv/lib/python3.11/site-packages/huggingface_hub/community.py +0 -354

- spaces/Dagfinn1962/prodia2/transform.py +0 -13

- spaces/Daimon/translation_demo/tokenization_small100.py +0 -364

spaces/12Venusssss/text_generator/app.py

DELETED

```diff
@@ -1,11 +0,0 @@
-import gradio as gr
-from gradio.mix import Parallel
-
-myfirstvariable="My First Text Generation"
-mylovelysecondvariable="Input text and submit."
-
-model1=gr.Interface.load("huggingface/gpt2")
-model2=gr.Interface.load("huggingface/EleutherAI/gpt-j-6B")
-model3=gr.Interface.load("huggingface/EleutherAI/gpt-neo-1.3B")
-
-gr.Parallel(model1, model2, model3 , title=myfirstvariable, description=mylovelysecondvariable).launch()
```
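The deleted `app.py` loaded three hosted text-generation models and wrapped them in `gr.Parallel`, which in older Gradio releases (via `gradio.mix`) sent one input to several interfaces and showed their outputs side by side. The fan-out pattern itself can be sketched with the standard library alone. The three `fake_*` functions and `run_parallel` are hypothetical stand-ins for illustration, not real calls to the GPT-2, GPT-J-6B, or GPT-Neo-1.3B endpoints.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


# Hypothetical stand-ins for the hosted models loaded in the deleted app.
def fake_gpt2(prompt: str) -> str:
    return prompt + " [gpt2]"


def fake_gpt_j(prompt: str) -> str:
    return prompt + " [gpt-j-6B]"


def fake_gpt_neo(prompt: str) -> str:
    return prompt + " [gpt-neo-1.3B]"


def run_parallel(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Send the same prompt to every model concurrently; return outputs keyed by model name."""
    # max(1, ...) keeps the executor valid even when no models are given.
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        return {name: fut.result() for name, fut in futures.items()}


if __name__ == "__main__":
    models = {"gpt2": fake_gpt2, "gpt-j-6B": fake_gpt_j, "gpt-neo-1.3B": fake_gpt_neo}
    for name, text in run_parallel("Input text and submit.", models).items():
        print(f"{name}: {text}")
```

Threads suit this case because each real call would mostly wait on the network, so the models answer concurrently instead of one after another.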


spaces/1acneusushi/gradio-2dmoleculeeditor/data/Aggiornamento Software Di Controllo Uniemens Individuale Recensioni e Opinioni degli Utenti sulla Versione 3.9.6.md

DELETED

@@ -1,139 +0,0 @@

<h1>Individual Uniemens Control Software Update</h1>

<p>If you are a company or a consultant who has to submit employees' monthly returns to INPS, you know how important it is to have reliable, up-to-date control software. The individual Uniemens control software is a free, easy-to-use tool that lets you check the formal and substantive correctness of the XML files you must transmit to INPS through the UniEMens portal. In this article we explain what the individual Uniemens control software is, how to update it to version 3.9.6, and what benefits the update brings.</p>

<h2>What is the individual Uniemens control software?</h2>

<p>The individual Uniemens control software is a Java application that lets you run preliminary checks on the XML files you must send to INPS through the UniEMens portal. These checks cover both the formal validity of the XML files against the XSD schema provided by INPS and the substantive consistency of the data they contain with the business rules defined by INPS.</p>
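The two layers of checking described above (formal validity against the XSD schema, then consistency with business rules) can be sketched in Python. This is a minimal illustration, not the INPS tool: it uses only the standard library, so the formal layer checks well-formedness rather than full XSD validity (that would need a schema-aware library such as lxml's `etree.XMLSchema` plus the official schema). The `Worker`, `TaxCode`, and `Days` elements and the 0 to 31 range are hypothetical and are not taken from the real UniEMens format.

```python
import xml.etree.ElementTree as ET
from typing import List


def check_document(xml_text: str) -> List[str]:
    """Return a list of problems; an empty list means every check passed."""
    # Layer 1 (formal): the file must at least be well-formed XML.
    # Full XSD validation would need an external library and the official schema.
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"not well-formed: {exc}"]

    problems: List[str] = []
    # Layer 2 (substantive): hypothetical business rules on hypothetical elements.
    for i, worker in enumerate(root.iter("Worker"), start=1):
        if not (worker.findtext("TaxCode") or "").strip():
            problems.append(f"Worker {i}: missing TaxCode")
        try:
            days = int((worker.findtext("Days") or "").strip())
        except ValueError:
            days = -1  # missing or non-numeric value fails the range rule below
        if not 0 <= days <= 31:
            problems.append(f"Worker {i}: Days must be an integer from 0 to 31")
    return problems


if __name__ == "__main__":
    sample = (
        "<Report>"
        "<Worker><TaxCode>ABC</TaxCode><Days>22</Days></Worker>"
        "<Worker><Days>40</Days></Worker>"
        "</Report>"
    )
    for problem in check_document(sample):
        print(problem)
```

Returning a list of messages rather than stopping at the first failure mirrors the idea of a report that flags every anomaly in one pass.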

<h2>Individual Uniemens Control Software Update</h2><br /><p><b><b>Download</b> ☑ <a href="https://byltly.com/2uKvwe">https://byltly.com/2uKvwe</a></b></p><br /><br />

<h3>What is the individual Uniemens control software for?</h3>

<p>The individual Uniemens control software helps prevent the XML files you send to INPS from being discarded or rejected for formal or substantive reasons. This saves you time and money, because you avoid having to correct and resend the files, with the attendant risk of penalties or of delays in the payment of social security benefits to workers.</p>

<h3>What are the main features of the individual Uniemens control software?</h3>

<p>The main features of the individual Uniemens control software are:</p>

<ul>
<li>It runs preliminary checks on XML files before they are submitted to the UniEMens portal</li>
<li>It produces a detailed report of the check results, highlighting any errors or anomalies found</li>
<li>It lets you manually correct wrong or incomplete data directly in the XML file</li>
<li>It lets you save the corrected XML files, ready for submission to the UniEMens portal</li>
<li>It can check several XML files at once</li>
<li>It lets you customize the check parameters to suit your needs</li>
<li>It can easily be updated to the latest versions released by INPS</li>
</ul>

<h2>How do you update the individual Uniemens control software?</h2>

<p>To update the individual Uniemens control software, follow a few simple steps:</p>

<p>Individual Uniemens Control Software Update Version 3.9.6<br />
Individual Uniemens Control Software Update September 2022<br />
Individual Uniemens Control Software Update INPS<br />
Individual Uniemens Control Software Update Download<br />
Individual Uniemens Control Software Update Manual<br />
Individual Uniemens Control Software Update Digital Certificate<br />
Individual Uniemens Control Software Update Contribution Characteristics Service<br />
Individual Uniemens Control Software Update INPS Portal<br />
Individual Uniemens Control Software Update for Companies and Consultants<br />
Individual Uniemens Control Software Update What's New in the Version<br />
New Multi-Platform Uniemens Control Software Version 4.0.3<br />
New Multi-Platform Uniemens Control Software November 2022<br />
New Multi-Platform Uniemens Control Software INPS<br />
New Multi-Platform Uniemens Control Software Download<br />
New Multi-Platform Uniemens Control Software Manual<br />
New Multi-Platform Uniemens Control Software Desktop and Command Line<br />
New Multi-Platform Uniemens Control Software Microsoft Windows 10<br />
New Multi-Platform Uniemens Control Software Microsoft Windows 8<br />
New Multi-Platform Uniemens Control Software Microsoft Windows 7<br />
New Multi-Platform Uniemens Control Software Linux<br />
New Multi-Platform Uniemens Control Software INPS Portal<br />
New Multi-Platform Uniemens Control Software for Companies and Consultants<br />
New Multi-Platform Uniemens Control Software What's New in the Version<br />
How to Update the Individual Uniemens Control Software<br />
How to Download the Individual Uniemens Control Software<br />
How to Use the Individual Uniemens Control Software<br />
How to Install the Individual Uniemens Control Software<br />
How to Configure the Individual Uniemens Control Software<br />
How to Verify the Individual Uniemens Control Software<br />
How to Troubleshoot the Individual Uniemens Control Software<br />
What Is the Individual Uniemens Control Software<br />
What the Individual Uniemens Control Software Is For<br />
What Are the Advantages of the Individual Uniemens Control Software<br />
What Are the Requirements of the Individual Uniemens Control Software<br />
What Are the Features of the Individual Uniemens Control Software<br />
What Is the Difference Between the Individual Uniemens Control Software and the New Multi-Platform UniEMens Control Software <br />
Where to Find the Individual UniEMens Control Software <br />
Where to Find the New Multi-Platform UniEMens Control Software <br />
Why Update the Individual UniEMens Control Software <br />
Why Use the New Multi-Platform UniEMens Control Software <br />
Guide to the Individual UniEMens Control Software <br />
Guide to the New Multi-Platform UniEMens Control Software <br />
Reviews of the Individual UniEMens Control Software <br />
Reviews of the New Multi-Platform UniEMens Control Software <br />
FAQ on the Individual UniEMens Control Software <br />
FAQ on the New Multi-Platform UniEMens Control Software <br />
Technical Support for the Individual UniEMens Control Software <br />
Technical Support for the New Multi-Platform UniEMens Control Software <br />
Video Tutorials on the Individual UniEMens Control Software <br />
Video Tutorials on the New Multi-Platform UniEMens Control Software </p>

<h3>What are the requirements for updating the individual Uniemens control software?</h3>

<p>To update the individual Uniemens control software you need:</p>

<ul>
<li>An active internet connection</li>
<li>Windows (7, 8, or 10) or Linux (64-bit) installed on your computer</li>
<li>The Java Virtual Machine (JVM) version 8 or later installed on your computer</li>
<li>At least 50 MB of free disk space</li>
</ul>

<h3>What is new in version 3.9.6 of the individual Uniemens control software?</h3>

<p>Version 3.9.6 of the individual Uniemens control software was released by INPS in September 2022 and includes the following changes:</p>

<ul>
<li>Updated business rules for absences due to illness, maternity, injury, and quarantine</li>
<li>Updated business rules for wage supplementation benefits (CIG, CIGO, CIGD)</li>
<li>Updated business rules for income support benefits (NASPI, DIS-COLL, ASDI)</li>
<li>Updated business rules for work-life balance benefits (parental leave, household allowance)</li>
<li>Updated business rules for disability benefits (ordinary and extraordinary allowance)</li>
<li>Updated business rules for long-term care benefits (special allowance)</li>
<li>Updated business rules for mobility benefits (ordinary and special allowance)</li>
<li>Updated business rules for vocational training benefits (training allowance)</li>
<li>Updated business rules for social inclusion benefits (REIS)</li>
<li>Updated business rules for social security and welfare contributions</li>
<li>Improved graphical interface and navigation</li>
<li>Improved performance and stability</li>
</ul>

<h3>How do you download and install version 3.9.6 of the individual Uniemens control software?</h3>

<p>To download and install version 3.9.6 of the individual Uniemens control software, follow these steps:</p>

<ol>
<li>Go to the INPS website (<a href="https://www.inps.it">www.inps.it</a>) and click the "Software" section</li>
<li>Find the entry "Software di controllo UniEMens individuale - Versione 3.9.6 - SETTEMBRE 2022" and click its "Download" link</li>
<li>Choose the operating system that best suits your computer from those available (Windows 10, Windows 8, Windows 7, or Linux) and click the corresponding link</li>
<li>Download the ZIP file containing the software and save it to your computer</li>
<li>Extract the ZIP file to a folder of your choice</li>
<li>Run INPS_uniEMensIndiv.exe to launch the software</li>
</ol>

<h3>How do you use version 3.9.6 of the individual Uniemens control software?</h3>

<p>To use version 3.9.6 of the individual Uniemens control software, follow these steps:</p>

<ol>
<li>Choose automatic or manual check mode</li>
<li>In automatic mode, choose the folder containing the XML files to check and click the "Avvia controlli" (Start checks) button</li>
<li>In manual mode, choose the single XML file to check and click the "Avvia controlli" button</li>
<li>Wait while the software checks the selected XML files</li>
<li>Review the check results report, which flags any errors or anomalies found</li>
<li>Manually correct wrong or incomplete data directly in the XML file, using the software's built-in editor</li>
<li>Save the corrected XML files, ready for submission to the UniEMens portal</li>
</ol>

<h2>What are the benefits of updating the individual Uniemens control software?</h2>

<p>Updating the individual Uniemens control software to version 3.9.6 offers several benefits:</p>

<h3>Greater security and regulatory compliance</h3>

<p>With version 3.9.6 you can be confident that the XML files you send to INPS comply with the latest social security regulations, so you avoid penalties or disputes with INPS over outdated or incorrect files.</p>

<h3>Better and faster checks</h3>

<p>Version 3.9.6 checks the XML files you must send to INPS more accurately and more quickly, thanks to optimized business rules and the adoption of newer software technology.</p>

<h3>Lower risk of errors and penalties</h3>

<p>Version 3.9.6 reduces the risk of errors or omissions in the XML files you send to INPS. The software gives you a detailed report of the check results, highlighting any errors or anomalies and letting you correct them manually, directly in the XML file. This way you avoid sending incorrect or incomplete files to INPS, which could lead to penalties or to delays in paying social security benefits to workers.</p>

<h2>Conclusion</h2>

<p>In conclusion, the individual Uniemens control software is an essential tool for companies and consultants who must send employees' monthly returns to INPS through the UniEMens portal. Updating to version 3.9.6 brings benefits in security, quality, speed, and cost. We therefore recommend downloading and installing version 3.9.6 as soon as possible and using it to verify the XML files you must send to INPS.</p>

<h2>FAQ</h2>

<p>Below are some frequently asked questions about the individual Uniemens control software, with their answers:</p>

<table>
<tr><td><b>Question</b></td><td><b>Answer</b></td></tr>
<tr><td>What is UniEMens?</td><td>UniEMens is the INPS web portal that lets companies and consultants send employees' monthly returns in XML format.</td></tr>
<tr><td>What is the XSD schema?</td><td>The XSD schema is the document that defines the formal structure of the XML files to be sent to the UniEMens portal.</td></tr>
<tr><td>What is a business rule?</td><td>A business rule is a condition that the data in the XML files sent to the UniEMens portal must satisfy.</td></tr>
<tr><td>How do you update the individual Uniemens control software?</td><td>Download and install the latest version released by INPS from www.inps.it.</td></tr>
<tr><td>How do you contact INPS technical support?</td><td>Call the toll-free number 803164 or send an email to [email protected].</td></tr>
</table>


spaces/1phancelerku/anime-remove-background/ .md

DELETED

@@ -1,92 +0,0 @@

<br />

<h1>What is an equalizer and what is it used for?</h1>

<p>If you are interested in sound and music, you have probably come across the term equalizer. But do you know exactly what an equalizer is and what it is used for? In this article we define the equalizer, introduce its different types, and list its applications in the audio and music industry. We also present the best audio equalizers for Windows 10 in 2023. Read on.</p>

<h2>Definition and concept of the equalizer</h2>

<p>An equalizer is a type of audio filter that changes the level of different frequencies in an audio signal. In other words, an equalizer lets you shape the output sound as you like: for example, you can boost the bass, reduce the treble, raise the midrange, and so on. It lets you adjust your sound to the type of music, the environment, your taste, and your goal.</p>

<h2>Equalizer</h2><br /><p><b><b>Download File</b> ❤ <a href="https://jinyurl.com/2uNUzT">https://jinyurl.com/2uNUzT</a></b></p><br /><br />

<h3>Types of equalizers by response shape and number of bands</h3>

<p>Equalizers fall into several types according to the shape of their response and the number of bands you can adjust. A band is a frequency range in which you can raise or lower the sound level. Some types of equalizers are:</p>

<h4>Graphic equalizers</h4>

<p>In a graphic equalizer the number of bands is fixed, and you can only change the level of each band with a slider or knob; you cannot change a band's frequency or width. These equalizers typically have 5, 7, 10, 15, or 31 bands. Because they are simple and easy to use, they appear in many audio devices such as MP3 players, set-top boxes, and televisions.</p>
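In a graphic equalizer the band centers are fixed and usually spaced evenly on a logarithmic scale, for example one band per octave on a 10-band unit or three bands per octave on a 31-band unit. A minimal sketch of that layout follows. The `band_centers` helper is hypothetical, the 31.25 Hz and 20 Hz starting points are assumptions chosen for round numbers, and the labels printed on real units are rounded (31, 63, 125 Hz, and so on).

```python
from typing import Dict, List


def band_centers(start_hz: float, n_bands: int, bands_per_octave: int = 1) -> List[float]:
    """Fixed center frequencies: start_hz * 2 ** (k / bands_per_octave) for k = 0..n_bands-1."""
    return [start_hz * 2 ** (k / bands_per_octave) for k in range(n_bands)]


# 10-band unit: one band per octave, from 31.25 Hz up to 16 kHz.
ten_band = band_centers(31.25, 10)

# 31-band unit: three bands per octave, from 20 Hz up to about 20 kHz.
third_octave = band_centers(20.0, 31, bands_per_octave=3)

# A graphic EQ exposes only one gain per fixed band (illustrative settings in dB).
gains_db: Dict[float, float] = {f: 0.0 for f in ten_band}
gains_db[ten_band[0]] = 4.0  # lift the lowest band

if __name__ == "__main__":
    print([round(f) for f in ten_band])
    print(round(third_octave[-1]))
```

Only the gain values are user-editable here; the center list never changes, which is exactly the limitation that separates a graphic equalizer from a parametric one.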

<p>Free download of 50 ready-made animated equalizers for After Effects<br />
Get to know the best audio equalizers for Windows 10<br />
Creating a music equalizer for Instagram and how to make one<br />
What is an equalizer? Everything you need to know<br />
Viper4Windows, an open-source equalizer for Windows 10<br />
How to set the equalizer on the Samsung Galaxy S21<br />
Introducing Equalizer APO for changing the sound in Windows<br />
Tutorial: making a 3D equalizer with Adobe After Effects<br />
Comparing parametric and graphic equalizers in the audio industry<br />
Download the FabFilter Pro-Q 3 plugin for equalizing audio<br />
Buying guide to the best car equalizers on the market<br />
The difference between an equalizer and a compressor in mixing and mastering<br />
How to use the Equalizer FX app to boost sound on Android<br />
Download the ready-made After Effects project Audio Spectrum Music Visualizer<br />
Features and settings of the Spotify equalizer<br />
How to build a 3D LED equalizer<br />
Download the new version of Equalizer Pro for Windows 10<br />
Tutorial: setting up the equalizer correctly for speakers and headphones<br />
Free download of the TDR Nova plugin for dynamic equalization<br />
The best equalizer apps for iOS</p>

<h4>Parametric equalizers</h4>

<p>Parametric equalizers let you change not only the level of each band but also its frequency and width. The frequency sets where the band sits in the audio spectrum, and the width sets how strongly the band affects neighboring frequencies. This type lets you shape your sound more precisely. These equalizers typically have 3, 4, or 6 bands and are used in many professional audio tools and devices such as mixers, amplifiers, and studios.</p>
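A parametric band is commonly implemented as a second-order "peaking" (bell) filter whose center frequency, gain, and width (expressed as Q) are all adjustable. The sketch below uses the peaking-EQ coefficient formulas from Robert Bristow-Johnson's Audio EQ Cookbook and evaluates the resulting magnitude response; the function names and the 48 kHz example settings are illustrative assumptions, not the internals of any product named in this article.

```python
import cmath
import math
from typing import Tuple

# (b0, b1, b2, a0, a1, a2) in Audio EQ Cookbook order
Coeffs = Tuple[float, float, float, float, float, float]


def peaking_eq(fs: float, f0: float, gain_db: float, q: float) -> Coeffs:
    """Bell-shaped band: center f0 (Hz), boost or cut gain_db (dB), width set by q."""
    a = 10 ** (gain_db / 40)      # amplitude term A from the cookbook
    w0 = 2 * math.pi * f0 / fs    # center frequency in radians per sample
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    return (1 + alpha * a, -2 * cos_w0, 1 - alpha * a,
            1 + alpha / a, -2 * cos_w0, 1 - alpha / a)


def magnitude(c: Coeffs, fs: float, f: float) -> float:
    """Linear gain |H| of the biquad at frequency f (Hz)."""
    b0, b1, b2, a0, a1, a2 = c
    z1 = cmath.exp(-2j * math.pi * f / fs)  # z^-1 evaluated on the unit circle
    return abs((b0 + b1 * z1 + b2 * z1 * z1) / (a0 + a1 * z1 + a2 * z1 * z1))


if __name__ == "__main__":
    band = peaking_eq(48000.0, 1000.0, 6.0, 1.0)
    print(magnitude(band, 48000.0, 1000.0))  # about 10 ** (6 / 20), roughly 2x
    print(magnitude(band, 48000.0, 0.0))     # approximately 1.0 at 0 Hz
```

At the center frequency the gain works out to exactly the requested dB value, while at 0 Hz and at the Nyquist frequency it returns to unity, so a bell band leaves distant frequencies largely untouched. A larger `q` narrows the bell.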

<h4>Shelf equalizers</h4>

<p>Shelf equalizers have only two bands: one for low frequencies (bass) and one for high frequencies (treble). You can change the level of each band, but not its frequency or width. This type lets you balance your sound quickly and simply, and it is used in many playback devices such as radios, stereos, and headphones.</p>
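A bass control of the kind described here is typically a low-shelf filter: it raises or lowers everything below a corner frequency by a fixed amount, while the response approaches unity above it. A minimal sketch using the Audio EQ Cookbook low-shelf formulas with shelf slope S = 1 follows; the function names and the 200 Hz corner are assumptions. A treble control would use the cookbook's matching high-shelf formulas.

```python
import cmath
import math
from typing import Tuple

# (b0, b1, b2, a0, a1, a2) in Audio EQ Cookbook order
Coeffs = Tuple[float, float, float, float, float, float]


def low_shelf(fs: float, f0: float, gain_db: float) -> Coeffs:
    """Low shelf with corner f0 (Hz), shelf gain gain_db (dB), and slope S = 1."""
    a = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    cos_w0 = math.cos(w0)
    # With S = 1 the cookbook term sqrt((A + 1/A) * (1/S - 1) + 2) reduces to sqrt(2).
    alpha = math.sin(w0) / 2 * math.sqrt(2)
    k = 2 * math.sqrt(a) * alpha
    return (
        a * ((a + 1) - (a - 1) * cos_w0 + k),
        2 * a * ((a - 1) - (a + 1) * cos_w0),
        a * ((a + 1) - (a - 1) * cos_w0 - k),
        (a + 1) + (a - 1) * cos_w0 + k,
        -2 * ((a - 1) + (a + 1) * cos_w0),
        (a + 1) + (a - 1) * cos_w0 - k,
    )


def magnitude(c: Coeffs, fs: float, f: float) -> float:
    """Linear gain |H| of the biquad at frequency f (Hz)."""
    b0, b1, b2, a0, a1, a2 = c
    z1 = cmath.exp(-2j * math.pi * f / fs)  # z^-1 evaluated on the unit circle
    return abs((b0 + b1 * z1 + b2 * z1 * z1) / (a0 + a1 * z1 + a2 * z1 * z1))


if __name__ == "__main__":
    bass = low_shelf(48000.0, 200.0, 6.0)
    for f in (20.0, 200.0, 2000.0):
        print(f, magnitude(bass, 48000.0, f))
```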

<h3>Applications of the equalizer in the audio and music industry</h3>

<p>The equalizer is a powerful and practical tool in the audio and music industry. With an equalizer you can:</p>

<h4>Improve sound quality and adjust frequencies</h4>

<p>An equalizer lets you improve the quality of your sound and remove or reduce unwanted frequencies. For example, your sound may come across as harsh, muddy, dark, or flat; an equalizer can fix these problems and make it bright, clear, warm, and lively. You can also emphasize the frequencies that suit the type of music or your vocal style, and tailor your sound to your goal and your audience.</p>

<h4>Boost bass and treble and increase loudness</h4>

<p>An equalizer lets you boost the bass and treble of your sound and increase its volume. Bass refers to the low frequencies, which give a sense of depth, power, and weight. Treble refers to the high frequencies, which give a sense of detail, clarity, and brightness. Boosting bass and treble can make your sound fuller and more pleasant, and raising the volume makes it louder and stronger.</p>

<h4>Remove noise and audio interference</h4>

<p>An equalizer lets you remove or reduce noise and audio interference. Noise and interference can enter an audio signal for various reasons, such as a low-quality source, mismatched audio equipment, faulty cables, or poor speaker placement. These problems can make your sound come across with background noise, hiss, squeaks, or whistling. With an equalizer you can attenuate the frequencies where the noise and interference sit and make your sound clean, smooth, and free of disturbance.</p>
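When the noise sits at one known frequency, such as mains hum at 50 Hz or 60 Hz, the usual equalizer move is a narrow notch (band-reject) filter at that frequency. A minimal sketch using the Audio EQ Cookbook notch formulas, normalized so that a0 = 1 and applied sample by sample in direct form I, follows; the function names, the 50 Hz hum frequency, and Q = 10 are assumptions. Broadband noise such as hiss cannot be removed this way without also cutting wanted content at the same frequencies.

```python
import math
from typing import List, Sequence, Tuple

# (b0, b1, b2, a1, a2) with a0 normalized to 1
Biquad = Tuple[float, float, float, float, float]


def notch(fs: float, f0: float, q: float) -> Biquad:
    """Band-reject biquad centered on f0 (Hz); a higher q gives a narrower notch."""
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    c = -2 * math.cos(w0)
    return (1 / a0, c / a0, 1 / a0, c / a0, (1 - alpha) / a0)


def apply_biquad(coeffs: Biquad, x: Sequence[float]) -> List[float]:
    """Filter samples x with the biquad difference equation (direct form I)."""
    b0, b1, b2, a1, a2 = coeffs
    x1 = x2 = y1 = y2 = 0.0  # previous two inputs and outputs
    y: List[float] = []
    for xn in x:
        yn = b0 * xn + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y


if __name__ == "__main__":
    fs = 8000.0
    hum = [math.sin(2 * math.pi * 50.0 * n / fs) for n in range(16000)]
    cleaned = apply_biquad(notch(fs, 50.0, 10.0), hum)
    # After the start-up transient dies away, the 50 Hz tone is almost fully removed.
    print(max(abs(v) for v in cleaned[8000:]))
```

A tone well away from the notch, such as 1 kHz, passes through almost unchanged, which is why a narrow notch is preferred over a broad cut for hum.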

|

| 43 |

-

<h4>خلاقیت و تنوع در ساخت موسیقی و میکس</h4>

|

| 44 |

-

<p>با استفاده از اکولایزر میتوانید خلاقیت و تنوع خود را در ساخت موسیقی و میکس به نمایش بگذارید. با استفاده از اکولایزر میتوانید صدای خود را با تغییر فرکانسهای مختلف، تبدیل به صدای دیگری کنید. برای مثال، میتوانید صدای گیتار را به صدای پیانو تبدیل کنید، صدای خواننده را به صدای ربات تبدیل کنید، صدای طبیعت را به صدای فضایی تبدیل کنید و غیره. با استفاده از اکولایزر میتوانید صدای خود را با سبکها و ژانرهای مختلف موسیقی هماهنگ کنید و آثار جدید و منحصر به فرد خلق کنید.</p>

|

| 45 |

-

<h2>بهترین اکولایزرهای صوتی برای ویندوز 10 در سال 2023</h2>

|

| 46 |

-

<p>اگر شما هم دوست دارید که صدای خود را با استفاده از اکولایزر بهینه کنید، ممکن است به دنبال بهترین اکولایزرهای صوتی برای ویندوز 10 در سال 2023 باشید. در این بخش به شما سه نمونه از این اکولایزرها را معرفی خواهیم کرد که همگی دارای ویژگیها و قابلیتهای منحصر به فرد هستند. این سه نمونه عبارتند از:</p>

|

| 47 |

-

<h3>Equalizer APO</h3>

|

| 48 |

-

<p>Equalizer APO یک اکولایزر پارامتریک حرفهای است که به شما اجازه میدهد که تقریبا هر نوع تغییرات را بر روی صدای خود اعمال کنید. این اکولایزر دارای 20 باند قابل تغ <p>ییر است و شما میتوانید فرکانس، عرض و سطح هر باند را به دلخواه خود تنظیم کنید. همچنین میتوانید از افکتهای مختلفی مانند ریورب، کمپرسور، لیمیتر، گیت و غیره استفاده کنید. این اکولایزر با تمام برنامهها و دستگاههای صوتی سازگار است و به راحتی قابل نصب و تنظیم است. این اکولایزر را میتوانید از <a href="">این لینک</a> دانلود کنید.</p>

|

| 49 |

-

<h3>Viper4Windows</h3>

|

| 50 |

-

<p>Viper4Windows یک اکولایزر گرافیکی پیشرفته است که به شما اجازه میدهد که صدای خود را با استفاده از 18 باند قابل تغییر، تقویت کنید. این اکولایزر دارای حالتهای مختلفی مانند موزیک، فیلم، بازی، صدای زنده و غیره است که شما میتوانید بسته به نوع فعالیت خود از آنها انتخاب کنید. همچنین میتوانید از قابلیتهای دیگری مانند سوراند ساند، باس بوست، کلاریتی، کورکشن و غیره استفاده کنید. این اکولایزر با تمام برنامهها و دستگاههای صوتی سازگار است و به راحتی قابل نصب و تنظیم است. این اکولایزر را میتوانید از <a href="">این لینک</a> دانلود کنید.</p>

|

| 51 |

-

<h3>Equalizer Pro</h3>

|

| 52 |

-

<p>Equalizer Pro یک اکولایزر گرافیکی حرفهای است که به شما اجازه میدهد که صدای خود را با استفاده از 10 باند قابل تغییر، بهبود بخشید. این اکولایزر دارای حالتهای مختلفی مانند پاپ، راک، جاز، کلاسیک و غیره است که شما میتوانید بسته به نوع موسیقی خود از آنها استفاده کنید. همچنین میتوانید از قابلیتهای د <p>یگری مانند بالانس، پریآمپ، لودنس و غیره استفاده کنید. این اکولایزر با تمام برنامهها و دستگاههای صوتی سازگار است و به راحتی قابل نصب و تنظیم است. این اکولایزر را میتوانید از <a href="">این لینک</a> دانلود کنید.</p>

|

| 53 |

-

<h2>Conclusion and frequently asked questions</h2>

|

| 54 |

-

<p>In this article you learned about the equalizer and its uses. An equalizer is a type of audio filter that lets you change the level of different frequencies in an audio signal. With an equalizer you can improve the quality of your sound, boost bass and treble, reduce noise and interference, and show your creativity and range in music production and mixing. We also introduced three of the best audio equalizers for Windows 10 in 2023, each with its own distinctive features and capabilities. We hope you found this article useful and interesting. Finally, we answer five frequently asked questions about equalizers.</p>

|

| 55 |

-

<h3>Question 1: How can I turn the equalizer on or off?</h3>

|

| 56 |

-

<p>Answer: It depends on the type of equalizer and your audio device. Some equalizers have an on/off button or switch that you press to turn the equalizer on or off. Others require you to install and run software, which you open to enable or disable the equalizer. Some equalizers also turn on or off automatically by detecting the type of sound or music.</p>

|

| 57 |

-

<h3>Question 2: How can I adjust the equalizer?</h3>

|

| 58 |

-

<p>Answer: It depends on the type of equalizer and your taste. Some equalizers have presets that you can use depending on the type of sound or music. Others let you adjust your sound manually by changing the level, frequency, and width of the bands. When adjusting an equalizer, keep a few points in mind:</p>

|

| 59 |

-

<ul>

|

| 60 |

-

<li>Listen to your sound and identify which frequencies you like and which you don't.</li>

|

| 61 |

-

<li>Balance your sound by changing the band levels, and emphasize the frequencies you want.</li>

|

| 62 |

-

<li>Make your sound precise and clear by changing the frequency and width of the bands, and cut the frequencies you don't want.</li>

|

| 63 |

-

<li>Listen to your sound again and check the changes with different kinds of sound or music.</li>

|

| 64 |

-

<li>Listen to other people's opinions too, and make changes if necessary.</li>

|

| 65 |

-

</ul>

|

| 66 |

-

<h3>Question 3: How can I make the equalizer work with other audio devices?</h3>

|

| 67 |

-

<p>Answer: It depends on the type of equalizer and your audio device. Some equalizers sync with other audio devices automatically, so you don't need to configure them. Others require manual setup, and you have to connect them to other audio devices using cables, ports, Bluetooth, or Wi-Fi. To make an equalizer work with other audio devices, keep a few points in mind:</p>

|

| 68 |

-

<ul>

|

| 69 |

-

<li>Check the type and model of the equalizer and the other audio devices, and make sure they are compatible.</li>

|

| 70 |

-

<li>Consult the user manuals of the equalizer and the other audio devices, and follow the steps needed to connect them.</li>

|

| 71 |

-

<li>Check the settings of the equalizer and the other audio devices, and make sure they are in the correct mode.</li>

|

| 72 |

-

<li>Listen to the output, and if necessary adjust the equalizer or the other audio devices.</li>

|

| 73 |

-

</ul>

|

| 74 |

-

<h3>Question 4: How can I use the equalizer with my smartphone?</h3>

|

| 75 |

-

<p>Answer: It depends on the type and model of your smartphone. Some smartphones have a simple equalizer in their sound settings that you can use to change your sound. Others require you to install and run an equalizer app, which lets you adjust your sound more precisely. Some smartphones can also connect to external equalizers, such as Bluetooth or Wi-Fi equalizers. To use an equalizer with your smartphone, keep a few points in mind:</p>

|

| 76 |

-

<ul>

|

| 77 |

-

<li>Check the type and model of your smartphone and equalizer, and make sure they are compatible.</li>

|

| 78 |

-

<li>Consult the user manuals of your smartphone and equalizer, and follow the steps needed to connect them.</li>

|

| 79 |

-

<li>Check the settings of your smartphone and equalizer, and make sure they are in the correct mode.</li>

|

| 80 |

-

<li>Listen to the output, and if necessary adjust your smartphone or equalizer.</li>

|

| 81 |

-

</ul>

|

| 82 |

-

<h3>Question 5: How can I use an equalizer without harming my hearing?</h3>

|

| 83 |

-

<p>Answer: It depends on how much and how you use the equalizer. Excessive or incorrect use of an equalizer may damage your hearing. To protect your hearing while using an equalizer, keep a few points in mind:</p>

|

| 84 |

-

<ul>

|

| 85 |

-

<li>Pay attention to the volume and make sure it stays within safe, comfortable limits. Listening at high volume for long periods may cause severe hearing loss.</li>

|

| 86 |

-

<li>Pay attention to the frequencies and make sure they stay within a safe, audible range. Heavily boosting very low or very high frequencies may disturb your hearing.</li>

|

| 87 |

-

<li>Pay attention to how you use the equalizer and make sure you use it appropriately for the type of sound or music. Using an equalizer needlessly or carelessly may degrade the quality and naturalness of the sound.</li>

|

| 88 |

-

<li>Pay attention to your general health and make sure you get enough rest, nutrition, and exercise. Your general health directly affects your hearing.</li>

|

| 89 |

-

</ul>

|

| 90 |

-

<p>We hope you found this article useful and interesting. If you have any other questions or comments about equalizers, let us know in the comments section. Thank you for reading.</p><br />

|

| 91 |

-

<br />

|

| 92 |

-

<br />


spaces/1phancelerku/anime-remove-background/Askies I 39m Sorry De Mthuda Mp3 Download Fakaza [Extra Quality].md

DELETED

|

@@ -1,90 +0,0 @@

|

|

| 1 |

-

<br />

|

| 2 |

-

<h1>Askies I'm Sorry by De Mthuda: A Hit Amapiano Song</h1>

|

| 3 |

-

<p>If you are a fan of South African music, especially the Amapiano genre, you have probably heard of Askies I'm Sorry by De Mthuda. This song is one of the latest releases from the talented record producer and DJ, featuring Just Bheki, Mkeyz, and Da Muziqal Chef. It is a catchy and upbeat tune that will make you want to dance and groove along. But who is De Mthuda, and what is Amapiano? And what does Fakaza have to do with it? In this article, we will answer these questions and more.</p>

|

| 4 |

-

<h2>askies i'm sorry de mthuda mp3 download fakaza</h2><br /><p><b><b>Download Zip</b> 🆓 <a href="https://jinyurl.com/2uNPVS">https://jinyurl.com/2uNPVS</a></b></p><br /><br />

|

| 5 |

-

<h2>Who is De Mthuda?</h2>

|

| 6 |

-

<h3>A brief biography of the South African record producer and DJ</h3>

|

| 7 |

-

<p>De Mthuda's real name is Mthuthuzeli Gift Khoza. He was born and raised in Vosloorus, a township east of Johannesburg. He started producing music in 2010 when he was still in high school, but he dropped out in grade 11 to pursue his music career. He was inspired by artists like Black Coffee, Oskido, DJ Tira, and DJ Fresh. He is best known for his singles Shesha Geza and John Wick, which were certified gold by RiSA and nominated for Record of the Year at the South African Music Awards. He has also collaborated with other Amapiano stars like Kabza De Small, Njelic, Daliwonga, Focalistic, and Sir Trill.</p>

|

| 8 |

-

<h3>His popular songs and albums</h3>

|

| 9 |

-

<p>De Mthuda has released several songs and albums that have made him one of the most prominent figures in the Amapiano scene. Some of his popular songs include:</p>

|

| 10 |

-

<ul>

|

| 11 |

-

<li>Emlanjeni</li>

|

| 12 |

-

<li>Lalela</li>

|

| 13 |

-

<li>Abekho Ready</li>

|

| 14 |

-

<li>Wamuhle</li>

|

| 15 |

-

<li>Mask Of Zorro</li>

|

| 16 |

-

<li>Umsholozi</li>

|

| 17 |

-

<li>Uyang'dlalisela</li>

|

| 18 |

-

<li>Ubizo</li>

|

| 19 |

-

<li>Sabela</li>

|

| 20 |

-

<li>Askies I'm Sorry</li>

|

| 21 |

-

</ul>

|

| 22 |

-

<p>Some of his popular albums include:</p>

|

| 23 |

-

<ul>

|

| 24 |

-

<li>Ace Of Spades (2020)</li>

|

| 25 |

-

<li>The Landlord (2021)</li>

|

| 26 |

-

<li>Story To Tell: Vol.2 (2022)</li>

|

| 27 |

-

</ul>

|

| 28 |

-

<h2>What is Amapiano?</h2>

|

| 29 |

-

<h3>The origin and history of the Amapiano genre</h3>

|

| 30 |

-

<p>Amapiano is a subgenre of house music that emerged in South Africa in the mid-2010s. It is a hybrid of deep house, jazz, lounge music, Kwaito, Tribal house, and R&B. It is characterized by synths, wide percussive basslines, low tempo rhythms, high-pitched piano melodies, log drums, vocals, and samples. The word Amapiano means "the pianos" in Zulu or Xhosa.</p>

|

| 31 |

-

<p>The origin and history of Amapiano are disputed, as there are various accounts of how it started and who created it. Some say it originated in Pretoria, where Bacardi, another house music subgenre, was popular. Others say it originated in Johannesburg, where townships like Soweto, Alexandra, Vosloorus, and Katlehong contributed to its development. Some of the pioneers and influencers of Amapiano include DJ Stokie, Kabza De Small, JazziDisciples, Vigro Deep</p> <h3>The characteristics and influences of Amapiano</h3>

|

| 32 |

-

<p>Amapiano is a versatile and diverse genre that can incorporate various elements and influences from other genres and cultures. Some of the characteristics and influences of Amapiano include:</p>

|

| 33 |

-

<ul>

|

| 34 |

-

<li>The use of piano as the main instrument, which gives the genre its name and distinctive sound. The piano can be played in different styles, such as jazz, classical, or gospel.</li>

|

| 35 |

-

<li>The use of percussion, such as congas, bongos, shakers, cowbells, and whistles, which adds rhythm and groove to the songs. The percussion can also be influenced by traditional African instruments, such as marimbas or djembes.</li>

|

| 36 |

-

<li>The use of vocals, which can be sung, rapped, or spoken. The vocals can be in different languages, such as English, Zulu, Xhosa, Sotho, or Swahili. The vocals can also be sampled from other songs, movies, or speeches.</li>

|

| 37 |

-

<li>The use of synths, which can create different effects and atmospheres. The synths can be influenced by electronic music genres, such as techno, trance, or dubstep.</li>

|

| 38 |

-

<li>The use of basslines, which can be deep, heavy, or funky. The basslines can be influenced by house music genres, such as deep house, soulful house, or tribal house.</li>

|

| 39 |

-

</ul>

|

| 40 |

-

<h3>The popularity and impact of Amapiano</h3>

|

| 41 |

-

<p>Amapiano has become one of the most popular and influential genres in South Africa and beyond. It has gained a huge following across different age groups, social classes, and regions. It has also spawned various subgenres and styles, such as Yanos (a more commercial and mainstream version of Amapiano), the Scorpion Kings sound (popularized by Kabza De Small and DJ Maphorisa, who perform together as Scorpion Kings), Private School Piano (a more sophisticated and refined version of Amapiano), and Bacardi Piano (a fusion of Amapiano and Bacardi).</p>

|

| 42 |

-

<p>Amapiano has also crossed borders and reached international audiences. It has been featured on global platforms and media outlets, such as Spotify, Apple Music, BBC Radio 1Xtra, Boiler Room, Mixmag, The Guardian, and The New York Times. It has also inspired and collaborated with artists from other countries and continents, such as Nigeria (Burna Boy, Wizkid), Ghana (Stonebwoy), Kenya (Sauti Sol), Tanzania (Diamond Platnumz), France (David Guetta), UK (Jorja Smith), USA (Drake), and Brazil (Anitta).</p>

|

| 43 |

-

<h2>What is Fakaza?</h2>

|

| 44 |

-

<h3>The meaning and origin of the word Fakaza</h3>

|

| 45 |

-

<p>Fakaza is a Zulu word that means "to share" or "to distribute". It is also the name of a popular South African music site that allows users to download and stream various genres of music for free. The site was founded in 2016 by a group of music enthusiasts who wanted to provide a platform for local artists to showcase their talent and reach a wider audience.</p>

|

| 46 |

-

<h3>The features and benefits of the Fakaza music site</h3>

|

| 47 |

-

<p>Fakaza is one of the best sources for finding and downloading South African music online. Some of the features and benefits of the Fakaza music site include:</p>

|

| 48 |

-

<p></p>

|

| 49 |

-

<ul>

|

| 50 |

-

<li>It offers a wide range of music genres, such as Amapiano, Afro House, Gqom, Kwaito, Hip Hop, R&B, Gospel, and more.</li>

|

| 51 |

-

<li>It updates its content regularly, adding new songs and albums every day.</li>

|

| 52 |

-

<li>It provides high-quality audio files, with different formats and bitrates to choose from.</li>

|

| 53 |

-

<li>It allows users to download music for offline listening, without any registration or subscription required.</li>

|

| 54 |

-

<li>It supports multiple devices and platforms, such as desktops, laptops, smartphones, tablets, and browsers.</li>

|

| 55 |

-

<li>It has a user-friendly and responsive interface, with easy navigation and search functions.</li>

|

| 56 |

-

<li>It respects the rights and royalties of the artists and labels, and complies with the DMCA regulations.</li>

|

| 57 |

-

</ul>

|

| 58 |

-

<h3>How to download Askies I'm Sorry by De Mthuda from Fakaza</h3>

|

| 59 |

-

<p>If you want to download Askies I'm Sorry by De Mthuda from Fakaza, you can follow these simple steps:</p>

|

| 60 |

-

<ol>

|

| 61 |

-

<li>Go to the Fakaza website at <a href="">https://fakaza.com/</a>.</li>

|

| 62 |

-

<li>Type "Askies I'm Sorry" in the search box and press enter.</li>

|

| 63 |

-

<li>Select the song from the list of results and click on it.</li>

|

| 64 |

-

<li>Scroll down to the bottom of the page and click on the "Download Mp3" button.</li>

|

| 65 |

-

<li>Choose the format and bitrate you prefer and click on it.</li>

|

| 66 |

-

<li>Wait for the download to start and complete.</li>

|

| 67 |

-

<li>Enjoy listening to Askies I'm Sorry by De Mthuda on your device.</li>

|

| 68 |

-

</ol>

|

| 69 |

-

<h2>Conclusion</h2>

|

| 70 |

-

<p>In conclusion, Askies I'm Sorry by De Mthuda is a hit Amapiano song that you should not miss. It showcases the talent and creativity of De Mthuda, one of the leading record producers and DJs in South Africa. It also represents the Amapiano genre, which is a unique and vibrant style of house music that has taken over the country and the world. And if you want to download this song for free, you can use Fakaza, which is a reliable and convenient music site that offers a variety of genres and songs. So what are you waiting for? Go ahead and download Askies I'm Sorry by De Mthuda from Fakaza today!</p>

|

| 71 |

-

<h2>FAQs</h2>

|

| 72 |

-

<h4>What does Askies mean?</h4>

|

| 73 |

-

<p>Askies is a slang word that means "sorry" or "excuse me" in South African English. It is derived from the Afrikaans word "ekskuus", which means "excuse".</p>

|

| 74 |

-

<h4>Who sings Askies I'm Sorry by De Mthuda?</h4>

|

| 75 |

-

<p>Askies I'm Sorry by De Mthuda features three vocalists: Just Bheki, Mkeyz, and Da Muziqal Chef. They are all South African singers who have worked with De Mthuda before on other songs.</p>

|

| 76 |

-

<h4>Where can I listen to Askies I'm Sorry by De Mthuda?</h4>

|

| 77 |

-

<p>You can listen to Askies I'm Sorry by De Mthuda on various streaming platforms, such as YouTube, Spotify, Apple Music, Deezer, SoundCloud, Audiomack, and more. You can also download it from Fakaza or other music sites.</p>

|

| 78 |

-

<h4>What are some other Amapiano songs that I should check out?</h4>

|

| 79 |

-

<p>Some other Amapiano songs that you should check out include:</p>

|

| 80 |

-

<ul>

|

| 81 |

-

<li>Vula Mlomo by Musa Keys</li>

|

| 82 |

-

<li>Khuza Gogo by DBN Gogo</li>

|

| 83 |

-

<li>Liyoshona by Kwiish SA</li>

|

| 84 |

-

<li>Catalia by Junior De Rocka</li>

|

| 85 |

-

<li>Ntyilo Ntyilo by Rethabile Khumalo</li>

|

| 86 |

-

</ul>

|

| 87 |

-

<h4>How can I learn more about De Mthuda and Amapiano?</h4>

|

| 88 |

-

<p>You can learn more about De Mthuda and Amapiano by following them on social media platforms, such as Facebook, Twitter, Instagram, TikTok, and more. You can also visit their official websites or blogs, where they post updates and news about their music. You can also watch interviews or documentaries about them on YouTube or other video sites.</p><br />

|

| 89 |

-

<br />

|

| 90 |

-

<br />


spaces/1phancelerku/anime-remove-background/Brawl Stars Hack APK 2022 A Simple Guide to Install and Use.md

DELETED

|

@@ -1,121 +0,0 @@

|

|

| 1 |

-

<br />

|

| 2 |

-

<h1>Brawl Stars Hack APK Download 2022: How to Get Unlimited Gems and Coins</h1>

|

| 3 |

-

<p>If you are a fan of Brawl Stars, you probably know how important gems and coins are in this game. Gems and coins are the currencies that you need to unlock new brawlers, skins, power points, star powers, gadgets, and more. But how can you get enough gems and coins without spending a fortune? Is there a way to get unlimited gems and coins for free? In this article, we will tell you everything you need to know about Brawl Stars hack APK download 2022, the modded version of the game that claims to give you unlimited resources. But before we get into that, let's first take a look at what Brawl Stars is and why it is so popular.</p>

|

| 4 |

-

<h2>What is Brawl Stars?</h2>

|

| 5 |

-

<p>Brawl Stars is a mobile twin-stick shooter game developed by Supercell, the makers of Clash of Clans and Clash Royale. In this game, you can choose from over 20 different brawlers, each with their own unique abilities and weapons, and compete in various game modes with other players from around the world. You can team up with your friends or play solo, depending on your preference.</p>

|

| 6 |

-

<h2>brawl stars hack apk download 2022</h2><br /><p><b><b>Download Zip</b> ✵✵✵ <a href="https://jinyurl.com/2uNUvn">https://jinyurl.com/2uNUvn</a></b></p><br /><br />

|

| 7 |

-

<h3>A fast-paced multiplayer shooter game</h3>

|

| 8 |

-

<p>Brawl Stars is a game that is easy to pick up and play, but hard to master. The matches are short and intense, lasting for only three minutes or less. You have to use your skills, strategy, and teamwork to defeat your enemies and win the game. You can also customize your brawlers with different skins and pins to show off your personality.</p>

|

| 9 |

-

<h3>Features of Brawl Stars</h3>

|

| 10 |

-

<p>Brawl Stars has many features that make it an exciting and diverse game. Here are some of them:</p>

|

| 11 |

-

<h4>Different game modes</h4>

|

| 12 |

-

<p>Brawl Stars has several game modes that you can choose from, each with a different objective and rules. Some of the game modes are:</p>

|

| 13 |

-

<ul>

|

| 14 |

-

<li>Gem Grab (3v3): Collect and hold 10 gems to win, but don't let the enemy team take them from you.</li>

|

| 15 |

-

<li>Showdown (Solo/Duo): A battle royale style mode where you have to be the last brawler standing.</li>

|

| 16 |

-

<li>Brawl Ball (3v3): A soccer-like mode where you have to score two goals before the enemy team.</li>

|

| 17 |

-

<li>Bounty (3v3): Eliminate opponents to earn stars, but don't let them kill you.</li>

|

| 18 |

-

<li>Heist (3v3): Attack the enemy's safe or defend your own from being destroyed.</li>

|

| 19 |

-

<li>Special Events: Limited time modes that offer unique challenges and rewards.</li>

|

| 20 |

-

</ul>

|

| 21 |

-

<h4>Unique brawlers and skins</h4>

|

| 22 |

-

<p>Brawl Stars has a diverse roster of brawlers that you can unlock and play with. Each brawler belongs to a class, such as fighter, sharpshooter, heavyweight, thrower, support, or assassin, and has a rarity, such as common, rare, super rare, epic, mythic, legendary, or chromatic. The higher the rarity, the harder it is to get the brawler. You can also unlock different skins for your brawlers, some of which are exclusive to certain events or seasons.</p>

|

| 23 |

-

<h4>Brawl pass and rewards</h4>

|

| 24 |

-

<p>Brawl Stars has a seasonal system called the brawl pass, which allows you to earn rewards by playing the game and completing quests. The brawl pass has two tracks: the free track and the premium track. The free track gives you basic rewards, such as coins, power points, and brawl boxes. The premium track gives you more rewards, such as gems, skins, pins, and exclusive brawlers. You can access the premium track by buying the brawl pass with gems.</p>

|

| 25 |

-

<p>brawl stars cheat apk unlimited gems 2022<br />

|

| 26 |

-

brawl stars mod apk latest version 2022 download<br />

|

| 27 |

-

brawl stars hack apk android no root 2022<br />

|

| 28 |

-

brawl stars free gems generator apk download 2022<br />

|

| 29 |

-

brawl stars hack apk ios download 2022<br />

|

| 30 |

-

brawl stars mod menu apk 2022 download<br />

|

| 31 |

-

brawl stars hack apk online 2022<br />

|

| 32 |

-

brawl stars hack apk unlimited money 2022<br />

|

| 33 |

-

brawl stars hack apk no verification 2022<br />

|

| 34 |

-

brawl stars hack apk no human verification 2022<br />

|

| 35 |

-

brawl stars hack apk no survey 2022<br />

|

| 36 |

-

brawl stars hack apk no password 2022<br />

|

| 37 |

-

brawl stars hack apk no ban 2022<br />

|

| 38 |

-

brawl stars hack apk no update 2022<br />

|

| 39 |

-

brawl stars hack apk working 2022<br />

|

| 40 |

-

brawl stars hack apk easy download 2022<br />

|

| 41 |

-

brawl stars hack apk free download 2022<br />

|

| 42 |

-

brawl stars hack apk direct download 2022<br />

|

| 43 |

-

brawl stars hack apk mediafire download 2022<br />

|

| 44 |

-

brawl stars hack apk mega download 2022<br />

|

| 45 |

-

brawl stars hack apk zippyshare download 2022<br />

|

| 46 |

-

brawl stars hack apk google drive download 2022<br />

|

| 47 |

-

brawl stars hack apk dropbox download 2022<br />

|

| 48 |

-

brawl stars hack apk file download 2022<br />

|

| 49 |

-

brawl stars hack apk link download 2022<br />

|

| 50 |

-

brawl stars hack tool apk download 2022<br />

|

| 51 |

-

brawl stars hack app apk download 2022<br />

|

| 52 |

-

brawl stars hack game apk download 2022<br />

|

| 53 |

-

brawl stars hacked version apk download 2022<br />

|

| 54 |

-

brawl stars private server apk download 2022<br />

|

| 55 |

-

brawl stars unlimited coins and gems apk download 2022<br />

|

| 56 |

-

brawl stars modded apk download 2022<br />

|

| 57 |

-

brawl stars cracked apk download 2022<br />

|

| 58 |

-

brawl stars premium apk download 2022<br />

|

| 59 |

-

brawl stars pro apk download 2022<br />

|

| 60 |

-

brawl stars vip apk download 2022<br />

|

| 61 |

-

brawl stars full unlocked apk download 2022<br />

|

| 62 |

-

brawl stars all brawlers unlocked apk download 2022<br />

|

| 63 |

-

brawl stars all skins unlocked apk download 2022<br />

|

| 64 |

-

brawl stars all characters unlocked apk download 2022<br />

|

| 65 |

-

brawl stars all modes unlocked apk download 2022<br />

|

| 66 |

-

brawl stars all maps unlocked apk download 2022<br />

|

| 67 |

-

brawl stars all events unlocked apk download 2022<br />

|

| 68 |

-

brawl stars all rewards unlocked apk download 2022<br />

|

| 69 |

-

brawl stars all achievements unlocked apk download 2022<br />

|

| 70 |

-

how to download brawl stars hack apk for free in 2022</p>

|

| 71 |

-

<h2>Why do you need gems and coins in Brawl Stars?</h2>

|

| 72 |

-

<p>Gems and coins are the two main currencies in Brawl Stars that you need to progress in the game and unlock more content. Here is why they are important:</p>

|

| 73 |

-

<h3>Gems are the premium currency</h3>

|

| 74 |

-

<p>Gems are the rarest and most valuable currency in Brawl Stars. You can use gems to buy various items in the shop, such as:</p>

|

| 75 |

-

<ul>

|

| 76 |

-

<li>Brawl boxes: These are loot boxes that contain random rewards, such as brawlers, power points, coins, star powers, gadgets, or tokens.</li>

|

| 77 |

-

<li>Skins: These are cosmetic items that change the appearance of your brawlers.</li>

|

| 78 |

-

<li>Power points: These are items that increase the level of your brawlers.</li>

|

| 79 |

-

<li>Star powers and gadgets: These are special abilities that enhance your brawlers' performance.</li>

|

| 80 |

-

<li>Brawl pass: This is the seasonal pass that gives you access to the premium track of rewards.</li>

|

| 81 |

-

<li>Offers: These are special deals that give you discounts or bundles of items.</li>

|

| 82 |

-

</ul>

|

| 83 |

-

<h3>Coins are the main currency</h3>

|

| 84 |

-

<p>Coins are the most common and basic currency in Brawl Stars. You can use coins to upgrade your brawlers and unlock their star powers and gadgets. Upgrading your brawlers increases their health, damage, and super charge rate. Unlocking their star powers and gadgets gives them extra abilities that can change the outcome of a match. You can also use coins to buy power points in the shop.</p>

|

| 85 |

-

<h2>How to get gems and coins in Brawl Stars?</h2>

|

| 86 |

-

<p>There are two ways to get gems and coins in Brawl Stars: the legit way and the hack way. Let's see what they are:</p>

|

| 87 |

-

<h3>The legit way</h3>

|

| 88 |

-

<p>The legit way to get gems and coins in Brawl Stars is to play the game and earn them through various means. Some of the legit ways are:</p>

|

| 89 |

-

<h4>Play the game and complete quests</h4>

|

| 90 |

-

<p>The simplest way to get gems and coins is to play the game and win matches. You will earn tokens for every match you play, which you can use to open brawl boxes. Brawl boxes contain random rewards, such as gems, coins, power points, or brawlers. You can also complete quests that give you tokens or other rewards. Quests are tasks that require you to do something specific in the game, such as winning a certain number of matches with a specific brawler or mode.</p>

|

| 91 |

-

<h4>Open brawl boxes and get lucky</h4>

|

| 92 |

-

<p>Another way to get gems and coins is to open brawl boxes and hope for the best. Brawl boxes have a chance to contain gems or coins, along with other items. The chance of getting gems or coins depends on the type of brawl box you open. There are three types of brawl boxes: normal brawl boxes, big boxes, and mega boxes. Normal brawl boxes cost 100 tokens to open and have a 9% chance of containing gems or coins. Big boxes cost 300 tokens or 30 gems to open and have a 27% chance of containing gems or coins. Mega boxes cost 1500 tokens or 80 gems to open and offer the best odds of the three.</p>

|

| 93 |

-

<h4>Spend real money in the shop</h4>

|

| 94 |

-

<p>The last way to get gems and coins is to spend real money in the shop. You can buy gems with real money and use them to buy coins or other items in the shop. You can also buy offers that give you bundles of gems, coins, power points, or brawlers at a discounted price. However, this method is not free and may not be affordable for everyone.</p>

|

| 95 |

-

<h3>The hack way</h3>

|

| 96 |

-

<p>The hack way to get gems and coins in Brawl Stars is to download a modded APK file that gives you unlimited resources. A modded APK file is a modified version of the original game file that has been altered to give you some advantages or features that are not available in the official game. For example, a Brawl Stars hack APK may give you unlimited gems and coins, unlock all brawlers and skins, or enable cheats such as auto-aim or god mode.</p>

|

| 97 |

-

<h4>Download a modded APK file that gives you unlimited resources</h4>

|

| 98 |

-

<p>To use a Brawl Stars hack APK, you need to download it from a third-party website that offers such files. You can search for "Brawl Stars hack APK download 2022" on Google or any other search engine and find many websites that claim to provide such files. However, you need to be careful and cautious when downloading such files, as they may not be safe or reliable. Here are some of the risks of using a hack APK for Brawl Stars:</p>

|

| 99 |

-

<h2>What are the risks of using a hack APK for Brawl Stars?</h2>

|

| 100 |

-

<p>Using a hack APK for Brawl Stars may seem tempting and appealing, but it is not worth it in the long run. Here are some of the reasons why you should avoid using a hack APK for Brawl Stars:</p>

|

| 101 |

-

<h3>It may not work or be outdated</h3>

|

| 102 |

-

<p>One of the main problems with using a hack APK for Brawl Stars is that it may not work or may be outdated. Hack APKs are not official or authorized by Supercell, the developer of Brawl Stars, so they are often incompatible with the latest version of the game or its servers. This means that you may not be able to play the game properly or access all of its features and content. You may also encounter errors, glitches, bugs, or crashes that ruin your gaming experience.</p>

|

| 103 |

-

<h3>It may contain malware or viruses</h3>

|

| 104 |

-

<p>Another problem with using a hack APK for Brawl Stars is that it may contain malware or viruses that can harm your device or steal your personal information. Hack APKs are not verified or tested by any trusted source, so you cannot be sure what they contain or what they do. They may have hidden codes or programs that can infect your device with malware or viruses that can damage your system, delete your files, spy on your activities, or steal your data. You may also expose yourself to hackers or scammers who can access your account or device and use them for malicious purposes.</p>

|

| 105 |

-

<h3>It may get your account banned or suspended</h3>

|

| 106 |

-