Commit 231f332 · Parent(s): 9225a2f

update README

- README.assets/image-20240411203604268.png +3 -0
- README.md +86 -1

README.assets/image-20240411203604268.png · ADDED (stored with Git LFS)

README.md · CHANGED

@@ -8,7 +8,9 @@ size_categories:

|

|

| 8 |

|

| 9 |

# Brogue Map Dataset

|

| 10 |

|

| 11 |

-

|

|

|

|

|

|

|

| 12 |

|

| 13 |

Each map is stored in a `.csv` file. The map is a `(32x32)` array, which is the map size.

|

| 14 |

|

|

@@ -31,3 +33,86 @@ Each cell in the array is a `int` number ranged from 0 to 13, which represented

|

|

| 31 |

"G_FIRE": 12,

|

| 32 |

"G_BRIDGE": 13

|

| 33 |

```

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 8 |

|

| 9 |

# Brogue Map Dataset

|

| 10 |

|

| 11 |

+

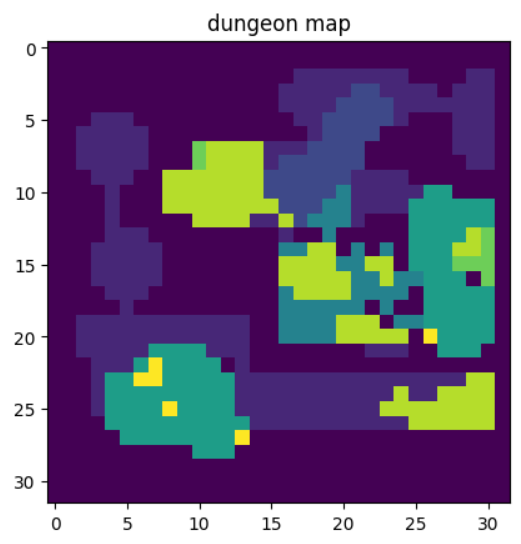

## 1. Data Explanation

|

| 12 |

+

|

| 13 |

+

This is the Map dataset from the open-sourced game [Brogue](https://github.com/tmewett/BrogueCE). It contains 40,000 train dataset, 10,000 test dataset and 10,000 validation dataset.

|

| 14 |

|

| 15 |

Each map is stored in a `.csv` file. The map is a `(32x32)` array, which is the map size.

|

| 16 |

|

|

|

|

"G_FIRE": 12,
"G_BRIDGE": 13
```

An example map data point looks like this:

```
0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0
0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0
0,0,0,1,1,1,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0
0,0,1,1,1,8,8,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0
0,1,1,1,8,8,8,0,0,0,0,1,1,1,0,0,0,0,0,0,0,0,0,0,0,0,0,0
0,1,1,8,8,8,8,0,0,0,1,1,1,1,1,0,1,0,0,0,0,0,0,0,0,0,0,0
0,1,1,1,8,8,0,0,0,1,1,1,1,1,1,1,1,0,0,1,0,0,0,0,0,0,0,0
0,1,1,1,1,1,0,0,0,1,1,1,1,1,1,1,1,0,1,8,0,0,1,1,1,1,0,0
0,1,1,1,1,1,0,0,0,1,1,1,1,1,1,1,0,0,1,1,1,1,1,1,1,1,1,0
0,0,1,1,1,0,0,0,0,0,1,1,1,1,1,0,0,0,1,1,1,1,1,1,1,1,1,0
0,0,0,0,1,0,0,0,0,0,0,1,1,1,0,0,0,0,1,1,1,1,0,0,1,1,1,9
0,0,0,1,1,1,0,0,0,0,0,0,0,0,0,0,0,0,1,1,1,0,0,0,0,1,1,0
0,0,1,1,1,1,1,0,0,0,0,0,0,1,1,1,1,0,0,0,1,0,0,0,0,0,0,0
0,0,1,1,1,1,1,0,0,0,0,0,0,1,1,1,1,1,1,1,1,1,0,0,0,0,0,0
0,1,1,1,1,1,1,0,0,0,0,0,0,1,1,1,1,0,1,1,1,1,0,0,0,0,0,0
0,1,8,1,1,1,1,1,0,0,0,0,0,0,1,0,0,0,0,0,0,0,0,0,0,0,1,0
0,1,8,8,8,8,1,8,0,0,0,0,1,8,1,1,0,0,0,0,0,0,0,0,0,1,1,1
0,0,8,8,8,8,8,8,0,0,0,8,8,8,8,8,1,0,0,0,1,1,0,0,0,1,1,1
0,0,1,8,8,8,8,8,8,0,1,8,8,8,8,8,1,0,0,0,1,1,0,0,0,0,1,1
0,0,0,1,8,8,8,8,8,0,1,1,1,8,8,1,0,0,0,0,1,1,0,1,0,1,1,1
0,0,0,8,8,8,8,8,8,1,1,1,1,8,1,1,0,0,0,0,1,1,1,1,0,1,1,0
0,0,0,8,8,8,8,1,0,0,0,3,1,0,1,0,0,0,0,0,0,1,1,1,0,1,1,0
0,0,0,0,8,8,8,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,1,1,0
0,0,0,1,1,0,0,0,0,0,0,0,0,0,11,1,1,1,1,1,1,1,1,1,1,1,0,0
0,1,1,1,8,1,0,0,0,0,0,0,0,0,11,11,11,1,1,1,1,1,1,1,1,1,1,0
0,0,1,1,1,1,0,0,0,0,0,0,0,0,11,11,0,0,1,1,0,0,1,1,1,1,1,1
0,0,0,1,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0
0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0
0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0
0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0
0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0
0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0
```
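Because each map file is plain comma-separated integers, a single map can be read with NumPy alone. The sketch below assumes a file without a header row, like the example above; `load_map_csv` and `NUM_TILE_TYPES` are hypothetical names, and the range check only enforces the 0 to 13 cell range stated in this README. The usage excerpt is the first four columns of rows 3 to 5 of the example.

```python
import io

import numpy as np

NUM_TILE_TYPES = 14  # assumption from the README: cell values range from 0 to 13


def load_map_csv(source):
    """Hypothetical helper: read one header-less map CSV into a 2-D int array.

    `source` can be a file path or a file-like object, as accepted by np.loadtxt.
    """
    grid = np.loadtxt(source, delimiter=",", dtype=np.int64, ndmin=2)
    # Reject values outside the documented tile-id range
    if grid.min() < 0 or grid.max() >= NUM_TILE_TYPES:
        raise ValueError(f"tile ids must be in [0, {NUM_TILE_TYPES - 1}]")
    return grid


# Usage with an in-memory excerpt instead of a real map file
excerpt = io.StringIO("0,0,0,1\n0,0,1,1\n0,1,1,1\n")
grid = load_map_csv(excerpt)
print(grid.shape)  # (3, 4)
```

For a real file, `load_map_csv("path/to/map.csv")` works the same way; the path here is only a placeholder.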

## 2. Data Processing

Hugging Face does not store the maps in their original per-map format. The code below rebuilds each map by splitting every split into consecutive blocks of 32 rows:

```python
from datasets import load_dataset
import numpy as np
import matplotlib.pyplot as plt

# Load the dataset from the Hugging Face Hub
dataset = load_dataset("DolphinNie/dungeon-dataset")


# The data stored by Hugging Face is not split into individual maps,
# so regroup every 32 consecutive rows into one map
def dataset_convert(dataset):
    dataset_train = list()
    dataset_test = list()
    dataset_valid = list()
    splits = [dataset_train, dataset_test, dataset_valid]
    names = ['train', 'test', 'validation']
    for i in range(3):
        # Number of complete 32-row maps in this split
        datapoint_num = dataset[names[i]].num_rows // 32
        dataset_df = dataset[names[i]].to_pandas()
        for n in range(datapoint_num):
            # Rows n*32 .. n*32+31 belong to map n
            env_map = dataset_df.iloc[n * 32:(n + 1) * 32].to_numpy()
            splits[i].append(env_map)
    return dataset_train, dataset_test, dataset_valid


dataset_train, dataset_test, dataset_valid = dataset_convert(dataset)


# Visualize the data points if you want
def visualize_map(dungeon_map):
    """
    Visualization of the map
    :param dungeon_map: the dungeon map representation (32x32 array of tile ids)
    :return: None
    """
    plt.imshow(dungeon_map, cmap='viridis', interpolation='nearest')
    plt.title('dungeon map')
    plt.show()


visualize_map(dataset_train[10000])
```

<img src="./README.assets/image-20240411203604268.png" alt="image-20240411203604268" style="zoom:50%;" />
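The loop in `dataset_convert` cuts each split into consecutive 32-row blocks. If a split is first turned into one 2-D NumPy array (for example with `dataset["train"].to_pandas().to_numpy()`, not tested against this dataset), the same regrouping is a single `reshape`. This is a sketch with a hypothetical `stack_to_maps` helper; unlike the loop, which silently drops a trailing partial block, it raises an error when the row count is not a multiple of 32.

```python
import numpy as np

MAP_SIZE = 32  # each map spans 32 consecutive rows, as assumed by dataset_convert


def stack_to_maps(rows):
    """Hypothetical helper: regroup a (num_maps * 32, width) table into (num_maps, 32, width).

    Assumes the rows of each map are contiguous and in order, the same
    assumption dataset_convert makes.
    """
    rows = np.asarray(rows)
    if rows.ndim != 2 or rows.shape[0] % MAP_SIZE != 0:
        raise ValueError("expected a 2-D table whose row count is a multiple of 32")
    # Row-major reshape: map k receives rows k*32 .. k*32+31
    return rows.reshape(-1, MAP_SIZE, rows.shape[1])


# Usage: two fake maps filled with different values so the split is easy to check
table = np.concatenate([np.zeros((32, 32), dtype=int), np.ones((32, 32), dtype=int)])
maps = stack_to_maps(table)
print(maps.shape)  # (2, 32, 32)
```

`reshape` returns a view when the input layout allows it, so this avoids building a separate object for every map.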

Note that this dataset stores each map as a two-dimensional array of tile ids, not as a three-dimensional one-hot representation. If you train a new model on it, you may need to process the data further, for example by one-hot encoding the tile ids.
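One way to build the three-dimensional one-hot representation mentioned above is to index an identity matrix with the map. The sketch assumes 14 classes (one per tile id from 0 to 13) and a channel-last layout; `to_one_hot` and `from_one_hot` are hypothetical helper names.

```python
import numpy as np

NUM_TILE_TYPES = 14  # assumption: one class per tile id 0..13


def to_one_hot(dungeon_map, num_classes=NUM_TILE_TYPES):
    """Turn an (H, W) array of tile ids into an (H, W, num_classes) one-hot array."""
    dungeon_map = np.asarray(dungeon_map, dtype=np.int64)
    # Negative ids would silently wrap around in the indexing below, so check first
    if dungeon_map.min() < 0 or dungeon_map.max() >= num_classes:
        raise ValueError(f"tile ids must be in [0, {num_classes - 1}]")
    # Row i of the identity matrix is the one-hot vector for class i,
    # so indexing with the whole map picks one row per cell
    return np.eye(num_classes, dtype=np.uint8)[dungeon_map]


def from_one_hot(one_hot):
    """Invert to_one_hot by taking the index of the 1 along the last axis."""
    return np.argmax(one_hot, axis=-1)


example = np.array([[0, 1, 8], [11, 3, 9]])
encoded = to_one_hot(example)
print(encoded.shape)  # (2, 3, 14)
```

For a framework that expects channels first, `np.moveaxis(encoded, -1, 0)` moves the class axis to the front; a full map then becomes a `(14, 32, 32)` array.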
