Core ML Converted Model:

- This model was converted to Core ML for use on Apple Silicon devices. Conversion instructions can be found here.

- Provide the model to an app such as Mochi Diffusion (GitHub / Discord) to generate images.

- `split_einsum` version is compatible with all compute unit options including Neural Engine.

- `original` version is only compatible with the `CPU & GPU` option.

- Custom resolution versions are tagged accordingly.

- The `vae-ft-mse-840000-ema-pruned.ckpt` VAE is embedded into the model.

- This model was converted with a `vae-encoder` for use with `image2image`.

- Descriptions are posted as-is from original model source.

- Not all features and/or results may be available in `CoreML` format.

- This model does not have the `unet` split into chunks.

- This model does not include a `safety checker` (for NSFW content).

- This model can be used with ControlNet.
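The compute unit compatibility described in the list above can be sketched as a small lookup. This is only an illustration: the helper `is_compatible` and the table `COMPATIBLE_COMPUTE_UNITS` are hypothetical names, and the option strings are assumed to mirror the member names of the coremltools `ComputeUnit` enum rather than the labels any particular app shows.

```python
# Hypothetical sketch: neither the table nor the helper is part of any library.
# Option names are an assumption, chosen to mirror coremltools' ComputeUnit members.
ALL_OPTIONS = {"CPU_ONLY", "CPU_AND_GPU", "CPU_AND_NE", "ALL"}

COMPATIBLE_COMPUTE_UNITS = {
    # split_einsum: all compute unit options, including Neural Engine
    "split_einsum": set(ALL_OPTIONS),
    # original: only the CPU & GPU option
    "original": {"CPU_AND_GPU"},
}


def is_compatible(variant: str, compute_unit: str) -> bool:
    """Return True if the model variant can run with the given compute unit option."""
    if variant not in COMPATIBLE_COMPUTE_UNITS:
        raise ValueError(f"unknown variant: {variant!r}")
    if compute_unit not in ALL_OPTIONS:
        raise ValueError(f"unknown compute unit option: {compute_unit!r}")
    return compute_unit in COMPATIBLE_COMPUTE_UNITS[variant]
```

For example, `is_compatible("original", "CPU_AND_NE")` is false under this table, which matches the note that the `original` version should be run with the CPU & GPU option.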

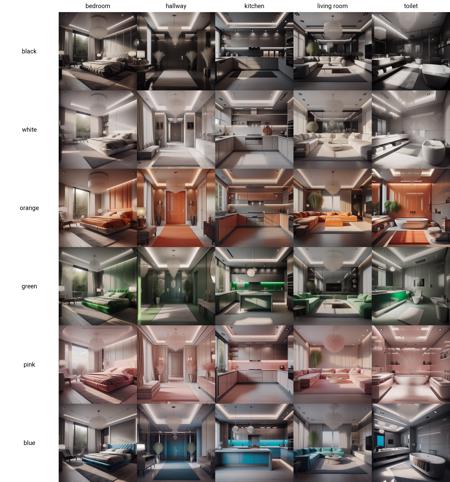

XSarchitectural-InteriorDesign-ForXSLora:

Source(s): CivitAI


Suggestions for use: LoRA, TI, VAE, and other files for interior scenes.

This model has corresponding LoRA models. If you wish to use them, please download them:

XSarchitectural-7Modern interior | Stable Diffusion LORA | Civitai

XSarchitectural-38InteriorForBedroom | Stable Diffusion LORA | Civitai

If you think it's good, please give it a five-star review.

If you have any questions, please contact me at [email protected]
