Update README.md

README.md

CHANGED

|

@@ -11,7 +11,7 @@ pipeline_tag: visual-question-answering

|

|

| 11 |

---

|

| 12 |

|

| 13 |

|

| 14 |

-

[📃Paper](https://arxiv.org/abs/2406.15252) | [🌐Website](https://tiger-ai-lab.github.io/VideoScore/) | [💻Github](https://github.com/TIGER-AI-Lab/VideoScore) | [🛢️Datasets](https://huggingface.co/datasets/TIGER-Lab/VideoFeedback) | [🤗Model (VideoScore)](https://huggingface.co/TIGER-Lab/VideoScore) | [🤗

|

| 15 |

|

| 16 |

|

| 17 |

|

|

@@ -20,7 +20,7 @@ pipeline_tag: visual-question-answering

|

|

| 20 |

- 🧐🧐[VideoScore-anno-only](https://huggingface.co/TIGER-Lab/VideoScore-anno-only) is a variant from [VideoScore](https://huggingface.co/TIGER-Lab/VideoScore), trained on VideoFeedback dataset

|

| 21 |

excluding the real videos.

|

| 22 |

|

| 23 |

-

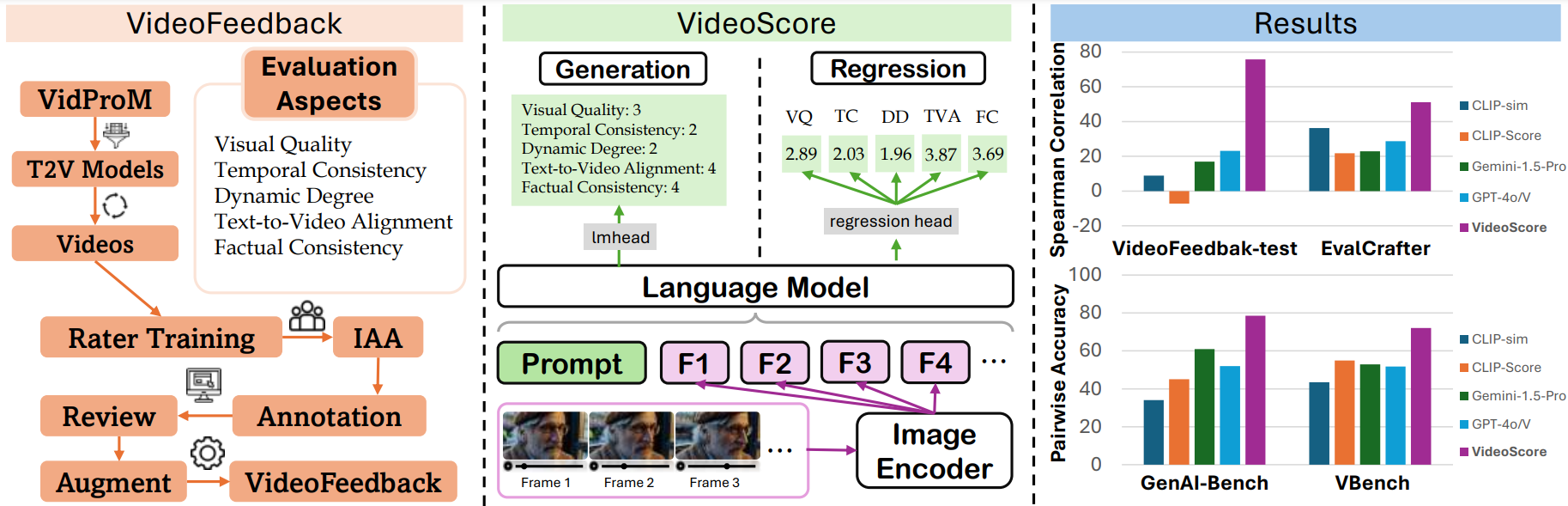

- [VideoScore](https://huggingface.co/TIGER-Lab/VideoScore) series is a video quality evaluation model series, taking [Mantis-8B-Idefics2](https://huggingface.co/TIGER-Lab/Mantis-8B-Idefics2) as base-model

|

| 24 |

and trained on [VideoFeedback](https://huggingface.co/datasets/TIGER-Lab/VideoFeedback),

|

| 25 |

a large video evaluation dataset with multi-aspect human scores.

|

| 26 |

|

|

@@ -49,8 +49,8 @@ The evaluation results are shown below:

|

|

| 49 |

|

| 50 |

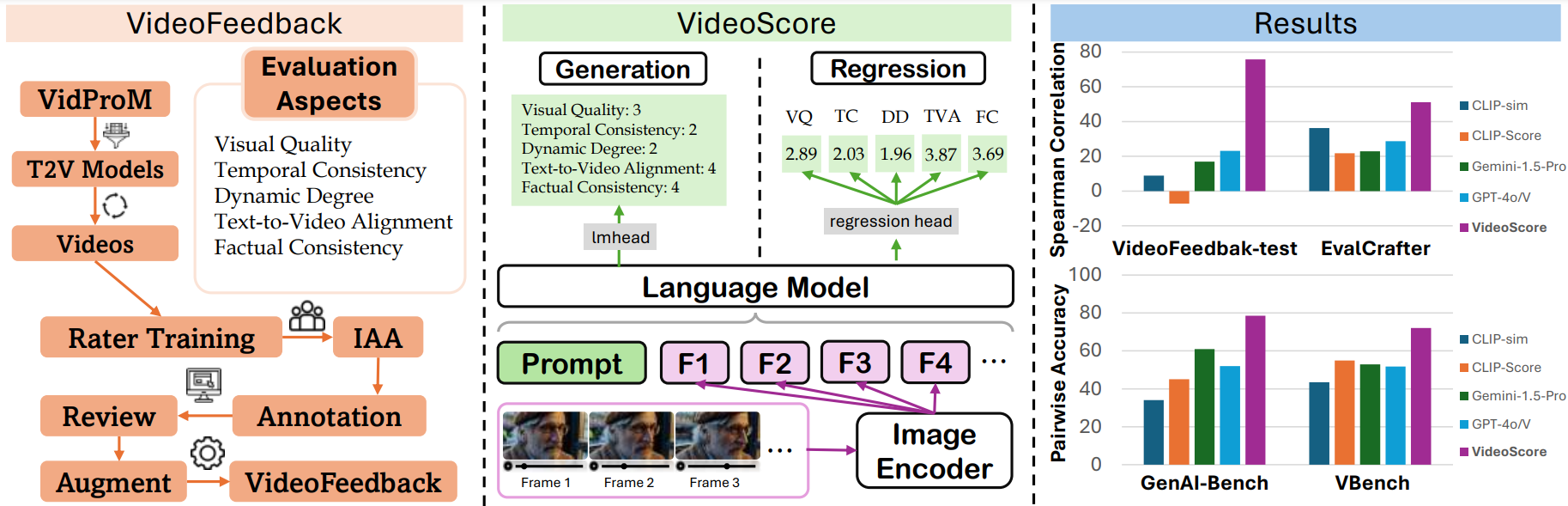

| metric | Final Avg Score | VideoFeedback-test | EvalCrafter | GenAI-Bench | VBench |

|

| 51 |

|:-----------------:|:--------------:|:--------------:|:-----------:|:-----------:|:----------:|

|

| 52 |

-

| VideoScore (reg)

|

| 53 |

-

| VideoScore (gen)

|

| 54 |

| Gemini-1.5-Pro | <u>39.7</u> | 22.1 | 22.9 | 60.9 | 52.9 |

|

| 55 |

| Gemini-1.5-Flash | 39.4 | 20.8 | 17.3 | <u>67.1</u> | 52.3 |

|

| 56 |

| GPT-4o | 38.9 | <u>23.1</u> | 28.7 | 52.0 | 51.7 |

|

|

|

|

| 11 |

---

|

| 12 |

|

| 13 |

|

| 14 |

+

[📃Paper](https://arxiv.org/abs/2406.15252) | [🌐Website](https://tiger-ai-lab.github.io/VideoScore/) | [💻Github](https://github.com/TIGER-AI-Lab/VideoScore) | [🛢️Datasets](https://huggingface.co/datasets/TIGER-Lab/VideoFeedback) | [🤗Model (VideoScore)](https://huggingface.co/TIGER-Lab/VideoScore) | [🤗Demo](https://huggingface.co/spaces/TIGER-Lab/VideoScore) | [📉Wandb (VideoScore-anno-only)](https://api.wandb.ai/links/xuanhe/4vs5k0cq)

|

| 15 |

|

| 16 |

|

| 17 |

|

|

|

|

| 20 |

- 🧐🧐[VideoScore-anno-only](https://huggingface.co/TIGER-Lab/VideoScore-anno-only) is a variant from [VideoScore](https://huggingface.co/TIGER-Lab/VideoScore), trained on VideoFeedback dataset

|

| 21 |

excluding the real videos.

|

| 22 |

|

| 23 |

+

- [VideoScore](https://huggingface.co/TIGER-Lab/VideoScore) series is a video quality evaluation model series, taking [Mantis-8B-Idefics2](https://huggingface.co/TIGER-Lab/Mantis-8B-Idefics2) or [Qwen/Qwen2-VL](https://huggingface.co/Qwen/Qwen2-VL-7B-Instruct) as base-model,

|

| 24 |

and trained on [VideoFeedback](https://huggingface.co/datasets/TIGER-Lab/VideoFeedback),

|

| 25 |

a large video evaluation dataset with multi-aspect human scores.

|

| 26 |

|

|

|

|

| 49 |

|

| 50 |

| metric | Final Avg Score | VideoFeedback-test | EvalCrafter | GenAI-Bench | VBench |

|

| 51 |

|:-----------------:|:--------------:|:--------------:|:-----------:|:-----------:|:----------:|

|

| 52 |

+

| VideoScore (reg) | **69.6** | 75.7 | **51.1** | **78.5** | **73.0** |

|

| 53 |

+

| VideoScore (gen) | 55.6 | **77.1** | 27.6 | 59.0 | 58.7 |

|

| 54 |

| Gemini-1.5-Pro | <u>39.7</u> | 22.1 | 22.9 | 60.9 | 52.9 |

|

| 55 |

| Gemini-1.5-Flash | 39.4 | 20.8 | 17.3 | <u>67.1</u> | 52.3 |

|

| 56 |

| GPT-4o | 38.9 | <u>23.1</u> | 28.7 | 52.0 | 51.7 |

|
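
The README does not say how the Final Avg Score column in the results table above is computed. The reported numbers are consistent with an unweighted mean of the four benchmark columns, rounded to one decimal place. The sketch below checks that reading against the rows shown in the diff; the formula is an assumption inferred from the numbers, and `RESULTS` and `final_avg` are illustrative names, not part of the VideoScore code base.

```python
from statistics import mean

# Values copied from the results table. Each entry is
# (reported Final Avg Score,
#  [VideoFeedback-test, EvalCrafter, GenAI-Bench, VBench]).
RESULTS = {
    "VideoScore (reg)": (69.6, [75.7, 51.1, 78.5, 73.0]),
    "VideoScore (gen)": (55.6, [77.1, 27.6, 59.0, 58.7]),
    "Gemini-1.5-Pro": (39.7, [22.1, 22.9, 60.9, 52.9]),
    "Gemini-1.5-Flash": (39.4, [20.8, 17.3, 67.1, 52.3]),
    "GPT-4o": (38.9, [23.1, 28.7, 52.0, 51.7]),
}


def final_avg(benchmark_scores):
    """Unweighted mean of the benchmark scores, rounded to one decimal.

    This formula is an assumption inferred from the table, not a
    definition taken from the README.
    """
    return round(mean(benchmark_scores), 1)


if __name__ == "__main__":
    for model, (reported, scores) in RESULTS.items():
        computed = final_avg(scores)
        status = "matches" if computed == reported else "differs"
        print(f"{model}: reported {reported}, computed {computed} ({status})")
```

If a future row does not match, the column is likely computed differently (for example, from unrounded per-benchmark scores), so treat this only as a sanity check on the table.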