---
license: cc-by-4.0
pipeline_tag: image-to-image
tags:
- pytorch
- super-resolution
---

[Link to GitHub Release](https://github.com/Phhofm/models/releases/tag/4xNomos2_hq_mosr)

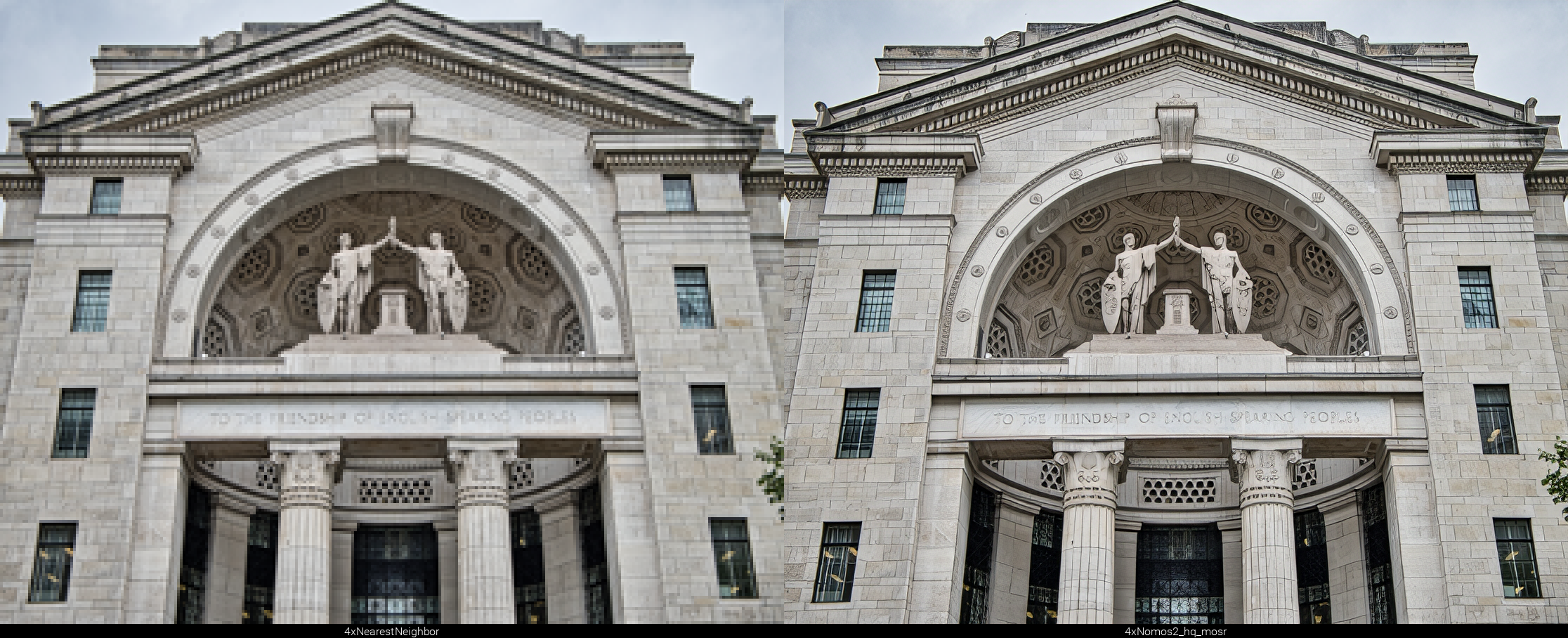

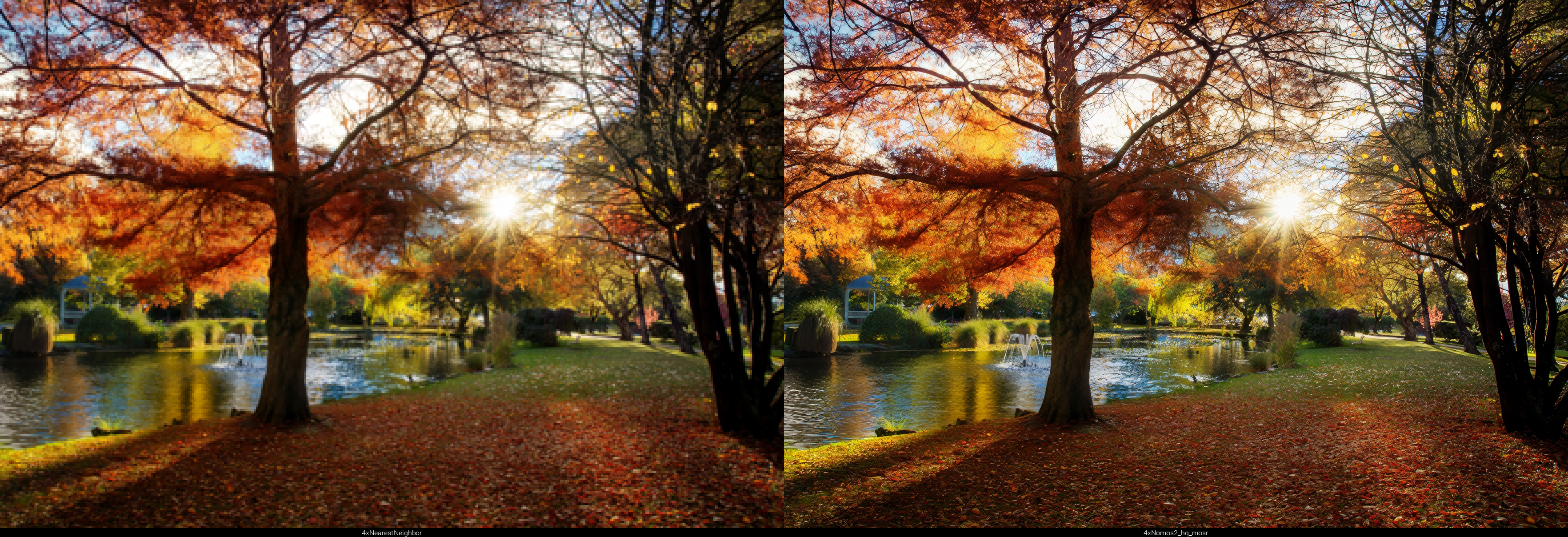

# 4xNomos2_hq_mosr

Scale: 4

Architecture: [MoSR](https://github.com/umzi2/MoSR)

Architecture Option: [mosr](https://github.com/umzi2/MoSR/blob/95c5bf73cca014493fe952c2fbc0bdbe593da08f/neosr/archs/mosr_arch.py#L117)

Author: Philip Hofmann

License: CC-BY-4.0

Purpose: Upscaler

Subject: Photography

Input Type: Images

Release Date: 25.08.2024

Dataset: [nomosv2](https://github.com/muslll/neosr/?tab=readme-ov-file#-datasets)

Dataset Size: 6000

OTF (on-the-fly augmentations): No

Pretrained Model: [4xmssim_mosr_pretrain](https://github.com/Phhofm/models/releases/tag/4xmssim_mosr_pretrain)

Iterations: 190'000

Batch Size: 6

Patch Size: 64

Description:

A 4x [MoSR](https://github.com/umzi2/MoSR) upscaling model meant for non-degraded input. It was trained on non-degraded images, so it gives good-quality output when the input is clean.

If your input is degraded, run it through a 1x restoration model first. For example, if your input is a compressed .jpg file, you could apply a 1x dejpg model before upscaling.
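The two-stage workflow above can be sketched as plain function composition. This is only an illustration under assumptions: each model is treated as a hypothetical callable that maps an HWC image array to an HWC image array, and `identity_1x` and `nearest_4x` are made-up stand-ins (not real models) so the sketch runs on its own. The shape checks encode what "1x" and "4x" mean: the restoration step keeps the resolution, the upscaler quadruples width and height.

```python
from typing import Callable

import numpy as np

# Hypothetical model interface: HWC image array in, HWC image array out.
Model = Callable[[np.ndarray], np.ndarray]


def restore_then_upscale(img: np.ndarray, restore_1x: Model, upscale_4x: Model) -> np.ndarray:
    """Run a 1x restoration model (e.g. a dejpg model), then a 4x upscaler."""
    h, w = img.shape[:2]
    cleaned = restore_1x(img)
    # A 1x model must not change the spatial resolution.
    if cleaned.shape[:2] != (h, w):
        raise ValueError(f"1x model changed size: {cleaned.shape[:2]} != {(h, w)}")
    out = upscale_4x(cleaned)
    # A 4x model must multiply both spatial dimensions by 4.
    if out.shape[:2] != (4 * h, 4 * w):
        raise ValueError(f"unexpected 4x output size: {out.shape[:2]}")
    return out


def identity_1x(img: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a 1x restoration model."""
    return img


def nearest_4x(img: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the 4x model: nearest-neighbour repetition."""
    return img.repeat(4, axis=0).repeat(4, axis=1)


if __name__ == "__main__":
    demo = np.zeros((16, 24, 3), dtype=np.uint8)
    print(restore_then_upscale(demo, identity_1x, nearest_4x).shape)  # (64, 96, 3)
```

In practice the two callables would wrap real inference calls (for example in chaiNNer or an ONNX runtime); the composition order is the point: clean first, then upscale.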

PS: I also provide an ONNX conversion in the release attachments. I verified that it produces correct output with chaiNNer:

<img src="https://github.com/user-attachments/assets/7f2be678-9d63-43e3-bfb6-59c94827a828" width=25% height=25%>
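For running the ONNX conversion outside chaiNNer, a minimal sketch with onnxruntime follows. The card does not document the exported model's tensor format, so this assumes what is common for PyTorch super-resolution exports: a single 1×3×H×W RGB input with values in [0, 1] (float32, or float16 if the export is half precision) and a single output in the same layout. The file name `4xNomos2_hq_mosr.onnx` is a hypothetical placeholder for whatever the attachment is called. Check the actual input/output signature (for example in Netron) before relying on it. Large images are not tiled here and may need a lot of memory.

```python
import numpy as np


def to_nchw(img_hwc_uint8: np.ndarray) -> np.ndarray:
    """HWC uint8 RGB image -> 1x3xHxW float32 tensor in [0, 1] (assumed model input)."""
    x = img_hwc_uint8.astype(np.float32) / 255.0
    return np.transpose(x, (2, 0, 1))[np.newaxis, ...]


def to_hwc_uint8(t_nchw: np.ndarray) -> np.ndarray:
    """1x3xHxW float tensor -> HWC uint8 image, clamping values outside [0, 1]."""
    x = np.clip(t_nchw[0].astype(np.float32), 0.0, 1.0)
    x = np.transpose(x, (1, 2, 0))
    return np.round(x * 255.0).astype(np.uint8)


def upscale_onnx(img_hwc_uint8: np.ndarray, model_path: str = "4xNomos2_hq_mosr.onnx") -> np.ndarray:
    """Run the (assumed single-input, single-output) ONNX model on one RGB image."""
    import onnxruntime as ort  # imported here so the helpers above work without it

    session = ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    inp = session.get_inputs()[0]
    # Match the export's precision instead of assuming float32.
    dtype = np.float16 if inp.type == "tensor(float16)" else np.float32
    outputs = session.run(None, {inp.name: to_nchw(img_hwc_uint8).astype(dtype)})
    return to_hwc_uint8(outputs[0])
```

If the assumptions hold, an H×W input should come back as a 4H×4W image.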

## Model Showcase:

[Slowpics](https://slow.pics/c/cqGJb0gT)

(Click on an image for a better view)
