diff --git a/.gitattributes b/.gitattributes

index a6344aac8c09253b3b630fb776ae94478aa0275b..5116dccd1b776ad634705271cf14f3b1737f2309 100644

--- a/.gitattributes

+++ b/.gitattributes

@@ -33,3 +33,56 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

*.zip filter=lfs diff=lfs merge=lfs -text

*.zst filter=lfs diff=lfs merge=lfs -text

*tfevents* filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/testA/11_3.png filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/testA/12_12.png filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/testA/13_8.png filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](12).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](188).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](192).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](197).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](215).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](231).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](238).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](254).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](281).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](288).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](302).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](307).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](310).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](329).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](33).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](374).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](392).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](41).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](445).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](449).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](454).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](512).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](517).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](529).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](533).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](54).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](565).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](576).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](587).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](642).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](648).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](670).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](672).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](732).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](751).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](757).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](759).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](825).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](834).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](845).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](846).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](849).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](880).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](884).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](90).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](902).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](93).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](940).jpg filter=lfs diff=lfs merge=lfs -text

+datasets/bw2color/trainB/a[[:space:]](95).jpg filter=lfs diff=lfs merge=lfs -text

+imgs/horse2zebra.gif filter=lfs diff=lfs merge=lfs -text

diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..0ea65dbceaae276ee7e2ca5025d04c891e49b8ea

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,46 @@

+.DS_Store

+debug*

+datasets/

+checkpoints/

+results/

+build/

+dist/

+*.png

+torch.egg-info/

+*/**/__pycache__

+torch/version.py

+torch/csrc/generic/TensorMethods.cpp

+torch/lib/*.so*

+torch/lib/*.dylib*

+torch/lib/*.h

+torch/lib/build

+torch/lib/tmp_install

+torch/lib/include

+torch/lib/torch_shm_manager

+torch/csrc/cudnn/cuDNN.cpp

+torch/csrc/nn/THNN.cwrap

+torch/csrc/nn/THNN.cpp

+torch/csrc/nn/THCUNN.cwrap

+torch/csrc/nn/THCUNN.cpp

+torch/csrc/nn/THNN_generic.cwrap

+torch/csrc/nn/THNN_generic.cpp

+torch/csrc/nn/THNN_generic.h

+docs/src/**/*

+test/data/legacy_modules.t7

+test/data/gpu_tensors.pt

+test/htmlcov

+test/.coverage

+*/*.pyc

+*/**/*.pyc

+*/**/**/*.pyc

+*/**/**/**/*.pyc

+*/**/**/**/**/*.pyc

+*/*.so*

+*/**/*.so*

+*/**/*.dylib*

+test/data/legacy_serialized.pt

+*~

+.idea

+

+#Ignore Wandb

+wandb/

diff --git a/.replit b/.replit

new file mode 100644

index 0000000000000000000000000000000000000000..94513bcb6de8effa36d8335fba26ff7b3d11a703

--- /dev/null

+++ b/.replit

@@ -0,0 +1,2 @@

+language = "python3"

+run = " [Tensorflow] (by Christopher Hesse), [Tensorflow] (by Eyyüb Sariu), [Tensorflow (face2face)] (by Dat Tran), [Tensorflow (film)] (by Arthur Juliani), [Tensorflow (zi2zi)] (by Yuchen Tian), [Chainer] (by mattya), [tf/torch/keras/lasagne] (by tjwei), [Pytorch] (by taey16)

"

\ No newline at end of file

diff --git a/CycleGAN.ipynb b/CycleGAN.ipynb

new file mode 100644

index 0000000000000000000000000000000000000000..99df56ac5ed8348393207e33a490e8c450d72498

--- /dev/null

+++ b/CycleGAN.ipynb

@@ -0,0 +1,273 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "colab_type": "text",

+ "id": "view-in-github"

+ },

+ "source": [

+ " "

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "colab_type": "text",

+ "id": "5VIGyIus8Vr7"

+ },

+ "source": [

+ "Take a look at the [repository](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix) for more information"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "colab_type": "text",

+ "id": "7wNjDKdQy35h"

+ },

+ "source": [

+ "# Install"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {},

+ "colab_type": "code",

+ "id": "TRm-USlsHgEV"

+ },

+ "outputs": [],

+ "source": [

+ "!git clone https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {},

+ "colab_type": "code",

+ "id": "Pt3igws3eiVp"

+ },

+ "outputs": [],

+ "source": [

+ "import os\n",

+ "os.chdir('pytorch-CycleGAN-and-pix2pix/')"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {},

+ "colab_type": "code",

+ "id": "z1EySlOXwwoa"

+ },

+ "outputs": [],

+ "source": [

+ "!pip install -r requirements.txt"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "colab_type": "text",

+ "id": "8daqlgVhw29P"

+ },

+ "source": [

+ "# Datasets\n",

+ "\n",

+ "Download one of the official datasets with:\n",

+ "\n",

+ "- `bash ./datasets/download_cyclegan_dataset.sh [apple2orange, summer2winter_yosemite, horse2zebra, monet2photo, cezanne2photo, ukiyoe2photo, vangogh2photo, maps, cityscapes, facades, iphone2dslr_flower, ae_photos]`\n",

+ "\n",

+ "Or use your own dataset by creating the appropriate folders and adding the images to them.\n",

+ "\n",

+ "- Create a folder for your dataset under `./datasets`.\n",

+ "- Create the subfolders `trainA`, `trainB`, `testA`, and `testB` inside it. Put the images you want to translate from A to B (e.g. cats, for cat2dog) in `trainA` and `testA`, and the images you want to translate from B to A (e.g. dogs, for dog2cat) in `trainB` and `testB`."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {},

+ "colab_type": "code",

+ "id": "vrdOettJxaCc"

+ },

+ "outputs": [],

+ "source": [

+ "!bash ./datasets/download_cyclegan_dataset.sh horse2zebra"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "colab_type": "text",

+ "id": "gdUz4116xhpm"

+ },

+ "source": [

+ "# Pretrained models\n",

+ "\n",

+ "Download one of the official pretrained models with:\n",

+ "\n",

+ "- `bash ./scripts/download_cyclegan_model.sh [apple2orange, orange2apple, summer2winter_yosemite, winter2summer_yosemite, horse2zebra, zebra2horse, monet2photo, style_monet, style_cezanne, style_ukiyoe, style_vangogh, sat2map, map2sat, cityscapes_photo2label, cityscapes_label2photo, facades_photo2label, facades_label2photo, iphone2dslr_flower]`\n",

+ "\n",

+ "Or add your own pretrained model as `./checkpoints/{NAME}_pretrained/latest_net_G.pth`."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {},

+ "colab_type": "code",

+ "id": "B75UqtKhxznS"

+ },

+ "outputs": [],

+ "source": [

+ "!bash ./scripts/download_cyclegan_model.sh horse2zebra"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "colab_type": "text",

+ "id": "yFw1kDQBx3LN"

+ },

+ "source": [

+ "# Training\n",

+ "\n",

+ "- `python train.py --dataroot ./datasets/horse2zebra --name horse2zebra --model cycle_gan`\n",

+ "\n",

+ "Change `--dataroot` and `--name` to your own dataset's path and model's name. Use `--gpu_ids 0,1,...` to train on multiple GPUs and `--batch_size` to change the batch size. I've found that a batch size of 16 fits on 4 V100s and can finish training an epoch in ~90s.\n",

+ "\n",

+ "Once your model has trained, copy the latest checkpoint to the filename that the test model detects automatically:\n",

+ "\n",

+ "Use `cp ./checkpoints/horse2zebra/latest_net_G_A.pth ./checkpoints/horse2zebra/latest_net_G.pth` if you want to transform images from class A to class B and `cp ./checkpoints/horse2zebra/latest_net_G_B.pth ./checkpoints/horse2zebra/latest_net_G.pth` if you want to transform images from class B to class A.\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {},

+ "colab_type": "code",

+ "id": "0sp7TCT2x9dB"

+ },

+ "outputs": [],

+ "source": [

+ "!python train.py --dataroot ./datasets/horse2zebra --name horse2zebra --model cycle_gan --display_id -1"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "colab_type": "text",

+ "id": "9UkcaFZiyASl"

+ },

+ "source": [

+ "# Testing\n",

+ "\n",

+ "- `python test.py --dataroot datasets/horse2zebra/testA --name horse2zebra_pretrained --model test --no_dropout`\n",

+ "\n",

+ "Change the `--dataroot` and `--name` to be consistent with your trained model's configuration.\n",

+ "\n",

+ "> from https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix:\n",

+ "> The option --model test is used for generating results of CycleGAN only for one side. This option will automatically set --dataset_mode single, which only loads the images from one set. On the contrary, using --model cycle_gan requires loading and generating results in both directions, which is sometimes unnecessary. The results will be saved at ./results/. Use --results_dir {directory_path_to_save_result} to specify the results directory.\n",

+ "\n",

+ "> For your own experiments, you might want to specify --netG, --norm, --no_dropout to match the generator architecture of the trained model."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {},

+ "colab_type": "code",

+ "id": "uCsKkEq0yGh0"

+ },

+ "outputs": [],

+ "source": [

+ "!python test.py --dataroot datasets/horse2zebra/testA --name horse2zebra_pretrained --model test --no_dropout"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "colab_type": "text",

+ "id": "OzSKIPUByfiN"

+ },

+ "source": [

+ "# Visualize"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {},

+ "colab_type": "code",

+ "id": "9Mgg8raPyizq"

+ },

+ "outputs": [],

+ "source": [

+ "import matplotlib.pyplot as plt\n",

+ "\n",

+ "img = plt.imread('./results/horse2zebra_pretrained/test_latest/images/n02381460_1010_fake.png')\n",

+ "plt.imshow(img)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {},

+ "colab_type": "code",

+ "id": "0G3oVH9DyqLQ"

+ },

+ "outputs": [],

+ "source": [

+ "import matplotlib.pyplot as plt\n",

+ "\n",

+ "img = plt.imread('./results/horse2zebra_pretrained/test_latest/images/n02381460_1010_real.png')\n",

+ "plt.imshow(img)"

+ ]

+ }

+ ],

+ "metadata": {

+ "accelerator": "GPU",

+ "colab": {

+ "collapsed_sections": [],

+ "include_colab_link": true,

+ "name": "CycleGAN",

+ "provenance": []

+ },

+ "environment": {

+ "name": "tf2-gpu.2-3.m74",

+ "type": "gcloud",

+ "uri": "gcr.io/deeplearning-platform-release/tf2-gpu.2-3:m74"

+ },

+ "kernelspec": {

+ "display_name": "Python 3",

+ "language": "python",

+ "name": "python3"

+ },

+ "language_info": {

+ "codemirror_mode": {

+ "name": "ipython",

+ "version": 3

+ },

+ "file_extension": ".py",

+ "mimetype": "text/x-python",

+ "name": "python",

+ "nbconvert_exporter": "python",

+ "pygments_lexer": "ipython3",

+ "version": "3.7.10"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 4

+}
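The dataset layout from the notebook's Datasets section and the checkpoint-copy step from its Training section can also be scripted. The following is a minimal Python sketch, not part of the repository: the helper names `make_dataset_layout` and `promote_generator` are hypothetical, and the sketch assumes the default `./datasets` and `./checkpoints` directories and the `latest_net_G_A.pth` / `latest_net_G_B.pth` filenames used in the notebook.

```python
from pathlib import Path
import shutil

# Subfolder names used in the notebook's Datasets section.
SPLITS = ("trainA", "trainB", "testA", "testB")


def make_dataset_layout(root: str, name: str) -> Path:
    """Hypothetical helper: create <root>/<name>/{trainA,trainB,testA,testB}.

    Existing folders are left untouched (exist_ok=True). Returns the dataset path,
    which is what you would pass to --dataroot.
    """
    dataset_dir = Path(root) / name
    for split in SPLITS:
        (dataset_dir / split).mkdir(parents=True, exist_ok=True)
    return dataset_dir


def promote_generator(checkpoints_dir: str, name: str, direction: str = "AtoB") -> Path:
    """Hypothetical helper mirroring the notebook's `cp` commands.

    Copies latest_net_G_A.pth (A -> B) or latest_net_G_B.pth (B -> A)
    to latest_net_G.pth inside <checkpoints_dir>/<name>/.
    """
    if direction not in ("AtoB", "BtoA"):
        raise ValueError("direction must be 'AtoB' or 'BtoA'")
    suffix = "A" if direction == "AtoB" else "B"
    run_dir = Path(checkpoints_dir) / name
    src = run_dir / f"latest_net_G_{suffix}.pth"
    dst = run_dir / "latest_net_G.pth"
    # Raises FileNotFoundError if training has not saved this checkpoint yet.
    shutil.copyfile(src, dst)
    return dst
```

For example, after training with `--name horse2zebra`, calling `promote_generator("./checkpoints", "horse2zebra", "AtoB")` plays the role of the first `cp` command; `test.py --model test` should then be run with `--name horse2zebra` so that it looks in that checkpoint folder.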

diff --git a/LICENSE b/LICENSE

new file mode 100644

index 0000000000000000000000000000000000000000..d75f0ee8466f00cf04da906a6fca115c7910399f

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,58 @@

+Copyright (c) 2017, Jun-Yan Zhu and Taesung Park

+All rights reserved.

+

+Redistribution and use in source and binary forms, with or without

+modification, are permitted provided that the following conditions are met:

+

+* Redistributions of source code must retain the above copyright notice, this

+ list of conditions and the following disclaimer.

+

+* Redistributions in binary form must reproduce the above copyright notice,

+ this list of conditions and the following disclaimer in the documentation

+ and/or other materials provided with the distribution.

+

+THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

+AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

+IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

+DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

+FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

+DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

+SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

+CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

+OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

+OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

+

+

+--------------------------- LICENSE FOR pix2pix --------------------------------

+BSD License

+

+For pix2pix software

+Copyright (c) 2016, Phillip Isola and Jun-Yan Zhu

+All rights reserved.

+

+Redistribution and use in source and binary forms, with or without

+modification, are permitted provided that the following conditions are met:

+

+* Redistributions of source code must retain the above copyright notice, this

+ list of conditions and the following disclaimer.

+

+* Redistributions in binary form must reproduce the above copyright notice,

+ this list of conditions and the following disclaimer in the documentation

+ and/or other materials provided with the distribution.

+

+----------------------------- LICENSE FOR DCGAN --------------------------------

+BSD License

+

+For dcgan.torch software

+

+Copyright (c) 2015, Facebook, Inc. All rights reserved.

+

+Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

+

+Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

+

+Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

+

+Neither the name Facebook nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

+

+THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

diff --git a/README.md b/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..539bce8585fa2465344a5bed7422ca7c50d84422

--- /dev/null

+++ b/README.md

@@ -0,0 +1,246 @@


+

+ +

+

+

+

+

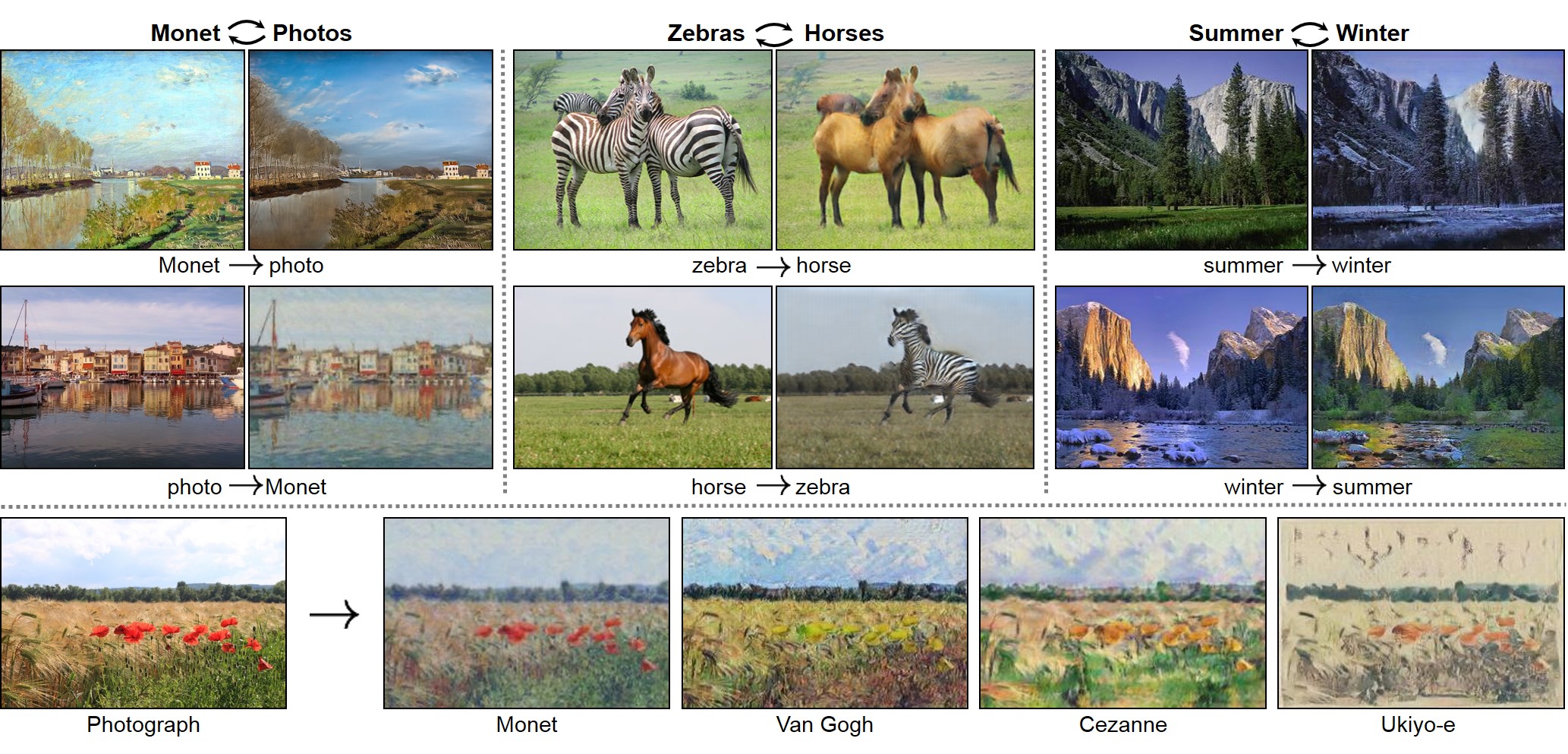

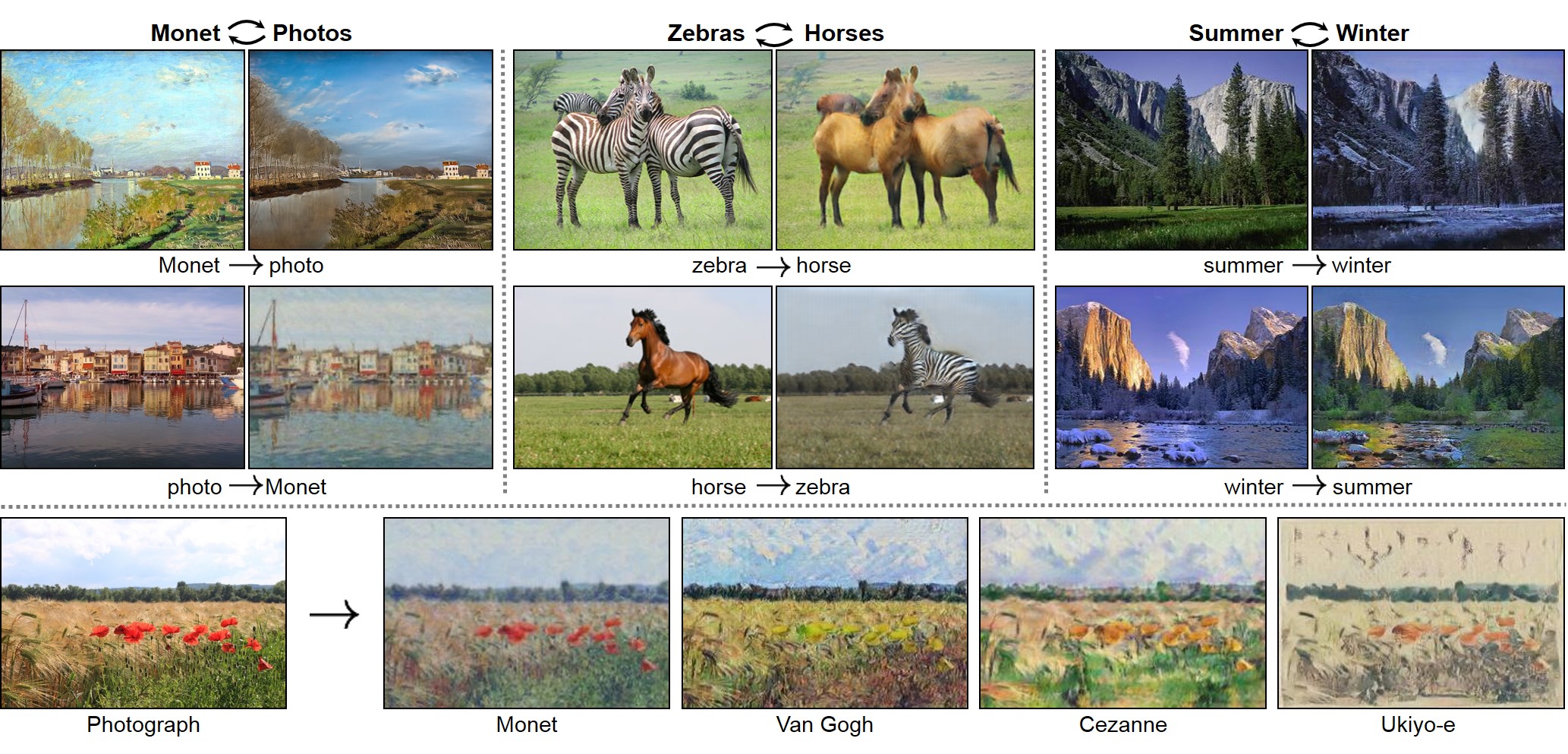

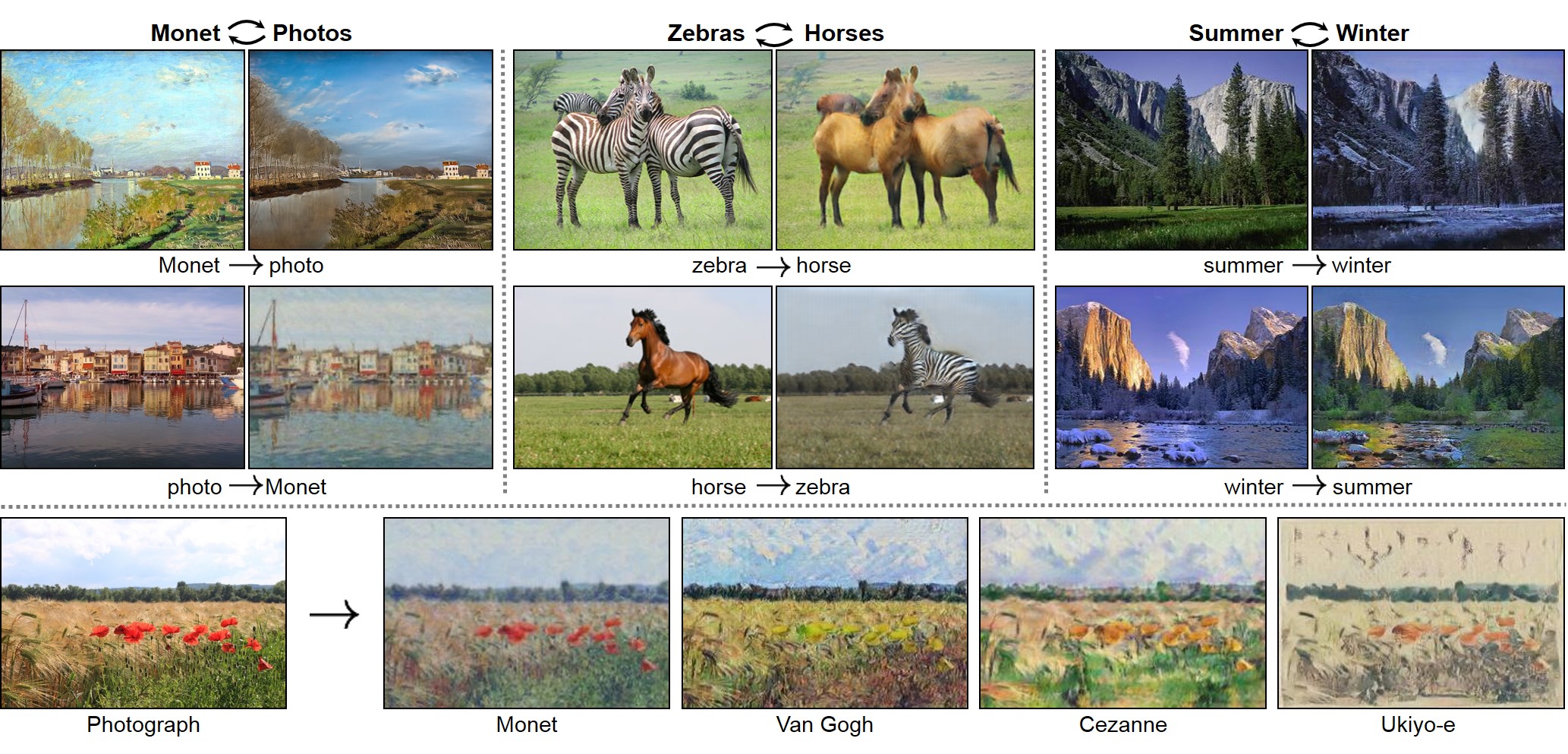

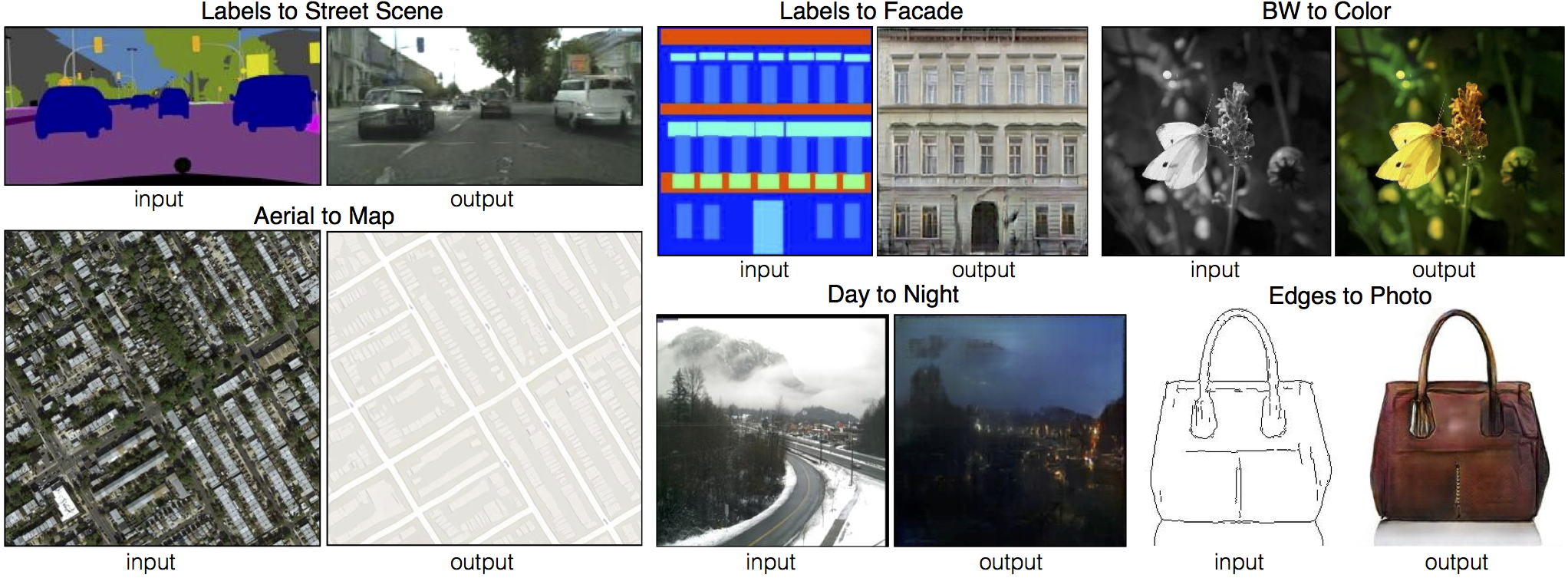

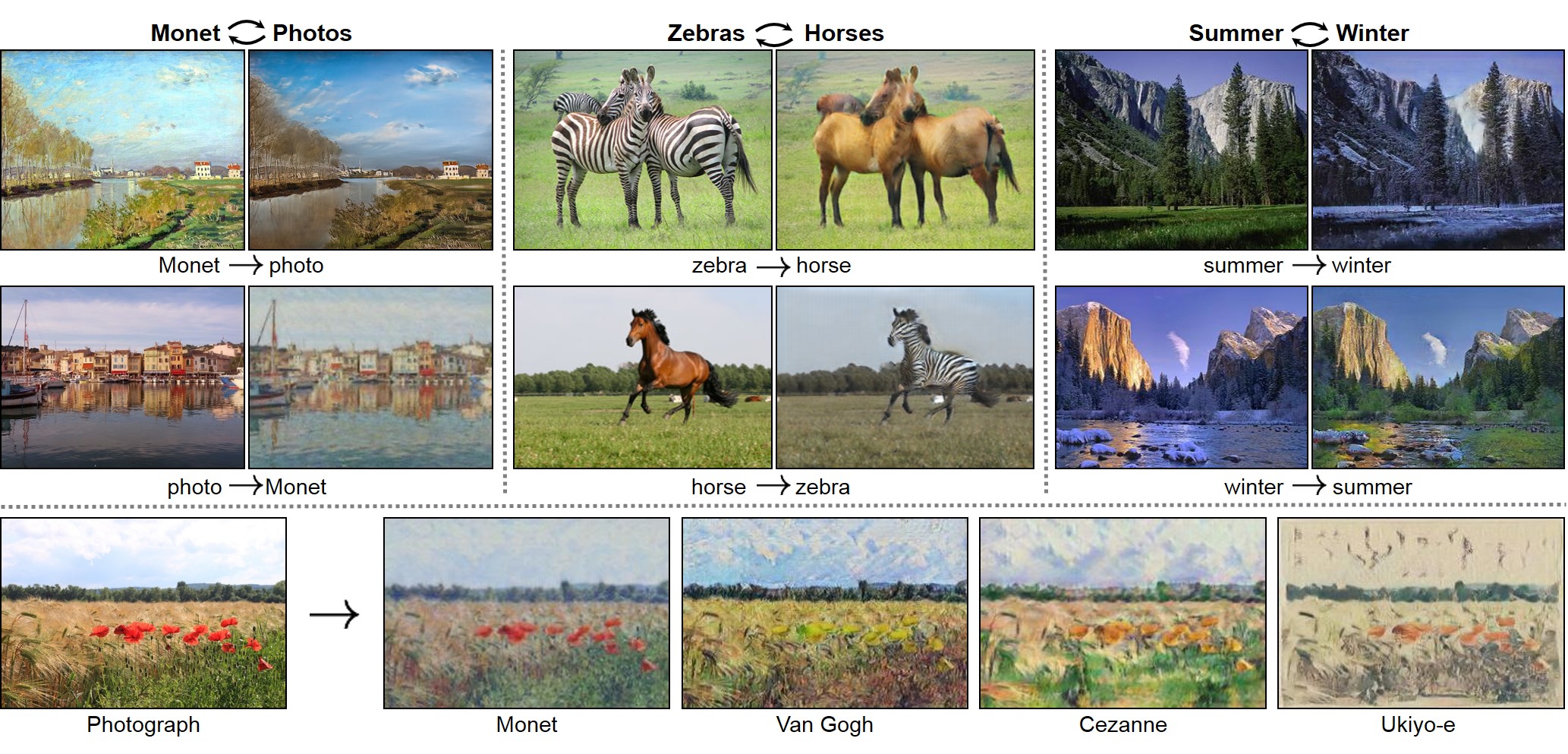

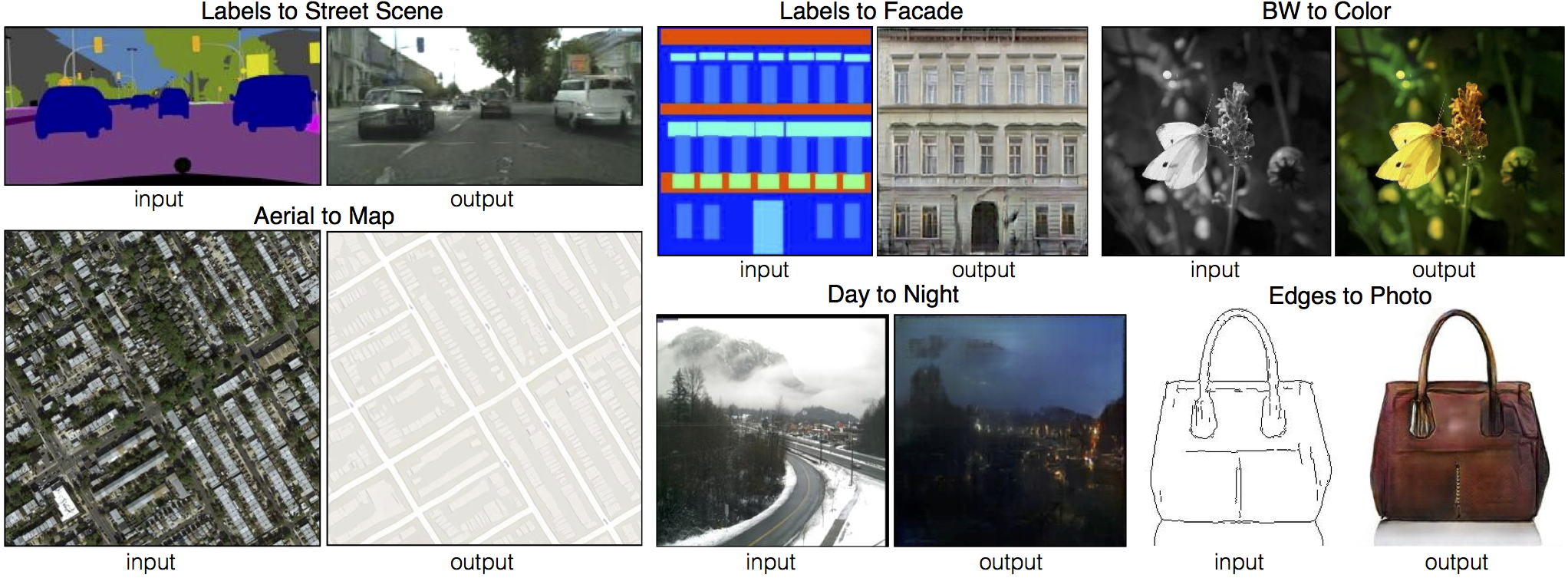

+# CycleGAN and pix2pix in PyTorch

+

+**New**: Please check out [contrastive-unpaired-translation](https://github.com/taesungp/contrastive-unpaired-translation) (CUT), our new unpaired image-to-image translation model that enables fast and memory-efficient training.

+

+We provide PyTorch implementations for both unpaired and paired image-to-image translation.

+

+The code was written by [Jun-Yan Zhu](https://github.com/junyanz) and [Taesung Park](https://github.com/taesungp), and supported by [Tongzhou Wang](https://github.com/SsnL).

+

+This PyTorch implementation produces results comparable to or better than our original Torch software. If you would like to reproduce the same results as in the papers, check out the original [CycleGAN Torch](https://github.com/junyanz/CycleGAN) and [pix2pix Torch](https://github.com/phillipi/pix2pix) code in Lua/Torch.

+

+**Note**: The current software works well with PyTorch 1.4. Check out the older [branch](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/tree/pytorch0.3.1) that supports PyTorch 0.1-0.3.

+

+You may find useful information in [training/test tips](docs/tips.md) and [frequently asked questions](docs/qa.md). To implement custom models and datasets, check out our [templates](#custom-model-and-dataset). To help users better understand and adapt our codebase, we provide an [overview](docs/overview.md) of the code structure of this repository.

+

+**CycleGAN: [Project](https://junyanz.github.io/CycleGAN/) | [Paper](https://arxiv.org/pdf/1703.10593.pdf) | [Torch](https://github.com/junyanz/CycleGAN) |

+[Tensorflow Core Tutorial](https://www.tensorflow.org/tutorials/generative/cyclegan) | [PyTorch Colab](https://colab.research.google.com/github/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/CycleGAN.ipynb)**

+

+ +

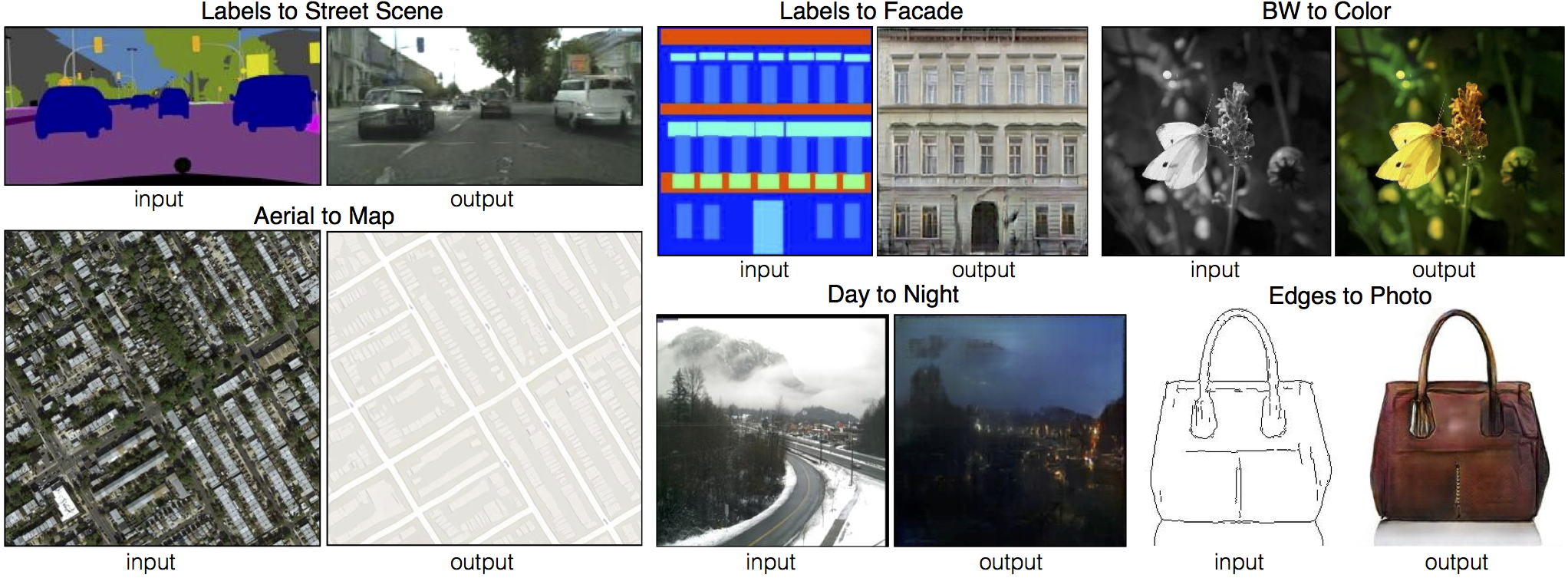

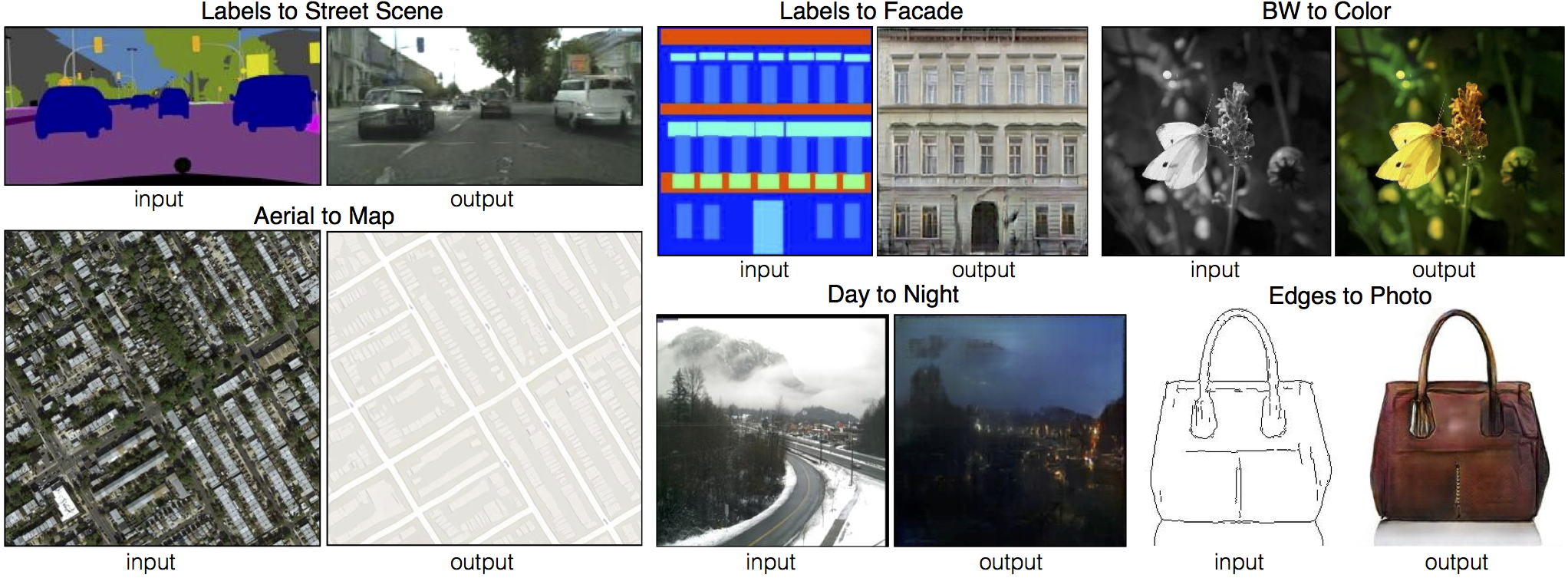

+**Pix2pix: [Project](https://phillipi.github.io/pix2pix/) | [Paper](https://arxiv.org/pdf/1611.07004.pdf) | [Torch](https://github.com/phillipi/pix2pix) |

+[Tensorflow Core Tutorial](https://www.tensorflow.org/tutorials/generative/pix2pix) | [PyTorch Colab](https://colab.research.google.com/github/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/pix2pix.ipynb)**

+


+**[EdgesCats Demo](https://affinelayer.com/pixsrv/) | [pix2pix-tensorflow](https://github.com/affinelayer/pix2pix-tensorflow) | by [Christopher Hesse](https://twitter.com/christophrhesse)**

+


+If you use this code for your research, please cite:

+


+Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks.

+[Jun-Yan Zhu](https://www.cs.cmu.edu/~junyanz/)\*, [Taesung Park](https://taesung.me/)\*, [Phillip Isola](https://people.eecs.berkeley.edu/~isola/), [Alexei A. Efros](https://people.eecs.berkeley.edu/~efros). In ICCV 2017. (* equal contributions) [[Bibtex]](https://junyanz.github.io/CycleGAN/CycleGAN.txt)

+

+

+Image-to-Image Translation with Conditional Adversarial Networks.

+[Phillip Isola](https://people.eecs.berkeley.edu/~isola), [Jun-Yan Zhu](https://www.cs.cmu.edu/~junyanz/), [Tinghui Zhou](https://people.eecs.berkeley.edu/~tinghuiz), [Alexei A. Efros](https://people.eecs.berkeley.edu/~efros). In CVPR 2017. [[Bibtex]](https://www.cs.cmu.edu/~junyanz/projects/pix2pix/pix2pix.bib)

+

+## Talks and Course

+pix2pix slides: [keynote](http://efrosgans.eecs.berkeley.edu/CVPR18_slides/pix2pix.key) | [pdf](http://efrosgans.eecs.berkeley.edu/CVPR18_slides/pix2pix.pdf),

+CycleGAN slides: [pptx](http://efrosgans.eecs.berkeley.edu/CVPR18_slides/CycleGAN.pptx) | [pdf](http://efrosgans.eecs.berkeley.edu/CVPR18_slides/CycleGAN.pdf)

+

+CycleGAN course assignment [code](http://www.cs.toronto.edu/~rgrosse/courses/csc321_2018/assignments/a4-code.zip) and [handout](http://www.cs.toronto.edu/~rgrosse/courses/csc321_2018/assignments/a4-handout.pdf) designed by Prof. [Roger Grosse](http://www.cs.toronto.edu/~rgrosse/) for [CSC321](http://www.cs.toronto.edu/~rgrosse/courses/csc321_2018/) "Intro to Neural Networks and Machine Learning" at University of Toronto. Please contact the instructor if you would like to adopt it in your course.

+

+## Colab Notebook

+TensorFlow Core CycleGAN Tutorial: [Google Colab](https://colab.research.google.com/github/tensorflow/docs/blob/master/site/en/tutorials/generative/cyclegan.ipynb) | [Code](https://github.com/tensorflow/docs/blob/master/site/en/tutorials/generative/cyclegan.ipynb)

+

+TensorFlow Core pix2pix Tutorial: [Google Colab](https://colab.research.google.com/github/tensorflow/docs/blob/master/site/en/tutorials/generative/pix2pix.ipynb) | [Code](https://github.com/tensorflow/docs/blob/master/site/en/tutorials/generative/pix2pix.ipynb)

+

+PyTorch Colab notebook: [CycleGAN](https://colab.research.google.com/github/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/CycleGAN.ipynb) and [pix2pix](https://colab.research.google.com/github/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/pix2pix.ipynb)

+

+ZeroCostDL4Mic Colab notebook: [CycleGAN](https://colab.research.google.com/github/HenriquesLab/ZeroCostDL4Mic/blob/master/Colab_notebooks_Beta/CycleGAN_ZeroCostDL4Mic.ipynb) and [pix2pix](https://colab.research.google.com/github/HenriquesLab/ZeroCostDL4Mic/blob/master/Colab_notebooks_Beta/pix2pix_ZeroCostDL4Mic.ipynb)

+

+## Other implementations

+### CycleGAN

+- TensorFlow (by Harry Yang)
+- TensorFlow (by Archit Rathore)
+- TensorFlow (by Van Huy)
+- TensorFlow (by Xiaowei Hu)
+- TensorFlow 2 (by Zhenliang He)
+- TensorLayer 1.0 (by luoxier)
+- TensorLayer 2.0 (by zsdonghao)
+- Chainer (by Yanghua Jin)
+- Minimal PyTorch (by yunjey)
+- MXNet (by Ldpe2G)
+- lasagne/Keras (by tjwei)
+- Keras (by Simon Karlsson)
+- OneFlow (by Ldpe2G)
+

+### pix2pix

+- TensorFlow (by Christopher Hesse)
+- TensorFlow (by Eyyüb Sariu)
+- TensorFlow (face2face) (by Dat Tran)
+- TensorFlow (film) (by Arthur Juliani)
+- TensorFlow (zi2zi) (by Yuchen Tian)
+- Chainer (by mattya)
+- tf/torch/keras/lasagne (by tjwei)
+- PyTorch (by taey16)
+

+## Prerequisites

+- Linux or macOS

+- Python 3

+- CPU or NVIDIA GPU + CUDA and cuDNN

+

+## Getting Started

+### Installation

+

+- Clone this repo:

+```bash

+git clone https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix

+cd pytorch-CycleGAN-and-pix2pix

+```

+

+- Install [PyTorch](http://pytorch.org) 0.4+ and other dependencies (e.g., torchvision, [visdom](https://github.com/facebookresearch/visdom) and [dominate](https://github.com/Knio/dominate)).

+ - For pip users, please type the command `pip install -r requirements.txt`.

+ - For Conda users, you can create a new Conda environment using `conda env create -f environment.yml`.

+ - For Docker users, we provide the pre-built Docker image and Dockerfile. Please refer to our [Docker](docs/docker.md) page.

+ - For Repl users, please click [Run on Repl.it](https://repl.it/github/junyanz/pytorch-CycleGAN-and-pix2pix).

+

+### CycleGAN train/test

+- Download a CycleGAN dataset (e.g. maps):

+```bash

+bash ./datasets/download_cyclegan_dataset.sh maps

+```

+- To view training results and loss plots, run `python -m visdom.server` and click the URL http://localhost:8097.

+- To log training progress and test images to the W&B dashboard, set the `--use_wandb` flag with the train and test scripts.

+- Train a model:

+```bash

+#!./scripts/train_cyclegan.sh

+python train.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan

+```

+To see more intermediate results, check out `./checkpoints/maps_cyclegan/web/index.html`.

+- Test the model:

+```bash

+#!./scripts/test_cyclegan.sh

+python test.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan

+```

+- The test results will be saved to an HTML file here: `./results/maps_cyclegan/test_latest/index.html`.
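Besides Visdom, training losses are appended as plain text to `./checkpoints/{name}/loss_log.txt`. Below is a minimal sketch for pulling them out for your own plots, assuming each data line looks like `(epoch: 8, iters: 2, time: 0.198, data: 0.717) G_GAN: 1.010 G_L1: 7.272 ...` and that lines starting with `====` are run banners. `parse_loss_log` is a hypothetical helper, not part of this repository; since loss names differ per model, it keeps every `NAME: VALUE` pair it finds.

```python
import re
from typing import Dict, List

# "(epoch: 8, iters: 2, time: 0.198, data: 0.717)" at the start of a data line.
HEADER_RE = re.compile(r"\((.*?)\)")
# "NAME: VALUE" pairs, used both inside the header and for the losses after it.
PAIR_RE = re.compile(r"(\w+): (-?\d+(?:\.\d+)?)")


def parse_loss_log(lines: List[str]) -> List[Dict[str, float]]:
    """Turn loss_log.txt lines into one dict per logged iteration (hypothetical helper)."""
    records = []
    for line in lines:
        line = line.strip()
        # Skip blank lines and "==== Training Loss (...) ====" banners.
        if not line.startswith("("):
            continue
        header = HEADER_RE.match(line)
        if header is None:
            continue
        # epoch, iters, time and data from inside the parentheses.
        record = {k: float(v) for k, v in PAIR_RE.findall(header.group(1))}
        # Loss names depend on the model (e.g. G_GAN, D_A, cycle_B), so keep them all.
        record.update((k, float(v)) for k, v in PAIR_RE.findall(line[header.end():]))
        records.append(record)
    return records
```

For example, passing the lines of `./checkpoints/maps_cyclegan/loss_log.txt` returns one dict per logged iteration, with keys such as `epoch`, `iters`, and the model's loss names.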

+

+### pix2pix train/test

+- Download a pix2pix dataset (e.g. [facades](http://cmp.felk.cvut.cz/~tylecr1/facade/)):

+```bash

+bash ./datasets/download_pix2pix_dataset.sh facades

+```

+- To view training results and loss plots, run `python -m visdom.server` and click the URL http://localhost:8097.

+- To log training progress and test images to the W&B dashboard, set the `--use_wandb` flag with the train and test scripts.

+- Train a model:

+```bash

+#!./scripts/train_pix2pix.sh

+python train.py --dataroot ./datasets/facades --name facades_pix2pix --model pix2pix --direction BtoA

+```

+To see more intermediate results, check out `./checkpoints/facades_pix2pix/web/index.html`.

+

+- Test the model (`bash ./scripts/test_pix2pix.sh`):

+```bash

+#!./scripts/test_pix2pix.sh

+python test.py --dataroot ./datasets/facades --name facades_pix2pix --model pix2pix --direction BtoA

+```

+- The test results will be saved to an HTML file here: `./results/facades_pix2pix/test_latest/index.html`. You can find more scripts in the `scripts` directory.

+- To train and test pix2pix-based colorization models, please add `--model colorization` and `--dataset_mode colorization`. See our training [tips](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/docs/tips.md#notes-on-colorization) for more details.
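The colorization model works in the CIE Lab color space: the generator receives the lightness channel L (1 input channel) and predicts the two color channels ab (2 output channels). The dependency-free sketch below shows that conversion for a single pixel. It assumes sRGB input with a D65 white point and the scaling `L / 50 - 1` and `ab / 110`, which is our reading of `data/colorization_dataset.py`; verify against that file before relying on it. `srgb_to_lab` and `split_lab_for_colorization` are hypothetical names, and on whole images you would normally use a library routine such as scikit-image's `skimage.color.rgb2lab` instead.

```python
from typing import Tuple

# D65 reference white (XYZ), used to normalize before the Lab transform.
XN, YN, ZN = 0.95047, 1.0, 1.08883
DELTA = 6 / 29


def _srgb_to_linear(c: float) -> float:
    """Undo the sRGB transfer curve for one channel value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t: float) -> float:
    """Piecewise cube-root nonlinearity from the CIE Lab definition."""
    return t ** (1 / 3) if t > DELTA ** 3 else t / (3 * DELTA ** 2) + 4 / 29


def srgb_to_lab(rgb: Tuple[float, float, float]) -> Tuple[float, float, float]:
    """Convert one sRGB pixel (channels in [0, 1]) to CIE Lab (D65)."""
    r, g, b = (_srgb_to_linear(c) for c in rgb)
    # Linear sRGB -> CIE XYZ with the standard D65 matrix.
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    fx, fy, fz = _f(x / XN), _f(y / YN), _f(z / ZN)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def split_lab_for_colorization(
    rgb: Tuple[float, float, float],
) -> Tuple[float, Tuple[float, float]]:
    """Return (A, B) for one pixel: A is the scaled L input, B the scaled ab target.

    Assumed scaling: L in [0, 100] -> L / 50 - 1 in [-1, 1]; a, b -> a / 110, b / 110.
    """
    lightness, a, b = srgb_to_lab(rgb)
    return lightness / 50.0 - 1.0, (a / 110.0, b / 110.0)
```

White maps to A = 1 with near-zero color, and black to A = -1, so the grayscale input alone carries no color information for the generator to copy.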

+

+### Apply a pre-trained model (CycleGAN)

+- You can download a pretrained model (e.g. horse2zebra) with the following script:

+```bash

+bash ./scripts/download_cyclegan_model.sh horse2zebra

+```

+- The pretrained model is saved at `./checkpoints/{name}_pretrained/latest_net_G.pth`. Check [here](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/scripts/download_cyclegan_model.sh#L3) for all the available CycleGAN models.

+- To test the model, you also need to download the horse2zebra dataset:

+```bash

+bash ./datasets/download_cyclegan_dataset.sh horse2zebra

+```

+

+- Then generate the results using:

+```bash

+python test.py --dataroot datasets/horse2zebra/testA --name horse2zebra_pretrained --model test --no_dropout

+```

+- The option `--model test` is used for generating results of CycleGAN for one side only. This option will automatically set `--dataset_mode single`, which only loads the images from one set. In contrast, using `--model cycle_gan` requires loading and generating results in both directions, which is sometimes unnecessary. The results will be saved at `./results/`. Use `--results_dir {directory_path_to_save_result}` to specify the results directory.

+

+- For pix2pix and your own models, you need to explicitly specify `--netG`, `--norm`, `--no_dropout` to match the generator architecture of the trained model. See this [FAQ](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/docs/qa.md#runtimeerror-errors-in-loading-state_dict-812-671461-296) for more details.
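Each run writes its options to `./checkpoints/{name}/{phase}_opt.txt` (for example `train_opt.txt`), one `key: value` per line, with `[default: ...]` appended when a value differs from its default. Below is a minimal sketch, assuming that layout, that reads the training options back and rebuilds the generator-related flags for `test.py`. `read_opt_file` and `generator_flags` are hypothetical helpers, and only the three options named above are handled.

```python
import re
from typing import Dict, List

# Matches "   netG: unet_256" or "   gpu_ids: -1   [default: 0]".
OPT_LINE_RE = re.compile(r"^\s*(\w+):\s*(.*?)\s*(?:\[default: .*\])?\s*$")


def read_opt_file(lines: List[str]) -> Dict[str, str]:
    """Parse the key/value lines of a saved *_opt.txt file (hypothetical helper)."""
    opts = {}
    for line in lines:
        # Skip the "------ Options ------" and "------ End ------" banners.
        if line.lstrip().startswith("-"):
            continue
        match = OPT_LINE_RE.match(line)
        if match:
            opts[match.group(1)] = match.group(2)
    return opts


def generator_flags(opts: Dict[str, str]) -> List[str]:
    """Build test.py flags that must match the trained generator's architecture."""
    flags = ["--netG", opts["netG"], "--norm", opts["norm"]]
    # --no_dropout is a boolean switch: pass it only if training disabled dropout.
    if opts.get("no_dropout") == "True":
        flags.append("--no_dropout")
    return flags
```

The returned list can be appended to your usual `python test.py --dataroot ... --name ...` command line, for instance when launching it with `subprocess.run`.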

+

+### Apply a pre-trained model (pix2pix)

+Download a pre-trained model with `./scripts/download_pix2pix_model.sh`.

+

+- Check [here](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/scripts/download_pix2pix_model.sh#L3) for all the available pix2pix models. For example, to download the label2photo model trained on the Facades dataset:

+```bash

+bash ./scripts/download_pix2pix_model.sh facades_label2photo

+```

+- Download the pix2pix facades dataset:

+```bash

+bash ./datasets/download_pix2pix_dataset.sh facades

+```

+- Then generate the results using:

+```bash

+python test.py --dataroot ./datasets/facades/ --direction BtoA --model pix2pix --name facades_label2photo_pretrained

+```

+- Note that we specified `--direction BtoA` because the Facades dataset's A-to-B direction is photos to labels.

+

+- If you would like to apply a pre-trained model to a collection of input images (rather than image pairs), please use `--model test` option. See `./scripts/test_single.sh` for how to apply a model to Facade label maps (stored in the directory `facades/testB`).

+

+- See a list of currently available models in `./scripts/download_pix2pix_model.sh`.
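Paired (aligned) pix2pix datasets store each example as a single image with A on the left half and B on the right half; `--direction` decides which half is the network input. A minimal sketch of that split on a plain row-major pixel grid, assuming an even image width; `split_aligned` is a hypothetical helper, while the repository's aligned dataset performs the equivalent crop on PIL images.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]
Grid = List[List[Pixel]]  # row-major: grid[y][x]


def split_aligned(ab: Sequence[Sequence[Pixel]], direction: str = "AtoB") -> Tuple[Grid, Grid]:
    """Split a side-by-side {A|B} image into (input, target) for the given direction."""
    if direction not in ("AtoB", "BtoA"):
        raise ValueError(f"unknown direction: {direction!r}")
    half = len(ab[0]) // 2
    a = [list(row[:half]) for row in ab]  # left half is A
    b = [list(row[half:]) for row in ab]  # right half is B
    # With BtoA the network learns B -> A, so B becomes the input.
    return (a, b) if direction == "AtoB" else (b, a)
```

For Facades, A is the photo and B the label map, so `BtoA` makes the label map the input and the photo the target, matching the label-to-photo commands above.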

+

+## [Docker](docs/docker.md)

+We provide the pre-built Docker image and Dockerfile that can run this code repo. See [docker](docs/docker.md).

+

+## [Datasets](docs/datasets.md)

+Download pix2pix/CycleGAN datasets and create your own datasets.

+

+## [Training/Test Tips](docs/tips.md)

+Best practices for training and testing your models.

+

+## [Frequently Asked Questions](docs/qa.md)

+Before you post a new question, please first look at the above Q & A and existing GitHub issues.

+

+## Custom Model and Dataset

+If you plan to implement custom models and datasets for your new applications, we provide a dataset [template](data/template_dataset.py) and a model [template](models/template_model.py) as a starting point.

+

+## [Code structure](docs/overview.md)

+To help users better understand and use our code, we briefly overview the functionality and implementation of each package and each module.

+

+## Pull Request

+You are always welcome to contribute to this repository by sending a [pull request](https://help.github.com/articles/about-pull-requests/).

+Please run `flake8 --ignore E501 .` and `python ./scripts/test_before_push.py` before you commit the code. Please also update the code structure [overview](docs/overview.md) accordingly if you add or remove files.

+

+## Citation

+If you use this code for your research, please cite our papers.

+```

+@inproceedings{CycleGAN2017,

+ title={Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks},

+ author={Zhu, Jun-Yan and Park, Taesung and Isola, Phillip and Efros, Alexei A},

+ booktitle={Computer Vision (ICCV), 2017 IEEE International Conference on},

+ year={2017}

+}

+

+

+@inproceedings{isola2017image,

+ title={Image-to-Image Translation with Conditional Adversarial Networks},

+ author={Isola, Phillip and Zhu, Jun-Yan and Zhou, Tinghui and Efros, Alexei A},

+ booktitle={Computer Vision and Pattern Recognition (CVPR), 2017 IEEE Conference on},

+ year={2017}

+}

+```

+

+## Other Languages

+[Spanish](docs/README_es.md)

+

+## Related Projects

+**[contrastive-unpaired-translation](https://github.com/taesungp/contrastive-unpaired-translation) (CUT)**

+**[CycleGAN-Torch](https://github.com/junyanz/CycleGAN) |

+[pix2pix-Torch](https://github.com/phillipi/pix2pix) | [pix2pixHD](https://github.com/NVIDIA/pix2pixHD) |

+[BicycleGAN](https://github.com/junyanz/BicycleGAN) | [vid2vid](https://tcwang0509.github.io/vid2vid/) | [SPADE/GauGAN](https://github.com/NVlabs/SPADE)**

+**[iGAN](https://github.com/junyanz/iGAN) | [GAN Dissection](https://github.com/CSAILVision/GANDissect) | [GAN Paint](http://ganpaint.io/)**

+

+## Cat Paper Collection

+If you love cats, and love reading cool graphics, vision, and learning papers, please check out the Cat Paper [Collection](https://github.com/junyanz/CatPapers).

+

+## Acknowledgments

+Our code is inspired by [pytorch-DCGAN](https://github.com/pytorch/examples/tree/master/dcgan).

diff --git a/checkpoints/bw2color/115_net_D.pth b/checkpoints/bw2color/115_net_D.pth

new file mode 100644

index 0000000000000000000000000000000000000000..7a1c259ec9c559028ee87fbd968c6416f33fd288

--- /dev/null

+++ b/checkpoints/bw2color/115_net_D.pth

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:bcd750209fe24f61e92b68560120825b73082495a4640d9e8c01a9dadd7c52e5

+size 11076872

diff --git a/checkpoints/bw2color/115_net_G.pth b/checkpoints/bw2color/115_net_G.pth

new file mode 100644

index 0000000000000000000000000000000000000000..f910e74b44b81d81c56862f7528716489dc43490

--- /dev/null

+++ b/checkpoints/bw2color/115_net_G.pth

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:cd30259282564b9026db75345ad672bff504350a552c19d3413c019d7ee7fdd9

+size 217710092

diff --git a/checkpoints/bw2color/bw2color.pth b/checkpoints/bw2color/bw2color.pth

new file mode 100644

index 0000000000000000000000000000000000000000..6e39de5be415318af18ba864d98e14b4c683f992

--- /dev/null

+++ b/checkpoints/bw2color/bw2color.pth

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:110fc38ccd54ecae7ff81aa131f519a9eed1b839eaf5c4716cb194ee4a8d68e8

+size 217710350

diff --git a/checkpoints/bw2color/latest_net_D.pth b/checkpoints/bw2color/latest_net_D.pth

new file mode 100644

index 0000000000000000000000000000000000000000..d005ba2de4ef075387a5a8b58bfe192cd6ec93c4

--- /dev/null

+++ b/checkpoints/bw2color/latest_net_D.pth

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:a5b83cdcf7625bfb5e196dc9c6d47333a361095690db62f2ad1cf92d9a2986ee

+size 11076950

diff --git a/checkpoints/bw2color/latest_net_G_A.pth b/checkpoints/bw2color/latest_net_G_A.pth

new file mode 100644

index 0000000000000000000000000000000000000000..caefa7332c9d1738270a38d0a9c8957d92f905dc

--- /dev/null

+++ b/checkpoints/bw2color/latest_net_G_A.pth

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:c607ba5bee252b896387d91691222f5d1e4df3f83e7cd27704629621330cab81

+size 217710350

diff --git a/checkpoints/bw2color/loss_log.txt b/checkpoints/bw2color/loss_log.txt

new file mode 100644

index 0000000000000000000000000000000000000000..e2ee1ebd9182533dd615f6bdaa556e013e069822

--- /dev/null

+++ b/checkpoints/bw2color/loss_log.txt

@@ -0,0 +1,17 @@

+================ Training Loss (Sat Nov 4 23:03:46 2023) ================

+(epoch: 8, iters: 2, time: 0.198, data: 0.717) G_GAN: 1.010 G_L1: 7.272 D_real: 0.533 D_fake: 0.516

+(epoch: 15, iters: 4, time: 0.199, data: 0.001) G_GAN: 1.228 G_L1: 4.958 D_real: 0.364 D_fake: 0.573

+(epoch: 22, iters: 6, time: 0.227, data: 0.003) G_GAN: 1.043 G_L1: 4.291 D_real: 0.214 D_fake: 0.797

+(epoch: 29, iters: 8, time: 2.188, data: 0.001) G_GAN: 0.901 G_L1: 2.646 D_real: 0.669 D_fake: 0.546

+(epoch: 36, iters: 10, time: 0.257, data: 0.005) G_GAN: 1.026 G_L1: 2.751 D_real: 0.526 D_fake: 0.560

+(epoch: 43, iters: 12, time: 0.242, data: 0.001) G_GAN: 1.380 G_L1: 3.614 D_real: 0.305 D_fake: 0.586

+(epoch: 50, iters: 14, time: 0.256, data: 0.003) G_GAN: 0.709 G_L1: 2.387 D_real: 0.763 D_fake: 0.772

+(epoch: 58, iters: 2, time: 2.606, data: 0.526) G_GAN: 0.973 G_L1: 3.211 D_real: 0.583 D_fake: 0.805

+(epoch: 65, iters: 4, time: 0.239, data: 0.005) G_GAN: 0.849 G_L1: 2.521 D_real: 0.685 D_fake: 0.521

+(epoch: 72, iters: 6, time: 0.227, data: 0.004) G_GAN: 0.768 G_L1: 2.132 D_real: 0.898 D_fake: 0.606

+(epoch: 79, iters: 8, time: 0.186, data: 0.003) G_GAN: 0.764 G_L1: 1.370 D_real: 0.824 D_fake: 0.625

+(epoch: 86, iters: 10, time: 1.048, data: 0.020) G_GAN: 1.167 G_L1: 3.618 D_real: 0.286 D_fake: 0.943

+(epoch: 93, iters: 12, time: 0.256, data: 0.001) G_GAN: 0.800 G_L1: 1.420 D_real: 0.879 D_fake: 0.532

+(epoch: 100, iters: 14, time: 0.250, data: 0.003) G_GAN: 0.689 G_L1: 1.218 D_real: 0.590 D_fake: 0.869

+(epoch: 108, iters: 2, time: 0.168, data: 0.382) G_GAN: 0.871 G_L1: 2.465 D_real: 0.585 D_fake: 0.526

+(epoch: 115, iters: 4, time: 1.077, data: 0.006) G_GAN: 0.732 G_L1: 1.168 D_real: 0.869 D_fake: 0.569

diff --git a/checkpoints/bw2color/opt.txt b/checkpoints/bw2color/opt.txt

new file mode 100644

index 0000000000000000000000000000000000000000..247925ff67cd509001b687316a1f6b49011a7d5b

--- /dev/null

+++ b/checkpoints/bw2color/opt.txt

@@ -0,0 +1,35 @@

+------------ Options -------------

+align_data: False

+aspect_ratio: 1.0

+batchSize: 1

+checkpoints_dir: ./checkpoints

+dataroot: None

+display_id: 1

+display_winsize: 256

+fineSize: 256

+gpu_ids: []

+how_many: 50

+identity: 0.0

+image_path: C:\Users\thera\Downloads\DataSet\09.png

+input_nc: 3

+isTrain: False

+loadSize: 286

+max_dataset_size: inf

+model: colorization

+nThreads: 2

+n_layers_D: 3

+name: bw2color

+ndf: 64

+ngf: 64

+norm: instance

+ntest: inf

+output_nc: 3

+phase: test

+results_dir: ./results/

+serial_batches: False

+use_dropout: True

+which_direction: AtoB

+which_epoch: latest

+which_model_netD: basic

+which_model_netG: resnet_9blocks

+-------------- End ----------------

diff --git a/checkpoints/bw2color/test_opt.txt b/checkpoints/bw2color/test_opt.txt

new file mode 100644

index 0000000000000000000000000000000000000000..2d40b8ee7f57fe901a9d6024118f78657001a51b

--- /dev/null

+++ b/checkpoints/bw2color/test_opt.txt

@@ -0,0 +1,45 @@

+----------------- Options ---------------

+ aspect_ratio: 1.0

+ batch_size: 1

+ checkpoints_dir: ./checkpoints

+ crop_size: None

+ dataroot: None

+ dataset_mode: colorization

+ direction: AtoB

+ display_winsize: 256

+ epoch: latest

+ eval: False

+ gpu_ids: -1 [default: 0]

+ how_many: 50

+ image_path: C:\Users\thera\Downloads\55Sin título.png [default: None]

+ init_gain: 0.02

+ init_type: normal

+ input_nc: 1

+ isTrain: False [default: None]

+ load_iter: 0 [default: 0]

+ load_size: None

+ max_dataset_size: inf

+ model: colorization

+ n_layers_D: 3

+ name: bw2color [default: experiment_name]

+ ndf: 64

+ netD: basic

+ netG: unet_256

+ ngf: 64

+ no_dropout: False

+ no_flip: False

+ norm: batch

+ ntest: inf

+ num_test: 50

+ num_threads: 4

+ output_nc: 2

+ phase: test

+ preprocess: resize_and_crop

+ results_dir: ./results/

+ serial_batches: False

+ suffix:

+ use_wandb: False

+ verbose: False

+ wandb_project_name: CycleGAN-and-pix2pix

+ which_epoch: latest

+----------------- End -------------------

diff --git a/checkpoints/bw2color/web/images/epoch004_fake_A.png b/checkpoints/bw2color/web/images/epoch004_fake_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..c148cf7084c10dc41dc385b4296a594cbf99aa05

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch004_fake_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch004_fake_B.png b/checkpoints/bw2color/web/images/epoch004_fake_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..83b99977bf31554c825109a536d4335197c07542

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch004_fake_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch004_idt_A.png b/checkpoints/bw2color/web/images/epoch004_idt_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..de3162b5db5b2fe0d57c0f0e9f7f10b63345a488

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch004_idt_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch004_idt_B.png b/checkpoints/bw2color/web/images/epoch004_idt_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..5f4ae90de070c1683989ea1cc3f703c77f160979

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch004_idt_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch004_real_A.png b/checkpoints/bw2color/web/images/epoch004_real_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..ee6d01b30d16fc063cbb3977222f9df43d2dfd3b

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch004_real_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch004_real_B.png b/checkpoints/bw2color/web/images/epoch004_real_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..fbb64932b8df839a83f9c0010f263e19bbd6b2d2

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch004_real_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch004_rec_A.png b/checkpoints/bw2color/web/images/epoch004_rec_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..74bc77fa649a61cbd0f9b55a3358ea45dfe9622e

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch004_rec_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch004_rec_B.png b/checkpoints/bw2color/web/images/epoch004_rec_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..e7bfd222090a9fc624b25c5117e4cbeee05fd7b1

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch004_rec_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch008_fake_A.png b/checkpoints/bw2color/web/images/epoch008_fake_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..c48a81d6d1f0b4d82c0d81c72e3310bb95c807c7

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch008_fake_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch008_fake_B.png b/checkpoints/bw2color/web/images/epoch008_fake_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..e4c0f1bd309e63d2eb50b0c1938343b7ad02fc64

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch008_fake_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch008_idt_A.png b/checkpoints/bw2color/web/images/epoch008_idt_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..68f9ac38f7c2631d7b17e83b52560b8d3dfe4398

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch008_idt_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch008_idt_B.png b/checkpoints/bw2color/web/images/epoch008_idt_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..46280456c73233aaa8c11f05c2bb11cee70ae0a2

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch008_idt_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch008_real_A.png b/checkpoints/bw2color/web/images/epoch008_real_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..0f6b5d466fdc8ad31d996cf80761a28442c5a7c4

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch008_real_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch008_real_B.png b/checkpoints/bw2color/web/images/epoch008_real_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..700671efacc86176d95899c7d7655c717f5d6cc4

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch008_real_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch008_rec_A.png b/checkpoints/bw2color/web/images/epoch008_rec_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..6779a1da2766b2693c949b76b5c66da0c9363a11

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch008_rec_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch008_rec_B.png b/checkpoints/bw2color/web/images/epoch008_rec_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..5571f3369cf8b1cbfbb5bae12bde37fc4b1c8832

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch008_rec_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch012_fake_A.png b/checkpoints/bw2color/web/images/epoch012_fake_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..905efdab6442d520d62285f77909ff85657759a1

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch012_fake_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch012_fake_B.png b/checkpoints/bw2color/web/images/epoch012_fake_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..a04c928924519364af66952291194f44baed52ec

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch012_fake_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch012_idt_A.png b/checkpoints/bw2color/web/images/epoch012_idt_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..60fb1d444834e904ed37a187352a2cb2e6b2d589

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch012_idt_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch012_idt_B.png b/checkpoints/bw2color/web/images/epoch012_idt_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..e8fe9df75ced8b5aca1827ac013d3bfc41de0e64

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch012_idt_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch012_real_A.png b/checkpoints/bw2color/web/images/epoch012_real_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..f2ccf6bbd59fb4fb712e9bcc7d8b2f13e69015a1

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch012_real_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch012_real_B.png b/checkpoints/bw2color/web/images/epoch012_real_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..300019800d45b11bded572500f45af8ebef96f9e

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch012_real_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch012_rec_A.png b/checkpoints/bw2color/web/images/epoch012_rec_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..501adff5d0436bd7f23301351632a9213425c46e

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch012_rec_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch012_rec_B.png b/checkpoints/bw2color/web/images/epoch012_rec_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..ee95f7eb52104cbcf9cfe709b58bf4928773a6c6

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch012_rec_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch016_fake_A.png b/checkpoints/bw2color/web/images/epoch016_fake_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..ec0b79ddb86feb95f748a1e3aab4f19c9fd0b1ed

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch016_fake_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch016_fake_B.png b/checkpoints/bw2color/web/images/epoch016_fake_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..1ad417f668bcfbbe5ebb3be357ce0f25aaf027ec

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch016_fake_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch016_idt_A.png b/checkpoints/bw2color/web/images/epoch016_idt_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..c808d657a25a7c6240e52e25d7b73bfe078f8941

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch016_idt_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch016_idt_B.png b/checkpoints/bw2color/web/images/epoch016_idt_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..1690c4a3558128e11d9a82b68b1e5a3b8537917c

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch016_idt_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch016_real_A.png b/checkpoints/bw2color/web/images/epoch016_real_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..fe246bf5b8f93017853813d3b8883e79804b660f

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch016_real_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch016_real_B.png b/checkpoints/bw2color/web/images/epoch016_real_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..addc44ec9caa17a6471214a6ad08dd2476c6ea67

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch016_real_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch016_rec_A.png b/checkpoints/bw2color/web/images/epoch016_rec_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..48e4a3516ee9b4057839abf6829cec3842b6da31

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch016_rec_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch016_rec_B.png b/checkpoints/bw2color/web/images/epoch016_rec_B.png

new file mode 100644

index 0000000000000000000000000000000000000000..2a0ae5f3c0344add1f21998ee7e61678d77af4d9

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch016_rec_B.png differ

diff --git a/checkpoints/bw2color/web/images/epoch029_fake_B_rgb.png b/checkpoints/bw2color/web/images/epoch029_fake_B_rgb.png

new file mode 100644

index 0000000000000000000000000000000000000000..92b50c2b9588ec45249cf413ac4fe612f3dd125a

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch029_fake_B_rgb.png differ

diff --git a/checkpoints/bw2color/web/images/epoch029_real_A.png b/checkpoints/bw2color/web/images/epoch029_real_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..59933c12623d64d8e871c57a81935ff35a250568

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch029_real_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch029_real_B_rgb.png b/checkpoints/bw2color/web/images/epoch029_real_B_rgb.png

new file mode 100644

index 0000000000000000000000000000000000000000..f1a93df086f6d43b977fae7a61fb063982df6803

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch029_real_B_rgb.png differ

diff --git a/checkpoints/bw2color/web/images/epoch058_fake_B_rgb.png b/checkpoints/bw2color/web/images/epoch058_fake_B_rgb.png

new file mode 100644

index 0000000000000000000000000000000000000000..866b269c4ef4a6a6ba7a3edec3d936aa5fb94865

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch058_fake_B_rgb.png differ

diff --git a/checkpoints/bw2color/web/images/epoch058_real_A.png b/checkpoints/bw2color/web/images/epoch058_real_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..277bc91384a1c5704b3f5b3f805153e8e30a883b

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch058_real_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch058_real_B_rgb.png b/checkpoints/bw2color/web/images/epoch058_real_B_rgb.png

new file mode 100644

index 0000000000000000000000000000000000000000..8ce7018ae008de777f60db962d7710dad951f453

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch058_real_B_rgb.png differ

diff --git a/checkpoints/bw2color/web/images/epoch086_fake_B_rgb.png b/checkpoints/bw2color/web/images/epoch086_fake_B_rgb.png

new file mode 100644

index 0000000000000000000000000000000000000000..d5fa36af2c159ef91fe857f69e551b8518b95e53

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch086_fake_B_rgb.png differ

diff --git a/checkpoints/bw2color/web/images/epoch086_real_A.png b/checkpoints/bw2color/web/images/epoch086_real_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..84e44952f033b2d88956e44101f680b28eef49d4

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch086_real_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch086_real_B_rgb.png b/checkpoints/bw2color/web/images/epoch086_real_B_rgb.png

new file mode 100644

index 0000000000000000000000000000000000000000..1b0bc2a119c91c50fb5d49fe0b186bbf117acda6

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch086_real_B_rgb.png differ

diff --git a/checkpoints/bw2color/web/images/epoch115_fake_B_rgb.png b/checkpoints/bw2color/web/images/epoch115_fake_B_rgb.png

new file mode 100644

index 0000000000000000000000000000000000000000..75cbaad7118e58c3a574c4cdf864b20ef16b5500

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch115_fake_B_rgb.png differ

diff --git a/checkpoints/bw2color/web/images/epoch115_real_A.png b/checkpoints/bw2color/web/images/epoch115_real_A.png

new file mode 100644

index 0000000000000000000000000000000000000000..32ff12add6f07c6ca024c29debda2064f36d767f

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch115_real_A.png differ

diff --git a/checkpoints/bw2color/web/images/epoch115_real_B_rgb.png b/checkpoints/bw2color/web/images/epoch115_real_B_rgb.png

new file mode 100644

index 0000000000000000000000000000000000000000..26d883e0eae8e9b576dbb415c94ad6ade3bd146b

Binary files /dev/null and b/checkpoints/bw2color/web/images/epoch115_real_B_rgb.png differ

diff --git a/checkpoints/bw2color/web/index.html b/checkpoints/bw2color/web/index.html

new file mode 100644

index 0000000000000000000000000000000000000000..be7e64f4d57844a7015883576984b8f26ac1d7fd

--- /dev/null

+++ b/checkpoints/bw2color/web/index.html

@@ -0,0 +1,3344 @@

+

+

+

+ Experiment name = bw2color

+

+

+

+ epoch [115]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [114]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [113]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [112]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [111]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [110]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [109]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [108]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [107]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [106]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [105]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [104]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [103]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [102]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [101]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [100]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [99]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [98]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [97]

+

+

+ |

+

+

+  +

+

+ real_A

+

+ |

+

+

+

+  +

+

+ real_B_rgb

+

+ |

+

+

+

+  +

+

+ fake_B_rgb

+

+ |

+

+

+ epoch [96]

+

+

+ |

+

+