---
base_model: facebook/detr-resnet-50
base_model_relation: finetune
license: mit
library_name: transformers
pipeline_tag: object-detection
tags:
- spheres
- photogrammetry
- calibration
widget:
- src: examples/chevaux.jpg
  example_title: Chevaux
- src: examples/mammouths.jpg
  example_title: Mammouths
- src: examples/synth.png
  example_title: Synth
---

# Model card for REVA-QCAV

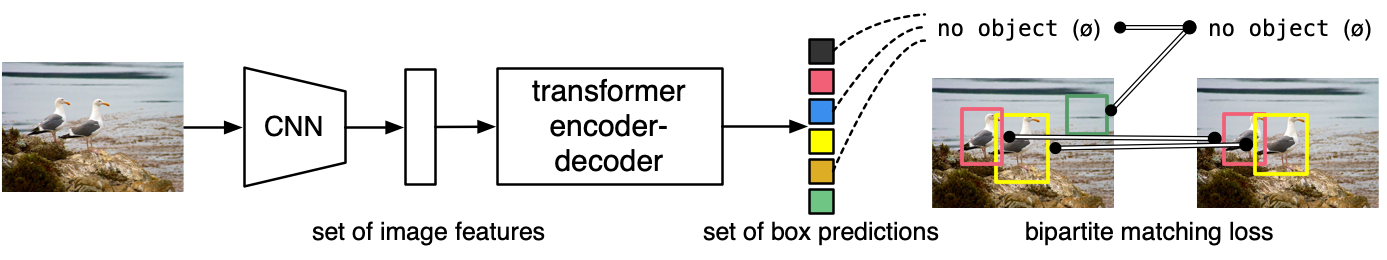

A DEtection TRansformer (DETR) model with a ResNet-50 backbone ([facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50)), fine-tuned on a custom dataset of photogrammetry calibration spheres.

## Model Usage

### Object Detection (using `transformers`)

```python
from transformers import AutoImageProcessor, AutoModelForObjectDetection
from huggingface_hub import hf_hub_download
from PIL import Image
import torch

# download an example image
img_path = hf_hub_download(repo_id="1aurent/REVA-QCAV", filename="examples/chevaux.jpg")
img = Image.open(img_path)

# preprocess the image with the model's image processor
image_processor = AutoImageProcessor.from_pretrained("1aurent/REVA-QCAV")
data = image_processor(img, return_tensors="pt")

# run the model (no gradients are needed for inference)
model = AutoModelForObjectDetection.from_pretrained("1aurent/REVA-QCAV")
with torch.no_grad():
    output = model(**data)

# post-process: keep detections scoring above 0.9 and rescale the boxes
# to the original image size, given as (height, width)
target_sizes = torch.tensor([img.height, img.width]).unsqueeze(0)
output_processed = image_processor.post_process_object_detection(output, threshold=0.9, target_sizes=target_sizes)
```
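`output_processed` is a list with one dict per input image, holding `scores`, `labels` and `boxes` (absolute `xmin, ymin, xmax, ymax` pixel coordinates). As a minimal sketch, the hypothetical helper `summarize_detections` below flattens one such dict into plain Python records sorted by confidence. It assumes only those three keys; label names come from whatever mapping you pass in (for this model, presumably `model.config.id2label`), and the `"sphere"` name used when testing it is purely illustrative.

```python
from typing import Any, Dict, List, Mapping


def _to_list(values: Any) -> List[Any]:
    # torch.Tensor and numpy.ndarray both provide .tolist(); plain sequences are copied as-is.
    return values.tolist() if hasattr(values, "tolist") else list(values)


def summarize_detections(result: Mapping[str, Any], id2label: Mapping[int, str]) -> List[Dict[str, Any]]:
    """Hypothetical helper: turn one post-processed DETR result into plain records.

    Assumes `result` has "scores", "labels" and "boxes" entries, as returned
    per image by `post_process_object_detection`.
    """
    records = []
    for score, label, box in zip(_to_list(result["scores"]), _to_list(result["labels"]), _to_list(result["boxes"])):
        records.append({
            # fall back to the numeric id when the mapping has no name for it
            "label": id2label.get(int(label), str(label)),
            "score": float(score),
            "box": [float(v) for v in box],  # xmin, ymin, xmax, ymax in pixels
        })
    # highest-confidence detections first
    records.sort(key=lambda r: r["score"], reverse=True)
    return records
```

With the variables from the example above, this would be called as `summarize_detections(output_processed[0], model.config.id2label)`.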

### Object Detection (using `onnxruntime`)

```python
from transformers.models.detr.modeling_detr import DetrObjectDetectionOutput
from transformers import AutoImageProcessor
from huggingface_hub import hf_hub_download
import onnxruntime as ort
from PIL import Image
import torch

# download the ONNX model and start an inference session on the CPU
onnx_path = hf_hub_download(repo_id="1aurent/REVA-QCAV", filename="model.onnx")
session = ort.InferenceSession(onnx_path, providers=["CPUExecutionProvider"])

# download an example image
img_path = hf_hub_download(repo_id="1aurent/REVA-QCAV", filename="examples/chevaux.jpg")
img = Image.open(img_path)

# preprocess the image with the model's image processor (NumPy arrays for onnxruntime)
image_processor = AutoImageProcessor.from_pretrained("1aurent/REVA-QCAV")
data = image_processor(img, return_tensors="np").data

# get logits and bounding box predictions from the ONNX session
logits, pred_boxes = session.run(
    output_names=["logits", "pred_boxes"],
    input_feed=data,
)

# wrap the outputs in a DetrObjectDetectionOutput so the image processor can post-process them
output = DetrObjectDetectionOutput(
    logits=torch.tensor(logits),
    pred_boxes=torch.tensor(pred_boxes),
)

# post-process: keep detections scoring above 0.9 and rescale the boxes
# to the original image size, given as (height, width)
target_sizes = torch.tensor([img.height, img.width]).unsqueeze(0)
output_processed = image_processor.post_process_object_detection(output, threshold=0.9, target_sizes=target_sizes)
```
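The ONNX example still imports `torch`, only to reuse `post_process_object_detection`. If you would rather avoid that dependency, the post-processing can be reproduced in NumPy. The sketch below is our own reimplementation, not part of `transformers`, and `detr_postprocess` is a hypothetical name. It assumes the standard DETR output conventions: `logits` of shape `(batch, num_queries, num_classes + 1)` whose last class means "no object", and `pred_boxes` as normalized `(cx, cy, w, h)`. It applies a softmax, drops the no-object class, converts boxes to corner format, scales them by the image width and height, and keeps scores strictly above the threshold.

```python
import numpy as np


def _softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # subtract the max before exponentiating for numerical stability
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def detr_postprocess(logits, pred_boxes, target_sizes, threshold=0.9):
    """Hypothetical NumPy sketch of DETR object-detection post-processing.

    logits:       (batch, num_queries, num_classes + 1), last class = "no object"
    pred_boxes:   (batch, num_queries, 4), normalized (cx, cy, w, h)
    target_sizes: (batch, 2), original image sizes as (height, width)
    """
    logits = np.asarray(logits, dtype=np.float64)
    pred_boxes = np.asarray(pred_boxes, dtype=np.float64)
    target_sizes = np.asarray(target_sizes, dtype=np.float64)

    # class probabilities without the "no object" column
    probs = _softmax(logits, axis=-1)[..., :-1]
    scores = probs.max(axis=-1)
    labels = probs.argmax(axis=-1)

    # (cx, cy, w, h) -> (xmin, ymin, xmax, ymax), still normalized
    cx, cy, w, h = np.moveaxis(pred_boxes, -1, 0)
    boxes = np.stack([cx - 0.5 * w, cy - 0.5 * h, cx + 0.5 * w, cy + 0.5 * h], axis=-1)

    # scale x by image width and y by image height
    heights, widths = target_sizes[:, 0], target_sizes[:, 1]
    scale = np.stack([widths, heights, widths, heights], axis=-1)[:, None, :]
    boxes = boxes * scale

    results = []
    for s, l, b in zip(scores, labels, boxes):
        keep = s > threshold
        results.append({"scores": s[keep], "labels": l[keep], "boxes": b[keep]})
    return results
```

With the ONNX outputs from the example above, this would be called as `detr_postprocess(logits, pred_boxes, np.array([[img.height, img.width]]))`, with no `torch` import needed.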

## Citation

```bibtex
@article{reva-qcav,
  author = {Laurent Fainsin and Jean Mélou and Lilian Calvet and Antoine Laurent and Axel Carlier and Jean-Denis Durou},
  title = {Neural sphere detection in images for lighting calibration},
  journal = {QCAV},
  year = {2023},
  url = {https://hal.science/hal-04160733}
}
```
